Did you know that Playwright lets you block certain resource types, such as images, to speed up your scraping or testing? And that's just one of its benefits.

In this article, you'll learn how to block different resources in Playwright and ways to measure the performance boost.

Let's get started!

Prerequisites

Before going further, you'll need to meet some prerequisites:

You must have Python 3 on your device. Note that some systems have it pre-installed. After that, install Playwright and the browser binaries for Chromium, Firefox, and WebKit.

pip install playwright

playwright install

Intro to Playwright

Playwright is a Python library that automates Chromium, Firefox, and WebKit browsers with a single API. It allows us to surf the web with a headless browser programmatically.

Playwright is also available for Node.js, and everything shown below can be done with a similar syntax. Check the docs for more details.

Here's how to start a browser (Chromium in this case) in a few lines, navigate to a page, and get its title!

from playwright.sync_api import sync_playwright

with sync_playwright() as p:

    browser = p.chromium.launch()

    page = browser.new_page()

    page.goto("https://www.zenrows.com/")

    print(page.title())

    # Web Scraping API & Data Extraction - ZenRows

    page.context.close()

    browser.close()

Logging Network Events

Subscribe to events such as request or response and log their content to see what's happening. Since we didn't give Playwright other instructions, it'll load the entire page. This includes HTML, CSS, executing JavaScript, getting images, etc.

Now add these two lines before requesting the page:

page.on("request", lambda request: print(

    ">>", request.method, request.url,

    request.resource_type))

page.on("response", lambda response: print(

    "<<", response.status, response.url))

page.goto("https://www.zenrows.com/")

# >> GET https://www.zenrows.com/ document

# << 200 https://www.zenrows.com/

# >> GET https://cdn.zenrows.com/images/home/header.png image

# << 200 https://cdn.zenrows.com/images/home/header.png

# ... and many more

The entire output is almost 50 lines long, with 24 resource requests. We probably won't need all this for our scraping needs, right?
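To get an overview without reading every log line, one option is to tally the requests by resource type. The following is a minimal sketch: `make_request_counter` is a hypothetical helper name (not part of Playwright), and it relies only on the `resource_type` attribute already used above.

```python
from collections import Counter


def make_request_counter():
    """Return a (counts, handler) pair; the handler tallies resource types."""
    counts = Counter()

    def on_request(request):
        # request.resource_type is a string such as "document" or "image"
        counts[request.resource_type] += 1

    return counts, on_request


# Hypothetical usage inside the script above, before page.goto(...):
#   counts, on_request = make_request_counter()
#   page.on("request", on_request)
#   page.goto("https://www.zenrows.com/")
#   print(counts.most_common())
```

The handler only reads from the request, so it can be registered alongside the logging lambdas without interfering with them.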

Let's see how to block some of these and save time and bandwidth!

Blocking Resources

Why load resources and content we won't use? Learn how to avoid unnecessary data and network requests with the following techniques:

Block by Glob Pattern

The page object also exposes a route method that runs a handler for each request matching a given URL or pattern. Say you don't want SVGs to load. Using a pattern like "**/*.svg" will match requests ending with that extension. As for the handler, we need no logic for the moment, only to abort the request. For that, we'll use a lambda and the route param's abort method.

page.route("**/*.svg", lambda route: route.abort())

page.goto("https://www.zenrows.com/")

Note: according to the official documentation, patterns like "**/*.{png,jpg,jpeg}" should work, but we found otherwise. Anyway, it's doable with the next blocking strategy.

Block by Regex

If you're into regex, here's a method you can use. In Python, you must pass a compiled pattern (re.compile), because a plain string is treated as a glob. Regexes are tricky, but they offer a ton of flexibility.

We'll use this approach to block three image extensions. Here's how it works:

import re

# ...

page.route(re.compile(r"\.(jpg|png|svg)$"),

    lambda route: route.abort())

page.goto("https://www.zenrows.com/")

There are 23 requests and only 15 responses. We just saved 8 images from being downloaded!
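Before wiring a pattern into page.route, it can help to try it on a few URLs with plain `re`. The sketch below uses made-up URLs (not taken from the real page) and assumes the regex is tested against the full request URL. It illustrates one limitation of the pattern above: because of the `$` anchor, an image URL with a query string such as `?v=2` is not matched. The second pattern is only one possible variant, not something prescribed by Playwright.

```python
import re

# Pattern from the example above
image_pattern = re.compile(r"\.(jpg|png|svg)$")
# Possible variant: also accepts "jpeg" and an optional query string
image_pattern_with_query = re.compile(r"\.(jpe?g|png|svg)(\?.*)?$")

# Made-up URLs, for illustration only
urls = [
    "https://example.com/logo.svg",
    "https://example.com/photo.jpg",
    "https://example.com/photo.png?v=2",
    "https://example.com/app.js",
]

for url in urls:
    print(
        url,
        bool(image_pattern.search(url)),
        bool(image_pattern_with_query.search(url)),
    )
```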

Block by Resource Type

You now might be wondering the following:

What happens if a "jpeg" extension is used instead of "jpg"? Or avif, gif, webp? Should we maintain an updated list?

Luckily, the route param passed to the lambda above gives access to the original request (route.request) and its resource type (route.request.resource_type). One of those types is image. Perfect! The whole list of resource types is available in the documentation.

We'll now match every request ("**/*") and add conditional logic to the lambda function. In case it's an image, abort the request. Else, continue with it as usual.

page.route("**/*", lambda route: route.abort()

    if route.request.resource_type == "image"

    else route.continue_()

)

page.goto("https://www.zenrows.com/")

Take into consideration that some trackers use images.

Function Handler

Instead of using lambdas, we can define functions for the handlers. That comes in handy when we need to reuse a handler or when its logic grows past a single conditional.

Suppose we want to block aggressively now. The output from the previous runs shows the resource type of each request. We'll add the types we don't need to a list and then check whether each request's type is in it.

excluded_resource_types = ["stylesheet", "script", "image", "font"]

def block_aggressively(route):

    if route.request.resource_type in excluded_resource_types:

        route.abort()

    else:

        route.continue_()

# ...

page.route("**/*", block_aggressively)

page.goto("https://www.zenrows.com/")

We are entirely in control now, and the versatility is absolute. From route.request, the original URL, headers, and other details are available.
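When several scripts need different block lists, the handler can be generated instead of hard-coded. This is a small sketch under that assumption: `make_blocker` is a hypothetical helper, and it uses only the `route.request.resource_type`, `route.abort()`, and `route.continue_()` calls shown above.

```python
def make_blocker(excluded_resource_types):
    """Build a route handler that aborts requests of the given types."""
    excluded = frozenset(excluded_resource_types)

    def handler(route):
        if route.request.resource_type in excluded:
            route.abort()
        else:
            route.continue_()

    return handler


# Hypothetical usage with the page object from the earlier script:
#   page.route("**/*", make_blocker(["image", "font"]))
#   page.goto("https://www.zenrows.com/")
```

Keeping the list as an argument makes it easy to try a mild and an aggressive configuration side by side when measuring.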

We can be even stricter and block everything that isn't of the document type. That will effectively prevent anything but the initial HTML from being loaded.

def block_aggressively(route):

    if route.request.resource_type != "document":

        route.abort()

    else:

        route.continue_()

There is a single response now! We got the HTML without any other resource being downloaded. We surely saved a lot of time and bandwidth, right? But... how much exactly?

Measuring Performance Boost

We can claim an improvement only if we can back it up with evidence. Let's measure the differences! We'll use three approaches: HAR files, the browser's Performance API, and a CDP session.

Note that simply timing a script that visits several URLs will also do. Spoiler: we did that for 10 URLs. The result is 1.3 seconds with blocking vs. 8.4 without.
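A timing script of that kind could look roughly like the sketch below. It is not the script used for the numbers above; `time_navigations` is a hypothetical helper that only wraps `page.goto` with `time.perf_counter`.

```python
import time


def time_navigations(page, urls):
    """Return the total seconds spent in page.goto() across all URLs."""
    start = time.perf_counter()
    for url in urls:
        page.goto(url)
    return time.perf_counter() - start


# Hypothetical usage: one plain page and one page with a blocking route.
# Each browser.new_page() call gets its own context, so caches are not shared.
#   plain_page = browser.new_page()
#   blocked_page = browser.new_page()
#   blocked_page.route("**/*", block_aggressively)
#   print(time_navigations(plain_page, urls))
#   print(time_navigations(blocked_page, urls))
```

Network conditions vary between runs, so repeating the measurement a few times gives a more trustworthy comparison.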

HAR Files

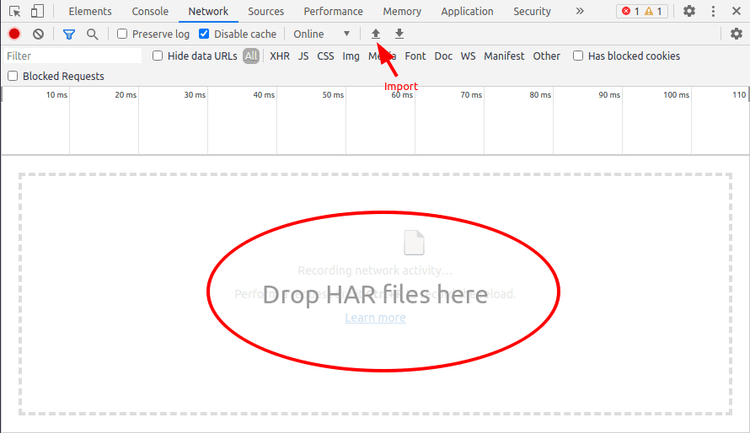

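For those of you used to checking the DevTools' Network tab, we have good news! Playwright allows HAR recording through an extra parameter, record_har_path, in the new_page method. As easy as that. Note that the HAR file is only written when the context is closed, so keep the page.context.close() call at the end of the script.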

page = browser.new_page(record_har_path="playwright_test.har")

page.goto("https://www.zenrows.com/")

There are some HAR visualizers out there, but the easiest way is to use Chrome DevTools. Open the "Network" tab and click on the import button or drag and drop the HAR file.

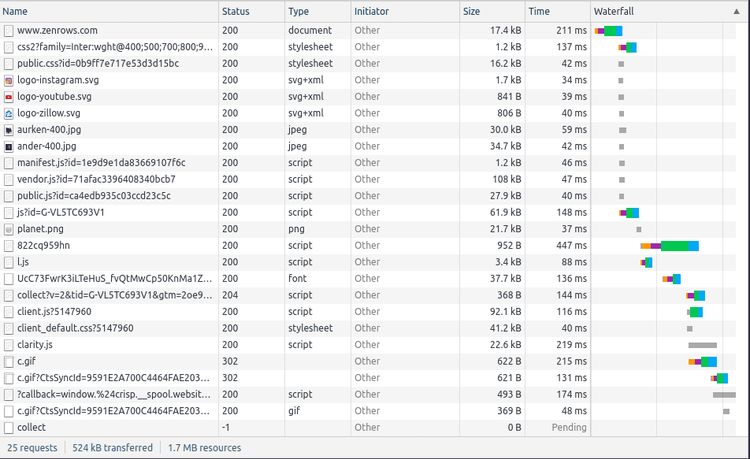

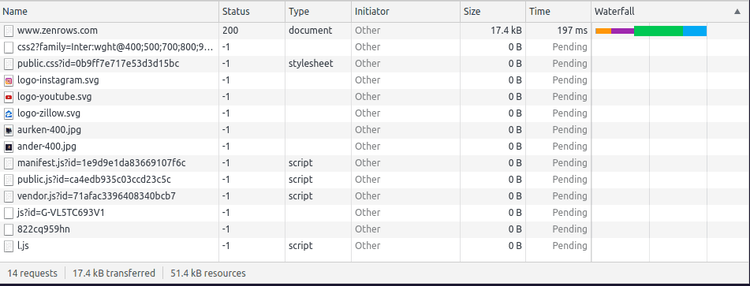

Check time! Let's see the comparison between the two HAR files. The first one displays results without blocking (regular navigation), while the second shows blocking everything except the initial document.

Almost every resource has a "-1" Status and "Pending" Time on the blocking side. That's DevTools' way of telling us that those were blocked and, thus, not downloaded. We can clearly see on the bottom left that we performed fewer requests, and the transferred data amount is a fraction of the original! From 524 kB to 17.4 kB, that's a cut of almost 97%.
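A HAR file is plain JSON, so the comparison can also be scripted. Here is a rough sketch, assuming the standard HAR layout (`log.entries[].response.bodySize`, with -1 meaning unknown). `summarize_har` is a hypothetical helper and the sample data is made up; the sizes it reports are body sizes, which may not match the "transferred" figure DevTools shows.

```python
import json


def summarize_har(har):
    """Count entries and sum known response body sizes in a parsed HAR dict."""
    entries = har["log"]["entries"]
    # HAR uses -1 for "size unknown", so those entries are left out of the sum
    body_bytes = sum(
        entry["response"]["bodySize"]
        for entry in entries
        if entry["response"].get("bodySize", -1) >= 0
    )
    return {"requests": len(entries), "body_bytes": body_bytes}


# Made-up two-entry HAR; a real one would be loaded from playwright_test.har
sample_har = json.loads("""
{"log": {"entries": [
  {"request": {"url": "https://example.com/"},
   "response": {"status": 200, "bodySize": 1200}},
  {"request": {"url": "https://example.com/a.png"},
   "response": {"status": -1, "bodySize": -1}}
]}}
""")

print(summarize_har(sample_har))
```

Running it on both recordings gives two small dictionaries that are easy to compare or log.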

Browser's Performance API

Browsers offer an interface that helps check the performance for various functions, e.g., timing. Playwright can evaluate JavaScript, so we'll use that to print those results.

The output is a JSON object with a lot of timestamps. The most straightforward check is the difference between navigationStart and loadEventEnd. When blocking, it should be under half a second (e.g., 346 ms); for regular navigation, it goes above a second or even two (e.g., 1363 ms).

page.goto("https://www.zenrows.com/")

print(page.evaluate("JSON.stringify(window.performance)"))

# {"timing":{"connectStart":1632902378272,"navigationStart":1632902378244, ...

As you can see, blocking can save you a second, and even more for slower sites. The less you download, the faster you can scrape!
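The subtraction described above can be done in Python on the string returned by page.evaluate. A minimal sketch follows; `load_time_ms` is a hypothetical helper, and the sample reuses the navigationStart from the output above with a made-up loadEventEnd.

```python
import json


def load_time_ms(performance_json):
    """Milliseconds between navigationStart and loadEventEnd."""
    timing = json.loads(performance_json)["timing"]
    return timing["loadEventEnd"] - timing["navigationStart"]


# navigationStart comes from the sample output above;
# loadEventEnd is a made-up value chosen for illustration.
sample = (
    '{"timing": {"navigationStart": 1632902378244, '
    '"loadEventEnd": 1632902378590}}'
)
print(load_time_ms(sample))

# Hypothetical usage with a real page:
#   load_time_ms(page.evaluate("JSON.stringify(window.performance)"))
```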

CDP Session

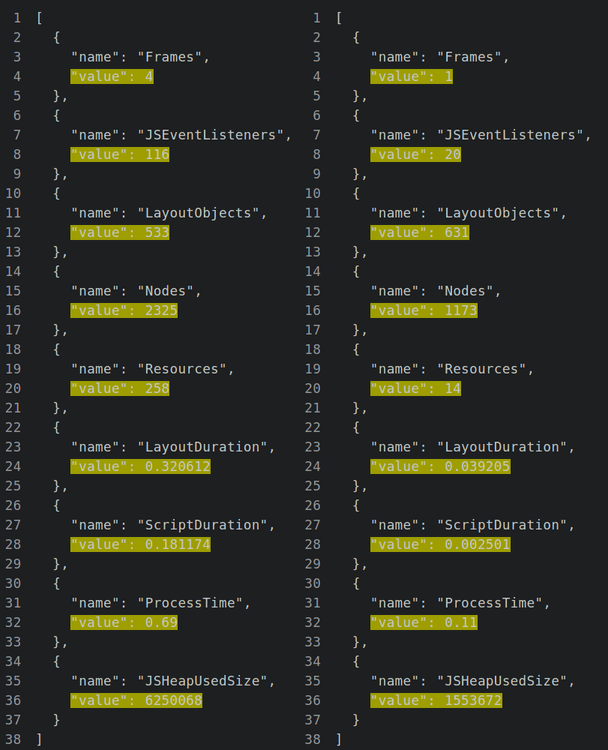

Going a step further, we can connect directly to the Chrome DevTools Protocol (CDP). Playwright creates a CDP session for us to extract, say, performance metrics. Note that CDP sessions are only available in Chromium-based browsers.

We have to create a client from the page context and start communication with CDP. In this case, we'll enable "Performance" before visiting the page and get the metrics after it.

The output is a JSON-like structure with very interesting values, e.g., nodes, process time, JS Heap used, etc.

client = page.context.new_cdp_session(page)

client.send("Performance.enable")

page.goto("https://www.zenrows.com/")

print(client.send("Performance.getMetrics"))
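The metrics arrive as a list of name/value pairs, which is awkward to query. The sketch below flattens that list into a dictionary; `metrics_to_dict` is a hypothetical helper, and the sample values are made up, only mirroring the shape of a Performance.getMetrics result.

```python
def metrics_to_dict(result):
    """Flatten a Performance.getMetrics result into {name: value}."""
    return {metric["name"]: metric["value"] for metric in result["metrics"]}


# Made-up values in the shape CDP returns; real numbers will differ
sample_result = {
    "metrics": [
        {"name": "Nodes", "value": 1200},
        {"name": "ScriptDuration", "value": 0.25},
        {"name": "JSHeapUsedSize", "value": 8000000},
    ]
}

metrics = metrics_to_dict(sample_result)
print(metrics["Nodes"], metrics["JSHeapUsedSize"])

# Hypothetical usage with the client above:
#   metrics = metrics_to_dict(client.send("Performance.getMetrics"))
```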

Conclusion

Here's a quick recap of what you need to remember:

- Load only needed resources.

- Save time and bandwidth when possible.

- Measure your efforts and performance before scaling up.

Scraping is a process with multiple steps, some more challenging than others (think rotating proxies). All of these add processing time, and some services even charge per bandwidth. You can achieve the same results while saving resources. For example, JS content is unnecessary if you're only interested in the initial static data.

To save time and money, consider ZenRows. This is an affordable scraping API that provides unmatched data extraction speed and accuracy while getting around any anti-bot protection systems. Try it for free today!
