People and companies often use web crawling tools to easily extract data from different sources since they're fast, effective and can save you from a lot of stress compared to other methods.

So which one should you use? We researched and tested many free and paid tools available on the web, then came up with the 20 best web crawling tools and software to use:

| Tool | Best for | Technical knowledge | Ease of use | High crawling speed | Price |

|---|---|---|---|---|---|

| ZenRows | Developers | Basic coding skills | ✅ | ✅ | 14-day free trial (no credit card required), then plans start as low as $49 per month |

| HTTrack | Copying websites | Basic coding skills | - | ✅ | Free web crawling tool |

| ParseHub | Scheduled browsing | No coding knowledge | - | - | Free version available. Standard plans start at $189 per month |

| Scrapy | Web scraping using a free library | Basic coding skills | - | ✅ | Free web crawling tool |

| Octoparse | Non-coders to scrape data | No coding knowledge | ✅ | ✅ | Free version. Paid plans start at $89 per month |

| Import.io | Pricing analysts | Basic coding skills | - | ✅ | 14 days of trial. Standard plans from $299 per month |

| Webz.io | Dark web monitoring | Basic coding skills | ✅ | ✅ | Free version. Custom prices depend on resource requirements |

| Dexi.io | Analyzing real-time data in e-commerce | No coding knowledge | ✅ | ✅ | Free basic plan. Premium plans start at $119 per month |

| Zyte | Programmers who need less basic features | Proficient coding skills | ✅ | ✅ | 14 days of trial. Paid plans from $29 a month |

| WebHarvy | SEO professionals | No coding knowledge | ✅ | - | Free version. Paid plans start from $139 per month |

| ScraperAPI | Testing alternative crawling APIs | Basic coding skill | ✅ | - | Seven-day free trial. Standard plans start as low as $49 per month |

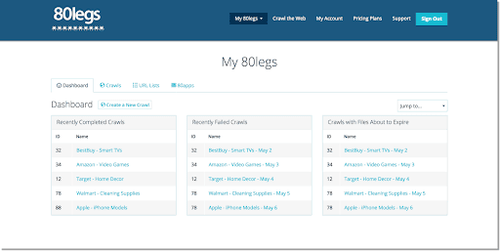

| 80legs | Getting data quickly | Basic coding skills | ✅ | ✅ | Free tier. Pro plans as low as $29 per month |

| UiPath | All sizes of teams | Basic coding skills | ✅ | ✅ | 60 days of trial. Plans from $420 per month |

| Apache Nutch | Writing scalable web crawlers | Proficient coding skills | - | ✅ | Free web crawling tool |

| Outwit Hub | Small projects | No coding knowledge | ✅ | ✅ | Free version available. Paid plan starts at $110/month |

| Cyotek WebCopy | Users with a tight budget | No coding knowledge. No programming skills required | ✅ | - | Free web crawling tool |

| WebSPHINX | Browsing offline | Basic coding skill | ✅ | - | Free web crawling tool |

| Helium Scraper | Fast extraction | Proficient coding skills | - | ✅ | Ten days of trial. Paid plans cost $99 per month and beyond |

| Mozenda | Multi-threaded extraction | No coding knowledge | ✅ | - | 30 days of trial, then the quote is upon request. |

| Apify | Integrating with many systems | Basic coding skills | - | - | Lifetime free basic plan. Pro plans at $49 per month |

Let's get into the details and discuss these web crawling tools as well as their pros and cons. But before that...

What Is Web Crawling?

Web crawling is the process of using software or automated scripts to extract data from different web pages. These scripts are known as web crawlers, spiders or web scraping bots.
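To make the definition concrete, here is a minimal sketch of what a crawler does: fetch a page, extract its links, and queue the ones it hasn't seen yet. It uses only the Python standard library. The `crawl` function, the `LinkExtractor` class, and the in-memory `FAKE_SITE` are illustrative assumptions, not part of any tool on this list; in a real project you would replace the `fetch` argument with a function that downloads pages.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=10):
    """Breadth-first crawl: fetch a page, extract its links, queue unseen ones."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page missing or failed to download
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# Hypothetical in-memory "website" so the sketch runs without network access.
FAKE_SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/">Home</a>',
    "https://example.com/b": '<a href="/missing">Broken link</a>',
}

pages = crawl("https://example.com/", FAKE_SITE.get)
print(pages)
```

The `seen` set is what keeps the crawler from looping forever on pages that link back to each other, and `max_pages` caps how far it goes.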

Why Use Data Extraction Tools?

Using data extraction software is essential for web crawling projects since, compared to manual scraping, it's a lot faster, more accurate, and more efficient. Data extraction tools can help with managing complex data streams.

What Are the Types of Web Crawling Tools?

The types of web crawling tools that are commonly used are in-house, commercial, and open-source.

- In-house web crawling tools are built internally by businesses for their own crawling tasks. Googlebot, which Google uses to crawl web pages, is one example.

- Commercial crawling tools are paid products sold by vendors, like ZenRows.

- Open-source crawling tools, like Apache Nutch, allow anybody to use and customize them as necessary.

Before buying a web crawling tool, you must first understand what to look for or how to choose the best option for your needs. Now that we have the basics, let's talk about the best ones to use!

20 Best Web Crawling Tools for Smooth Data Extraction

1. ZenRows

Best for developers.

ZenRows is the best web crawling tool to extract data from tons of websites without getting blocked. It's easy to use and can bypass anti-bots and CAPTCHAs, making the process fast and smooth. Some of its features include rotating proxies, headless browsers, and geotargeting. You can get started with ZenRows for free and get 1000 API credits to kickstart your crawling project. The plans start as low as $49 per month.

👍 Pros:

- Easy to use.

- Works with Python, NodeJS, C#, PHP, Java, Ruby, and any other language that can make HTTP requests.

- It can bypass anti-bots and CAPTCHAs while crawling.

- You can perform concurrent requests.

- Up to 99.9% uptime guarantee.

- It has large proxy pools and supports geotargeting.

- It supports HTTP and HTTPS protocols.

- Also tested for large-scale web scraping without getting blocked.

👎 Cons:

- It doesn't offer extensions for proxy browsers (the proxy management is done by ZenRows using its smart mode).
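Rotating proxies, mentioned among the features above, simply means sending each request through a different IP address. The sketch below shows the general idea with a round-robin pool. It's not how ZenRows implements rotation internally; `ProxyRotator` and the proxy addresses are hypothetical placeholders.

```python
from itertools import cycle


class ProxyRotator:
    """Hands out proxies in round-robin order and skips ones marked as blocked."""

    def __init__(self, proxies):
        self._proxies = list(proxies)
        self._blocked = set()
        self._pool = cycle(self._proxies)

    def mark_blocked(self, proxy):
        """Remember that a target site has blocked this proxy."""
        self._blocked.add(proxy)

    def next_proxy(self):
        # Try each proxy at most once per call so we never loop forever.
        for _ in range(len(self._proxies)):
            proxy = next(self._pool)
            if proxy not in self._blocked:
                return proxy
        raise RuntimeError("every proxy in the pool is blocked")


# Placeholder addresses from the documentation-only 203.0.113.0/24 range.
rotator = ProxyRotator([
    "http://203.0.113.1:8080",
    "http://203.0.113.2:8080",
    "http://203.0.113.3:8080",
])

first = rotator.next_proxy()
rotator.mark_blocked("http://203.0.113.2:8080")
second = rotator.next_proxy()  # skips the blocked proxy
third = rotator.next_proxy()   # wraps around to the start of the pool
print(first, second, third)
```

A managed service handles this pool for you, which is the main reason to pay for one instead of maintaining your own proxy list.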

2. HTTrack

Best for copying websites.

HTTrack is a free, open-source web crawler that lets you download a website to your PC. It gives you access to all the downloaded files, such as photos, in local folders. HTTrack also offers proxy support to increase speed.

👍 Pros:

- This website crawling tool has a fast download speed.

- Multilingual Windows and Linux/Unix interface.

👎 Cons:

- Only for experienced programmers.

- It has no anti-bot bypass features, so you'll need another web crawling tool for protected sites.

3. ParseHub

Best for scheduled crawling.

ParseHub is web crawling software capable of scraping dynamic web pages. It uses machine learning to identify the trickiest web pages and create output files with the proper data formats. It's downloadable and supports Mac, Windows and Linux. ParseHub has a free basic plan, and its paid plans start at $189 per month.

👍 Pros:

- The ParseHub crawling tool can output scraped data into major formats.

- Capable of analyzing, assessing, and transforming web content into useful data.

- Support for regular expressions, IP rotation, scheduled crawling, API, and webhooks.

- No coding skills are required to use this site crawling tool.

👎 Cons:

- High-volume scraping can slow down ParseHub.

- The user interface of this web crawling tool makes it difficult to use.

4. Scrapy

Best for web scraping using a free library.

Scrapy is an open-source web crawling tool that runs on Python. The library provides a pre-built framework for programmers to modify a web crawler and extract data from the web at a large scale. It's a free Python crawling library and runs smoothly on Linux, Windows, and Mac.

👍 Pros:

- It's a free web crawling tool.

- It uses little CPU and memory space.

- Because Scrapy is asynchronous, it can load many pages concurrently.

- It can do large-scale web scraping.

👎 Cons:

- Scrapy can get detected by anti-bots during web crawling.

- It can't scrape dynamic (JavaScript-rendered) web pages out of the box.

You might want to check out a Scrapy alternative.
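To see why an asynchronous design lets a crawler load many pages concurrently, consider the sketch below. It isn't Scrapy code (Scrapy has its own engine built on Twisted); it uses Python's standard `asyncio` as a stand-in for the same idea. The `fake_fetch` function simulates a network request with a short sleep, and all names here are assumptions for illustration.

```python
import asyncio
import time


async def fake_fetch(url, delay=0.1):
    """Stand-in for a network request: waits, then returns a fake body."""
    await asyncio.sleep(delay)
    return f"<html>content of {url}</html>"


async def fetch_all(urls, max_concurrency=5):
    """Fetch every URL concurrently, with a cap on in-flight requests."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        async with semaphore:
            return await fake_fetch(url)

    # gather() returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded(u) for u in urls))


urls = [f"https://example.com/page/{i}" for i in range(5)]
start = time.perf_counter()
bodies = asyncio.run(fetch_all(urls))
elapsed = time.perf_counter() - start
print(f"fetched {len(bodies)} pages in {elapsed:.2f}s")
```

Because the waits overlap, five simulated requests finish in roughly the time of one rather than five times as long. The semaphore is there so a real crawler wouldn't flood the target site.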

5. Octoparse

Best for non-coders to scrape data.

Octoparse is a no-code web crawling tool capable of scraping large amounts of data and turning it into structured spreadsheets with a few clicks. Some of its features include a point-and-click interface to crawl data, automatic IP rotation, and the ability to scrape dynamic sites. This data crawling tool has a free version for small projects, while standard packages start from $89 a month.

👍 Pros:

- Easy to use.

- Beginner-friendly since no coding is required.

- Like ZenRows, Octoparse is capable of crawling dynamic web pages.

- It has automatic IP rotation for anti-bot bypass.

- Offers anonymous data crawling.

👎 Cons:

- No Chrome extension.

- Lacks a feature for extracting PDF data.

6. Import.io

Best for pricing analysts.

Import.io is a website crawling software that lets you create your own datasets without writing a single line of code. It can scan thousands of web pages and create 1,000+ APIs based on your requirements. Import.io offers daily or monthly reports that reveal the products that your competitors have added or withdrawn, pricing data, including modifications, and stock levels. They have a free trial available for 14 days, with monthly prices starting from $299.

👍 Pros:

- Interaction with web forms/login.

- Automated web workflows and interaction.

- It supports geolocation, CAPTCHA resolution, and JavaScript rendering.

👎 Cons:

- The UI is confusing.

- It's more expensive than other web crawling tools.

7. Webz.io (formerly Webhose.io)

Best for dark web monitoring.

Webz.io is one of the top content crawling tools in the market. It can turn online data from the open and dark web into structured data feeds suitable for machine consumption. Webz.io offers a free plan with 1000 requests, and you should talk to the sales team about a paid plan.

👍 Pros:

- Easy to use.

- Smooth onboarding process.

- It can be used for real-time web scraping.

👎 Cons:

- No transparent pricing model.

8. Dexi.io

Best for analyzing real-time data in e-commerce.

Dexi.io is a cloud-based tool for crawling e-commerce sites that has a browser-based editor for setting up a web crawler in real time to extract data. The collected data can be saved on cloud services, like Google Drive and Box.net, or exported as CSV or JSON. Dexi.io has a free trial to get started, with premium plans starting from $119 a month.

👍 Pros:

- User-friendly interface.

- Intelligent robots automate the collection of data.

- The crawlers can be built and managed via API.

- Capable of connecting to a large variety of APIs for data integration and extraction.

👎 Cons:

- To use the Dexi.io crawling tool, you must install Dexi's custom browser.

- Complex data crawling projects may fail.

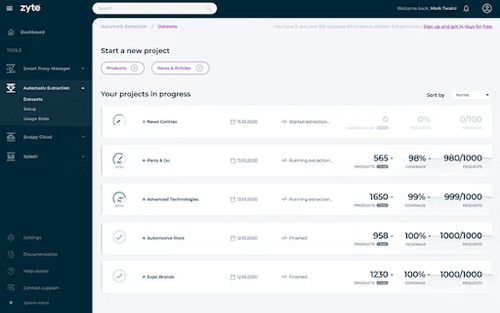

9. Zyte (formerly Scrapinghub)

Best for programmers who need more advanced features.

Zyte is a cloud-based data extraction tool that uses an API to extract data. Some of its features include smart proxy management, headless browser support, residential proxies, and customer support. Zyte's free trial is available for 14 days with monthly prices as low as $29. It also provides a 10% discount on annual plans!

👍 Pros:

- The Zyte crawling tools offer an easy-to-use UI.

- Excellent customer support.

- Automated proxy rotation.

- It supports headless browsers.

- Geolocation is enabled.

👎 Cons:

- Lower-tier Zyte plans are limited in bandwidth.

- Advanced features are available only as add-ons.

You might want to check out a Zyte alternative.

10. WebHarvy

Best for SEO professionals.

WebHarvy is a web crawler that can be used to easily extract data from web pages. This web crawling software enables you to extract HTML, images, text, and URLs. The basic plan costs $99 for a single license, and the highest is $499 for Unlimited Users.

👍 Pros:

- It supports all types of websites.

- Accessing target websites can be done through proxy servers or a VPN.

- No coding skills are required to use this site crawling tool.

👎 Cons:

- Its web crawling speed is slower compared to other data crawling tools.

- Data could be lost after several days of crawling.

- Sometimes it fails while crawling.

11. ScraperAPI

Best for testing alternative crawling APIs.

ScraperAPI is one of the website crawling tools for developers building scrapers. It handles proxies, browsers, and CAPTCHAs, allowing developers to obtain raw HTML from any website with a single API call. It comes with a seven-day trial, and plans start at $49 per month.

👍 Pros:

- Easy to use.

- It has a proxy pool.

- It's capable of bypassing anti-bots.

- Good customization possibilities.

- It has a 99.9% uptime guarantee.

👎 Cons:

- Smaller plans come with many limitations compared to other competitors.

- This web crawling tool can't scrape a dynamic web page.

You might want to check out a ScraperAPI alternative.

12. 80legs

Best for getting data quickly.

80legs is a cloud-based tool for web crawling. It can be used to create custom web crawlers and extract data by providing a URL or specifying the type of data you want. 80legs has a free plan with limitations, and the paid plans start at $29 per month.

👍 Pros:

- The Datafiniti function enables quick data searches.

- Unlimited crawls per month for the free version.

- Apps listed in 80legs help users with limited coding skills analyze extracted web content.

👎 Cons:

- It doesn't support document and pricing extraction.

13. UiPath

Best for all sizes of teams.

UiPath is robotic process automation (RPA) software used by small, medium, and large organizations to build web crawlers. Creating intelligent web agents doesn't require programming, but the .NET hacker inside you will have full access to the data. It has a lifetime free plan, and paid ones start at $420 per month.

👍 Pros:

- Easy to use.

- It has an auto-login feature to run the bots.

👎 Cons:

- It's expensive compared to other crawling tools.

- Crawlers built on UiPath don't work well with unstructured data.

14. Apache Nutch

Best for writing scalable web crawlers.

Apache Nutch is a scalable web crawler framework that supports a wide range of data extraction activities. Although it can be customized for smaller jobs, Nutch excels in batch processing massive amounts of data, making it one of the most popular freeware options for many businesses.

👍 Pros:

- It's a free web crawling tool.

- High crawling accuracy.

- Excellent multi-depth crawling capabilities.

👎 Cons:

- High memory space and CPU usage during crawling.

15. OutWit Hub

Best for small projects.

OutWit Hub is one of the easiest online tools for crawling and lets you find and extract all kinds of data from online sources without writing a single line of code. In addition to the free version, OutWit Hub has a pro version for $59.90 a month.

👍 Pros:

- Easy to use.

- Suitable for large-scale web scraping.

- Automatic query and URL generation with patterns.

- It's capable of crawling both structured and unstructured data.

👎 Cons:

- It can get detected and blocked by anti-bots.

16. Cyotek WebCopy

Best for users with a tight budget.

Cyotek WebCopy is a budget-friendly site crawling tool for extracting and downloading webpage data to your local device. When a website is specified, WebCopy scans and downloads its content. Links on the website that point to resources like style sheets, photos or other pages will immediately be remapped to match the local path. Like HTTrack, the Cyotek WebCopy program is free.
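The link remapping described above can be sketched as follows: each URL is mapped to a path in the local copy, and links are rewritten relative to the page that contains them. This is an illustration of the general technique under simple assumptions, not WebCopy's actual algorithm; `local_path` and `remap_link` are hypothetical helper names.

```python
import posixpath
from urllib.parse import urlparse


def local_path(url):
    """Map a URL to a relative file path inside the downloaded copy."""
    parsed = urlparse(url)
    path = parsed.path
    if not path or path.endswith("/"):
        path += "index.html"  # directory-style URLs become index.html
    return posixpath.join(parsed.netloc, path.lstrip("/"))


def remap_link(page_url, link_url):
    """Rewrite an absolute link so it points to the local copy, relative to the page."""
    page_dir = posixpath.dirname(local_path(page_url))
    return posixpath.relpath(local_path(link_url), start=page_dir)


print(remap_link("https://example.com/blog/post.html",
                 "https://example.com/css/site.css"))
print(remap_link("https://example.com/",
                 "https://example.com/blog/"))
```

With this mapping, a stylesheet link on a blog post becomes a relative path such as `../css/site.css`, so the saved page still finds its resources when opened from disk.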

👍 Pros:

- It's highly configurable.

- It has different setup options.

- No installation is required to use the Cyotek crawling tool.

- The tool can identify linked resources.

👎 Cons:

- Lacks a virtual DOM.

- Not capable of JavaScript parsing.

17. WebSPHINX

Best for browsing offline.

WebSPHINX, an acronym for Website-Specific Processors for HTML Information Extraction, is a free Java web crawling library. It has a Crawler Workbench that lets you configure and control a customizable web crawler.

👍 Pros:

- Quick setup.

- Clear documentation.

- It allows you to save pages to your local disk for offline browsing.

- It can extract JavaScript-rendered content.

👎 Cons:

- Unstable for large-scale web crawling.

18. Helium Scraper

Best for fast extraction.

Helium Scraper is downloadable software for web crawling. It extracts data from websites by running multiple off-screen Chromium web browsers. In active selection mode, you pick two similar example elements, and the tool then automatically finds the other matching elements. It costs as low as $99 for a single license.

👍 Pros:

- It supports multiple export formats.

- Ready-to-use templates for web crawling.

- The interface is easy to interact with.

👎 Cons:

- Support for Windows OS only.

- Only for advanced users.

19. Mozenda

Best for multi-threaded extraction.

Mozenda is cloud-based web crawling software geared mostly toward businesses and enterprises. Some of its products include data harvesting and wrangling. Mozenda has a 30-day trial with 1.5 hours of web data extraction, and the lowest-priced paid package starts at $250 per month.

👍 Pros:

- Easy to use.

- It allows smart data aggregation and multi-threaded extraction.

- Mozenda lets you extract files, like photos and PDFs, from websites.

👎 Cons:

- Expensive compared to other crawling tools.

- Unstable for large-scale crawling.

- This crawling tool will charge by the hour, even for the trial plan.

20. Apify

Best for integrating with many systems.

Apify is a web scraping and automation platform with flexible and ready-to-use tools for web crawling in different industries like e-commerce, marketing, real estate, etc.

In addition to exporting scraped data in machine-readable formats like JSON or CSV, Apify integrates with your existing Zapier or Make workflows or any other web app using API and webhooks. Apify has a lifetime free plan, and its paid plans start from $49 per month.
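As a rough illustration of what exporting scraped data in machine-readable formats involves, the sketch below serializes the same records as JSON and as CSV using only the Python standard library. It doesn't use Apify's API; the records, field names, and helper functions are assumptions for illustration.

```python
import csv
import io
import json

# Hypothetical scraped records; the field names are assumptions for illustration.
records = [
    {"title": "Blue mug", "price": 12.5, "in_stock": True},
    {"title": "Red mug", "price": 11.0, "in_stock": False},
]


def to_json(rows):
    """Serialize rows as a JSON array."""
    return json.dumps(rows, indent=2)


def to_csv(rows):
    """Serialize rows as CSV text with a header taken from the first row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


json_text = to_json(records)
csv_text = to_csv(records)
print(csv_text)
```

JSON keeps the original types (numbers, booleans), while CSV flattens everything to text, which is worth keeping in mind when choosing a format for a downstream app.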

👍 Pros:

- The Apify crawling tool has datacenter proxies for anti-bot bypass.

- Well-structured documentation.

👎 Cons:

- It's difficult to use this web crawling tool without programming knowledge.

- It has firewall issues while crawling.

Conclusion

Using tested tools for web crawling is a way to get the data you care about quickly, effectively, and headache-free. So in this article, we discussed the 20 best web crawling tools to use, and here are our top five from that list:

- ZenRows: Best for developers.

- HTTrack: Best for copying websites.

- ParseHub: Best for scheduled crawling.

- Scrapy: Best for web scraping using a free library.

- Octoparse: Best for non-coders to scrape data.

Frequently Asked Questions

Which Tool Is Best for Web Crawling?

ZenRows API is the best web crawling tool because it can crawl web pages without getting blocked by anti-bots. Some of its features include premium rotating proxies, headless browsing, CAPTCHA bypass and a 99.9% uptime guarantee.

Additionally, ZenRows is compatible with all programming languages and scrapes the web with limitless scale and bandwidth. Try it out for free!

What to Consider While Selecting Crawling Software?

Price, ease of use, scalability, speed, and documentation are important factors you should consider when selecting a crawling tool. Since ZenRows checks all these boxes, it's not surprising it came out as the best tool for web crawling.
