Have you ever tried scraping AJAX websites? Sites full of JavaScript and XHR calls? Had to decipher tons of nested CSS selectors? Or worse, ones that change daily?

Maybe you won't need to do that ever again. Keep reading: XHR scraping might prove to be your ultimate solution!

Prerequisites

First, make sure you have all you need to follow this tutorial:

For the code to work, you need to have Python 3 on your device. Note that some systems have it pre-installed. After that, install Playwright and the browser binaries for Chromium, Firefox, and WebKit.

```bash
pip install playwright
playwright install
```

Intercept Responses

If you read our previous blog post about blocking resources, you know that headless browsers allow request and response inspection.

Let's see how that works:

We'll use Playwright for Python in this demo, but you could also use Playwright for JavaScript, or Puppeteer, if you prefer.

We can quickly inspect all the responses on a page. As shown below, the response parameter contains the status, URL, and content itself. That's what we'll use instead of directly scraping content in the HTML using CSS selectors.

```python
page.on("response", lambda response: print("<<", response.status, response.url))
```
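The one-liner above logs every response, including images, fonts, and stylesheets. A minimal sketch for narrowing the log down to AJAX calls checks `response.request.resource_type`, which Playwright sets to values such as `"xhr"`, `"fetch"`, `"image"`, or `"stylesheet"`. The `make_xhr_logger` helper and its `sink` list are illustrative names, not part of Playwright:

```python
# Resource types Playwright reports for AJAX-style calls.
AJAX_TYPES = {"xhr", "fetch"}


def make_xhr_logger(sink):
    """Return a response handler that records only XHR/fetch responses.

    `sink` is any list-like object; each entry is a (status, url) tuple.
    """

    def handle_response(response):
        # response.request is the Request that produced this response.
        if response.request.resource_type in AJAX_TYPES:
            sink.append((response.status, response.url))
            print("<<", response.status, response.url)

    return handle_response
```

Register it like the lambda: `calls = []` followed by `page.on("response", make_xhr_logger(calls))`, then inspect `calls` after navigation.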

Use Case: Auction.com

Let's dig into this with some examples!

The first one is auction.com. You might need proxies or a VPN to access it, since it isn't available outside the countries where it operates. In any case, scraping from your own IP is risky, since you'll eventually get blocked. Learn how to avoid blocking and save yourself the trouble.

Here's what loading the page with Playwright while logging all the responses looks like:

```python
from playwright.sync_api import sync_playwright

url = "https://www.auction.com/residential/ca/"

with sync_playwright() as p:
    browser = p.chromium.launch()
    page = browser.new_page()

    page.on("response", lambda response: print(
        "<<", response.status, response.url))

    page.goto(url, wait_until="networkidle", timeout=90000)
    print(page.content())

    page.context.close()
    browser.close()
```

auction.com loads an HTML skeleton without the content we're after (house prices or auction dates). The site then loads several resources, such as images, CSS, fonts, and JavaScript. If we wanted to save some bandwidth, we could filter out some of these. For now, we'll focus on the interesting parts.

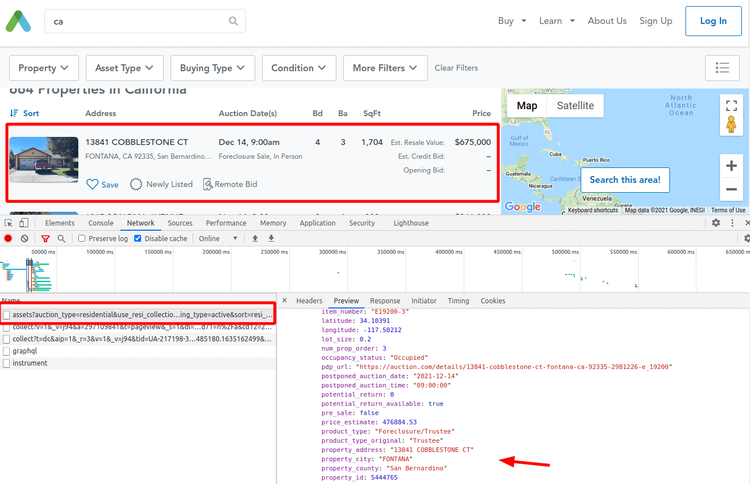

The Network tab shows that almost all the relevant content comes from an XHR call to an assets endpoint. Ignoring the rest, we can target that call by checking whether the response URL contains a specific string: `if "v1/search/assets?" in response.url`.

There is a size and time problem, though. The page loads tracking scripts and a map, which adds up to over a minute of loading time (using proxies) and 130 requests. 😮 We could certainly do better by blocking certain domains and resources.

We managed to go under 20 seconds with only seven loaded resources. Try it for yourself! We'll leave that as an exercise for you. 😉

An excerpt of the responses logged by the first script:

```
<< 407 https://www.auction.com/residential/ca/
<< 200 https://www.auction.com/residential/ca/
<< 200 https://cdn.auction.com/residential/page-assets/styles.d5079a39f6.prod.css
<< 200 https://cdn.auction.com/residential/page-assets/framework.b3b944740c.prod.js
<< 200 https://cdn.cookielaw.org/scripttemplates/otSDKStub.js
<< 200 https://static.hotjar.com/c/hotjar-45084.js?sv=5
<< 200 https://adc-tenbox-prod.imgix.net/resi/propertyImages/no_image_available.v1.jpg
<< 200 https://cdn.mlhdocs.com/rcp_files/auctions/E-19200/photos/thumbnails/2985798-1-G_bigThumb.jpg
# ...
```
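For the blocking exercise mentioned above, one possible starting point is Playwright's `page.route`, whose handler decides for each request whether to call `route.abort()` or `route.continue_()`. This is a sketch, not the setup behind our numbers: which resource types and domains are safe to drop is an assumption, so the lists below (built from hosts in the log) are hypothetical. Check them against the site, because blocking a script the page depends on can stop the XHR call from firing at all.

```python
from urllib.parse import urlsplit

# Hypothetical block lists: adjust after checking what the page actually needs.
BLOCKED_TYPES = {"image", "media", "font", "stylesheet"}
BLOCKED_HOSTS = ("hotjar.com", "cookielaw.org", "imgix.net")


def should_block(resource_type, url):
    """Decide whether a request is unnecessary for getting the XHR data."""
    if resource_type in BLOCKED_TYPES:
        return True
    host = urlsplit(url).hostname or ""
    # Match the domain itself and any of its subdomains.
    return any(host == d or host.endswith("." + d) for d in BLOCKED_HOSTS)


def block_unneeded(route):
    """Route handler for page.route(): abort blocked requests, pass the rest."""
    request = route.request
    if should_block(request.resource_type, request.url):
        route.abort()
    else:
        route.continue_()
```

Register the handler before navigating, with `page.route("**/*", block_unneeded)`, so that it sees every request the page makes.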

We can also change the waiting method. Waiting for `networkidle` isn't ideal here: we noticed that the script sometimes stops altogether before the content loads. To avoid those cases, we'll use `wait_for_selector` instead.

Inspecting the results shows that the wrapper is already present in the skeleton, but each house's content isn't. So we have to wait for one of those elements: `h4[data-elm-id]`.

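```python
from playwright.sync_api import sync_playwright

url = "https://www.auction.com/residential/ca/"

with sync_playwright() as p:
    def handle_response(response):
        # the endpoint we are interested in
        if "v1/search/assets?" in response.url:
            print(response.json()["result"]["assets"]["asset"])

    browser = p.chromium.launch()
    page = browser.new_page()
    page.on("response", handle_response)
    # really long timeout since it gets stuck sometimes
    page.goto(url, timeout=120000)
    page.wait_for_selector("h4[data-elm-id]", timeout=120000)
    page.context.close()
    browser.close()
```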

Here we have the output, with even more info than the interface offers! Everything is clean and nicely formatted 😎

```json
[{
    "item_id": "E192003",
    "global_property_id": 2981226,
    "property_id": 5444765,
    "property_address": "13841 COBBLESTONE CT",
    "property_city": "FONTANA",
    "property_county": "San Bernardino",
    "property_state": "CA",
    "property_zip": "92335",
    "property_type": "SFR",
    "seller_code": "FSH",
    "beds": 4,
    "baths": 3,
    "sqft": 1704,
    "lot_size": 0.2,
    "latitude": 34.10391,
    "longitude": -117.50212,
    ...
```
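As an alternative to waiting for a selector, Playwright's `page.expect_response` can wait for the XHR response itself. The sketch below assumes the JSON shape shown above (`["result"]["assets"]["asset"]`); `fetch_assets`, `summarize`, and the chosen fields are illustrative, and we haven't run it against the live site:

```python
ASSETS_MARKER = "v1/search/assets?"
# Fields to keep from each asset; pick whichever you need.
FIELDS = ("property_address", "property_city", "beds", "baths", "sqft")


def is_assets_response(response):
    """Match a successful response from the assets endpoint."""
    return ASSETS_MARKER in response.url and response.ok


def fetch_assets(page, url, timeout_ms=120_000):
    """Navigate and return the asset list from the first matching response."""
    # expect_response must wrap the action that triggers the request;
    # leaving the block waits until a matching response arrives.
    with page.expect_response(is_assets_response, timeout=timeout_ms) as info:
        page.goto(url, timeout=timeout_ms)
    return info.value.json()["result"]["assets"]["asset"]


def summarize(assets, fields=FIELDS):
    """Keep only the selected fields; missing keys become None."""
    return [{field: asset.get(field) for field in fields} for asset in assets]
```

Waiting on the response rather than on `h4[data-elm-id]` ties the script to the API contract instead of the markup, which fits the idea of relying on endpoints that change less often.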

We could go a step further and use pagination to get the whole list. We'll leave that up to you.
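One possible approach, assuming the captured assets URL carries a paging parameter in its query string, is to rewrite that parameter and replay the call. The parameter name `offset` below is hypothetical; check the real request in DevTools to find the actual one.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_query_param(url, name, value):
    """Return `url` with query parameter `name` set to `value`.

    Note: repeated keys collapse to their last value in this simple version.
    """
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query[name] = str(value)
    return urlunsplit(parts._replace(query=urlencode(query)))


def page_urls(first_url, page_size, total, param="offset"):
    """Build one URL per page; `param` ("offset" here) is a hypothetical name."""
    return [
        with_query_param(first_url, param, start)
        for start in range(0, total, page_size)
    ]
```

Each URL could then be fetched with `page.request.get(u).json()`, which shares cookies with the browser context, instead of loading every page in the browser.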

Use Case: Twitter.com

Here's another case where there's no initial content.

To scrape Twitter, you'll undoubtedly need JavaScript rendering. As in the previous example, you could use CSS selectors once the entire content has loaded. Keep in mind, though, that Twitter's class names are dynamic and change frequently.

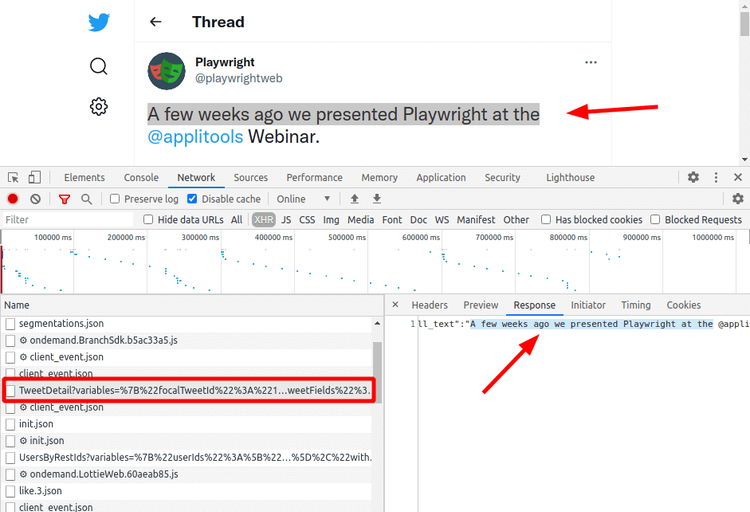

What will most probably remain the same is the API endpoint Twitter uses internally to get the main content: TweetDetail. In cases like this, the easiest path is to check the XHR calls in the DevTools Network tab and look for the content you want in each response. Twitter can make 20 to 30 JSON or XHR requests per page view!
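Checking dozens of responses by hand gets tedious. A small sketch that automates the search: it records every XHR or fetch response whose body contains a string you can see on the page, such as part of the tweet text. `make_finder` and `matches` are illustrative names, and the search string is whatever text you pick:

```python
AJAX_TYPES = {"xhr", "fetch"}


def make_finder(needle, matches):
    """Return a response handler that records URLs whose body contains `needle`."""

    def handle_response(response):
        if response.request.resource_type not in AJAX_TYPES:
            return
        try:
            body = response.text()
        except Exception:
            # Some responses (e.g. redirects) have no readable body; skip them.
            return
        if needle in body:
            matches.append(response.url)

    return handle_response
```

Attach it with `page.on("response", make_finder("some visible text", hits))`, load the page, and print `hits` to see which endpoint is worth intercepting.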

Once we identify the target calls and responses, the process will be similar.

```python
import json

from playwright.sync_api import sync_playwright

url = "https://twitter.com/playwrightweb/status/1396888644019884033"

with sync_playwright() as p:
    def handle_response(response):
        # the endpoint we are interested in
        if "/TweetDetail?" in response.url:
            print(json.dumps(response.json()))

    browser = p.chromium.launch()
    page = browser.new_page()
    page.on("response", handle_response)
    page.goto(url, wait_until="networkidle")
    page.context.close()
    browser.close()
```

The output is a large JSON document (80 KB) with more content than we asked for: the tweet content sits more than ten nested levels deep. The good news is that we can now access favorites, retweets, reply counts, images, dates, etc.
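Rather than hard-coding a path through all those levels, which may shift whenever Twitter changes its internal API, a recursive search can pull out every value stored under a given key. A minimal sketch; key names such as `full_text` or `favorite_count` are assumptions about the payload, so confirm them in DevTools first:

```python
def find_key(data, key):
    """Yield every value stored under `key` anywhere in nested dicts/lists."""
    if isinstance(data, dict):
        for k, v in data.items():
            if k == key:
                yield v
            # Keep searching inside the value too; keys can repeat at depth.
            yield from find_key(v, key)
    elif isinstance(data, list):
        for item in data:
            yield from find_key(item, key)
```

Inside `handle_response`, `list(find_key(response.json(), "full_text"))` would collect every matching value in document order.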

Use Case: NSEindia.com

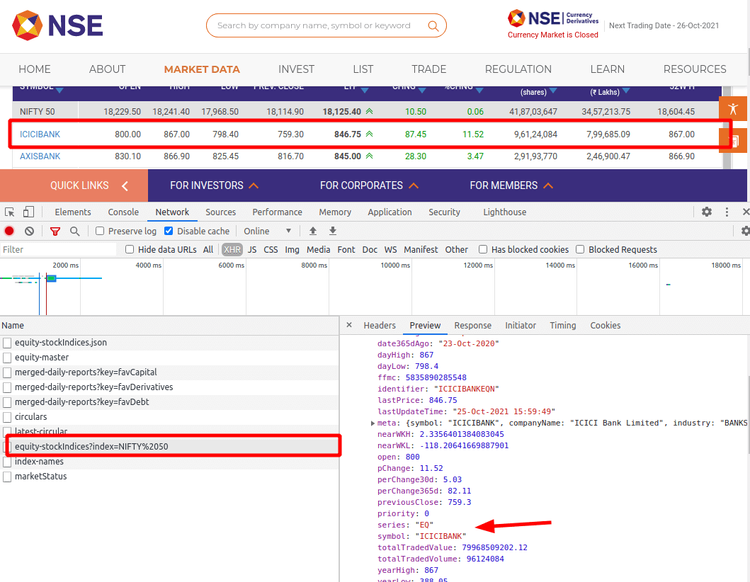

Stock markets are an ever-changing source of important data. Some sites that offer this info, such as the National Stock Exchange of India, start with an empty skeleton. After browsing for a few minutes, we can see that the market data loads via XHR.

Another clue is to check for content in the page source. If there isn't any, that usually means it'll load later, which most commonly requires XHR requests. And we can intercept those!
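That check can also be done from Playwright: the response returned by `page.goto` holds the raw HTML the server sent, while `page.content()` returns the DOM after JavaScript has run. A sketch, where `sample_text` is any value you can see on the rendered page (an index name, for instance) and `loaded_after_render` is an illustrative name:

```python
def loaded_after_render(page, url, sample_text):
    """Return True if `sample_text` is absent from the server HTML but
    present after rendering, which hints that it arrived via XHR/fetch."""
    response = page.goto(url, wait_until="networkidle")
    # goto() can return None (e.g. a same-document navigation); treat as empty.
    raw_html = response.text() if response is not None else ""
    rendered_html = page.content()
    return sample_text not in raw_html and sample_text in rendered_html
```

A `True` result doesn't name the endpoint, but it tells you that intercepting responses is worth a try.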

Since we're parsing a list, we'll loop over it and print only part of the data in a structured way: the symbol and price of each entry.

```python
from playwright.sync_api import sync_playwright

url = "https://www.nseindia.com/market-data/live-equity-market"

with sync_playwright() as p:
    def handle_response(response):
        # the endpoint we are interested in
        if "equity-stockIndices?" in response.url:
            items = response.json()["data"]
            for item in items:
                print(item["symbol"], item["lastPrice"])

    browser = p.chromium.launch()
    page = browser.new_page()
    page.on("response", handle_response)
    page.goto(url, wait_until="networkidle")
    page.context.close()
    browser.close()

# Output:
# NIFTY 50 18125.4
# ICICIBANK 846.75
# AXISBANK 845
# ...
```

As before, this is a simplified example: printing isn't how you'd solve a real-world problem. Instead, each page structure should have its own content extractor and a method to store the results. The system should also handle the crawling part independently.
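One way to structure that, sketched under our own assumptions: map each endpoint marker to an extractor function, and hand the extracted rows to a storage function (JSON Lines here). The names `EXTRACTORS`, `extract_nse`, `store_jsonl`, and `make_handler` are hypothetical; the NSE field names come from the example above.

```python
import json


def extract_nse(payload):
    """Turn an equity-stockIndices payload into symbol/price records."""
    return [
        {"symbol": item["symbol"], "last_price": item["lastPrice"]}
        for item in payload["data"]
    ]


# Endpoint marker -> extractor. Add one entry per page structure.
EXTRACTORS = {
    "equity-stockIndices?": extract_nse,
}


def store_jsonl(rows, path):
    """Append rows to a JSON Lines file, one object per line."""
    with open(path, "a", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row) + "\n")


def make_handler(path, extractors=EXTRACTORS):
    """Build a response handler that routes each response to its extractor."""

    def handle_response(response):
        for marker, extract in extractors.items():
            if marker in response.url:
                store_jsonl(extract(response.json()), path)

    return handle_response
```

Swapping `store_jsonl` for a database writer, or adding a new site, then means touching one function or one dictionary entry, not the browser code.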

Conclusion

Here's a recap of what you need to remember:

- Inspect the page looking for clean data.

- API endpoints change less often than CSS selectors and HTML structure.

- Playwright offers more than just JavaScript rendering.

Even if the extracted data is the same, fail-tolerance and effort in writing your spider are fundamental factors. The less you have to change them manually, the better.

Apart from XHR requests, there are many other ways to scrape data beyond selectors. Not every one of them will work on a given website, but adding them to your tool belt might be of great help.

Did you find the content helpful? Spread the word and share it on Twitter or LinkedIn.