Are you new to web scraping and want to start your first project? JavaScript is one of the best programming languages for web scraping.

In this article, you'll learn how to build a web scraper with JavaScript, from the basics to the advanced concepts.

How Good Is JavaScript for Web Scraping

JavaScript's fast execution speed and support for asynchronous programming make it a popular choice among web scrapers. According to the 2023 Stack Overflow Developer Survey, JavaScript remains the most used programming language, with Python trailing behind it.

Although some developers opt for other languages like Python for their simplicity and the availability of rich web scraping libraries, JavaScript is a good choice due to its ability to interact directly with the DOM.

Popular Node.js web scraping libraries and tools include HTTP clients like Axios, HTML parsers like Cheerio, DOM implementations like jsdom, and browser automation tools like Puppeteer, Selenium, and Playwright.

Prerequisites

Scraping a website with Node.js requires some initial setup. Let's go through it in this section.

Set Up the Environment

Download and install the latest Node.js version from the Node.js download page. This installation adds Node.js to your machine's path so you can install dependencies with the Node package manager (npm).

You can write your code in any IDE, but this tutorial uses VS Code on a Windows operating system to write the scraping scripts.

Set Up a NodeJS Project

After installing Node.js and VS Code, the next step is to set up a Node.js project and install the required libraries.

First, create a new project folder with a scraper.js file and open VS Code to that directory.

You'll need an HTTP client to request the target website and a parser to read its HTML content. For this tutorial, you'll use Axios as the HTTP client and Cheerio to parse the returned HTML.

Run the following command to install these packages:

npm install axios cheerio

Now, let's start scraping!

How to Scrape a Website in NodeJS

In this section, you'll scrape product information from the ScrapingCourse demo website, starting with the full-page HTML. You'll then improve your code to target one element and scale it to scrape a whole page before exporting the extracted data to a CSV.

Step 1: Get the HTML of Your Target Page

The first step is to request the target web page and obtain its HTML using Axios.

To extract content with Axios, send a request to the website, validate the response, and log its HTML content:

// import the required library

const axios = require("axios");

// use axios to send an HTTP GET request to the website's URL

axios.get("https://scrapingcourse.com/ecommerce/")

// validate the response

.then(response => {

// print the response data

console.log(response.data);

})

.catch(error => {

// handle request errors

console.error("Error fetching website:", error);

});

The above code outputs the full-page HTML. Here's a prettified version of the result:

<!DOCTYPE html>

<html lang="en-US">

<head>

<!--- ... --->

<title>An ecommerce store to scrape – Scraping Laboratory</title>

<!--- ... --->

</head>

<body class="home archive ...">

<p class="woocommerce-result-count">Showing 1–16 of 188 results</p>

<ul class="products columns-4">

<!--- ... --->

<li>

<h2 class="woocommerce-loop-product__title">Abominable Hoodie</h2>

<span class="price">

<span class="woocommerce-Price-amount amount">

<bdi>

<span class="woocommerce-Price-currencySymbol">$</span>69.00

</bdi>

</span>

</span>

<a aria-describedby="This product has multiple variants. The options may ...">Select options</a>

</li>

<!--- ... other products omitted for brevity --->

</ul>

</body>

</html>
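Axios rejects the promise for responses outside the 2xx range by default, which is why the catch branch above also covers failed requests. If you'd rather make the status check explicit, here's a minimal sketch that uses the fetch function built into Node.js 18 and later instead of Axios. The fetchHtml name and the injectable fetchImpl parameter are assumptions made for illustration, not part of this tutorial's script:

```javascript
// a sketch: fetch a page and validate the HTTP status explicitly
// assumes Node.js 18+ (global fetch); fetchImpl is injectable for testing
async function fetchHtml(url, fetchImpl = fetch) {
  const response = await fetchImpl(url);

  // response.ok is true only for 2xx status codes
  if (!response.ok) {
    throw new Error(`Request failed with status code ${response.status}`);
  }

  // return the response body as a string
  return response.text();
}

// example usage (performs a real network request):
// fetchHtml("https://scrapingcourse.com/ecommerce/")
//   .then(html => console.log(html))
//   .catch(error => console.error("Error fetching website:", error));
```

Passing the fetch implementation as a parameter keeps the function easy to try with a stub before pointing it at a live site.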

Next, you'll see how to scrape specific product information from one element.

Step 2: Extract Data from One Element

Getting data from one element involves parsing the website's HTML with Cheerio and extracting the target element using CSS selectors.

To test it, you'll extract the first product's name, price, and image URL from the target page.

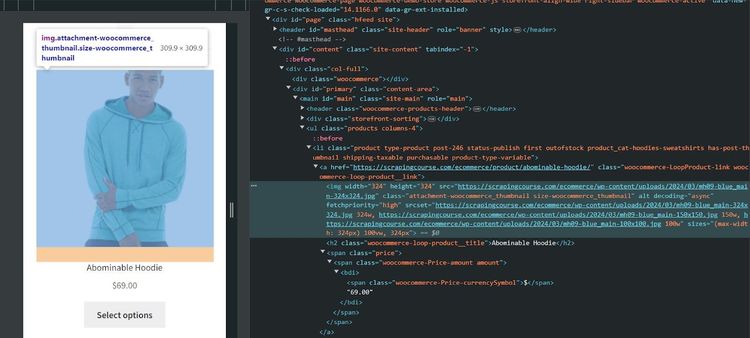

First, let's inspect the target website to expose its DOM attributes. Visit the website via a browser like Chrome, right-click the first product, and select Inspect to open the developer console:

Now, open the target URL with Axios and parse its HTML using Cheerio:

// import the required libraries

const axios = require("axios");

const cheerio = require("cheerio");

// use axios to send an HTTP GET request to the website's URL

axios.get("https://scrapingcourse.com/ecommerce/")

// validate the response

.then(response => {

// parse the returned HTML

const $ = cheerio.load(response.data);

})

Next is content extraction with Cheerio. Select the first element that matches each CSS selector, collect its text or attribute value into an object, and log the output. Finally, catch any request error:

// ...

// validate the response

.then(response => {

// ...

// extract product information from the first product

const data = {

"name": $(".woocommerce-loop-product__title").first().text(),

"price": $("span.price").first().text(),

"image_url": $("img.attachment-woocommerce_thumbnail").first().attr("src")

};

// output the extracted content

console.log(data);

})

.catch(error => {

// handle request errors

console.error("Error fetching website:", error);

});

Combine both snippets and your final code should look like this:

// import the required libraries

const axios = require("axios");

const cheerio = require("cheerio");

// use axios to send an HTTP GET request to the website's URL

axios.get("https://scrapingcourse.com/ecommerce/")

// validate the response

.then(response => {

// parse the returned HTML

const $ = cheerio.load(response.data);

// extract product information from the first product

const data = {

"name": $(".woocommerce-loop-product__title").first().text(),

"price": $("span.price").first().text(),

"image_url": $("img.attachment-woocommerce_thumbnail").first().attr("src")

};

// output the extracted content

console.log(data);

})

.catch(error => {

// handle request errors

console.error("Error fetching website:", error);

});

The above code extracts the first product's name, price, and image URL, as shown:

{

name: 'Abominable Hoodie',

price: '$69.00',

image_url: 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/mh09-blue_main-324x324.jpg'

}

Let's modify the code to extract the same details for every product on that page.

Step 3: Extract Data from All Elements

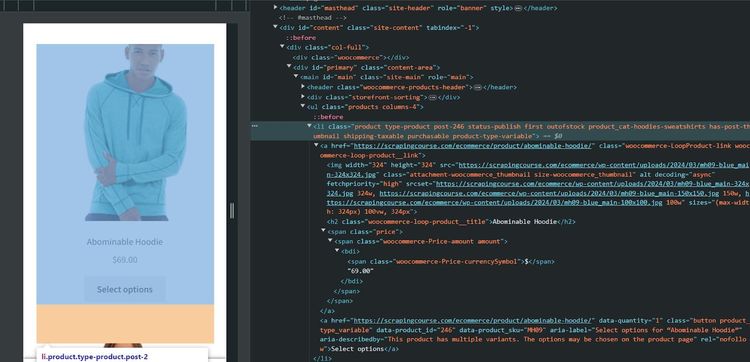

Extracting all elements requires retrieving information from all content containers on the target page. Inspect the page, and you'll see that it arranges each product in a list tag:

You'll loop through each product container to obtain names, prices, and image URLs. Let's scale the previous code to achieve that.

Visit the target website with Axios, parse its HTML with Cheerio, and obtain all the product containers:

// import the required libraries

const axios = require("axios");

const cheerio = require("cheerio");

// use axios to send an HTTP GET request to the website's URL

axios.get("https://scrapingcourse.com/ecommerce/")

// validate the response

.then(response => {

// parse the returned HTML

const $ = cheerio.load(response.data);

// extract the product containers

const containers = $("li.product");

})

Create an empty array to collect the extracted data and loop through each product container to scrape the target content. Then, log the product data array and catch request errors:

// ...

// validate the response

.then(response => {

// ...

// declare an empty array to collect the extracted data

const data_array = [];

// loop through each product container to extract its content

containers.each((index, container) => {

const data = {

"name": $(container).find(".woocommerce-loop-product__title").text(),

"price": $(container).find("span.price").text(),

"image_url": $(container).find("img.attachment-woocommerce_thumbnail").attr("src")

};

// push the data into an empty array

data_array.push(data);

})

// output the extracted content

console.log(data_array);

})

.catch(error => {

// handle request errors

console.error("Error fetching website:", error);

});

Merge both snippets to get the following complete code:

// import the required libraries

const axios = require("axios");

const cheerio = require("cheerio");

// use axios to send an HTTP GET request to the website's URL

axios.get("https://scrapingcourse.com/ecommerce/")

// validate the response

.then(response => {

// parse the returned HTML

const $ = cheerio.load(response.data);

// extract the product containers

const containers = $("li.product");

// declare an empty array to collect the extracted data

const data_array = [];

// loop through each product container to extract its content

containers.each((index, container) => {

const data = {

"name": $(container).find(".woocommerce-loop-product__title").text(),

"price": $(container).find("span.price").text(),

"image_url": $(container).find("img.attachment-woocommerce_thumbnail").attr("src")

};

// push the data into an empty array

data_array.push(data);

})

// output the extracted content

console.log(data_array);

})

.catch(error => {

// handle request errors

console.error("Error fetching website:", error);

});

The above code extracts the names, prices, and image URLs of all products on the page, as shown:

[

{

name: 'Abominable Hoodie',

price: '$69.00',

image_url: 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/mh09-blue_main-324x324.jpg'

},

// ... other products omitted for brevity

{

name: 'Artemis Running Short',

price: '$45.00',

image_url: 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/wsh04-black_main-324x324.jpg'

}

]

You now know how to extract content from multiple elements with Axios and Cheerio in JavaScript. Great job!
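The scraped prices are strings such as '$69.00'. If you plan to sort or filter products by price, you may want numeric values instead. The sketch below shows one way to do that. Both parsePrice and normalizeProducts are hypothetical helpers, and the regular expression assumes prices formatted like the ones on the demo page (a currency symbol followed by a number with optional thousands separators):

```javascript
// convert a price string such as "$69.00" or "$1,299.50" to a number
// returns null when no number can be found
function parsePrice(text) {
  // drop thousands separators, then match the first decimal number
  const match = String(text).replace(/,/g, "").match(/\d+(\.\d+)?/);
  return match ? Number(match[0]) : null;
}

// return copies of the scraped products with trimmed names and numeric prices
function normalizeProducts(products) {
  return products.map(product => ({
    ...product,
    name: product.name.trim(),
    price: parsePrice(product.price),
  }));
}

const sample = [{ name: " Abominable Hoodie ", price: "$69.00", image_url: "" }];
console.log(normalizeProducts(sample));
```

In the scraper, you could call normalizeProducts(data_array) right before logging or exporting the data.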

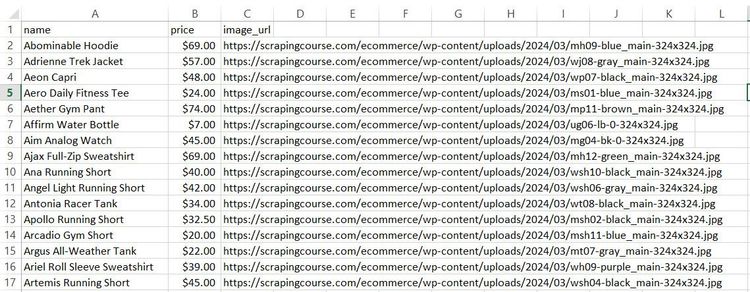

Step 4: Export Your Data to a CSV File

The final step is to organize your data into a CSV file in preparation for storage or processing.

Define a function to create CSV-formatted strings of the data. Then, write the extracted content into CSV using the Node.js built-in fs library. Extend the previous script with the following code block to achieve this:

const fs = require("fs");

// ...

// validate the response

.then(response => {

// ...

// specify the CSV headers

const headers = ["name", "price", "image_url"];

// add the headers to the CSV file

let csvData = headers.join(",") + "\n";

// create CSV-formatted strings

data_array.forEach(item => {

const row = Object.values(item).join(",");

csvData += row + "\n";

});

// write the extracted data to a CSV

fs.writeFile("products.csv", csvData, err => {

if (err) {

console.error("Error writing CSV file:", err);

} else {

console.log("CSV file written successfully.");

}

});

})

Merge that snippet with the previous code, and you should get the following complete code:

// import the required libraries

const axios = require("axios");

const cheerio = require("cheerio");

const fs = require("fs");

// use axios to send an HTTP GET request to the website's URL

axios.get("https://scrapingcourse.com/ecommerce/")

// validate the response

.then(response => {

// parse the returned HTML

const $ = cheerio.load(response.data);

// extract the product containers

const containers = $("li.product");

// declare an empty array to collect the extracted data

const data_array = [];

// loop through each product container to extract its content

containers.each((index, container) => {

const data = {

"name": $(container).find(".woocommerce-loop-product__title").text(),

"price": $(container).find("span.price").text(),

"image_url": $(container).find("img.attachment-woocommerce_thumbnail").attr("src")

};

// push the data into an empty array

data_array.push(data);

});

// specify the CSV headers

const headers = ["name", "price", "image_url"];

// add the headers to the CSV file

let csvData = headers.join(",") + "\n";

// create CSV-formatted strings

data_array.forEach(item => {

const row = Object.values(item).join(",");

csvData += row + "\n";

});

// write the extracted data to a CSV

fs.writeFile("products.csv", csvData, err => {

if (err) {

console.error("Error writing CSV file:", err);

} else {

console.log("CSV file written successfully.");

}

});

})

.catch(error => {

// handle request errors

console.error("Error fetching website:", error);

});

The code writes the extracted data to a CSV. See the result below:

You just extracted data from a single page with JavaScript and saved it in a CSV. Want to advance your Node.js scraping skills and scrape several pages? Keep reading in the next section.
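One caveat about the CSV step above: joining values with plain commas produces a broken row if a product name or URL itself contains a comma, a double quote, or a line break. Here's a minimal sketch of quoting such fields, following the common CSV convention of wrapping the field in double quotes and doubling any embedded quotes. The escapeCsvField and toCsv names are hypothetical helpers, not part of the original script:

```javascript
// quote a value if it contains a comma, a double quote, or a line break
function escapeCsvField(value) {
  const text = String(value ?? "");
  if (/[",\r\n]/.test(text)) {
    // double any embedded quotes and wrap the whole field in quotes
    return `"${text.replace(/"/g, '""')}"`;
  }
  return text;
}

// build a CSV string from an array of objects and a list of column names
function toCsv(rows, headers) {
  const lines = [headers.map(escapeCsvField).join(",")];
  for (const row of rows) {
    lines.push(headers.map(header => escapeCsvField(row[header])).join(","));
  }
  return lines.join("\n") + "\n";
}

const csv = toCsv(
  [{ name: 'Hoodie, "Blue"', price: "$69.00", image_url: "https://example.com/a.jpg" }],
  ["name", "price", "image_url"]
);
console.log(csv);
```

The string returned by toCsv(data_array, headers) could be passed to fs.writeFile in place of csvData. Reading values by header name also keeps the columns aligned even if the object keys are declared in a different order.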

Advanced Web Scraping Techniques with JavaScript

In this advanced section, you'll learn how to crawl the target page with Axios and Cheerio, deal with anti-bots, and use a headless browser.

Web Crawling with NodeJS

Web crawling involves following all the links on a website and scraping content from the resulting pages. It's essential for scraping paginated websites.

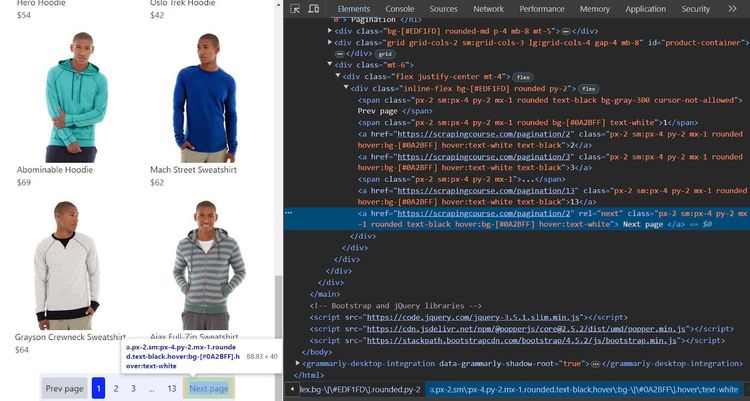

In this example, you'll use the "next button" method to scrape product information from the ScrapingCourse pagination challenge page.

Let's inspect the target website to see how it handles pagination. Right-click the "Next page" button on the navigation bar and click Inspect:

Axios will visit that next-page link iteratively until it's no longer in the DOM. First, define a scraper function that visits the target website and extracts content from its first page into an array. The function accepts a URL parameter, which represents the target website's URL:

// import the required libraries

const axios = require("axios");

const cheerio = require("cheerio");

// define a function to scrape content from a single page

async function scraper(url) {

// open the target website

const response = await axios.get(url);

// parse the returned HTML

const $ = cheerio.load(response.data);

// extract the product containers

const containers = $(".flex.flex-col.items-center.rounded-lg")

// declare an empty array to collect all extracted data

const data_array = [];

// extract content from the current page

containers.each((index, container) => {

const data = {

"name": $(container).find(".self-start.text-left.w-full > span:first-child").text(),

"price": $(container).find(".text-slate-600").text(),

"image_url": $(container).find("img").attr("src")

};

// push the data into an empty array

data_array.push(data);

});

// return the extracted data

return data_array;

}

Extend your code with a function that continuously scrapes content from the next-page link until the last page.

In this function, set the initial page as the starting URL. Open a while loop that updates the initial data array with the newly extracted data and gets the next link URL from the web page:

// scrape all pages by following the next-page link in a loop

async function scrapeAllPages(startUrl) {

// set the initial page

let currentPage = startUrl;

// declare an array to collect all extracted data

let allItems = [];

while (currentPage) {

// execute the scraper function to scrape content from the current page

const items = await scraper(currentPage);

// update the initial array with new content

allItems = allItems.concat(items);

}

}

Extend the loop to get the next-page link. Update the next-page URL, open it iteratively, and return the updated data array:

// scrape all pages by following the next-page link in a loop

async function scrapeAllPages(startUrl) {

// ...

while (currentPage) {

// ...

// load the HTML content of the current page into Cheerio

const $ = cheerio.load(await axios.get(currentPage).then(res => res.data));

// find the next page link

const nextPage = $('a:contains("Next page")').attr("href");

// log the URL of the next page to scrape

console.log("Scraping", nextPage)

// update currentPage to the next page URL if there is a next page

currentPage = nextPage ? nextPage : null;

}

// return the scraped content

return allItems;

}

Finally, run the above function and log the extracted data by adding the following to your code:

// execute the scraper function

scrapeAllPages("https://scrapingcourse.com/pagination")

.then(allItems => {

// log the extracted data

console.log(allItems);

console.log("Scraping complete.");

})

.catch(error => {

console.error("Error scraping pages:", error);

});

Combining all the snippets gives the following final code:

// import the required libraries

const axios = require("axios");

const cheerio = require("cheerio");

// define a function to scrape content from a single page

async function scraper(url) {

// open the target website

const response = await axios.get(url);

// parse the returned HTML

const $ = cheerio.load(response.data);

// extract the product containers

const containers = $(".flex.flex-col.items-center.rounded-lg")

// declare an empty array to collect the extracted data

const data_array = [];

// loop through each product container to extract its content

containers.each((index, container) => {

const data = {

"name": $(container).find(".self-start.text-left.w-full > span:first-child").text(),

"price": $(container).find(".text-slate-600").text(),

"image_url": $(container).find("img").attr("src")

};

// push the data into an empty array

data_array.push(data);

});

// return the extracted data

return data_array;

}

// scrape all pages by following the next-page link in a loop

async function scrapeAllPages(startUrl) {

// set the initial page

let currentPage = startUrl;

// declare an array to collect all extracted data

let allItems = [];

while (currentPage) {

// execute the scraper function to scrape content from the current page

const items = await scraper(currentPage);

// update the initial array with new content

allItems = allItems.concat(items);

// load the HTML content of the current page into Cheerio

const $ = cheerio.load(await axios.get(currentPage).then(res => res.data));

// find the next page link

const nextPage = $('a:contains("Next page")').attr("href");

// log the URL of the next page to scrape

console.log("Scraping", nextPage)

// update currentPage to the next page URL if there is a next page

currentPage = nextPage ? nextPage : null;

}

// return the scraped content

return allItems;

}

// execute the scraper function

scrapeAllPages("https://scrapingcourse.com/pagination")

.then(allItems => {

// log the extracted data

console.log(allItems);

console.log("Scraping complete.");

})

.catch(error => {

console.error("Error scraping pages:", error);

});

The code navigates all pages and outputs the names, prices, and image URLs from each product across all pages:

[

{

name: 'Chaz Kangeroo Hoodie',

price: '$52',

image_url: 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/mh01-gray_main.jpg'

},

// ... other products omitted for brevity

{

name: 'Breathe-Easy Tank',

price: '$34',

image_url: 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/wt09-white_main.jpg'

}

]

You can now extract content from multiple pages using Cheerio and Axios. Bravo!
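Note that the loop above downloads every page twice: once inside scraper and once more to find the next-page link. It also assumes the link's href is an absolute URL. The sketch below shows one way to address both, under stated assumptions: scrapePage is a hypothetical function that fetches a page once and resolves to an object with items and nextHref, and maxPages is an added safety limit. Relative links are resolved with the built-in URL class, and a Set of visited URLs guards against endless loops:

```javascript
// crawl paginated pages, fetching each page once
// scrapePage(url) is assumed to resolve to { items, nextHref }
async function crawl(startUrl, scrapePage, maxPages = 50) {
  const visited = new Set();
  const allItems = [];
  let currentPage = startUrl;

  while (currentPage && !visited.has(currentPage) && visited.size < maxPages) {
    visited.add(currentPage);
    console.log("Scraping", currentPage);

    const { items, nextHref } = await scrapePage(currentPage);
    allItems.push(...items);

    // resolve relative links against the current page; stop if there is no link
    currentPage = nextHref ? new URL(nextHref, currentPage).href : null;
  }

  return allItems;
}

// demo with an in-memory "site" instead of real HTTP requests
const fakeSite = {
  "https://example.com/pagination": { items: ["a", "b"], nextHref: "/pagination/2" },
  "https://example.com/pagination/2": { items: ["c"], nextHref: null },
};

crawl("https://example.com/pagination", async url => fakeSite[url])
  .then(items => console.log(items));
```

With Axios and Cheerio, scrapePage could call axios.get(url) once, build the item list from the parsed HTML, and read nextHref from the same Cheerio instance.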

Avoid Getting Blocked When Scraping with JavaScript

Anti-bot measures may prevent you from accessing your target data while scraping single or multiple pages with JavaScript. You'll have to find a way to bypass them to scrape without getting blocked.

You can set up a web scraping proxy or configure request headers to avoid anti-bot detection. However, these methods are usually insufficient against anti-bots like Cloudflare and Akamai, which employ more advanced mechanisms, like machine learning, to detect bots.
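As a rough illustration of the header and proxy setup mentioned above, the sketch below builds an Axios request config. The buildRequestConfig helper is hypothetical, and the User-Agent string and proxy address are placeholder values you would replace with your own. The headers, timeout, and proxy fields are standard Axios request options:

```javascript
// build an Axios request config with browser-like headers and an optional proxy
// the header values and proxy address below are placeholders, not recommendations
function buildRequestConfig(proxy) {
  const config = {
    headers: {
      "User-Agent":
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
      "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
      "Accept-Language": "en-US,en;q=0.9",
    },
    // give up on requests that take longer than 10 seconds
    timeout: 10000,
  };

  // Axios accepts a proxy object with protocol, host, and port
  if (proxy) {
    config.proxy = { protocol: proxy.protocol, host: proxy.host, port: proxy.port };
  }

  return config;
}

const config = buildRequestConfig({ protocol: "http", host: "127.0.0.1", port: 8080 });
console.log(config);

// usage with Axios (requires `npm install axios`):
// const axios = require("axios");
// axios.get("https://scrapingcourse.com/ecommerce/", config)
//   .then(response => console.log(response.status));
```

Even with a configuration like this, advanced anti-bot systems can still detect and block the request.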

For instance, the current scraper won't work with a Cloudflare-protected website like the G2 Review page. Try it out with the following code:

// import the required libraries

const axios = require("axios");

const cheerio = require("cheerio");

// use axios to send an HTTP GET request to the website's URL

axios.get("https://www.g2.com/products/asana/reviews")

// validate the response

.then(response => {

// parse the returned HTML

const $ = cheerio.load(response.data);

// output the extracted content

console.log($.html())

})

.catch(error => {

// handle request errors

console.error("Error fetching website:", error);

});

The scraper gets blocked with an Axios 403 forbidden error, indicating that the request couldn't bypass Cloudflare Turnstile:

AxiosError: Request failed with status code 403

The best way to bypass any anti-bot system and scrape without getting blocked is to use a web scraping API like ZenRows. It auto-rotates premium proxies, fixes your request headers, and helps you bypass CAPTCHAs and other anti-bot measures. ZenRows also features headless browser functionality for interacting with dynamic web pages.

Let's use ZenRows to scrape the protected website that blocked you previously.

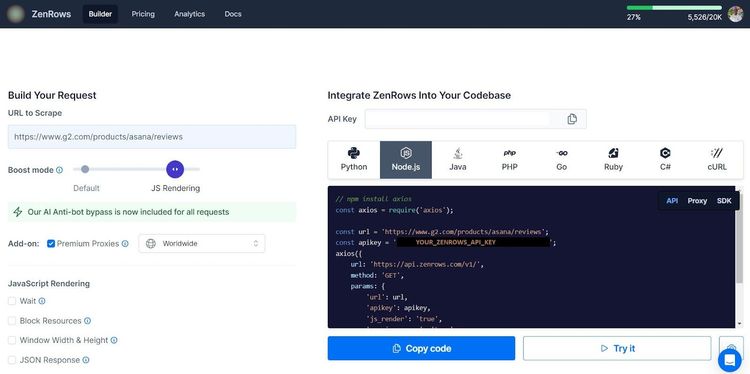

Sign up to open the Request Builder. Paste the target URL in the link box, set the Boost mode to JS Rendering, and activate Premium Proxies. Select Node.js as your language choice and use the API request mode. Copy and paste the generated code into your scraper file:

Here's a slightly modified version of the generated code:

// npm install axios

const axios = require("axios");

axios({

url: "https://api.zenrows.com/v1/",

method: "GET",

params: {

"url": "https://www.g2.com/products/asana/reviews",

"apikey": "<YOUR_ZENROWS_API_KEY>",

"js_render": "true",

"premium_proxy": "true",

},

})

.then(response => console.log(response.data))

.catch(error => console.log(error));

The code accesses the protected website and extracts its full-page HTML. See a prettified version of the result below, showing only the essential elements:

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" />

<link href="https://www.g2.com/images/favicon.ico" rel="shortcut icon" type="image/x-icon" />

<title>Asana Reviews, Pros + Cons, and Top Rated Features</title>

</head>

<body>

<!-- other content omitted for brevity -->

</body>

Congratulations! Your scraper just bypassed an anti-bot-protected website using ZenRows.

How to Scrape with a Headless Browser in NodeJS

Many websites render content dynamically with JavaScript. An example is infinite scrolling.

Axios and Cheerio don't execute JavaScript, so they can't scrape content that loads dynamically. To scrape such websites, you need a headless browser tool like Puppeteer, Playwright, or Selenium.

A headless browser is a browser that runs without a graphical user interface, which you control from code to automate actions like clicking, typing, and scrolling.

Let's use Puppeteer to scrape product information from the ScrapingCourse infinite scrolling challenge page to see how it works.

To start, install Puppeteer using npm:

npm i puppeteer

Define a function that obtains all product containers and iterates through them to extract names, prices, and image URLs:

// import the required library

const puppeteer = require("puppeteer");

// define a scraper function

async function scraper(page) {

// iterate through the product containers to extract the products

const products = await page.$$eval(".flex.flex-col.items-center.rounded-lg", elements => {

return elements.map(element => {

const nameElement = element.querySelector(".self-start.text-left.w-full > span:first-child");

const priceElement = element.querySelector(".text-slate-600");

const imageElement = element.querySelector("img");

});

});

}

Check that each element exists before reading it, and return the values in an object for each product. Then, log the extracted data:

// import the required library

const puppeteer = require("puppeteer");

// define a scraper function

async function scraper(page) {

// ...

return elements.map(element => {

// ...

// return the extracted data

return {

name: nameElement ? nameElement.textContent.trim() : "",

price: priceElement ? priceElement.textContent.trim() : "",

image: imageElement ? imageElement.src : ""

};

});

});

// output the result data

console.log(products);

}

Next is to implement the scrolling logic. Launch Puppeteer in headless mode, create a new page instance, and open the target website:

(async () => {

// launch the browser in headless mode

const browser = await puppeteer.launch({ headless: "new" });

const page = await browser.newPage();

// open the target page

await page.goto("https://scrapingcourse.com/infinite-scrolling");

})();

Set the initial scroll height to zero. Open a while loop that scrolls to the bottom of the page and waits for more content to load. Then, get the new page height. If it equals the previous height, no more content has loaded, so run the scraper and break the loop. Otherwise, update the previous height and keep scrolling:

(async () => {

// ...

// get the last scroll height

let lastHeight = 0;

while (true) {

// scroll to bottom

await page.evaluate("window.scrollTo(0, document.body.scrollHeight);");

// wait for the page to load

await new Promise(resolve => setTimeout(resolve, 10000));

// get the new height value

const newHeight = await page.evaluate("document.body.scrollHeight");

// break the loop if the height hasn't changed (no more content to load)

if (newHeight === lastHeight) {

// extract data once all content has loaded

await scraper(page);

break;

}

// update the last height to the new height

lastHeight = newHeight;

}

// close the browser

await browser.close();

})();

Merge all the snippets. Your final code should look like this:

// import the required library

const puppeteer = require("puppeteer");

// define a scraper function

async function scraper(page) {

// iterate through the product containers to extract the products

const products = await page.$$eval(".flex.flex-col.items-center.rounded-lg", elements => {

return elements.map(element => {

const nameElement = element.querySelector(".self-start.text-left.w-full > span:first-child");

const priceElement = element.querySelector(".text-slate-600");

const imageElement = element.querySelector("img");

// return the extracted data

return {

name: nameElement ? nameElement.textContent.trim() : "",

price: priceElement ? priceElement.textContent.trim() : "",

image: imageElement ? imageElement.src : ""

};

});

});

// output the result data

console.log(products);

}

(async () => {

// launch the browser in headless mode

const browser = await puppeteer.launch({ headless: "new" });

const page = await browser.newPage();

// open the target page

await page.goto("https://scrapingcourse.com/infinite-scrolling");

// get the last scroll height

let lastHeight = 0;

while (true) {

// scroll to bottom

await page.evaluate("window.scrollTo(0, document.body.scrollHeight);");

// wait for the page to load

await new Promise(resolve => setTimeout(resolve, 10000));

// get the new height value

const newHeight = await page.evaluate("document.body.scrollHeight");

// break the loop if the height hasn't changed (no more content to load)

if (newHeight === lastHeight) {

// extract data once all content has loaded

await scraper(page);

break;

}

// update the last height to the new height

lastHeight = newHeight;

}

// close the browser

await browser.close();

})();

The code scrolls the page continuously and extracts all product names, prices, and image sources:

[

{

'name': 'Chaz Kangeroo Hoodie',

'price': '$52',

'image': 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/mh01-gray_main.jpg'

},

// ... other products omitted for brevity

{

'name': 'Breathe-Easy Tank',

'price': '$34',

'image': 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/wt09-white_main.jpg'

}

]

You just advanced your scraping knowledge by scraping a website that loads content dynamically with infinite scrolling using Puppeteer. Good job!
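The scrolling loop above waits a fixed 10 seconds per round and has no upper bound on the number of rounds. Here's a sketch of the same stop-when-the-height-stops-growing idea with a configurable delay and a round limit. The scrollUntilStable function and its actions parameter are hypothetical. The Puppeteer wiring is shown in the comment at the end, and the demo uses a fake page so the snippet runs without a browser:

```javascript
// scroll until the page height stops growing or maxRounds is reached
// actions.scroll() scrolls to the bottom; actions.getHeight() resolves to the page height
async function scrollUntilStable(actions, { delayMs = 2000, maxRounds = 30 } = {}) {
  let lastHeight = await actions.getHeight();

  for (let round = 1; round <= maxRounds; round++) {
    await actions.scroll();

    // give newly requested content time to load
    await new Promise(resolve => setTimeout(resolve, delayMs));

    const newHeight = await actions.getHeight();
    if (newHeight === lastHeight) {
      return round; // no new content appeared in this round
    }
    lastHeight = newHeight;
  }

  return maxRounds; // stopped at the limit; content may still be loading
}

// demo with a fake page whose height grows twice and then stays the same
const heights = [1000, 2000, 3000, 3000];
let scrolls = 0;
const fakeActions = {
  scroll: async () => { scrolls += 1; },
  getHeight: async () => heights[Math.min(scrolls, heights.length - 1)],
};

scrollUntilStable(fakeActions, { delayMs: 10 }).then(rounds => console.log("rounds:", rounds));

// with Puppeteer, the actions could be:
// {
//   scroll: () => page.evaluate("window.scrollTo(0, document.body.scrollHeight);"),
//   getHeight: () => page.evaluate("document.body.scrollHeight"),
// }
```

A shorter delay speeds up the scrape but risks stopping early on a slow connection, so treat the default values here as starting points to tune.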

Conclusion

In this tutorial, you've seen the basic and advanced concepts of web scraping with JavaScript and Node.js. You've learned how to:

- Obtain a website's full-page HTML with Axios.

- Retrieve data from a single element with Axios and Cheerio.

- Extract multiple elements from a single page.

- Scrape a paginated website by navigating the next-page links.

- Write collected data to a CSV file.

- Deal with dynamically loaded content, such as infinite scrolling, using Puppeteer.

Remember to watch out for anti-bot measures while scraping with JavaScript. The best way to avoid them is to integrate ZenRows with your JavaScript web scraper and scrape any website at scale without getting blocked. Try ZenRows for free!
