Many websites have implemented advanced anti-bot protection, like DataDome, to prevent you from scraping the web. The good news is you'll see how DataDome works and how to bypass it in this guide, covering:

- Method 1: Stealth browsers.

- Method 2: Quality proxies.

- Method 3: Web scraping API (the easiest and most reliable).

- Method 4: CAPTCHA bypass.

- Method 5: Scrape cached content.

- Method 6: Stay away from honeypot traps.

Ready? Let's dive in!

What Is DataDome?

DataDome is a web security suite that uses various detection techniques to prevent cyber threats, including DDoS attacks, SQL or XSS injection, and card and payment fraud. It also targets web scrapers, preventing them from scraping pages and accessing data.

DataDome protects websites across various industries, including e-commerce, news publications, music streaming, and real estate. So, you'll encounter DataDome and similar anti-bots like Cloudflare while web scraping.

How Does DataDome Work?

DataDome uses server-side and client-side techniques to detect known and unknown bots. It maintains a regularly updated dataset of trillions of anti-bot signals to keep up with the ever-evolving bot landscape.

To improve bot detection accuracy, DataDome analyzes individual user behavior, geolocation, network data, browser fingerprints, and other signals using multi-layered machine-learning algorithms.

DataDome can prevent you from obtaining the data you want. Let's learn the different techniques to bypass it.

Method #1: Stealth Browsers

Automated browsers, including Selenium, Puppeteer, and Playwright, can't bypass DataDome's security measures on their own because they expose obvious bot-like information, like the presence of an automated WebDriver.

However, each has a patched extension with a stealth mode to increase your chances of evading DataDome during scraping. They include:

- The Puppeteer Extra Stealth Plugin for Puppeteer.

- Playwright stealth for bypassing blocks in Playwright.

- The Selenium Stealth extension for Selenium.

- Undetected ChromeDriver for Selenium.

These extensions patch inconsistencies in browser fingerprints, override JavaScript variables, and remove bot-like information specific to automated browsers.

Method #2: Quality Proxies

Stealth browsers are helpful but can be unreliable. Your IP address can get banned if it exceeds a particular request quota. Proxies are essential for web scraping as they hide your IP address, allowing you to bypass DataDome bot detection and extract data.

Proxies can be static or rotating. But the best for data extraction are residential web scraping proxies. For instance, integrating the ZenRows API with your scraper gives you auto-rotating premium proxies.

Accessing a DataDome-protected page like Best Western with ZenRows is as straightforward as the following Python snippet:

# pip install requests

import requests

# specify the target URL

url = "https://www.bestwestern.com/"

# specify the proxy parameter

proxy = "http://<YOUR_ZENROWS_API_KEY>:js_render=true&premium_proxy=true@proxy.zenrows.com:8001"

proxies = {"http": proxy, "https": proxy}

# send request and get response

response = requests.get(url, proxies=proxies, verify=False)

print(response.text)

The code above uses ZenRows to route your request through rotated proxies. Try ZenRows for free.
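If you manage your own proxy pool instead, rotation can be sketched with the standard library alone. This is a minimal illustration, not how ZenRows rotates proxies internally: the proxy URLs are hypothetical placeholders, and `proxy_rotation`, `build_opener_for`, and `fetch` are names invented for this example.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; replace them with proxies you actually control.
PROXY_POOL = [
    "http://user:pass@proxy-a.example.com:8000",
    "http://user:pass@proxy-b.example.com:8000",
    "http://user:pass@proxy-c.example.com:8000",
]


def proxy_rotation(pool):
    """Return an endless round-robin iterator over the proxy pool."""
    return itertools.cycle(pool)


def build_opener_for(proxy_url):
    """Return a urllib opener that routes HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url, rotation, timeout=10):
    """Fetch url through the next proxy in the rotation and return the body as text."""
    opener = build_opener_for(next(rotation))
    with opener.open(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Each call to `fetch()` uses the next proxy in the pool, so consecutive requests leave from different IP addresses. A production scraper would also retire proxies that start returning 403 responses.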

Method #3: Web Scraping API

A more straightforward and effective solution to evade DataDome is to use a web scraping API like ZenRows. It fixes your request headers, auto-rotates premium proxies, and bypasses CAPTCHAs and anti-bot protections like DataDome.

For example, the previous DataDome-protected website will block your scraper. Try it out with the following Python code:

# import the required library
import requests

response = requests.get("https://www.bestwestern.com")

if response.status_code != 200:
    print(f"An error occurred with {response.status_code}")
else:
    print(response.text)

The code outputs a 403 forbidden error, indicating that DataDome has blocked your request:

An error occurred with 403

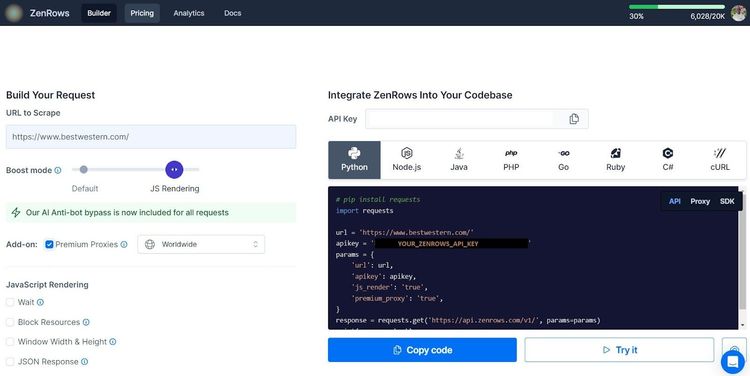

Let's retry that request with ZenRows to bypass DataDome. Sign up to load the ZenRows Request Builder. Paste the target URL in the link box, toggle on JS Rendering, and activate Premium Proxies. Choose your programming language (we've used Python in this example) and select the API request mode. Then, copy and paste the generated code into your script.

A slightly modified version of the generated code should look like this:

# pip install requests

import requests

# define your request parameters

params = {
    "url": "https://www.bestwestern.com/",
    "apikey": "<YOUR_ZENROWS_API_KEY>",
    "js_render": "true",
    "premium_proxy": "true",
}

# send your request and get the response

response = requests.get("https://api.zenrows.com/v1/", params=params)

print(response.text)

The above code accesses the protected website and outputs its HTML:

<html lang="en-us">

<head>

<title>Best Western Hotels - Book Online For The Lowest Rate</title>

</head>

<body class="bestWesternContent bwhr-brand">

<header>

<!-- ... -->

</header>

<!-- ... -->

</body>

</html>

You just bypassed DataDome with ZenRows. That's great!

Method #4: CAPTCHA Bypass

Solving DataDome CAPTCHA can serve as hard proof that the user is human. There are two types of CAPTCHA-solving services:

- Automated CAPTCHA solvers: These provide quick solutions to challenges based on machine learning techniques, such as optical character recognition (OCR) and object detection.

- Human solvers: They are a team of human workers who manually solve all CAPTCHAs and provide you with a response. Although slow and expensive, this method is reliable.

However, it's best to avoid letting your scraper hit CAPTCHA, as it can slow the scraping process and add costs. The best way is to bypass DataDome CAPTCHA entirely by using CAPTCHA proxies. A good CAPTCHA proxy should be fast, scalable, and able to support different renderings without compromising speed.

Method #5: Scrape Cached Content

Search engines like Google and Bing keep cached versions of websites. Another way to bypass a DataDome-protected website is to scrape its cached version from a search engine's snapshot.

You can access Google's cache of a web page with the following URL format:

<https://webcache.googleusercontent.com/search?q=cache:{website_url}>

For instance, here's the URL to access a cached version of Best Western's home page:

https://webcache.googleusercontent.com/search?q=cache:https://www.bestwestern.com/

You can also access old copies of a protected website from the Internet Archive, a catalog of web page snapshots. Paste the URL of the protected website in the link box and select a snapshot date to view its archive.

However, the downside of these methods is that archived and cached website versions can be old, and you'll likely scrape outdated content. Additionally, the cache method won't work if the target website doesn't support caching or the search engine changes its caching policies.
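The URL formats above are easy to generate in code. The sketch below only builds the URLs and sends no requests. The helper names are made up for this example, and whether a given cache or snapshot exists depends on the search engine and the archive.

```python
from urllib.parse import quote, urlencode


def google_cache_url(page_url):
    """Build the Google cache URL for page_url, using the format shown above."""
    return "https://webcache.googleusercontent.com/search?q=cache:" + quote(page_url, safe=":/")


def wayback_snapshot_url(page_url, timestamp):
    """Build a Wayback Machine URL for the snapshot closest to timestamp (YYYYMMDD digits)."""
    return f"https://web.archive.org/web/{timestamp}/{page_url}"


def wayback_availability_url(page_url, timestamp=None):
    """Build a query URL for the Wayback Machine availability API."""
    params = {"url": page_url}
    if timestamp:
        params["timestamp"] = timestamp
    return "https://archive.org/wayback/available?" + urlencode(params)
```

For example, `google_cache_url("https://www.bestwestern.com/")` reproduces the Best Western cache URL shown earlier, and the availability URL returns JSON describing the closest archived snapshot, if one exists.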

Method #6: Stay Away From Honeypot Traps

Honeypots are traps that mimic legitimate websites or resources. They could be hidden links, fake data repositories, login forms, or decoy servers. DataDome employs such traps to detect and block web scraping bots.

You can avoid honeypots by analyzing the network traffic patterns to identify deceptive links and deploying premium proxies to mimic a legitimate user. Then, ensure you respect a website's robots.txt during scraping.
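One simple precaution is to skip links a human visitor could never see. The sketch below is an assumption about what a hidden-link honeypot might look like, not a description of DataDome's actual traps. It only inspects each link's own `hidden` attribute and inline style, so links hidden through CSS classes or parent elements would still slip through. `VisibleLinkCollector` is a name invented for this example.

```python
from html.parser import HTMLParser

# Inline style fragments that hide an element from human visitors.
HIDDEN_STYLE_MARKERS = ("display:none", "visibility:hidden")


class VisibleLinkCollector(HTMLParser):
    """Collect link targets, separating visible links from hidden ones."""

    def __init__(self):
        super().__init__()
        self.visible_links = []
        self.suspicious_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # Normalize the inline style so "display: none" and "display:none" match.
        style = (attributes.get("style") or "").replace(" ", "").lower()
        is_hidden = "hidden" in attributes or any(
            marker in style for marker in HIDDEN_STYLE_MARKERS
        )
        if is_hidden:
            self.suspicious_links.append(href)
        else:
            self.visible_links.append(href)
```

Feed the page's HTML to `collector.feed(html)` and only follow the URLs in `collector.visible_links`.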

Advanced Techniques DataDome Uses to Detect Bots

DataDome's security measures are advanced and hard to beat. Understanding how they work can help you bypass them.

Server-side Measures

DataDome employs server-side bot detection measures to analyze the user's browsing session, server connection pattern, and all related metadata. This method leverages browsing session protocol specifications, including HTTP, TCP, and TLS, to fingerprint a user and detect inconsistencies like bot-like behavior.

HTTP/2 Fingerprinting

HTTP/2 is a binary protocol that sends data as frames within multiplexed streams, allowing concurrent requests and responses over a single connection. It also introduces header field compression to reduce overhead. The HTTP/2 fingerprinting method analyzes the client-server protocol configurations, including the TLS handshake, exchanged data streams, and compression algorithm, to identify the request source.

TCP/IP Fingerprinting

TCP/IP allows computers to communicate over a network. TCP/IP fingerprinting is a measure that identifies the hosting device of the request source. It analyzes parameters like TCP headers, supported TCP options, and window size and matches these with a database of known devices to detect irregular fingerprint patterns.

TLS Fingerprinting

TLS fingerprinting is a server-side technique that web servers use to determine a web client's identity (browsers, CLI tools, or scripts). It identifies the client by analyzing only the parameters in the first packet of the connection (the TLS ClientHello), before any application data exchange occurs.
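To make this concrete, here is a sketch of how handshake parameters can be reduced to a single fingerprint. This guide doesn't say which format DataDome uses, so the example borrows the public JA3 scheme as a stand-in: five fields from the ClientHello are joined into a string and hashed with MD5. The numeric values in the usage note are illustrative, not captured from a real client.

```python
import hashlib


def ja3_string(tls_version, ciphers, extensions, curves, point_formats):
    """Join ClientHello fields in JA3 order: values with "-", fields with ","."""
    fields = [
        str(tls_version),
        "-".join(str(value) for value in ciphers),
        "-".join(str(value) for value in extensions),
        "-".join(str(value) for value in curves),
        "-".join(str(value) for value in point_formats),
    ]
    return ",".join(fields)


def ja3_hash(tls_version, ciphers, extensions, curves, point_formats):
    """Return the MD5 hex digest of the JA3 string."""
    raw = ja3_string(tls_version, ciphers, extensions, curves, point_formats)
    return hashlib.md5(raw.encode("ascii")).hexdigest()
```

For instance, `ja3_string(771, [4865, 4866], [0, 11, 10], [29, 23], [0])` yields `771,4865-4866,0-11-10,29-23,0`. Because the order of ciphers and extensions is part of the string, an HTTP library and a real browser produce different hashes even when they send the same User-Agent header.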

Server-side Behavioral

This technique analyzes browsing sessions and navigations based on server logs, including requests, executed and attempted operations, IP addresses, and interaction with honeypots.

This data is monitored for anomalies and outliers. For instance, if the frequency of the requests is too high, it can be rate-limited; if a request's country of origin changes during a browsing session, it indicates the activation of a proxy.
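The two anomalies just mentioned can be sketched as a small session monitor. This is a toy model under stated assumptions: the thresholds, the flag names, and the `SessionMonitor` class are invented for illustration and don't reflect DataDome's real rules.

```python
from collections import defaultdict, deque


class SessionMonitor:
    """Flag sessions that send requests too fast or change country mid-session."""

    def __init__(self, max_requests=5, window_seconds=10.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps = defaultdict(deque)  # session id -> recent request times
        self._countries = {}  # session id -> first country seen

    def observe(self, session_id, timestamp, country):
        """Record one request and return the list of anomaly flags it triggers."""
        flags = []
        recent = self._timestamps[session_id]
        recent.append(timestamp)
        # Drop requests that fell out of the sliding time window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        if len(recent) > self.max_requests:
            flags.append("rate_limit")
        first_country = self._countries.setdefault(session_id, country)
        if country != first_country:
            flags.append("country_changed")
        return flags
```

From a scraper's point of view, this is why throttling requests and keeping one proxy location per session both matter.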

Client-side Signals

Client-side signals are parameters collected from the end-user device. They can be collected in the browser using JavaScript or an SDK in mobile applications. Some of the techniques used for client-side signals are:

Operating System and Hardware Data

DataDome collects the client-side operating system and hardware details, including the device model, vendor, manufacturer, CPU, and GPU. These details are somewhat static and are less likely to change over time but provide valuable information for identifying devices.

If you're interested in that data, you can execute the following JavaScript in your Developer Console:

console.log("OS: " + navigator.platform);

console.log("Available RAM in GB: " + navigator.deviceMemory);

document.body.innerHTML += '<canvas id="glcanvas" width="0" height="0"></canvas>';

var canvas = document.getElementById("glcanvas");

var gl = canvas.getContext("experimental-webgl");

var dbgRender = gl.getExtension("WEBGL_debug_renderer_info");

console.log("GL renderer: " + gl.getParameter(gl.RENDERER));

console.log("GL vendor: " + gl.getParameter(gl.VENDOR));

console.log("Unmasked renderer: " + gl.getParameter(dbgRender.UNMASKED_RENDERER_WEBGL));

console.log("Unmasked vendor: " + gl.getParameter(dbgRender.UNMASKED_VENDOR_WEBGL));

The output will be something similar to this:

OS: Linux x86_64

Available RAM in GB: 2

GL renderer: WebKit WebGL

GL vendor: WebKit

Unmasked renderer: NVIDIA GeForce GTX 775M OpenGL Engine

Unmasked vendor: NVIDIA Corporation

It's worth noting that fingerprinting techniques for identifying the OS and hardware are more effective in mobile applications. These methods may have access to globally persistent data such as the MAC address or the International Mobile Equipment Identity (IMEI), which makes mobile device fingerprinting more reliable.

Browser Fingerprinting

Browser fingerprinting is a technique for uniquely identifying a web browser by collecting various information about it. The collected information includes the browser type and version, screen resolution, and IP address. This data creates a unique "fingerprint" to track browsers across websites and sessions.

Some techniques employed in browser fingerprinting are Canvas fingerprinting, Audio fingerprinting, storage and persistent tracking, and media device fingerprinting.
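The idea of combining many attributes into one identifier can be sketched as follows. The attribute names and the choice of SHA-256 are assumptions made for this illustration. Real fingerprinting scripts collect far more signals and don't necessarily hash them this way.

```python
import hashlib
import json


def fingerprint(attributes):
    """Hash a dict of browser attributes into a stable hexadecimal identifier."""
    # Sorting the keys makes the result independent of collection order.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical attribute set for one browser.
browser = {
    "user_agent": "Mozilla/5.0 (X11; Linux x86_64)",
    "screen": "1920x1080",
    "timezone": "Europe/Madrid",
    "canvas_hash": "a1b2c3",
}
print(fingerprint(browser))
```

Changing any single attribute yields a different identifier, which is why a stealth plugin that patches only some values can leave a fingerprint that matches no real browser.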

Behavioral Data

Behavioral data is generated when a user interacts with a website or app. It can come from gestures like moving the mouse, clicking, touching the screen, or typing, or from sensors (like the accelerometer in a device).

So, how does DataDome succeed with these methods?

How Does DataDome Apply These Techniques?

DataDome's bot detection engine is a system capable of making quick decisions using the abovementioned detection techniques. It applies them in three layers:

- Verified bots and custom rules: It sets clear rules to allow/block requests based on their direct attributes, like source IP address, source domain, or user agent. That's where verified bots, like Google's crawler, are let in without question.

- Signature-based bot detection: This layer reduces the fingerprinting synthesis to signatures the detection engine can check on the fly. This line of defense is where most bots get caught: automated browsers, proxies, virtual machines, and emulators.

- Machine learning detection: This layer employs machine learning algorithms to learn continuously from collected signals. The algorithms help the detection machine identify subtle indicators of bot behavior and contradictions in how a device presents itself. Even advanced scrapers, built with well-configured automated browsers and residential IPs, can get caught at this level.
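The order of the three layers can be pictured as a short decision function. Everything in this sketch is hypothetical: the allowlist, the blocked IP, the signature names, and the scoring callback stand in for data and models that DataDome doesn't expose. A real system would also verify a crawler by more than its user agent, which is trivial to spoof.

```python
# Illustrative data only; none of these values come from DataDome.
VERIFIED_BOT_AGENTS = ("Googlebot",)
BLOCKED_IPS = {"203.0.113.7"}
KNOWN_BOT_SIGNATURES = {"headless-browser", "datacenter-proxy"}


def classify(request, score_model, threshold=0.5):
    """Return "allow" or "block" for a request described as a dict."""
    # Layer 1: verified bots and custom rules on direct attributes.
    if any(agent in request["user_agent"] for agent in VERIFIED_BOT_AGENTS):
        return "allow"
    if request["ip"] in BLOCKED_IPS:
        return "block"
    # Layer 2: fast lookup of precomputed fingerprint signatures.
    if request["signature"] in KNOWN_BOT_SIGNATURES:
        return "block"
    # Layer 3: a model scores whatever passed the cheaper checks.
    return "block" if score_model(request) >= threshold else "allow"
```

The cheap checks run first, so the expensive model only sees traffic that the rules and signatures couldn't decide.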

Reverse Engineering the Anti-bot System With JS Deobfuscation

Since client-side measures rely on running a script on the user's device, they must be shipped with the protected website or application. These scripts contain proprietary code and are protected using obfuscation. Obfuscation makes reverse engineering slower and more complex, but it doesn't make it impossible.

Start by heading to https://js.datadome.co/tags.js, where DataDome hosts its script, and run it through an online JavaScript deobfuscator like DeObfuscate.io. The deobfuscator renames variables to more human-friendly formats, abstracts proxy functions, and simplifies expressions.

Some obfuscation techniques aren't easily reversible and will need you to go further than automated tools.

Let's go through these techniques step by step.

String Concealing

Variables and functions are renamed to meaningless names to lower the script's readability, and all references to strings are replaced with hexadecimal representations. Besides renaming and encoding, they're hidden using a string-concealing function.

larone() is the string-concealing function in this script: it's called over 1,200 times and contains the alphabet characters from "a" to "z" plus three special symbols, which indicates character manipulation. Throughout the code, this function is often reassigned to a variable to make debugging more confusing.

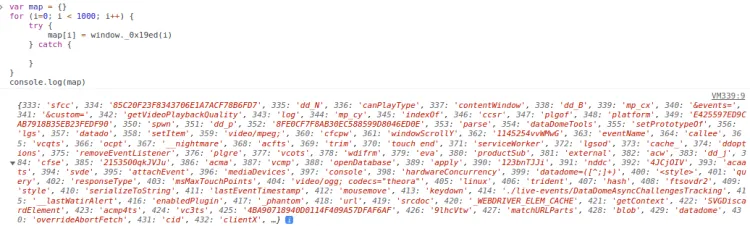

When called with an integer as a parameter, it returns a string that will uncover function calls and variable names. Translating its usage to the corresponding functions will be a massive step in the deobfuscation, so let's see what this function returns for each call in a file.

You can set a breakpoint in the browser's developer tools, where the function is available as window.larone, and write a simple loop in the console to generate a mapping from integer parameters to string outputs.

After that, we'll write a script to replace calls to the larone() function in the JS file with their string equivalent. This turns statements like document[_0x3b60ec(132)]("\x63\x61\x6e\x76\x61\x73")["\x67\x65\x74\x43\x6f\x6e\x74\x65\x78\x74"](_0x3b60ec(406)); into more expressive ones like document.createElement("canvas").getContext("webgl");.
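A replacement script along those lines could look like the sketch below. It isn't the exact script used for this analysis: it assumes you already have the integer-to-string mapping as a Python dict and a list of the aliases that larone() was reassigned to. It also unescapes only harmless characters, so an encoded quote can't break a string literal.

```python
import json
import re


def deobfuscate(source, mapping, aliases=("larone",)):
    """Inline concealed strings, decode hex escapes, and simplify property access."""
    names = "|".join(re.escape(alias) for alias in aliases)
    call_pattern = re.compile(rf"\b(?:{names})\((\d+)\)")

    def replace_call(match):
        index = int(match.group(1))
        # Leave the call untouched when the mapping has no entry for it.
        return json.dumps(mapping[index]) if index in mapping else match.group(0)

    def decode_hex(match):
        char = chr(int(match.group(1), 16))
        return char if char.isalnum() or char in " _-./:" else match.group(0)

    source = call_pattern.sub(replace_call, source)
    source = re.sub(r"\\x([0-9a-fA-F]{2})", decode_hex, source)
    # Turn obj["name"] into obj.name when it follows an expression.
    source = re.sub(r'(?<=[\w$)\]])\["([A-Za-z_$][\w$]*)"\]', r".\1", source)
    return source
```

Called with the mapping `{132: "createElement", 406: "webgl"}` and the alias `_0x3b60ec`, it rewrites the obfuscated statement above into `document.createElement("canvas").getContext("webgl");`.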

After converting these hexadecimal identifiers to human-readable versions and replacing the string-concealing function, some of the puzzles we figured out are CAPTCHAs, POST request's payload, DataDome JS Keys and the Chrome ID.

Editing Variables at Runtime

A notable feature of the script is its huge array of cryptic values. Following where it's used leads us to the first self-invoking function in the script. It shuffles the array by moving the first element to the last position until an expression equals the second integer parameter:

(function (margart, aloura) {
  var masal = margart();
  while (true) {
    try {
      var yasameen =
        (parseInt(larone(392)) / 1) * (-parseInt(larone(713)) / 2) +
        (-parseInt(larone(390)) / 3) * (-parseInt(larone(606)) / 4) +
        parseInt(larone(756)) / 5 +
        (-parseInt(larone(629)) / 6) * (-parseInt(larone(739)) / 7) +
        (-parseInt(larone(679)) / 8) * (parseInt(larone(426)) / 9) +
        parseInt(larone(385)) / 10 +
        (parseInt(larone(362)) / 11) * (-parseInt(larone(699)) / 12);
      if (yasameen === aloura) break;
      else masal.push(masal.shift());
    } catch (prabh) {
      masal.push(masal.shift());
    }
  }
})(jincy, 291953);

By setting a convenient breakpoint and adding a counter inside the function above, we see that it shuffles the array about 300 times.

The sole purpose of this function is to edit the global array of concealed strings until the yasameen variable evaluates to the second integer parameter. It reorganizes the array by shifting the first element to the last position. That happens at runtime and may disorient the reader when decrypting a concealed string.
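The same mechanism is easier to follow on a tiny array. The sketch below is a simplified Python model, not a port of DataDome's code: the checksum function and the sample strings are invented, and the loop is bounded so it can't spin forever on a bad target.

```python
def rotate_until(values, checksum, target, max_rotations=None):
    """Rotate values in place until checksum(values) == target; return the rotation count."""
    limit = len(values) if max_rotations is None else max_rotations
    for rotations in range(limit + 1):
        try:
            if checksum(values) == target:
                return rotations
        except (ValueError, IndexError):
            pass  # A failing checksum is treated like a mismatch, as in the JS catch block.
        values.append(values.pop(0))  # Move the first element to the end.
    raise ValueError("no rotation of the array matches the target")


strings = ["b", "c", "7", "10", "a"]
rotations = rotate_until(strings, lambda v: int(v[0]) + 2 * int(v[1]), target=27)
print(rotations, strings)  # 2 ['7', '10', 'a', 'b', 'c']
```

Until the array reaches its final order, index lookups return the wrong strings. That's why the mapping has to be generated at runtime, after the shuffle has finished.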

Control Flow Obfuscation

Developers like to organize their code by its responsibility, like data, business logic, HTTP libraries, encryption, etc. That allows us to separate concerns and follow the flow of execution, especially when debugging.

Good code is readable, and obfuscators know that, so they take advantage of it using control flow obfuscation. This process rearranges instructions to make following the script's logic difficult for humans. If the script is compiled and obfuscated with such techniques, it can even crash decompilers trying to make sense of it.

In our case, the primary function in the script heavily uses recursion and nested calls to blur the path of execution. That is noticeable with the main() function and the executeModule() function it defines. We've also renamed the variables to more expressive names:

!(function main(allModules, secondArgument, modulesToCall) {
  function executeModule(moduleIndex) {
    if (!secondArgument[moduleIndex]) {
      if (!allModules[moduleIndex]) {
        var err = new Error("Cannot find module '" + moduleIndex + "'");
      }
      // ...
      allModules[moduleIndex][0].call(
        // ...
        function (key) {
          return executeModule(allModules[moduleIndex][1][key] || key);
        },
        // ...
        main,
        modulesToCall
        // ...
      );
    }
    return secondArgument[moduleIndex].exports;
  }
  for (...) executeModule(modulesToCall[i]);
  return executeModule;
})(...)

Figuring out the flow of execution of such functions isn't trivial. We suspect it of orchestrating all the execution and of handling JavaScript modules. Why? Because the error message Cannot find module in the above code gives it away.

Note: This could be due to control flow obfuscation or standard JS module bundling. But it does obfuscate our understanding of the script, so we'll count it as obfuscation.
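Stripped of obfuscation, the pattern resembles a small module loader with a cache. The Python sketch below is an interpretation of the snippet above, not DataDome's code: `make_loader`, the `(factory, dependencies)` tuple layout, and the three-argument factory signature are assumptions chosen to mirror the structure of the JavaScript.

```python
def make_loader(all_modules, entry_points):
    """Build a loader for modules given as {id: (factory, dependencies)}."""
    cache = {}  # Plays the role of the second argument in the JS main() function.

    def execute_module(module_id):
        if module_id not in cache:
            if module_id not in all_modules:
                raise KeyError(f"Cannot find module '{module_id}'")
            factory, dependencies = all_modules[module_id]
            module = cache[module_id] = {"exports": {}}

            def require(key):
                # Resolve an import path to a module id, like [1][key] || key in the JS.
                return execute_module(dependencies.get(key, key))

            factory(require, module, module["exports"])
        return cache[module_id]["exports"]

    for module_id in entry_points:
        execute_module(module_id)
    return execute_module
```

Each module runs once, on first use, and later lookups return its cached exports. The recursion in `require` is what makes the execution path hard to follow in the obfuscated script.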

Analyzing the Deobfuscated Script

So far, we've peeled quite a few obfuscation layers to understand how to bypass DataDome. It's time to step back and take a bird's-eye view of the script. The script contains three categories: the global variables, the string concealing functions and the main function:

// Global variables, with import paths as keys and small integers as values
...
var global3 = {
  "./common/DataDomeOptions": 1,
  "./common/DataDomeTools": 2,
  "./http/DataDomeResponse": 5,
}
var global4 = {...}
// ...

// First self-invoking function to shuffle global array defined below
(function (first, second) {
  ...
})(globalArray, 123456)

// String-concealing function
function concealString(first, second) {
  // ...
}

// Global array of 500+ cryptic strings
globalArray = [
  "CMvZB2X2zwrpChrPB25Z",
  "AM5Oz25VBMTUzwHWzwPQBMvOzwHSBgTSAxbSBwjTAg4",
  // ...
]

// Main function
!(function main(first, second, third) {
  // Orchestrate JavaScript module passed in first argument
})(
  // Nine JavaScript modules,
  {
    1: [
      function () {},
      global1,
    ],
    2: [...],
    // ...
  },
  {},
  [6], // Entrypoint module
)

Our analysis will focus on the main function. You'll notice it has a substantial first parameter, and most of the file's code is in this parameter alone. The first parameter is an object that follows a specific pattern, and each value of the object is an array of 2 elements: a function with three arguments and a global object.

Most modules are auxiliary to bot detection and deal with events tracking, HTTP requests and string handling. We'll leave those for you to discover on your own. As for bot detection, the modules related to it are:

- Module 1: It initializes DataDome's options, like this.endpoint, this.isSalesForce, and this.exposeCaptchaFunction. It also defines and runs a function called this.check. This function loads all DataDome options and is called early in the entry point module.

- Module 3: This is where fingerprinting happens. It defines over 35 functions, with names prefixed by dd_, and runs them asynchronously. Each of these functions gathers a set of fingerprinting signals. For example, the functions this.dd_j() and this.dd_k() check window variables indicating the use of PhantomJS.

- Module 6: It's the last argument of the main() function. It loads DataDome options (using module 1) and enables tracking of events (using modules 7 and 8).

Conclusion

Whew! That was quite a ride. Congratulations on sticking with us till the end. In this article, we introduced the techniques of the DataDome bot detection system, reverse-engineered the anti-bot, and discussed some DataDome bypass techniques.

As a recap, the methods to bypass DataDome bot detection are:

- Use stealth browsers.

- Implement quality proxies.

- Employ a web scraping API.

- Use CAPTCHA-bypass services.

- Scrape the cached version of a website.

- Avoid honeypots.

While these methods are effective, they can be expensive and stressful, and there's still the possibility of getting detected while scaling. The best way to avoid this is to use an all-in-one web scraping solution like ZenRows to bypass DataDome and other anti-bots. Try ZenRows for free.

Frequent Questions

What Does DataDome Do?

DataDome is a web security platform that identifies, classifies, and blocks web scrapers and cyber threats. It uses advanced methods like machine learning to analyze user behavior, detect suspicious patterns, and provide real-time bot protection. Its services include protection against the following activities:

- Account takeover.

- Scraping.

- Denial of Service.

- Card cracking.

- Credential stuffing.

- Server overload.

- Fake account creation.

- Vulnerability scanning.

What Is a DataDome Cookie?

DataDome cookies are behavioral signals collected from users during web interaction to understand usage patterns. These parameters improve DataDome's detection models and help it ward off web scrapers more effectively.
