Web scraping is the process of automatically extracting large amounts of information from the internet. Data extraction is on the rise, but most websites don't hand over their data willingly or via an API.

Getting past well-protected websites with an HTTP client like Axios is nearly impossible, not to mention extracting JavaScript-rendered content (a.k.a. dynamic scraping). That's why headless browsers are thriving, and this is where web scraping with Puppeteer comes in.

Today, you'll learn how to use Puppeteer for web scraping in Node.js to extract your target information.

Can Puppeteer Be Used for Web Scraping?

Puppeteer is a Node library that provides a high-level API to control Chrome or Chromium over the DevTools Protocol. With it, you can browse the internet with a headless browser programmatically. Thus, it allows you to do almost anything that you can do in a standard browser (e.g., visit pages, click links, take screenshots, etc.).

One of its most common uses is precisely web scraping.

So, let's see how to build a crawler and parser.

We can tell Puppeteer to visit our target page, select elements, and extract their data. Then, parse all the links available on the page and add them to the crawl.

Does it sound like a web spider? Surely!

Let's dive in:

What Are the Advantages of Using Puppeteer for Web Scraping?

Axios and Cheerio are excellent options for scraping with JavaScript. However, they struggle with two things: dynamic content and anti-scraping software. We'll cover how to bypass anti-bot systems a bit later in the article.

Since Puppeteer is a headless browser, it has no problem scraping dynamic content. It'll load the target page and run the JS on it, maybe even trigger XHR requests to get extra content. You wouldn't be able to do that with a static scraper. The same goes for single-page applications (SPAs), where the initial HTML is almost empty of data.

What else will it do? It can also render images and allow you to take screenshots, and has plugins for bypassing anti-bots like Cloudflare. For instance, you could program your script to navigate a page and screenshot it at certain times. Then analyze those to gain a competitive advantage. The options are endless!

Prerequisites

Here's what you need to do to follow this tutorial:

For the code to work, you must have Node.js (installed directly or via nvm) and npm on your device. Note that some systems have them pre-installed. After that, install the necessary library by running npm install. It'll create a package.json file listing the dependency.

npm install puppeteer

The code runs in Node v16, but you can always check the compatibility of each feature.

How Do You Use Puppeteer to Scrape a Website?

You are now ready to start scraping! Open your favorite editor, create a new file, index.js, and add the following code:

const puppeteer = require('puppeteer');

(async () => {

// Initiate the browser

const browser = await puppeteer.launch();

// Create a new page with the default browser context

const page = await browser.newPage();

// Go to the target website

await page.goto('https://example.com');

// Get the page's HTML content

const content = await page.content();

console.log(content);

// Closes the browser and all of its pages

await browser.close();

})();

You can run the script on Node.js with node index.js. It'll print the HTML content of the example page containing <title>Example Domain</title>.

Hey, you already scraped your first page! Nice!

Selecting Nodes With Puppeteer

Printing the whole page might not be a good solution for most use cases. We'd better select parts of it and access their content or attributes.

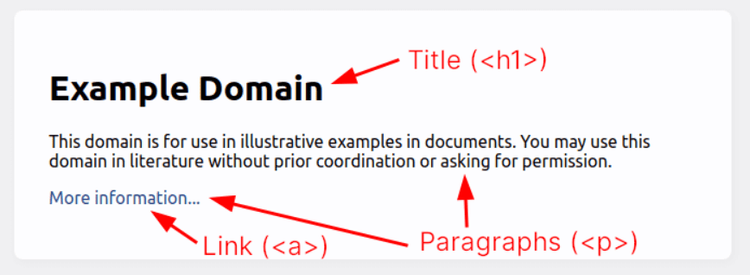

As shown above, we can extract relevant data from the highlighted nodes. We'll use CSS Selectors for this purpose. Puppeteer's Page API exposes methods to access the page much as a user browsing it would.

- $(selector), like document.querySelector, will find an element.

- $$(selector) executes document.querySelectorAll, which locates all the matching nodes.

- $x(expression) evaluates the XPath expression, which helps find text.

- evaluate(pageFunction, args) will execute any JavaScript instructions on the browser and return the result.

These are but a few examples. Puppeteer does give us all the flexibility we need.

We'll now extract the nodes: an H1 containing the title and the a tag's href attribute for the link.

await page.goto('https://example.com');

// Get the node and extract the text

const titleNode = await page.$('h1');

const title = await page.evaluate(el => el.innerText, titleNode);

// We can do both actions with one command

// In this case, extract the href attribute instead of the text

const link = await page.$eval('a', anchor => anchor.getAttribute('href'));

console.log({ title, link });

The output will be this one:

{

title: 'Example Domain',

link: 'https://www.iana.org/domains/example'

}

As seen, there are several ways to achieve the result. Puppeteer exposes several functions that will allow you to customize the data extraction.

Now, how can we get dynamic content?

Waiting for Content to Load or Appear

How do we scrape data that isn't present? It might be on a script (React uses JSON objects) or waiting for an XHR request to the server. Puppeteer allows us to do that as well. Once again, you've got many more options before you. We'll list only some of them. Check the Page API documentation for more info.

- waitForNetworkIdle stops the script until the network is idle.

- waitForSelector pauses until a node that matches selector is present.

- waitForNavigation waits for the browser to navigate to a new URL.

- waitForTimeout sleeps a number of milliseconds but is now obsolete and not recommended.

Let's use a YouTube video to illustrate that better:

The related videos, for instance, are loaded asynchronously after an XHR request. That means that we must wait for that content to be present.

Below you can see how to do it using waitForSelector.

(async () => {

const browser = await puppeteer.launch();

const page = await browser.newPage();

await page.goto('https://www.youtube.com/watch?v=tmNXKqeUtJM');

const videosTitleSelector = '#items h3 #video-title';

await page.waitForSelector(videosTitleSelector);

const titles = await page.$$eval(

videosTitleSelector,

titles => titles.map(title => title.innerText)

);

console.log(titles);

// [

// 'Why Black Holes Could Delete The Universe – The Information Paradox',

// 'Why All The Planets Are On The Same Orbital Plane',

// ...

// ]

await browser.close();

})();

We can also use waitForNetworkIdle. The output will be similar, but the process will differ. This option will pause the execution until the network goes idle. Though, it might not work in cases where the page is full of items, slow to load, or streams content, among others.

In contrast, waitForSelector will only check that at least one video title is present. When a node like that appears, it'll return and resume the execution.
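Sometimes the content never shows up, and by default waitForSelector throws once its timeout expires. As a minimal sketch, the helper below turns that into a boolean you can branch on. The name appearsInTime and the 5-second default are assumptions for illustration; page can be any object exposing Puppeteer's waitForSelector(selector, options).

```javascript
// Hypothetical helper: resolves to true if the selector shows up in time,
// and to false if waiting fails (for example, because of a timeout).
async function appearsInTime(page, selector, timeoutMs = 5000) {
  try {
    // waitForSelector rejects when the timeout elapses without a match
    await page.waitForSelector(selector, { timeout: timeoutMs });
    return true;
  } catch (error) {
    return false;
  }
}

// With Puppeteer (sketch):
// if (await appearsInTime(page, '#items h3 #video-title')) { /* extract the titles */ }
```

Returning false instead of throwing lets the scraper skip a page or fall back to another strategy rather than stop altogether.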

Congrats! You now know how to scrape some data.

Let's see how to crawl new pages:

How Do You Scrape Multiple Pages Using Puppeteer?

We'll need links first! You can't build a crawler using only the seed URL.

Let's assume that you're only interested in related videos.

First, we should scrape the links instead of the titles:

await page.waitForSelector('#items h3 #video-title');

const videoLinks = await page.$$eval(

'#items .details a',

links => links.map(link => link.href)

);

console.log(videoLinks);

// [

// 'https://www.youtube.com/watch?v=ceFl7NlpykQ',

// 'https://www.youtube.com/watch?v=d3zTfXvYZ9s',

// 'https://www.youtube.com/watch?v=DxQK1WDYI_k',

// 'https://www.youtube.com/watch?v=jSMZoLjB9JE',

// ]

Great! You went from a single page to 20. The next step is to navigate to those and continue extracting data and more links.
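To give a rough idea of that loop, here's a minimal breadth-first sketch. It isn't tied to Puppeteer: getLinks is a hypothetical async function that receives a URL and resolves to the links found on it (in practice, it would wrap page.goto and page.$$eval as above). The crawl name and the maxPages limit are also assumptions.

```javascript
// Breadth-first crawl sketch: visits each URL once, up to maxPages pages.
async function crawl(seedUrl, getLinks, maxPages = 10) {
  const visited = new Set();
  const queue = [seedUrl];

  while (queue.length > 0 && visited.size < maxPages) {
    const url = queue.shift();
    if (visited.has(url)) continue; // skip pages we've already crawled
    visited.add(url);

    // Extract the links from the current page and enqueue the new ones
    const links = await getLinks(url);
    for (const link of links) {
      if (!visited.has(link)) queue.push(link);
    }
  }

  return [...visited];
}
```

The visited set keeps the crawler from looping forever when pages link back to each other, and maxPages keeps a test run from growing out of control.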

Explore the topic further in our web crawling in JavaScript tutorial. We won't go into more details here, as it falls outside the scope of this article.

Alright! Here's a quick recap of what you've seen so far:

1. Install and run Puppeteer.

2. Scrape data using selectors.

3. Extract links from the HTML.

4. Crawl the new links.

5. Repeat from #2.

Additional Puppeteer Features

We might have covered the fundamentals of Puppeteer scraping, but that doesn't mean there isn't much more to learn.

Let's take a look at other important features.

How Do You Take a Screenshot Using Puppeteer?

It'd be nice if you could see what the scraper is doing, right?

You can always run in headful mode with puppeteer.launch({ headless: false });. Unfortunately, that won't work for large-scale scraping. Luckily, Puppeteer can also take screenshots. That's useful for debugging, creating snapshots, etc.

await page.screenshot({ path: 'youtube.png', fullPage: true });

As easy as that! The fullPage parameter defaults to false, so you have to pass it as true to get the whole page's content.
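If you take snapshots repeatedly, a fixed file name gets overwritten on every run. One possible approach, sketched below, is to add a timestamp to the name. The helper screenshotPath is hypothetical, not part of Puppeteer.

```javascript
// Hypothetical helper: builds a unique, filesystem-friendly file name
// such as "youtube-2022-09-14T12-30-00-000Z.png".
function screenshotPath(prefix, date = new Date()) {
  // ISO timestamps contain ":" and ".", which are awkward in file names
  const stamp = date.toISOString().replace(/[:.]/g, '-');
  return `${prefix}-${stamp}.png`;
}

// With Puppeteer (sketch):
// await page.screenshot({ path: screenshotPath('youtube'), fullPage: true });
```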

We'll see later how this will be helpful for us.

Execute JavaScript With Puppeteer

You already know that Puppeteer offers multiple features to interact with the target site and browser. Say you want to try something different, though. For example, a feature that runs with JavaScript directly on the browser. How to do that?

evaluate will take any JavaScript function or expression and execute it for you. With it, you can virtually do anything on the page: add/remove DOM nodes, modify styles, check for items on localStorage and expose them on a node, read/transform cookies, etc.

Let's clear it up with an example:

We'll explore localStorage. You can access a YouTube-generated key and return it with evaluate. As we've already mentioned, you can control almost anything that happens on the browser, even things that aren't visible to users.

const storageItem = await page.evaluate("JSON.parse(localStorage.getItem('ytidb::LAST_RESULT_ENTRY_KEY'))");

console.log(storageItem);

// {

// data: { hasSucceededOnce: true },

// expiration: 1665752343778,

// creation: 1663160343778

// }

Take into account that, for brevity, we aren't adding exception handling or any other defensive measure. In the case above, if the key doesn't exist, it would return null. But other issues might throw an error and crash your scraper.
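As one example of a defensive measure, you could wrap a flaky step in a small retry helper. This is only a sketch: withRetries and its default values are assumptions, not something Puppeteer provides.

```javascript
// Hypothetical helper: runs an async task and retries it when it throws.
async function withRetries(task, retries = 3, delayMs = 500) {
  let lastError;
  for (let attempt = 1; attempt <= retries; attempt++) {
    try {
      return await task(attempt);
    } catch (error) {
      lastError = error;
      // Wait before the next attempt, but not after the last one
      if (attempt < retries) {
        await new Promise(resolve => setTimeout(resolve, delayMs));
      }
    }
  }
  // Every attempt failed: surface the last error to the caller
  throw lastError;
}

// With Puppeteer (sketch):
// const item = await withRetries(() => page.evaluate(() => localStorage.getItem('some-key')));
```

The caller still sees the error if every attempt fails, so nothing gets silently swallowed.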

Submit a Form With Puppeteer

Another typical action while browsing is submitting forms. You can replicate that behavior when scraping.

Let's explore a real use case! The same steps would apply to, say, logging in to a website.

We'll fill in the search form and submit it. For that, we need to click a button and type text. Our entry point will be the same. Then, the search form will take us to a different page.

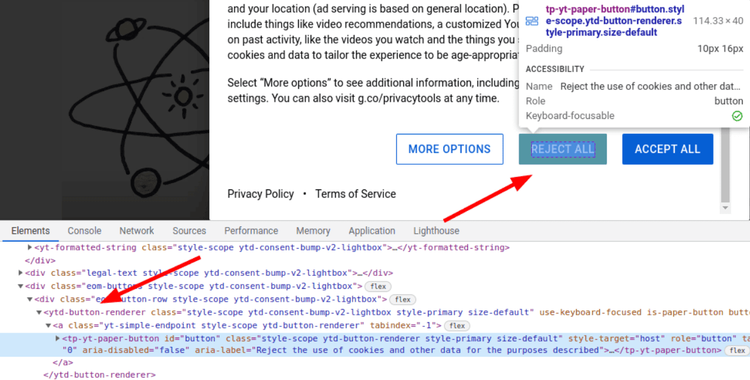

Remember that Puppeteer will browse the web without cookies. That is unless you tell it otherwise. In YouTube's case, that means seeing the cookie banner on top of the page, prohibiting interaction until you accept or reject them. Thus, we must remove that, or the search won't work.

We have to locate the button, wait for it to be present, click it, and then wait again for a second. The last step is necessary for the dialog to disappear.

According to the click's documentation, we should click and wait for navigation using Promise.all. It didn't work in our case, so we opted for the alternative way.

Don't worry about the long CSS selector. There are several buttons, and we have to be specific. Besides, YouTube uses custom HTML elements such as ytd-button-renderer.

const cookieConsentSelector = 'tp-yt-paper-dialog .eom-button-row:first-child ytd-button-renderer:first-child';

await page.waitForSelector(cookieConsentSelector);

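await page.click(cookieConsentSelector);

// Give the dialog a second to disappear (page.waitForTimeout was removed in recent Puppeteer versions)

await new Promise(resolve => setTimeout(resolve, 1000));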

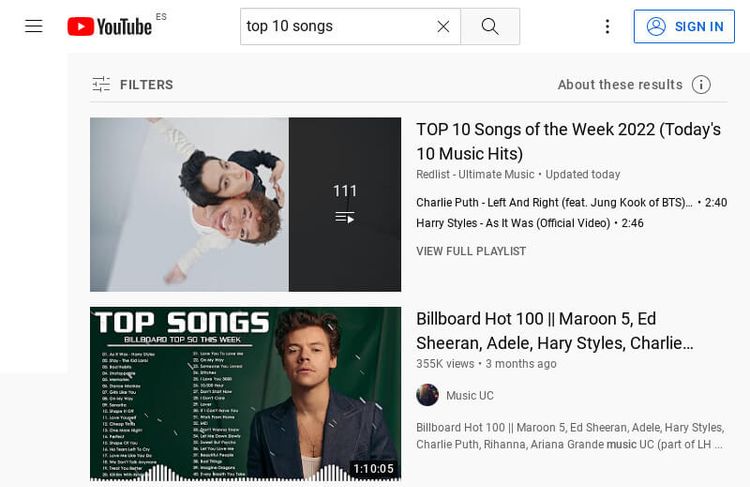

The next step is to fill in the form. For this, we'll use two Puppeteer functions: type to enter the query and press to submit the form by hitting Enter. We could also click the button.

Put simply, we're coding the instructions a user would otherwise perform directly on the browser.

const searchInputEl = await page.$('#search-form input');

await searchInputEl.type('top 10 songs');

await searchInputEl.press('Enter');

Lastly, wait for the search page to load and take a screenshot. You already know how to do that.

await page.waitForSelector('ytd-two-column-search-results-renderer ytd-video-renderer');

await page.screenshot({ path: 'youtube_search.png', fullPage: true });

And there you have it! You just submitted a form with Puppeteer.

Block or Intercept Requests in Puppeteer

So, the scraper is loading the images. That's great for debugging purposes but not for a large-scale crawling project.

Spiders should optimize resources and increase the crawling speed when possible. And not loading pictures is an easy one. To that end, we can take advantage of Puppeteer's support for resource blocking or intercepting requests.

By calling page.setRequestInterception(true), the library will enable you to check requests and abort them. It's crucial to run this part before visiting the page.

await page.setRequestInterception(true);

// Check for URLs that end with or contain .png or .jpg

page.on('request', interceptedRequest => {

if (

interceptedRequest.url().endsWith('.png') ||

interceptedRequest.url().endsWith('.jpg') ||

interceptedRequest.url().includes('.png?') ||

interceptedRequest.url().includes('.jpg?')

) {

interceptedRequest.abort();

} else {

interceptedRequest.continue();

}

});

// Go to the target website

await page.goto('https://www.youtube.com/watch?v=tmNXKqeUtJM');

Don't worry about elegance. For now, the important thing is to get the job done.

Each of the intercepted requests is an HTTPRequest. Apart from the URL, you can access the resource type. It makes it simpler for us to block all images.

// List the resource types we don't want to load

// Note: blocking 'xhr' also stops asynchronously loaded data, so remove it if you need dynamic content

const excludedResourceTypes = ['stylesheet', 'image', 'font', 'media', 'other', 'xhr', 'manifest'];

page.on('request', interceptedRequest => {

// Block resources based on their type

if (excludedResourceTypes.includes(interceptedRequest.resourceType())) {

interceptedRequest.abort();

} else {

interceptedRequest.continue();

}

});

These are the two main ways of blocking requests with Puppeteer. We can be more granular by checking the URL, or block more broadly by resource type.
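Both checks can live in a single predicate, which is also easier to test on its own. The sketch below is one possible way to do it. The shouldBlock name and the lists of types and extensions are assumptions you'd adapt to your target site.

```javascript
const blockedTypes = new Set(['image', 'media', 'font']);
const blockedExtensions = ['.png', '.jpg', '.jpeg', '.gif'];

// Returns true when a request should be aborted, based on its
// resource type or on the file extension in the URL path.
function shouldBlock(url, resourceType) {
  if (blockedTypes.has(resourceType)) return true;
  // pathname excludes the query string, so "a.png?size=2" still matches
  const { pathname } = new URL(url);
  return blockedExtensions.some(ext => pathname.toLowerCase().endsWith(ext));
}

// With Puppeteer, after page.setRequestInterception(true) (sketch):
// page.on('request', req =>
//   shouldBlock(req.url(), req.resourceType()) ? req.abort() : req.continue()
// );
```

Parsing the URL instead of matching raw strings avoids the separate endsWith and includes checks for query strings.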

We won't go into details here, but there is a plugin to block resources. And a more specific one that implements adblocker.

By blocking these resources, you might be saving 80% on bandwidth! It comes as no surprise that most of the web content is image or video based. And those weigh way more than plain text.

On top of that, less traffic means faster scraping. And if you are employing metered proxies, cheaper, too.

Speaking of proxies, how can we use them in Puppeteer?

Avoiding Bot Detection

It comes as no surprise that anti-bot software gets more popular with every passing day. Almost any website can run a defensive solution thanks to the easy integrations.

Time to learn how to bypass anti-bot solutions (e.g., Cloudflare or Akamai) using Puppeteer.

You might have already guessed it. The most common solution to avoiding detection is to use proxies with Puppeteer.

Using Proxies With Puppeteer

Proxies are servers that act as intermediaries between your connection and your target site. You send your requests to the proxy, which will then relay them to the final server.

Going through an intermediary might be slower, but it's far more effective and often necessary for scraping.

Probably the easiest way to ban a scraper is by its IP. Millions of requests from the same IP in just a day? It's a no-brainer for all defensive systems.

However, thanks to proxies, you can have different IPs. Rotating proxies can assign a new address per request, making it more difficult for anti-bots to ban your scraper.

We'll use a free one for the demo, though we don't recommend them for your project. They might do for testing but aren't reliable. Note that the one below might not work for you since they are short-lived.

(async () => {

const browser = await puppeteer.launch({

// pass the proxy to the browser

args: ['--proxy-server=23.26.236.11:3128'],

});

const page = await browser.newPage();

// example page that will print the calling IP address

await page.goto('https://www.httpbin.org/ip');

const ip = await page.$eval('pre', node => node.innerText);

console.log(ip);

// {

// "origin": "23.26.236.11"

// }

await browser.close();

})();

Puppeteer accepts a set of arguments that Chromium will set on launch. You can check their documentation on network settings for more info.

This implementation will send all the scraper's requests using the same proxy. That might not work for you. As mentioned above, unless your proxy rotates the IPs, the target server will see the same IP over and over again. And ban it.

Fortunately, there are Node.js libraries that help us with rotation. puppeteer-page-proxy supports both HTTP and HTTPS proxies, authentication, and changing the used proxy per page. Or even per request, thanks to request interception (as we saw earlier).
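If you'd rather not add a dependency, a basic rotation can be sketched by hand. The example below doesn't use puppeteer-page-proxy. It simply cycles through a list round-robin so that each browser launch gets a different proxy. The addresses and the createProxyRotator helper are hypothetical.

```javascript
// Hypothetical proxy list; replace it with proxies you control or rent.
const proxies = ['10.0.0.1:3128', '10.0.0.2:3128', '10.0.0.3:3128'];

// Returns a function that yields the next proxy on every call,
// wrapping around when the list is exhausted (round-robin).
function createProxyRotator(list) {
  let index = 0;
  return () => {
    const proxy = list[index];
    index = (index + 1) % list.length;
    return proxy;
  };
}

const nextProxy = createProxyRotator(proxies);

// With Puppeteer, each new browser could get a different proxy (sketch):
// const browser = await puppeteer.launch({ args: [`--proxy-server=${nextProxy()}`] });
```

Since the proxy is set at launch time here, changing it means starting a new browser, which is slower than switching per page.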

Avoid Geoblocking With Premium Proxies

Some anti-bot vendors, such as Cloudflare, allow clients to customize the challenge level by location.

Let's take a store based in France. It might sell a small percentage in the rest of Europe but doesn't ship to the rest of the world.

In that case, it would make sense to have different levels of strictness. Lower security in Europe since it's more common to browse the site there. Higher challenge options when accessing from outside.

The solution is the same as the section above: proxies. In this case, they must allow geolocation. Premium or residential proxies usually offer this feature. You would get different URLs for each country you want, and those will only use IPs from the selected country. They might look like this: "http://my-user--country-FR:my-password@my-proxy-provider:1234".
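Chromium's --proxy-server flag takes the proxy address without credentials, so a URL like the one above needs to be split before use. Here's a minimal sketch of that step. parseProxyUrl is a hypothetical helper, and the provider URL is the placeholder from the paragraph above.

```javascript
// Splits a proxy URL with embedded credentials into the pieces Puppeteer needs.
function parseProxyUrl(proxyUrl) {
  const { protocol, host, username, password } = new URL(proxyUrl);
  return {
    // Address only, suitable for the --proxy-server launch argument
    server: `${protocol}//${host}`,
    // The URL class percent-encodes special characters, so decode them back
    credentials: {
      username: decodeURIComponent(username),
      password: decodeURIComponent(password),
    },
  };
}

// With Puppeteer (sketch):
// const { server, credentials } = parseProxyUrl(proxyUrl);
// const browser = await puppeteer.launch({ args: [`--proxy-server=${server}`] });
// const page = await browser.newPage();
// await page.authenticate(credentials); // answers the proxy's auth challenge
```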

Setting HTTP Headers in Puppeteer

By default, Puppeteer sends HeadlessChrome as its user agent. No need for the latest tech to realize that it might be web scraping software.

Again, there are several ways to set HTTP headers in Puppeteer. One of the most common is using setExtraHTTPHeaders. You have to execute all header-related functions before visiting the page. Like this, it will have all the required data set before accessing any external site.

But be careful with this if you use it to set the user agent.

const page = await browser.newPage();

// set headers

await page.setExtraHTTPHeaders({

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36',

'custom-header': '1',

});

// example page that will print the sent headers

await page.goto('https://www.httpbin.org/headers');

const pageContent = await page.$eval('pre', node => JSON.parse(node.innerText));

const userAgent = await page.evaluate(() => navigator.userAgent);

console.log({ headers: pageContent.headers, userAgent });

// {

// headers: {

// 'Custom-Header': '1',

// 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36',

// ...

// },

// userAgent: 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/101.0.4950.0 Safari/537.36'

// }

Even if you can't spot the difference, anti-bot systems sure can.

We sent the headers, no problem there. But the navigator.userAgent property in the browser still holds the default value. In the following snippet, we'll also read two more properties (appVersion and platform) to check whether they look right.

Let's try changing the user agent via arguments on browser creation.

const browser = await puppeteer.launch({

args: ['--user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36'],

});

const appVersion = await page.evaluate('navigator.appVersion');

const platform = await page.evaluate('navigator.platform');

// {

// userAgent: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36',

// appVersion: '5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36',

// platform: 'Linux x86_64',

// }

Oops, we've got a problem here. Let's resort to a third option: setUserAgent.

await page.setUserAgent('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36');

// {

// userAgent: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36',

// appVersion: '5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36',

// platform: 'Linux x86_64',

// }

Same result as before. At least this one is easier to handle, and we could change the user agent per request. We can combine this with the user-agents Node.js package.

There must be a solution, right? Of course! Puppeteer's feature evaluateOnNewDocument can alter the navigator object before visiting the page, meaning that it sees what we want to show.

For that, we have to overwrite the platform property. The function below will return a hardcoded string when JavaScript accesses the value.

await page.evaluateOnNewDocument(() =>

Object.defineProperty(navigator, 'platform', {

get: function () {

return 'Win32';

},

})

);

// {

// userAgent: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36',

// platform: 'Win32',

// }

Great! We can now set custom headers and the user agent. Plus, modify properties that don't match.
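If you rotate user agents, a hardcoded platform can end up contradicting the user agent again. One way to keep them aligned is to derive one from the other, as in the sketch below. platformForUserAgent is a hypothetical helper that only covers desktop user agents, and the returned values are the ones desktop browsers typically report.

```javascript
// Maps a desktop user agent string to the navigator.platform value
// that a real browser on that operating system would typically report.
function platformForUserAgent(userAgent) {
  if (userAgent.includes('Windows')) return 'Win32';
  if (userAgent.includes('Macintosh')) return 'MacIntel';
  return 'Linux x86_64';
}

// With Puppeteer, pass the value into the page as an argument (sketch):
// await page.setUserAgent(userAgent);
// await page.evaluateOnNewDocument(platform => {
//   Object.defineProperty(navigator, 'platform', { get: () => platform });
// }, platformForUserAgent(userAgent));
```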

We've simplified this detection part a bit. In practice, it's common to add a plugin called Puppeteer Stealth, which applies many patches like the one above to help bypass detection. Refer to our in-depth guide on how to avoid bot detection to learn more. It uses Python for the code examples, but the principles are the same.

You did it! You now know how to start your Puppeteer scraping project and extract the data you desire.

Conclusion

In this Puppeteer tutorial, you've learned from installation to advanced topics. Don't forget the two main reasons to favor Puppeteer or other headless browsers: extracting dynamic data and bypassing anti-bot systems.

Here's a quick recap of the five main points you need to remember:

- When and why to use Puppeteer for scraping.

- Install and apply the basics to start extracting data.

- CSS Selectors to get the data you are after.

- If you can do it manually, Puppeteer might have a feature for you.

- Avoid bot detection with good proxies and HTTP headers.

Nobody said that using Puppeteer for web scraping was easy, but hey, you're now closer to accessing any content you want! You can also compare Puppeteer with Selenium if you're considering an alternative.

As one guide can't cover all the info you need, to get even closer to success, you can:

- Check the official documentation when you need additional features.

- Refer to our blog and learn from our step-by-step tutorials such as this one. For instance, we didn't cover crawling here. You need to go from one page to thousands and scale your scraping system.

- Leave it to the professionals. Your web scraping journey will surely encounter many challenges to solve. If you want to save yourself the trouble and the time, try ZenRows for free today!
