C++ remains a highly efficient language. The performance of C++ web scraping might surprise you if you have to parse tons of pages or very large ones! In this step-by-step tutorial, you'll learn how to do data scraping in C++ with the libcurl and libxml2 libraries.

Let's dive in!

Is C++ Good for Web Scraping?

C++ is a viable option for web scraping, especially when resource usage matters. At the same time, most developers tend to choose other languages. That's because they're easier to use, have a larger community, and come with more libraries.

Python web scraping, for example, is a popular choice thanks to its extensive packages. JavaScript with Node.js is also commonly used. You can discover more about the best programming languages for web scraping in our article.

Using C++ can make all the difference when performance is critical, as its low-level nature makes it fast and efficient. It's a well-suited tool for handling large-scale web scraping tasks.

C++ Web Scraping Libraries: Prerequisites

C++ isn't a language designed for the web, but some good tools exist to extract data from the internet.

To build a web scraper in C++, you'll need the following:

- libcurl: An open-source and easy-to-use HTTP client library for C and C++. It's the library that powers the cURL command-line tool.

- libxml2: An HTML and XML parser with a complete element selection API based on XPath.

libcurl will help you retrieve web pages from the web. Then, you can parse their HTML content and extract data from them with libxml2.

Before seeing how to install them, initialize a C++ project in your IDE.

On Windows, you can rely on Visual Studio with C++. Visual Studio Code with the C/C++ extension will do on macOS or Linux. Follow the instructions and create a project based on your local compiler.

Initialize it with the following scraper.cpp file:

#include <iostream>

int main() {

std::cout << "Hello, World!";

return 0;

}

The main() function will contain the scraping logic.

Next, install the C++ package manager vcpkg and set it up in VS IDEs, as explained in the official guide.

To install libcurl, run:

vcpkg install curl

Then, to install libxml2, launch:

vcpkg install libxml2

Fantastic! You're now ready to learn the basics of data scraping with C++!

How to Web Scrape in C++

To do web scraping with C++, you need to:

- Download the target page with libcurl.

- Parse the retrieved HTML document and scrape data from it with libxml2.

- Export the collected data to a file.

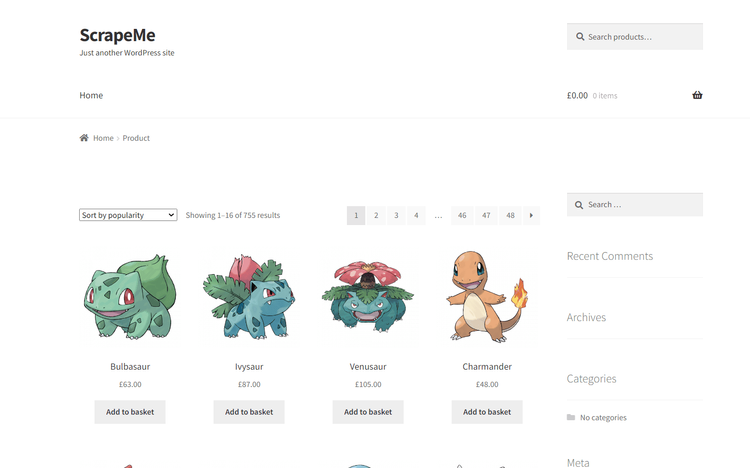

As a target site, we'll use ScrapeMe, an e-commerce that contains a paginated list of Pokémon-inspired products:

The C++ spider you're about to build will be able to retrieve all product data.

Let's perform web scraping using C++!

Step 1: Scrape by Requesting Your Target Page

Making a request with libcurl involves boilerplate you don't want to repeat every time. Encapsulate it in a reusable function that initializes a cURL handle, runs an HTTP GET request to the URL passed as a parameter, and returns the HTML document sent back by the server as a string.

std::string get_request(std::string url) {

// initialize curl locally

CURL *curl = curl_easy_init();

std::string result;

if (curl) {

// perform the GET request

curl_easy_setopt(curl, CURLOPT_URL, url.c_str());

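// libcurl receives the callback through a C variadic argument, so the

// lambda must be converted to a plain function pointer explicitly

curl_easy_setopt(curl, CURLOPT_WRITEFUNCTION, static_cast<curl_write_callback>([](char *contents, size_t size, size_t nmemb, void *response) -> size_t {

static_cast<std::string *>(response)->append(contents, size * nmemb);

return size * nmemb; }));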

curl_easy_setopt(curl, CURLOPT_WRITEDATA, &result);

curl_easy_perform(curl);

// free up the local curl resources

curl_easy_cleanup(curl);

}

return result;

}
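The write callback is the least obvious part of get_request(): libcurl calls it once per chunk of the response body and expects it to report how many bytes it handled. Here is a minimal sketch of the same idea as a plain named function. The names write_to_string and simulate_download are hypothetical, and simulate_download only imitates libcurl by calling the callback by hand, so the snippet runs without the library.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Same parameter list libcurl uses for a CURLOPT_WRITEFUNCTION callback:
// `contents` points at `size * nmemb` bytes of the response body and
// `userdata` is the pointer registered with CURLOPT_WRITEDATA.
std::size_t write_to_string(char *contents, std::size_t size, std::size_t nmemb, void *userdata) {
    auto *response = static_cast<std::string *>(userdata);
    const std::size_t total = size * nmemb;
    response->append(contents, total);
    // returning anything other than `total` signals a write error to libcurl
    return total;
}

// Hypothetical stand-in for libcurl (assumption for illustration only):
// delivers the body chunk by chunk, the way a real transfer would.
std::string simulate_download(std::vector<std::string> chunks) {
    std::string result;
    for (std::string &chunk : chunks) {
        write_to_string(&chunk[0], 1, chunk.size(), &result);
    }
    return result;
}
```

With the real library, a function like this can be passed directly, as in curl_easy_setopt(curl, CURLOPT_WRITEFUNCTION, write_to_string), because it already is a plain function pointer.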

If you have any doubts about how libcurl works, take a look at the official tutorial from the docs.

Now, use it in the main() function of scraper.cpp to retrieve HTML content as a string:

#include <iostream>

#include <curl/curl.h>

#include "libxml/HTMLparser.h"

#include "libxml/xpath.h"

// std::string get_request(std::string url) { ... }

int main() {

// initialize curl globally

curl_global_init(CURL_GLOBAL_ALL);

// download the target HTML document

// and print it

std::string html_document = get_request("https://scrapeme.live/shop/");

std::cout << html_document;

// scraping logic...

// free up the global curl resources

curl_global_cleanup();

return 0;

}

The script prints this:

<!doctype html>

<html lang="en-GB">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1, maximum-scale=2.0">

<link rel="profile" href="http://gmpg.org/xfn/11">

<link rel="pingback" href="https://scrapeme.live/xmlrpc.php">

<title>Products – ScrapeMe</title>

<!-- Omitted for brevity... -->

Great! That's the HTML code of the target page!

Step 2: Parse the HTML Data You Want Using C++

After retrieving the HTML document, feed it to libxml2:

htmlDocPtr doc = htmlReadMemory(html_document.c_str(), html_document.length(), nullptr, nullptr, HTML_PARSE_NOERROR);

htmlReadMemory() parses the HTML string and builds a tree on which you can apply XPath selectors.

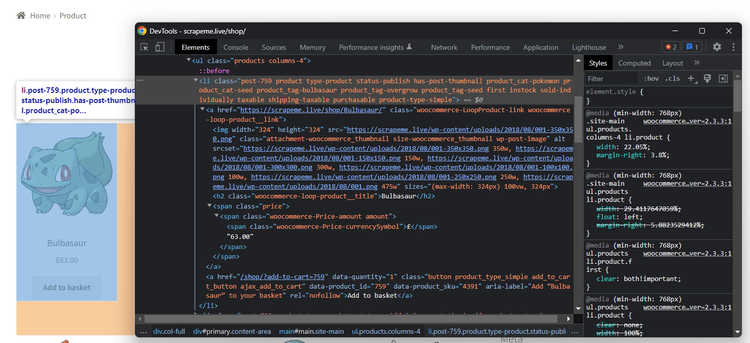

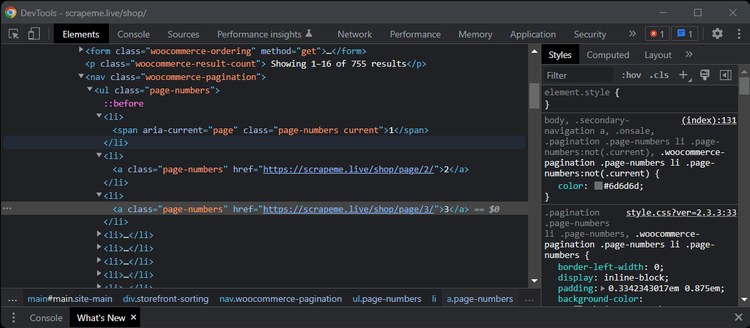

Inspect the target site to define an effective selector strategy. In the browser, right-click on a product HTML node, and opt for the "Inspect" option. The following DevTools section will open:

Analyze the HTML code and note that you can get all li.product elements with the XPath selector below:

//li[contains(@class, 'product')]

Use it to retrieve all HTML products:

xmlXPathContextPtr context = xmlXPathNewContext(doc);

xmlXPathObjectPtr product_html_elements = xmlXPathEvalExpression((xmlChar *) "//li[contains(@class, 'product')]", context);

xmlXPathNewContext() sets the XPath context to the entire document. Next, xmlXPathEvalExpression() applies the selector strategy defined above.

Given a product, the useful information to scrape is:

- The product URL in the <a>.

- The product image in the <img>.

- The product name in the <h2>.

- The product price in the <span>.

To store this data, you need to define a new data structure:

struct PokemonProduct {

std::string url;

std::string image;

std::string name;

std::string price;

};

In C++, a struct is a collection of different data fields grouped under the same name.

Since there are several products on the page, you'll need an array of PokemonProduct:

std::vector<PokemonProduct> pokemon_products;

Don't forget to include the <vector> header:

#include <vector>

Iterate over the list of the product nodes and extract the desired data:

for (int i = 0; i < product_html_elements->nodesetval->nodeNr; ++i) {

// get the current element of the loop

xmlNodePtr product_html_element = product_html_elements->nodesetval->nodeTab[i];

// set the context to restrict XPath selectors

// to the children of the current element

xmlXPathSetContextNode(product_html_element, context);

xmlNodePtr url_html_element = xmlXPathEvalExpression((xmlChar *) ".//a", context)->nodesetval->nodeTab[0];

std::string url = std::string(reinterpret_cast<char *>(xmlGetProp(url_html_element, (xmlChar *) "href")));

xmlNodePtr image_html_element = xmlXPathEvalExpression((xmlChar *) ".//a/img", context)->nodesetval->nodeTab[0];

std::string image = std::string(reinterpret_cast<char *>(xmlGetProp(image_html_element, (xmlChar *) "src")));

xmlNodePtr name_html_element = xmlXPathEvalExpression((xmlChar *) ".//a/h2", context)->nodesetval->nodeTab[0];

std::string name = std::string(reinterpret_cast<char *>(xmlNodeGetContent(name_html_element)));

xmlNodePtr price_html_element = xmlXPathEvalExpression((xmlChar *) ".//a/span", context)->nodesetval->nodeTab[0];

std::string price = std::string(reinterpret_cast<char *>(xmlNodeGetContent(price_html_element)));

PokemonProduct pokemon_product = {url, image, name, price};

pokemon_products.push_back(pokemon_product);

}

Great! pokemon_products will contain all product data of interest!

After the loop, remember to free up the resources allocated by libxml2:

// free up libxml2 resources

xmlXPathFreeObject(product_html_elements);

xmlXPathFreeContext(context);

xmlFreeDoc(doc);

Now that we can extract the data we want, the next step is to export it. We'll cover that next, along with the final code.

Step 3: Export Data to CSV

All that remains is to export the data to a more useful format, such as CSV. You don't need extra libraries. You only have to open a .csv file, convert PokemonProduct structures to CSV records, and append them to the file.

// create the CSV file of output

std::ofstream csv_file("products.csv");

// populate it with the header

csv_file << "url,image,name,price" << std::endl;

// populate the CSV output file

for (int i = 0; i < pokemon_products.size(); ++i) {

// transform a PokemonProduct instance to a

// CSV string record

PokemonProduct p = pokemon_products.at(i);

std::string csv_record = p.url + "," + p.image + "," + p.name + "," + p.price;

csv_file << csv_record << std::endl;

}

// free up the resources for the CSV file

csv_file.close();
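The export above joins fields with plain commas, which works as long as no field contains a comma, a double quote, or a line break. Here is a minimal sketch of the usual CSV quoting convention (as described in RFC 4180) in case a product name ever does. The helpers csv_escape and csv_record are hypothetical names, not part of any library.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Wrap a field in double quotes when it contains a comma, a quote, or a
// line break, and double any embedded quotes. Other fields pass through.
std::string csv_escape(const std::string &field) {
    if (field.find_first_of(",\"\r\n") == std::string::npos) {
        return field;
    }
    std::string escaped = "\"";
    for (char c : field) {
        if (c == '"') {
            escaped += '"';  // a literal quote is written as two quotes
        }
        escaped += c;
    }
    escaped += '"';
    return escaped;
}

// Join already-escaped fields into one CSV record (without the line ending).
std::string csv_record(const std::vector<std::string> &fields) {
    std::string record;
    for (std::size_t i = 0; i < fields.size(); ++i) {
        if (i > 0) {
            record += ',';
        }
        record += csv_escape(fields[i]);
    }
    return record;
}
```

In the loop above, the record could then be built with csv_record({p.url, p.image, p.name, p.price}) instead of manual concatenation.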

Put it all together, and you'll get this final code for your scraper:

#include <iostream>

#include <fstream>

#include <string>

#include <vector>

#include <curl/curl.h>

#include "libxml/HTMLparser.h"

#include "libxml/xpath.h"

std::string get_request(std::string url) {

// initialize curl locally

CURL *curl = curl_easy_init();

std::string result;

if (curl) {

// perform the GET request

curl_easy_setopt(curl, CURLOPT_URL, url.c_str());

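// libcurl receives the callback through a C variadic argument, so the

// lambda must be converted to a plain function pointer explicitly

curl_easy_setopt(curl, CURLOPT_WRITEFUNCTION, static_cast<curl_write_callback>([](char *contents, size_t size, size_t nmemb, void *response) -> size_t {

static_cast<std::string *>(response)->append(contents, size * nmemb);

return size * nmemb;

}));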

curl_easy_setopt(curl, CURLOPT_WRITEDATA, &result);

curl_easy_perform(curl);

// free up the local curl resources

curl_easy_cleanup(curl);

}

return result;

}

// to store the scraped data of interest

// for each product

struct PokemonProduct {

std::string url;

std::string image;

std::string name;

std::string price;

};

int main() {

// initialize curl globally

curl_global_init(CURL_GLOBAL_ALL);

// retrieve the HTML content of the target page

std::string html_document = get_request("https://scrapeme.live/shop/");

// parse the HTML document returned by the server

htmlDocPtr doc = htmlReadMemory(html_document.c_str(), html_document.length(), nullptr, nullptr, HTML_PARSE_NOERROR);

// initialize the XPath context for libxml2

// to the entire document

xmlXPathContextPtr context = xmlXPathNewContext(doc);

// get the product HTML elements

xmlXPathObjectPtr product_html_elements = xmlXPathEvalExpression((xmlChar *) "//li[contains(@class, 'product')]", context);

// to store the scraped products

std::vector<PokemonProduct> pokemon_products;

// iterate the list of product HTML elements

for (int i = 0; i < product_html_elements->nodesetval->nodeNr; ++i) {

// get the current element of the loop

xmlNodePtr product_html_element = product_html_elements->nodesetval->nodeTab[i];

// set the context to restrict XPath selectors

// to the children of the current element

xmlXPathSetContextNode(product_html_element, context);

xmlNodePtr url_html_element = xmlXPathEvalExpression((xmlChar *) ".//a", context)->nodesetval->nodeTab[0];

std::string url = std::string(reinterpret_cast<char *>(xmlGetProp(url_html_element, (xmlChar *) "href")));

xmlNodePtr image_html_element = xmlXPathEvalExpression((xmlChar *) ".//a/img", context)->nodesetval->nodeTab[0];

std::string image = std::string(reinterpret_cast<char *>(xmlGetProp(image_html_element, (xmlChar *) "src")));

xmlNodePtr name_html_element = xmlXPathEvalExpression((xmlChar *) ".//a/h2", context)->nodesetval->nodeTab[0];

std::string name = std::string(reinterpret_cast<char *>(xmlNodeGetContent(name_html_element)));

xmlNodePtr price_html_element = xmlXPathEvalExpression((xmlChar *) ".//a/span", context)->nodesetval->nodeTab[0];

std::string price = std::string(reinterpret_cast<char *>(xmlNodeGetContent(price_html_element)));

PokemonProduct pokemon_product = {url, image, name, price};

pokemon_products.push_back(pokemon_product);

}

// free up libxml2 resources

xmlXPathFreeObject(product_html_elements);

xmlXPathFreeContext(context);

xmlFreeDoc(doc);

// create the CSV file of output

std::ofstream csv_file("products.csv");

// populate it with the header

csv_file << "url,image,name,price" << std::endl;

// populate the CSV output file

for (int i = 0; i < pokemon_products.size(); ++i) {

// transform a PokemonProduct instance to a

// CSV string record

PokemonProduct p = pokemon_products.at(i);

std::string csv_record = p.url + "," + p.image + "," + p.name + "," + p.price;

csv_file << csv_record << std::endl;

}

// free up the resources for the CSV file

csv_file.close();

// free up the global curl resources

curl_global_cleanup();

return 0;

}

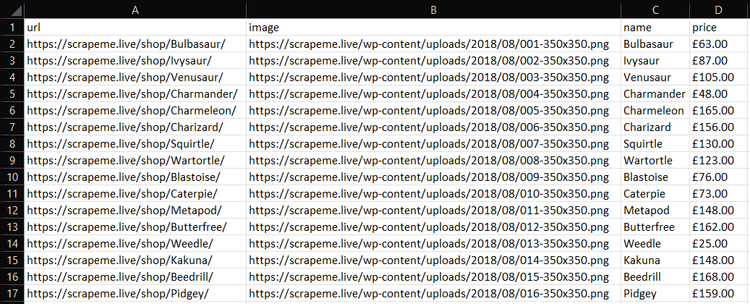

Run the web scraping C++ script to generate a products.csv file. Open it, and you'll see the output below:

Well done!

Web Crawling with C++

The target website has several product pages, right? To scrape it entirely, you have to discover and visit all pages. That's what web crawling is about.

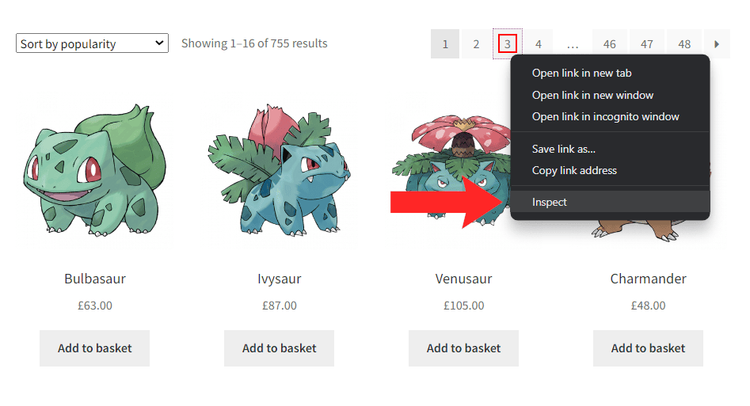

First, you need to find a way to find all pagination pages. Start by inspecting any pagination number HTML element with the DevTools:

You can select them with this:

//li/a[contains(@class, 'page-numbers')]

To implement web crawling, these are the steps:

- Visit a page.

- Get the pagination link elements.

- Add the newly discovered URLs to a queue.

- Repeat the cycle with a new page.

To achieve that, you need some supporting data structures to avoid visiting the same page twice (details below):

#include <iostream>

#include <algorithm>

#include <string>

#include <vector>

#include <curl/curl.h>

#include "libxml/HTMLparser.h"

#include "libxml/xpath.h"

// std::string get_request(std::string url) { ... }

// struct PokemonProduct { ... }

int main() {

// initialize curl globally

curl_global_init(CURL_GLOBAL_ALL);

std::vector<PokemonProduct> pokemon_products;

// web page to start scraping from

std::string first_page = "https://scrapeme.live/shop/page/1/";

// initialize the list of pages to scrape

std::vector<std::string> pages_to_scrape = {first_page};

// initialize the list of pages discovered

std::vector<std::string> pages_discovered = {first_page};

// current iteration

int i = 1;

// max number of iterations allowed

int max_iterations = 5;

// loop while there is still a page to scrape and

// the limit hasn't been hit

while (!pages_to_scrape.empty() && i <= max_iterations) {

// get the first page to scrape

// and remove it from the list

std::string page_to_scrape = pages_to_scrape.at(0);

pages_to_scrape.erase(pages_to_scrape.begin());

std::string html_document = get_request(page_to_scrape);

htmlDocPtr doc = htmlReadMemory(html_document.c_str(), html_document.length(), nullptr, nullptr, HTML_PARSE_NOERROR);

// scraping logic...

// re-initialize the XPath context to

// restore it to the entire document

context = xmlXPathNewContext(doc);

// extract the list of pagination links

xmlXPathObjectPtr pagination_html_elements = xmlXPathEvalExpression((xmlChar *)"//a[@class='page-numbers']", context);

// iterate over it to discover new links to scrape

// use a separate index (j) so the outer iteration counter i isn't shadowed

for (int j = 0; j < pagination_html_elements->nodesetval->nodeNr; ++j) {

xmlNodePtr pagination_html_element = pagination_html_elements->nodesetval->nodeTab[j];

// extract the pagination URL

xmlXPathSetContextNode(pagination_html_element, context);

std::string pagination_link = std::string(reinterpret_cast<char *>(xmlGetProp(pagination_html_element, (xmlChar *) "href")));

// if the page discovered is new

if (std::find(pages_discovered.begin(), pages_discovered.end(), pagination_link) == pages_discovered.end())

{

// remember it as discovered

pages_discovered.push_back(pagination_link);

// queue it unless it is already waiting to be scraped

if (std::find(pages_to_scrape.begin(), pages_to_scrape.end(), pagination_link) == pages_to_scrape.end())

{

pages_to_scrape.push_back(pagination_link);

}

}

}

// free up libxml2 resources

xmlXPathFreeObject(pagination_html_elements);

xmlXPathFreeContext(context);

xmlFreeDoc(doc);

// increment the iteration counter

i++;

}

// export logic...

// free up the global curl resources

curl_global_cleanup();

return 0;

}

This C++ data scraping script crawls a web page, scrapes it, and gets the new pagination URLs. If these links are unknown, it adds them to the crawling queue. It repeats this logic until the queue is empty or reaches the max_iterations limit.

At the end of the while cycle, pokemon_products will contain all products discovered in the pages visited. In other words, you just crawled ScrapeMe! Congrats!
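The queue-plus-discovered-set logic is easier to see without the HTTP and parsing code around it. Here is a minimal sketch under stated assumptions: LinkMap is a hypothetical in-memory stand-in for "download a page and extract its pagination links", and crawl is an illustrative name. It swaps the vectors and std::find() calls for std::queue and std::unordered_set, which do the same job with cheaper lookups.

```cpp
#include <cstddef>
#include <map>
#include <queue>
#include <string>
#include <unordered_set>
#include <vector>

// Hypothetical stand-in for the network: page URL -> pagination links found on it.
using LinkMap = std::map<std::string, std::vector<std::string>>;

// Visit pages breadth-first, never queueing the same URL twice, and stop
// once max_pages pages have been visited. Returns the pages in visit order.
std::vector<std::string> crawl(const std::string &first_page, const LinkMap &site, std::size_t max_pages) {
    std::queue<std::string> pages_to_scrape;
    std::unordered_set<std::string> pages_discovered;
    std::vector<std::string> visited;

    pages_to_scrape.push(first_page);
    pages_discovered.insert(first_page);

    while (!pages_to_scrape.empty() && visited.size() < max_pages) {
        std::string page = pages_to_scrape.front();
        pages_to_scrape.pop();
        visited.push_back(page);

        auto entry = site.find(page);
        if (entry == site.end()) {
            continue;  // no known links on this page
        }
        for (const std::string &link : entry->second) {
            // insert() reports whether the link was new, so one call both
            // records the discovery and decides whether to queue it
            if (pages_discovered.insert(link).second) {
                pages_to_scrape.push(link);
            }
        }
    }
    return visited;
}
```

In the real crawler, the lookup in site would be replaced by the get_request() call and the pagination XPath query shown earlier.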

Headless Browser Scraping in C++

Some sites rely on JavaScript for rendering or data retrieval. In that case, a plain HTML parser isn't enough: you need a tool that can render web pages in a browser. That tool is a headless browser.

Selenium is one of the most popular tools for automating headless browsers, and webdriverxx is a C++ client for it.

To install it, launch the following commands in the root folder of your project:

git clone https://github.com/durdyev/webdriverxx

cd webdriverxx

mkdir build

cd build && cmake ..

sudo make && sudo make install

Launch them in the Windows Subsystem for Linux (WSL) if you're a Windows user.

Then, download the Selenium Grid server, install it, set it up, and run it. That's the only prerequisite required by webdriverxx.

Control a Chrome instance to extract the data from ScrapeMe with the following code. It's a translation of the web scraping C++ logic seen earlier. Note that the FindElements() and FindElement() methods allow you to select HTML elements with webdriverxx.

#include <iostream>

#include <fstream>

#include <string>

#include <vector>

#include <webdriverxx/webdriver.h>

struct PokemonProduct {

std::string url;

std::string image;

std::string name;

std::string price;

};

int main() {

// start a Chrome instance through the Selenium server

webdriverxx::WebDriver driver = webdriverxx::Start(webdriverxx::Chrome());

// visit the target page in the controlled browser

driver.Navigate("https://scrapeme.live/shop/");

// perform an XPath query

webdriverxx::WebElements product_html_elements = driver.FindElements(webdriverxx::By::XPath("//li[contains(@class, 'product')]"));

// To store the scraped products

std::vector<PokemonProduct> pokemon_products;

// Iterate over the list of product HTML elements

for (auto& product_html_element : product_html_elements) {

std::string url = product_html_element.FindElement(webdriverxx::By::XPath(".//a")).GetAttribute("href");

std::string image = product_html_element.FindElement(webdriverxx::By::XPath(".//a/img")).GetAttribute("src");

std::string name = product_html_element.FindElement(webdriverxx::By::XPath(".//a/h2")).GetText();

std::string price = product_html_element.FindElement(webdriverxx::By::XPath(".//a/span")).GetText();

PokemonProduct pokemon_product = {url, image, name, price};

pokemon_products.push_back(pokemon_product);

}

// stop the Chrome driver

driver.Stop();

// create the CSV file of output

std::ofstream csv_file("products.csv");

// populate it with the header

csv_file << "url,image,name,price" << std::endl;

// populate the CSV output file

for (int i = 0; i < pokemon_products.size(); ++i) {

// transform a PokemonProduct instance to a

// CSV string record

PokemonProduct p = pokemon_products.at(i);

std::string csv_record = p.url + "," + p.image + "," + p.name + "," + p.price;

csv_file << csv_record << std::endl;

}

// free up the resources for the CSV file

csv_file.close();

return 0;

}

Keep in mind that a headless browser is a powerful tool that can perform any human interaction on a page. It's also capable of operations that HTTP clients and parsers can only dream about. For example, you can use webdriverxx to take a screenshot of the current viewport as below:

webdriverxx::WebDriver driver = webdriverxx::Start(webdriverxx::Chrome());

// visit the target page in the controlled browser

driver.Navigate("https://scrapeme.live/shop/");

// take a screenshot in Base64

webdriverxx::string screenshot_data = driver.GetScreenshot();

// initialize the screenshot file

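// note: the data is still Base64-encoded at this point, so decode it

// before writing if you need a viewable PNG

std::ofstream screenshot_file("screenshot.png");

screenshot_file << screenshot_data;

screenshot_file.close();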

That produces this:

Et voilà! You now know how to do web scraping using C++ on dynamic-content sites.
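One caveat about the screenshot snippet: its own comment says the data comes back in Base64, so the text has to be decoded before the file is a valid PNG. Here is a minimal sketch of a decoder for the standard Base64 alphabet (RFC 4648). The name base64_decode is hypothetical, and the sketch assumes the screenshot data really is plain Base64 text, as that comment states.

```cpp
#include <cstddef>
#include <string>

// Decode standard Base64 into raw bytes. Decoding stops at the first '='
// padding character. Characters outside the alphabet (for example line
// breaks) are skipped.
std::string base64_decode(const std::string &input) {
    static const std::string alphabet =
        "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
    std::string output;
    unsigned int buffer = 0;  // accumulates 6 bits per input character
    int bits = 0;             // number of not-yet-emitted bits in buffer
    for (char c : input) {
        if (c == '=') {
            break;
        }
        std::size_t value = alphabet.find(c);
        if (value == std::string::npos) {
            continue;
        }
        buffer = (buffer << 6) | static_cast<unsigned int>(value);
        bits += 6;
        if (bits >= 8) {
            bits -= 8;
            output.push_back(static_cast<char>((buffer >> bits) & 0xFF));
        }
    }
    return output;
}
```

The decoded bytes can then be written to a std::ofstream opened with std::ios::binary, so that no newline translation alters the image data.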

Challenges of Web Scraping in C++

Web scraping with C++ is definitely efficient but not flawless. Many websites rely on anti-bot solutions to protect their data. Your requests may get blocked because of those technologies.

That's a huge problem and the biggest challenge when getting data from the internet with a script. There are, of course, some solutions. Take a look at our in-depth guide on how to do web scraping without getting blocked.

Most of these techniques are workarounds and tricks that might only work for a while. A better alternative to avoid blocks is ZenRows, a full-featured web scraping API that provides premium proxies and headless browser capabilities. It helps you bypass anti-bots like Cloudflare while using cURL in C++.

Conclusion

This step-by-step tutorial explained how to perform web scraping with C++. You saw the basics and dug into more complex topics. You have become a C++ data extraction ninja!

Now, you know:

- Why C++ is great for efficient scraping.

- The basics of scraping in C++.

- How to web crawl in C++.

- How to use a headless browser in C++ to extract data from JavaScript-rendered sites.

Unfortunately, anti-scraping technologies can stop you anytime, but you can bypass them all with ZenRows, a scraping tool with the best built-in anti-bot bypass features on the market. All you need to get the desired data is a single API call.
