Web scraping in Golang is a popular approach to automatically retrieve data from the web. Follow this step-by-step tutorial to learn how to easily scrape data in Go and get to know the popular libraries Colly and chromedp.

Let's start!

Prerequisites

Set Up the Environment

Here are the prerequisites you have to meet for this tutorial:

- Go 1.19+: Any version of Go greater than or equal to 1.19 will be okay. You'll see version 1.19 in action here because it's the latest at the time of writing.

- A Go IDE: Visual Studio Code with the Go extension is the recommended one.

Before proceeding, make sure both tools are installed. Follow the links above to download them, then complete each installation wizard.

Set Up a Go Project

After installing Go, it's time to initialize your Golang web scraper project.

Create a web-scraper-go folder and enter it in the terminal:

mkdir web-scraper-go

cd web-scraper-go

Then, launch the command below:

go mod init web-scraper

The init command will initialize a web-scraper Go module inside the web-scraper-go project folder.

web-scraper-go will now contain a go.mod file that looks as follows:

module web-scraper

go 1.19

Note that the last line changes depending on your language version.

You're now ready to set up your web scraping Go script. Create a scraper.go file and initialize it as below:

package main

import (

"fmt"

)

func main() {

// scraping logic...

fmt.Println("Hello, World!")

}

The first line declares the main package, which Go requires for an executable program. Then, there are some imports, followed by the main() function. This represents the entry point of any Go program and will contain the Golang web scraping logic.

Run the script to verify that everything works as expected:

go run scraper.go

That will print:

Hello, World!

Now that you've set up a basic Go project, let's delve deeper into how to build a data scraper using Golang.

How to Scrape a Website in Go

To learn how to scrape a website in Go, use ScrapeMe (https://scrapeme.live/shop/) as the target website.

It's a Pokémon store. Our mission will be to extract all product data from it.

Step 1: Getting Started with Colly

Colly is an open-source library that provides a clean interface based on callbacks to write a scraper, crawler or spider. It comes with an advanced Go web scraping API that allows you to download an HTML page, automatically parse its content, select HTML elements from the DOM and retrieve data from them.

Install Colly and its dependencies:

go get github.com/gocolly/colly

This command will create a go.sum file in your project root and update the go.mod file with all the required dependencies accordingly.

Wait for the installation process to end. Then, import Colly in your scraper.go file as below:

package main

import (

"fmt"

// importing Colly

"github.com/gocolly/colly"

)

func main() {

// scraping logic...

fmt.Println("Hello, World!")

}

Before starting to scrape with this library, you need to understand a few key concepts.

First, Colly's main entity is the Collector. A Collector allows you to perform HTTP requests. Also, it gives you access to the web scraping callbacks offered by the Colly interface.

Initialize a Colly Collector with the NewCollector function:

c := colly.NewCollector()

Use Colly to visit a web page with Visit():

c.Visit("https://en.wikipedia.org/wiki/Main_Page")

Attach different types of callback functions to a Collector as below:

c.OnRequest(func(r *colly.Request) {

fmt.Println("Visiting: ", r.URL)

})

c.OnError(func(_ *colly.Response, err error) {

log.Println("Something went wrong: ", err)

})

c.OnResponse(func(r *colly.Response) {

fmt.Println("Page visited: ", r.Request.URL)

})

c.OnHTML("a", func(e *colly.HTMLElement) {

// printing all URLs associated with the a links in the page

fmt.Println(e.Attr("href"))

})

c.OnScraped(func(r *colly.Response) {

fmt.Println(r.Request.URL, " scraped!")

})

These functions are executed in the following order:

- OnRequest(): Called before performing an HTTP request with Visit().

- OnError(): Called if an error occurred during the HTTP request.

- OnResponse(): Called after receiving a response from the server.

- OnHTML(): Called right after OnResponse() if the received content is HTML.

- OnScraped(): Called after all OnHTML() callback executions.

Each of these functions accepts a callback as a parameter. The specific callback is executed when the event associated with the Colly function is raised. So, those five Colly functions help you build your Golang data scraper.
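To make the callback idea concrete, here is a toy sketch that uses only the standard library. The miniCollector type and the recordVisit helper are hypothetical names invented for this illustration. They are not Colly's implementation and they perform no HTTP request. The sketch only shows that callbacks are stored when you register them and run when a visit happens. In Colly's default synchronous mode, Visit() returns only after the callbacks have run, so a callback registered afterwards does not fire for that visit.

```go
package main

import "fmt"

// miniCollector is a toy stand-in, not Colly's implementation.
// It stores callbacks and fires them when Visit is called.
type miniCollector struct {
	onRequest []func(url string)
	onScraped []func(url string)
}

// OnRequest registers a callback to run at the start of a visit.
func (c *miniCollector) OnRequest(f func(url string)) {
	c.onRequest = append(c.onRequest, f)
}

// OnScraped registers a callback to run at the end of a visit.
func (c *miniCollector) OnScraped(f func(url string)) {
	c.onScraped = append(c.onScraped, f)
}

// Visit fires the registered callbacks in order. No HTTP request is made.
func (c *miniCollector) Visit(url string) {
	for _, f := range c.onRequest {
		f(url)
	}
	for _, f := range c.onScraped {
		f(url)
	}
}

// recordVisit registers callbacks, runs one visit, and returns the events seen.
func recordVisit(url string) []string {
	var events []string
	c := &miniCollector{}
	c.OnRequest(func(u string) { events = append(events, "request "+u) })
	c.OnScraped(func(u string) { events = append(events, "scraped "+u) })
	c.Visit(url)
	// Registered after Visit has returned, so it never fires for that visit.
	c.OnScraped(func(u string) { events = append(events, "late "+u) })
	return events
}

func main() {
	for _, e := range recordVisit("https://example.com/") {
		fmt.Println(e)
	}
}
```

The practical takeaway is to attach every callback to the Collector before calling Visit().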

Step 2: Visit the Target HTML Page

Perform an HTTP GET request to download the target HTML page in Colly with:

// downloading the target HTML page

c.Visit("https://scrapeme.live/shop/")

The Visit() function starts the life cycle of Colly by firing the OnRequest event. The other events will follow.

Step 3: Find the HTML Elements of Interest

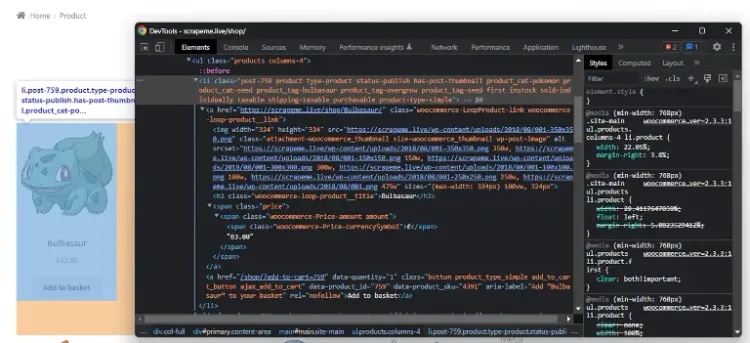

This data scraping Go tutorial is about retrieving all product data, so let's grab the HTML product elements. Right-click on a product element on the page, then choose the "Inspect" option to access the DevTools section:

Here, note that a target li HTML element has the .product class and stores:

- An a element with the product URL.

- An img element with the product image.

- An h2 element with the product name.

- A .price element with the product price.

Select all li.product HTML product elements in the page using Colly:

c.OnHTML("li.product", func(e *colly.HTMLElement) {

// ...

})

The OnHTML() function can be associated with a CSS selector and a callback function. Colly will execute the callback when it finds HTML elements matching the selector. Note that the e parameter of the callback function represents a single li.product HTMLElement.

Let's now see how to extract data from an HTML element with the functions exposed by Colly.

Step 4: Scrape the Product Data from the Selected HTML Elements

Before getting started, you need a data structure in which to store the scraped data. Define a PokemonProduct struct as follows:

// defining a data structure to store the scraped data

type PokemonProduct struct {

url, image, name, price string

}

If you're not familiar with this, a Go struct is a collection of typed fields that you can instantiate to hold data.

Then, initialize a slice of PokemonProduct that will contain the scraped data:

// initializing the slice of structs that will contain the scraped data

var pokemonProducts []PokemonProduct

In Go, slices provide an efficient way to work with sequences of typed data. You can think of them as dynamically sized lists.
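If structs and slices are new to you, the short sketch below shows how they work together. The addProduct helper and the field values are placeholders made up for this illustration, not scraped data.

```go
package main

import "fmt"

// PokemonProduct mirrors the struct defined above.
type PokemonProduct struct {
	url, image, name, price string
}

// addProduct is a hypothetical helper that appends p to products
// and returns the grown slice.
func addProduct(products []PokemonProduct, p PokemonProduct) []PokemonProduct {
	return append(products, p)
}

func main() {
	// A nil slice is ready to use with append.
	var pokemonProducts []PokemonProduct

	// Placeholder values for illustration only.
	pokemonProducts = addProduct(pokemonProducts, PokemonProduct{
		url:   "https://example.com/product/1",
		image: "https://example.com/1.png",
		name:  "Sample",
		price: "1.00",
	})

	fmt.Println(len(pokemonProducts), pokemonProducts[0].name)
}
```

Note that append() returns the updated slice, so you always need to assign its result back to a variable.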

Now, implement the scraping logic:

// iterating over the list of HTML product elements

c.OnHTML("li.product", func(e *colly.HTMLElement) {

// initializing a new PokemonProduct instance

pokemonProduct := PokemonProduct{}

// scraping the data of interest

pokemonProduct.url = e.ChildAttr("a", "href")

pokemonProduct.image = e.ChildAttr("img", "src")

pokemonProduct.name = e.ChildText("h2")

pokemonProduct.price = e.ChildText(".price")

// adding the product instance with scraped data to the list of products

pokemonProducts = append(pokemonProducts, pokemonProduct)

})

The HTMLElement type exposes the ChildAttr() and ChildText() methods. These allow you to extract an attribute value or the text, respectively, from a child element identified by a CSS selector. With two simple methods, you implemented the entire data extraction logic.

Finally, you can append a new element to the slice of scraped elements with append(). Learn more about how append() works in Go.

Fantastic! You just learned how to scrape a web page in Go using Colly.

Export the retrieved data to CSV as a next step.

Step 5: Convert Scraped Data to CSV

Export your scraped data to a CSV file in Go with the logic below:

// opening the CSV file

file, err := os.Create("products.csv")

if err != nil {

log.Fatalln("Failed to create output CSV file", err)

}

defer file.Close()

// initializing a file writer

writer := csv.NewWriter(file)

// defining the CSV headers

headers := []string{

"url",

"image",

"name",

"price",

}

// writing the column headers

writer.Write(headers)

// adding each Pokemon product to the CSV output file

for _, pokemonProduct := range pokemonProducts {

// converting a PokemonProduct to an array of strings

record := []string{

pokemonProduct.url,

pokemonProduct.image,

pokemonProduct.name,

pokemonProduct.price,

}

// writing a new CSV record

writer.Write(record)

}

defer writer.Flush()

This snippet creates a products.csv file and initializes it with the header columns. Then, it iterates over the slice of scraped PokemonProducts, converts each of them to a new CSV record, and appends it to the CSV file.

To make this snippet work, make sure you have the following imports:

import (

"encoding/csv"

"log"

"os"

// ...

)

So, this is what the scraping script looks like now:

package main

import (

"encoding/csv"

"github.com/gocolly/colly"

"log"

"os"

)

// initializing a data structure to keep the scraped data

type PokemonProduct struct {

url, image, name, price string

}

func main() {

// initializing the slice of structs to store the data to scrape

var pokemonProducts []PokemonProduct

// creating a new Colly instance

c := colly.NewCollector()

// scraping logic (callbacks must be registered before calling Visit)

c.OnHTML("li.product", func(e *colly.HTMLElement) {

pokemonProduct := PokemonProduct{}

pokemonProduct.url = e.ChildAttr("a", "href")

pokemonProduct.image = e.ChildAttr("img", "src")

pokemonProduct.name = e.ChildText("h2")

pokemonProduct.price = e.ChildText(".price")

pokemonProducts = append(pokemonProducts, pokemonProduct)

})

// visiting the target page

c.Visit("https://scrapeme.live/shop/")

// opening the CSV file

file, err := os.Create("products.csv")

if err != nil {

log.Fatalln("Failed to create output CSV file", err)

}

defer file.Close()

// initializing a file writer

writer := csv.NewWriter(file)

// writing the CSV headers

headers := []string{

"url",

"image",

"name",

"price",

}

writer.Write(headers)

// writing each Pokemon product as a CSV row

for _, pokemonProduct := range pokemonProducts {

// converting a PokemonProduct to an array of strings

record := []string{

pokemonProduct.url,

pokemonProduct.image,

pokemonProduct.name,

pokemonProduct.price,

}

// adding a CSV record to the output file

writer.Write(record)

}

defer writer.Flush()

}

Run your Go data scraper with:

go run scraper.go

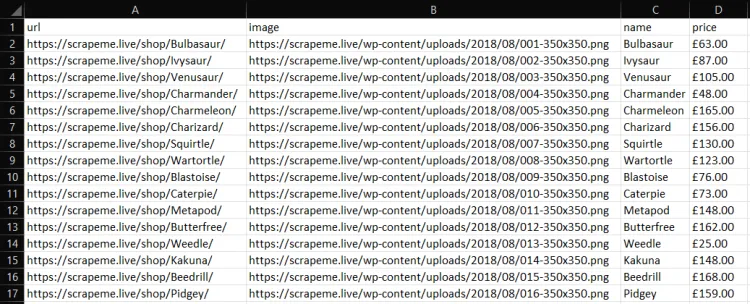

Then, you'll find a products.csv file in the root directory of your project. Open it, and it should contain this:

That's it!

Advanced Techniques in Web Scraping with Golang

Now that you know the basics of web scraping with Go, it's time to dig into more advanced approaches.

Web Crawling with Go

Note that the list of Pokémon products to scrape is paginated. As a result, the target website consists of many web pages. If you want to extract all the product data, you need to visit the whole website.

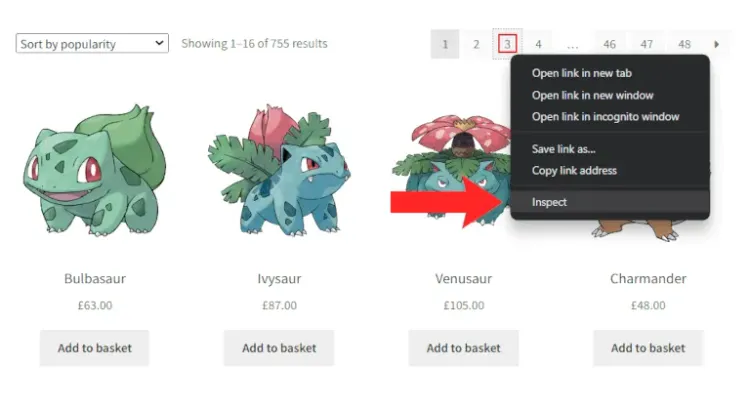

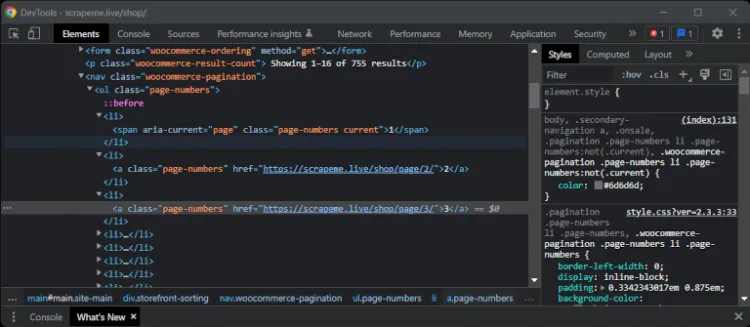

To perform web crawling in Go and scrape the entire website, you first need all the pagination links. So, right-click on any pagination number HTML element and click the "Inspect" option.

Your browser will give access to the DevTools section below with the selected HTML element highlighted:

If you take a look at all the pagination HTML elements, you'll see they are all identified by the .page-numbers CSS selector. Use this info to implement crawling in Go as below:

// initializing the list of pages to scrape with an empty slice

var pagesToScrape []string

// the first pagination URL to scrape

pageToScrape := "https://scrapeme.live/shop/page/1/"

// initializing the list of pages discovered with a pageToScrape

pagesDiscovered := []string{ pageToScrape }

// current iteration

i := 1

// max pages to scrape

limit := 5

// initializing a Colly instance

c := colly.NewCollector()

// iterating over the list of pagination links to implement the crawling logic

c.OnHTML("a.page-numbers", func(e *colly.HTMLElement) {

// discovering a new page

newPaginationLink := e.Attr("href")

// if the page discovered is new

if !contains(pagesToScrape, newPaginationLink) {

// if the page discovered should be scraped

if !contains(pagesDiscovered, newPaginationLink) {

pagesToScrape = append(pagesToScrape, newPaginationLink)

}

pagesDiscovered = append(pagesDiscovered, newPaginationLink)

}

})

c.OnHTML("li.product", func(e *colly.HTMLElement) {

// scraping logic...

})

c.OnScraped(func(response *colly.Response) {

// as long as there is still a page to scrape

if len(pagesToScrape) != 0 && i < limit {

// getting the current page to scrape and removing it from the list

pageToScrape = pagesToScrape[0]

pagesToScrape = pagesToScrape[1:]

// incrementing the iteration counter

i++

// visiting a new page

c.Visit(pageToScrape)

}

})

// visiting the first page

c.Visit(pageToScrape)

// convert the data to CSV...

Since you may want to stop your Go data scraper programmatically, you'll need a limit variable. This represents the maximum number of pages that the Golang web spider can visit.

In the last line, the snippet crawls the first pagination page. Then, the OnHTML event is fired. In the OnHTML() callback, the Go web crawler searches for new pagination links. If it finds a new link, it adds it to the crawling queue. Then, it repeats this logic with a new link. Finally, it stops when limit is hit or there are no new pages to crawl.

The crawling logic above would not be possible without the pagesDiscovered and pagesToScrape slice variables. These help you keep track of which pages the Go crawler has already discovered and which ones it still has to visit.

Note that the contains() function is a custom Go utility function defined as below:

func contains(s []string, str string) bool {

for _, v := range s {

if v == str {

return true

}

}

return false

}

Its purpose is simply to check whether a string is present in a slice.
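Scanning a slice is fine for a few dozen pagination links, but each lookup gets slower as the slice grows. As an alternative sketch, you could track discovered pages in a map and keep the pending pages in a slice. The crawlQueue type and its methods are hypothetical names introduced for this example. They are not part of Colly.

```go
package main

import "fmt"

// crawlQueue is a hypothetical helper. It tracks discovered URLs in a map
// (constant-time lookup) and keeps pending URLs in first-in, first-out order.
type crawlQueue struct {
	seen    map[string]bool
	pending []string
}

// newCrawlQueue marks the start page as discovered so it is never re-queued.
func newCrawlQueue(start string) *crawlQueue {
	return &crawlQueue{seen: map[string]bool{start: true}}
}

// add enqueues url only if it has not been discovered before.
// It reports whether the URL was actually added.
func (q *crawlQueue) add(url string) bool {
	if q.seen[url] {
		return false
	}
	q.seen[url] = true
	q.pending = append(q.pending, url)
	return true
}

// next pops the oldest pending URL. ok is false when the queue is empty.
func (q *crawlQueue) next() (url string, ok bool) {
	if len(q.pending) == 0 {
		return "", false
	}
	url, q.pending = q.pending[0], q.pending[1:]
	return url, true
}

func main() {
	q := newCrawlQueue("https://scrapeme.live/shop/page/1/")
	q.add("https://scrapeme.live/shop/page/2/")
	q.add("https://scrapeme.live/shop/page/2/") // duplicate, ignored
	q.add("https://scrapeme.live/shop/page/1/") // start page, ignored

	for {
		u, ok := q.next()
		if !ok {
			break
		}
		fmt.Println(u)
	}
}
```

In the crawler above, add() would be called in the pagination OnHTML() callback and next() in the OnScraped() callback.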

Well done! Now you can crawl the ScrapeMe paginated website with Golang!

Avoid Being Blocked

Many websites implement anti-scraping and anti-bot techniques. The most basic approach involves banning HTTP requests based on their headers. Specifically, they generally block HTTP requests that come with an invalid User-Agent header.

Set a global User-Agent header for all the requests performed by Colly with the UserAgent Collector field as follows:

// setting a valid User-Agent header

c.UserAgent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

Don't forget that this is just one of the many anti-scraping techniques you may have to deal with.
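If you want to see what this header looks like on a plain HTTP request, the sketch below builds one with Go's standard net/http package, independently of Colly. The newBrowserLikeRequest helper is a hypothetical name used only here. It builds the request without sending it.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
)

// The same User-Agent string used in the Colly snippet above.
const browserUA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

// newBrowserLikeRequest is a hypothetical helper that builds a GET request
// carrying the given User-Agent header. It does not send the request.
func newBrowserLikeRequest(url, userAgent string) (*http.Request, error) {
	req, err := http.NewRequest(http.MethodGet, url, nil)
	if err != nil {
		return nil, err
	}
	req.Header.Set("User-Agent", userAgent)
	return req, nil
}

func main() {
	req, err := newBrowserLikeRequest("https://scrapeme.live/shop/", browserUA)
	if err != nil {
		log.Fatalln("Failed to build request:", err)
	}
	// Printing the header that the server would receive.
	fmt.Println(req.UserAgent())
}
```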

Use ZenRows to easily get around these challenges while web scraping.

Parallel Web Scraping in Golang

Data scraping in Go can take a lot of time. The reason could be a slow internet connection, an overloaded web server or simply lots of pages to scrape. That's why Colly supports parallel scraping! If you don't know what that means, parallel web scraping in Go involves extracting data from multiple pages simultaneously.

In detail, this is the list of all pagination pages you want your crawler to visit:

pagesToScrape := []string{

"https://scrapeme.live/shop/page/1/",

"https://scrapeme.live/shop/page/2/",

// ...

"https://scrapeme.live/shop/page/47/",

"https://scrapeme.live/shop/page/48/",

}

With parallel scraping, your Go data spider will be able to visit and extract data from several web pages at the same time. That will make your scraping process way faster!

Use Colly to implement a parallel web spider:

c := colly.NewCollector(

// turning on the asynchronous request mode in Colly

colly.Async(true),

)

c.Limit(&colly.LimitRule{

// the rule needs a domain pattern to match; "*" applies it to every domain

DomainGlob: "*",

// limiting the parallel requests to 4 at a time

Parallelism: 4,

})

c.OnHTML("li.product", func(e *colly.HTMLElement) {

// scraping logic...

})

// registering all pages to scrape

for _, pageToScrape := range pagesToScrape {

c.Visit(pageToScrape)

}

// waiting for Colly to visit all pages

c.Wait()

// export logic...

Colly comes with an async mode. When enabled, this allows Colly to visit several pages at the same time. Specifically, Colly will visit as many pages simultaneously as the value of the Parallelism parameter.

By enabling the parallel mode in your Golang web scraping script, you'll achieve better performance. At the same time, you may need to change some of your code logic. That's because most data structures in Go are not thread-safe, so your script may come across a race condition.

Great! You just learned the basics of how to do parallel web scraping!

Scraping Dynamic-Content Websites with a Headless Browser in Go

A static-content website comes with all its content pre-loaded in the HTML pages returned by the server. This means that you can scrape data from a static-content website simply by parsing its HTML content.

On the other hand, other websites rely on JavaScript for page rendering or use it to perform API calls and retrieve data asynchronously. These websites are called dynamic-content websites and require a browser to be rendered.

You'll need a tool that can run JavaScript, such as a headless browser. A headless browser library provides browser capabilities and allows you to load a web page in a browser with no GUI. You can then instruct the headless browser to mimic user interactions.

The most popular headless browser library for Golang is chromedp. Install it with:

go get -u github.com/chromedp/chromedp

Then, use chromedp to scrape data from ScrapeMe in a browser as follows:

package main

import (

"context"

"github.com/chromedp/cdproto/cdp"

"github.com/chromedp/chromedp"

"log"

)

type PokemonProduct struct {

url, image, name, price string

}

func main() {

var pokemonProducts []PokemonProduct

// initializing a chrome instance

ctx, cancel := chromedp.NewContext(

context.Background(),

chromedp.WithLogf(log.Printf),

)

defer cancel()

// navigate to the target web page and select the HTML elements of interest

var nodes []*cdp.Node

err := chromedp.Run(ctx,

chromedp.Navigate("https://scrapeme.live/shop"),

chromedp.Nodes(".product", &nodes, chromedp.ByQueryAll),

)

if err != nil {

log.Fatalln("Failed to load the target page:", err)

}

// scraping data from each node

var url, image, name, price string

for _, node := range nodes {

chromedp.Run(ctx,

chromedp.AttributeValue("a", "href", &url, nil, chromedp.ByQuery, chromedp.FromNode(node)),

chromedp.AttributeValue("img", "src", &image, nil, chromedp.ByQuery, chromedp.FromNode(node)),

chromedp.Text("h2", &name, chromedp.ByQuery, chromedp.FromNode(node)),

chromedp.Text(".price", &price, chromedp.ByQuery, chromedp.FromNode(node)),

)

pokemonProduct := PokemonProduct{}

pokemonProduct.url = url

pokemonProduct.image = image

pokemonProduct.name = name

pokemonProduct.price = price

pokemonProducts = append(pokemonProducts, pokemonProduct)

}

// export logic

}

The chromedp Nodes() function enables you to instruct the headless browser to perform a query. This way, you can select the product HTML elements and store them in the nodes variable. Then, iterate over them and apply the AttributeValue() and Text() methods to get the data of interest.

Performing web scraping in Go with Colly or chromedp is not that different. What changes between the two approaches is that chromedp runs the scraping instructions in a browser.

With chromedp, you can crawl dynamic-content websites and interact with a web page in a browser as a real user would. This also means that your script is less likely to be detected as a bot, although a headless browser alone doesn't guarantee that you won't get blocked.

In contrast, Colly is limited to static-content websites and doesn't offer browser capabilities.

Putting It All Together: Final Code

This is what the complete Golang scraper based on Colly with crawling and basic anti-block logic looks like:

package main

import (

"encoding/csv"

"github.com/gocolly/colly"

"log"

"os"

)

// defining a data structure to store the scraped data

type PokemonProduct struct {

url, image, name, price string

}

// it verifies if a string is present in a slice

func contains(s []string, str string) bool {

for _, v := range s {

if v == str {

return true

}

}

return false

}

func main() {

// initializing the slice of structs that will contain the scraped data

var pokemonProducts []PokemonProduct

// initializing the list of pages to scrape with an empty slice

var pagesToScrape []string

// the first pagination URL to scrape

pageToScrape := "https://scrapeme.live/shop/page/1/"

// initializing the list of pages discovered with a pageToScrape

pagesDiscovered := []string{ pageToScrape }

// current iteration

i := 1

// max pages to scrape

limit := 5

// initializing a Colly instance

c := colly.NewCollector()

// setting a valid User-Agent header

c.UserAgent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

// iterating over the list of pagination links to implement the crawling logic

c.OnHTML("a.page-numbers", func(e *colly.HTMLElement) {

// discovering a new page

newPaginationLink := e.Attr("href")

// if the page discovered is new

if !contains(pagesToScrape, newPaginationLink) {

// if the page discovered should be scraped

if !contains(pagesDiscovered, newPaginationLink) {

pagesToScrape = append(pagesToScrape, newPaginationLink)

}

pagesDiscovered = append(pagesDiscovered, newPaginationLink)

}

})

// scraping the product data

c.OnHTML("li.product", func(e *colly.HTMLElement) {

pokemonProduct := PokemonProduct{}

pokemonProduct.url = e.ChildAttr("a", "href")

pokemonProduct.image = e.ChildAttr("img", "src")

pokemonProduct.name = e.ChildText("h2")

pokemonProduct.price = e.ChildText(".price")

pokemonProducts = append(pokemonProducts, pokemonProduct)

})

c.OnScraped(func(response *colly.Response) {

// as long as there is still a page to scrape

if len(pagesToScrape) != 0 && i < limit {

// getting the current page to scrape and removing it from the list

pageToScrape = pagesToScrape[0]

pagesToScrape = pagesToScrape[1:]

// incrementing the iteration counter

i++

// visiting a new page

c.Visit(pageToScrape)

}

})

// visiting the first page

c.Visit(pageToScrape)

// opening the CSV file

file, err := os.Create("products.csv")

if err != nil {

log.Fatalln("Failed to create output CSV file", err)

}

defer file.Close()

// initializing a file writer

writer := csv.NewWriter(file)

// defining the CSV headers

headers := []string{

"url",

"image",

"name",

"price",

}

// writing the column headers

writer.Write(headers)

// adding each Pokemon product to the CSV output file

for _, pokemonProduct := range pokemonProducts {

// converting a PokemonProduct to an array of strings

record := []string{

pokemonProduct.url,

pokemonProduct.image,

pokemonProduct.name,

pokemonProduct.price,

}

// writing a new CSV record

writer.Write(record)

}

defer writer.Flush()

}

Fantastic! In about 100 lines of code, you built a web scraper in Golang!

Other Web Scraping Libraries for Go

Other great libraries for web scraping with Golang are:

- ZenRows: A complete web scraping API that handles all anti-bot bypass for you. It comes with headless browser capabilities, CAPTCHAs bypass, rotating proxies and more.

- GoQuery: A Go library that offers a syntax and a set of features similar to jQuery. You can use it to perform web scraping just like you would in jQuery.

- Ferret: A portable, extensible and fast web scraping system that aims to simplify data extraction from the web. Ferret allows users to focus on the data and is based on a unique declarative language.

- Selenium: Probably the most well-known browser automation tool, ideal for scraping dynamic content. It doesn't officially support Go, but there's a port to use it in Go.

Conclusion

In this step-by-step Go tutorial, you saw the building blocks to get started on Golang web scraping.

As a recap, you learned:

- How to perform basic data scraping with Go using Colly.

- How to implement crawling logic to visit a whole website.

- The reason why you may need a Go headless browser solution.

- How to scrape a dynamic-content website with chromedp.

Scraping can become challenging because of the anti-scraping measures implemented by several websites. Many libraries struggle to bypass these obstacles. A best practice to avoid these problems is to use a web scraping API, such as ZenRows. This solution enables you to bypass all anti-bot systems with a single API call.

Frequent Questions

How Do You Scrape in Golang?

You can perform web scraping in Golang just as you can with any other programming language. First, get a web scraping Go library. Then, adopt it to visit your target website, select the HTML elements of interest and extract data from them.

What Is the Best Way to Scrape With Golang?

There are several ways to scrape web pages using the Go programming language. Typically, this involves adopting popular web scraping Go libraries. The best way to scrape web pages with Golang depends on the specific requirements of your project. Each of the libraries has its own strengths and weaknesses, so choose the one that best fits your use case.
