Web scraping in Perl is a great option thanks to the language's ability to integrate with C, C++, and Java code. In this step-by-step tutorial, you'll learn how to scrape data in Perl with the HTTP::Tiny and HTML::TreeBuilder libraries. Let's dive in!

Is It Possible to Do Web Scraping with Perl?

Perl is a viable language for scraping the web, especially thanks to its multi-language integration. At the same time, it isn't the most popular language for that goal. Most developers prefer languages that are easier to use, have a larger community, and come with more libraries.

Python, for example, is a great option for web scraping because of its extensive ecosystem. JavaScript with Node.js is also a common choice. You can read our guide on the best programming languages for web scraping.

Still, Perl comes with top-notch text parsing capabilities and integrates well with C, C++, and Java. That makes it well-suited for scraping tasks that involve heavy pages or code written in multiple languages.

How to Do Web Scraping in Perl

Performing web scraping using Perl involves the steps below:

- Get the HTML document associated with the target page with the HTTP client HTTP::Tiny.
- Parse the HTML content with the HTML parser HTML::TreeBuilder.
- Export the scraped data to a CSV file with Text::CSV.

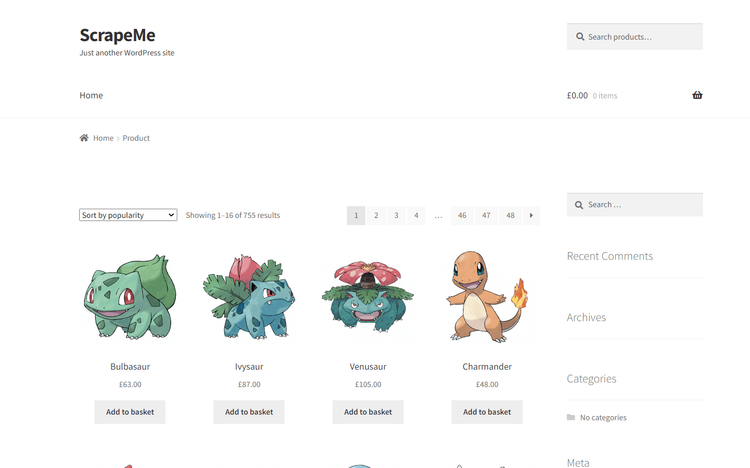

The target site here will be ScrapeMe, an e-commerce platform with a paginated list of Pokémon products. The goal of the Perl scraper you're about to build is to retrieve all product data from each page.

Time to write some code!

Step 1: Installing the Tools

Before getting started, make sure you have Perl installed on your machine. Otherwise, download Perl, run the installer, and follow the wizard. If you're a Windows user, install Strawberry Perl.

Create a folder for your project and open it in your Perl IDE. IntelliJ IDEA with the Perl plugin or Visual Studio Code with the Perl extension will do.

Then, add a scraper.pl file and initialize it as follows. This is the simplest possible Perl script, but it'll soon contain some scraping logic.

```perl
use strict;
use warnings;

print "Hello, World!\n";
```

Open the terminal in the project folder and launch the command below to run it:

```bash
perl scraper.pl
```

If everything works as expected, it'll print the following text:

```
Hello, World!
```

Great! You're fully set up and ready to install the scraping libraries.

To perform Perl web scraping, you'll need two CPAN libraries:

- HTTP::Tiny: A lightweight, simple, and fast HTTP/1.1 client. It supports proxies and custom headers. It ships with Perl 5.14 and later, but fetching https:// URLs also requires the IO::Socket::SSL module.
- HTML::TreeBuilder: A parser that exposes an easy-to-use API to turn HTML content into a tree and explore it.

Install them, together with IO::Socket::SSL, using the following command. The operation will take a while, so be patient.

```bash
cpan HTTP::Tiny HTML::TreeBuilder IO::Socket::SSL
```

Now, import HTTP::Tiny and HTML::TreeBuilder by adding these two lines at the top of the scraper.pl file:

```perl
use HTTP::Tiny;
use HTML::TreeBuilder;
```

Fantastic! You're now ready to learn the Perl web scraping basics!

Step 2: Get a Web Page's HTML

Initialize an HTTP::Tiny instance and use the get() method to get the HTML from a URL:

```perl
my $http = HTTP::Tiny->new();
my $response = $http->get('https://scrapeme.live/shop/');
```

Behind the scenes, the script sends an HTTP GET request to the URL passed as a parameter. It then stores the server's response in the $response variable. The response is a hash reference with fields such as success, status, reason, and content.

The HTML content of the page will now be in the content field:

```perl
my $html_content = $response->{content};
```
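HTTP::Tiny does not die when a request fails. Instead, it sets success to false and reports the code in status. When the connection itself fails, the status is 599. The sketch below wraps that check in a hypothetical fetch_html() helper. The helper name and the 10-second timeout are illustrative assumptions, not part of the tutorial's script.

```perl
use strict;
use warnings;
use HTTP::Tiny;

# Hypothetical helper: GET a URL and return the HTML body, or die with the
# status code and reason when the request does not succeed.
sub fetch_html {
    my ($http, $url) = @_;
    my $response = $http->get($url);

    # success is true only for 2XX responses; status 599 signals a
    # connection-level error raised inside HTTP::Tiny itself
    die "GET $url failed: $response->{status} $response->{reason}\n"
        unless $response->{success};

    return $response->{content};
}

my $http = HTTP::Tiny->new(timeout => 10);

# eval turns a failed request into an error message instead of a crash
my $html = eval { fetch_html($http, 'https://scrapeme.live/shop/') };
print defined $html ? 'Fetched ' . length($html) . " bytes\n" : "Error: $@";
```

Failing loudly on a bad status keeps the parser from silently running on an error page.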

Put it all together in scraper.pl to do web scraping in Perl:

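```perl
use strict;
use warnings;
use HTTP::Tiny;
use HTML::TreeBuilder;

# Initialize the HTTP client
my $http = HTTP::Tiny->new();

# Retrieve the HTML code of the page to scrape
my $response = $http->get('https://scrapeme.live/shop/');

# Stop early if the request failed (network error or non-2XX status)
die "Request failed: $response->{status} $response->{reason}\n"
    unless $response->{success};

my $html_content = $response->{content};

# Print the HTML document
print "$html_content\n";
```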

Launch it, and it'll produce the HTML code of the target page in the terminal:

```html
<!doctype html>
<html lang="en-GB">
<head>
<meta charset="UTF-8">
<meta name="viewport" content="width=device-width, initial-scale=1, maximum-scale=2.0">
<link rel="profile" href="http://gmpg.org/xfn/11">
<link rel="pingback" href="https://scrapeme.live/xmlrpc.php">
<title>Products – ScrapeMe</title>
<!-- Omitted for brevity... -->
```

Well done!

Step 3: Extract Data from that HTML

After retrieving the HTML code, feed it to the parse() method of an HTML::TreeBuilder instance. The HTML::TreeBuilder internal tree will now represent the DOM of the target page.

```perl
my $tree = HTML::TreeBuilder->new();
$tree->parse($response->{content});
# Signal that the whole document has been fed to the parser
$tree->eof();
```
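HTTP::Tiny returns the body as raw bytes, while HTML::TreeBuilder works best with decoded Perl character strings. Passing the bytes straight through works for plain ASCII text. Non-ASCII characters, such as accented names, can end up garbled in the CSV. The sketch below decodes first and assumes the page is UTF-8 (check the Content-Type header or the meta charset tag). It uses Encode's decode() and HTML::TreeBuilder's new_from_content(), which runs parse() and eof() in one call. The sample title is made up.

```perl
use strict;
use warnings;
use Encode qw(decode encode);
use HTML::TreeBuilder;

# Simulated HTTP::Tiny body: UTF-8 encoded bytes, as a server would send them
# (the title text is a made-up sample)
my $body = encode('UTF-8',
    "<html><head><title>Flab\x{E9}b\x{E9} \x{2013} ScrapeMe</title></head><body></body></html>");

# Decode the bytes into characters, then build the tree in one call:
# new_from_content() runs parse() and eof() for you
my $tree  = HTML::TreeBuilder->new_from_content(decode('UTF-8', $body));
my $title = $tree->look_down('_tag', 'title')->as_text;

# Free the tree once the data has been extracted
$tree->delete();

# Counts characters, not bytes, because the body was decoded
print length($title), "\n";
```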

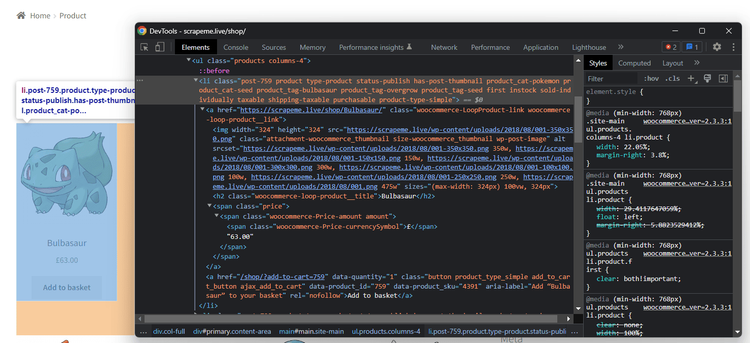

The next step is to select the product HTML elements and extract the desired data from them. This requires an understanding of the page structure, as you have to define an effective selector strategy.

Open the target page in the browser, right-click on a product HTML node, and select "Inspect". This will open the DevTools panel:

Explore the HTML code, and you'll notice that each product element is an <li> node with the product class.

Retrieve them all. When called in list context, the look_down() method returns all nodes whose HTML tag is li and whose class attribute matches product:

```perl
my @html_products = $tree->look_down('_tag', 'li', class => qr/product/);
```

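qr// is Perl's regex-quoting operator: it turns a string into a compiled regular expression. When look_down() receives a regex, it selects the elements whose class attribute matches it. An element with several classes, one of which is product, still counts as a hit. With a plain string instead, look_down() would only select elements whose class attribute is exactly equal to that string.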
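To see the difference in practice, the sketch below runs three look_down() queries against a small inline HTML snippet. The markup is made up and is not ScrapeMe's actual page. Note that qr/product/ also matches a class such as product-category. Anchoring the pattern to whitespace-separated class names is one stricter option.

```perl
use strict;
use warnings;
use HTML::TreeBuilder;

# Made-up markup for illustration only
my $html = <<'HTML';
<ul>
  <li class="product type-product">Bulbasaur</li>
  <li class="product">Ivysaur</li>
  <li class="product-category">Seeds</li>
  <li class="menu-item">Shop</li>
</ul>
HTML

my $tree = HTML::TreeBuilder->new_from_content($html);

# Plain string: the whole class attribute must be exactly "product"
my @exact = $tree->look_down('_tag', 'li', class => 'product');

# qr// regex: "product" may appear anywhere in the class attribute
my @regex = $tree->look_down('_tag', 'li', class => qr/product/);

# Stricter regex: "product" must be a whole, space-separated class name
my @token = $tree->look_down('_tag', 'li', class => qr/(?:^|\s)product(?:\s|$)/);

printf "exact: %d, regex: %d, token: %d\n", scalar @exact, scalar @regex, scalar @token;

$tree->delete();
```

It should print exact: 1, regex: 3, token: 2.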

Given a product HTML element, the useful data to extract is:

- The product URL in the <a> element.
- The product image in the <img> element.
- The product name in the <h2> element.
- The product price in the <span> element.

You'll need a custom class to store that information:

```perl
package PokemonProduct {
    use Moo;

    has 'url'   => (is => 'ro');
    has 'image' => (is => 'ro');
    has 'name'  => (is => 'ro');
    has 'price' => (is => 'ro');
}
```

In Perl, a class is a package. Use Moo, a lightweight object system available on CPAN, to make defining a data class easier (install it with cpan Moo).
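As a quick check of how such a Moo class behaves, the sketch below creates one product and reads its attributes. Every attribute is declared with is => 'ro', so Moo generates read-only accessors. Trying to change a value after construction therefore dies. The sample values and example.com URLs are made up.

```perl
use strict;
use warnings;

package PokemonProduct {
    use Moo;

    has 'url'   => (is => 'ro');
    has 'image' => (is => 'ro');
    has 'name'  => (is => 'ro');
    has 'price' => (is => 'ro');
}

# Sample values, made up for illustration
my $product = PokemonProduct->new(
    url   => 'https://example.com/shop/bulbasaur/',
    image => 'https://example.com/images/bulbasaur.png',
    name  => 'Bulbasaur',
    price => '63.00',
);

# Moo generates one accessor per attribute
print $product->name, ' costs ', $product->price, "\n";

# 'ro' accessors refuse new values, so this call dies
eval { $product->price('1.00') };
print "Read-only: $@" if $@;
```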

The page has many products, so define an array to store the PokemonProduct instances:

```perl
my @pokemon_products;
```

Iterate over the list of the product HTML elements and scrape the data of interest from each of them:

```perl
foreach my $html_product (@html_products) {
    # Extract the data of interest from the current product HTML element
    my $url   = $html_product->look_down('_tag', 'a')->attr('href');
    my $image = $html_product->look_down('_tag', 'img')->attr('src');
    my $name  = $html_product->look_down('_tag', 'h2')->as_text;
    my $price = $html_product->look_down('_tag', 'span')->as_text;

    # Store the scraped data in a PokemonProduct object
    my $pokemon_product = PokemonProduct->new(url => $url, image => $image, name => $name, price => $price);

    # Add the PokemonProduct to the list of scraped objects
    push @pokemon_products, $pokemon_product;
}
```

Awesome! @pokemon_products will now contain all product data as planned!
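One caveat: look_down() returns undef when a product lacks the requested element. Calling ->attr or ->as_text on undef then stops the script with a "Can't call method ... on an undefined value" error. A defensive variant returns undef for the missing field instead. The sketch below uses two hypothetical helpers, attr_of() and text_of(), on made-up markup.

```perl
use strict;
use warnings;
use HTML::TreeBuilder;

# Hypothetical helper: attribute of the first descendant with the given tag
sub attr_of {
    my ($element, $tag, $attr) = @_;
    my $child = $element->look_down('_tag', $tag);
    return $child ? $child->attr($attr) : undef;
}

# Hypothetical helper: text of the first descendant with the given tag
sub text_of {
    my ($element, $tag) = @_;
    my $child = $element->look_down('_tag', $tag);
    return $child ? $child->as_text : undef;
}

# Made-up product markup without an <img> element
my $tree = HTML::TreeBuilder->new_from_content(
    '<ul><li class="product"><a href="/shop/bulbasaur/">Bulbasaur</a>'
    . '<h2>Bulbasaur</h2><span>63.00</span></li></ul>'
);

# In scalar context, look_down() returns the first match
my $html_product = $tree->look_down('_tag', 'li', class => qr/product/);

my $name  = text_of($html_product, 'h2');
my $image = attr_of($html_product, 'img', 'src');

print "$name: ", (defined $image ? $image : 'no image'), "\n";
$tree->delete();
```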

The current scraper.pl file contains:

```perl
use strict;
use warnings;
use HTTP::Tiny;
use HTML::TreeBuilder;

# Define a data structure where
# to store the scraped data
package PokemonProduct {
    use Moo;

    has 'url'   => (is => 'ro');
    has 'image' => (is => 'ro');
    has 'name'  => (is => 'ro');
    has 'price' => (is => 'ro');
}

# Initialize the HTTP client
my $http = HTTP::Tiny->new();

# Retrieve the HTML code of the page to scrape
my $response = $http->get('https://scrapeme.live/shop/');
die "Request failed: $response->{status} $response->{reason}\n"
    unless $response->{success};

# Initialize the HTML parser
my $tree = HTML::TreeBuilder->new();

# Parse the HTML document returned by the server
$tree->parse($response->{content});
$tree->eof();

# Select all HTML product elements
my @html_products = $tree->look_down('_tag', 'li', class => qr/product/);

# Initialize the list of objects that will contain the scraped data
my @pokemon_products;

# Iterate over the list of HTML products to
# extract data from them
foreach my $html_product (@html_products) {
    # Extract the data of interest from the current product HTML element
    my $url   = $html_product->look_down('_tag', 'a')->attr('href');
    my $image = $html_product->look_down('_tag', 'img')->attr('src');
    my $name  = $html_product->look_down('_tag', 'h2')->as_text;
    my $price = $html_product->look_down('_tag', 'span')->as_text;

    # Store the scraped data in a PokemonProduct object
    my $pokemon_product = PokemonProduct->new(url => $url, image => $image, name => $name, price => $price);

    # Add the PokemonProduct to the list of scraped objects
    push @pokemon_products, $pokemon_product;
}
```

Good job! All that remains is to export the output. You'll see how in the next step, together with the final code.

Step 4: Export to CSV

Now that you've extracted the desired data, all that remains is to transform it into a more useful format.

To achieve that, install the Text::CSV library:

```bash
cpan Text::CSV
```

Next, import it into your scraper.pl script:

```perl
use Text::CSV;
```

Open a CSV file, convert the PokemonProduct instances to CSV records, and append them to the file:

```perl
# Define the header row of the CSV file
my @csv_headers = qw(url image name price);

# Create a CSV file and write the header
my $csv = Text::CSV->new({ binary => 1, auto_diag => 1, eol => $/ });
open my $file, '>:encoding(utf8)', 'products.csv' or die "Failed to create products.csv: $!";
$csv->print($file, \@csv_headers);

# Populate the CSV file
foreach my $pokemon_product (@pokemon_products) {
    # PokemonProduct to CSV record: call each accessor named in the header
    my @row = map { $pokemon_product->$_ } @csv_headers;
    $csv->print($file, \@row);
}

# Release the file resources
close $file;
```
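Text::CSV matters here because scraped values can contain commas or double quotes, which a naive join(',', ...) would break. The sketch below builds a single record in memory with combine() and string(). The field values are made up.

```perl
use strict;
use warnings;
use Text::CSV;

my $csv = Text::CSV->new({ binary => 1, auto_diag => 1 });

# Made-up values: the second one contains commas and embedded double quotes
my @fields = ("Farfetch'd", 'Mr. "Mime", the clown', '63.00');

# combine() quotes and escapes each field; string() returns the CSV line
$csv->combine(@fields);
my $line = $csv->string();

print "$line\n";
```

It prints Farfetch'd,"Mr. ""Mime"", the clown",63.00: the field with special characters is wrapped in quotes and its inner quotes are doubled.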

Put it all together, and you'll get the final code of your scraper:

```perl
use strict;
use warnings;
use HTTP::Tiny;
use HTML::TreeBuilder;
use Text::CSV;

# Define a data structure where
# to store the scraped data
package PokemonProduct {
    use Moo;

    has 'url'   => (is => 'ro');
    has 'image' => (is => 'ro');
    has 'name'  => (is => 'ro');
    has 'price' => (is => 'ro');
}

# Initialize the HTTP client
my $http = HTTP::Tiny->new();

# Retrieve the HTML code of the page to scrape
my $response = $http->get('https://scrapeme.live/shop/');
die "Request failed: $response->{status} $response->{reason}\n"
    unless $response->{success};

# Initialize the HTML parser
my $tree = HTML::TreeBuilder->new();

# Parse the HTML document returned by the server
$tree->parse($response->{content});
$tree->eof();

# Select all HTML product elements
my @html_products = $tree->look_down('_tag', 'li', class => qr/product/);

# Initialize the list of objects that will contain the scraped data
my @pokemon_products;

# Iterate over the list of HTML products to
# extract data from them
foreach my $html_product (@html_products) {
    # Extract the data of interest from the current product HTML element
    my $url   = $html_product->look_down('_tag', 'a')->attr('href');
    my $image = $html_product->look_down('_tag', 'img')->attr('src');
    my $name  = $html_product->look_down('_tag', 'h2')->as_text;
    my $price = $html_product->look_down('_tag', 'span')->as_text;

    # Store the scraped data in a PokemonProduct object
    my $pokemon_product = PokemonProduct->new(url => $url, image => $image, name => $name, price => $price);

    # Add the PokemonProduct to the list of scraped objects
    push @pokemon_products, $pokemon_product;
}

# Define the header row of the CSV file
my @csv_headers = qw(url image name price);

# Create a CSV file and write the header
my $csv = Text::CSV->new({ binary => 1, auto_diag => 1, eol => $/ });
open my $file, '>:encoding(utf8)', 'products.csv' or die "Failed to create products.csv: $!";
$csv->print($file, \@csv_headers);

# Populate the CSV file
foreach my $pokemon_product (@pokemon_products) {
    # PokemonProduct to CSV record
    my @row = map { $pokemon_product->$_ } @csv_headers;
    $csv->print($file, \@row);
}

# Release the file resources
close $file;
```

Launch the Perl web scraping script:

```bash
perl scraper.pl
```

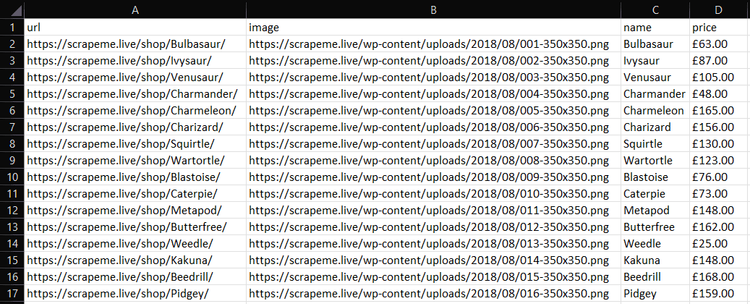

A new products.csv file will appear in the root folder of your project. Open it, and you'll see this output:

Wonderful! You just extracted the product data from the first page and learned the basics of Perl web scraping. However, there's still more to learn. Keep reading to become an expert.

Advanced Web Scraping in Perl

The basics aren't enough to scrape entire websites, handle more complex page structures, or get past anti-bot measures. Let's jump into the advanced concepts of web scraping with Perl!

Scrape All Pages: Web Crawling with Perl

You saw how to scrape product data from a single page, but don't forget that the target site has several pages. You have to discover and visit all product pages to scrape all data. That's what web crawling is all about.

These are the steps to implement web crawling:

- Visit a page.

- Get the pagination link elements.

- Add the newly discovered URLs to a queue.

- Repeat the cycle with a new page.

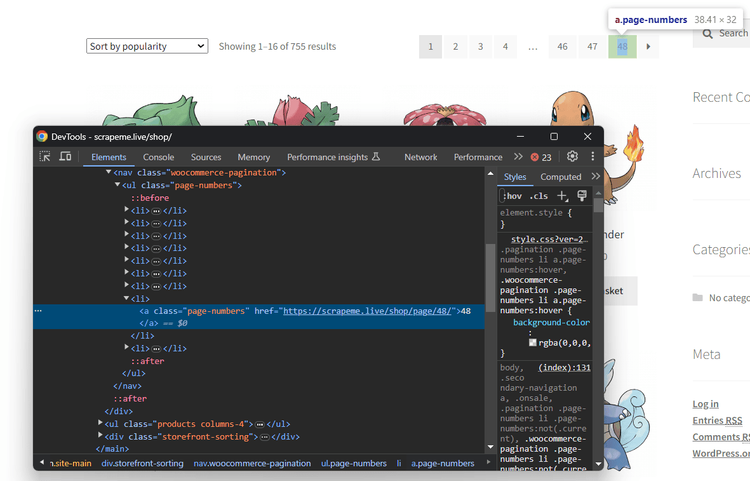

Start by inspecting a pagination number HTML element with the DevTools:

The pagination link elements are <a> nodes with the page-numbers class. Thus, you can get all pagination links like this:

```perl
my @new_pagination_links = map { $_->attr('href') } $tree->look_down('_tag', 'a', class => 'page-numbers');
```
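To see what that selector returns, the sketch below runs it against simplified, made-up pagination markup. The markup is modeled on a typical WooCommerce layout, which is an assumption and not a copy of ScrapeMe's HTML. A plain string must equal the whole class attribute. As a result, both the current page (a <span>) and a link with class "next page-numbers" are skipped.

```perl
use strict;
use warnings;
use HTML::TreeBuilder;

# Simplified, made-up pagination markup
my $html = <<'HTML';
<ul class="page-numbers">
  <li><span class="page-numbers current">1</span></li>
  <li><a class="page-numbers" href="https://scrapeme.live/shop/page/2/">2</a></li>
  <li><a class="page-numbers" href="https://scrapeme.live/shop/page/3/">3</a></li>
  <li><a class="next page-numbers" href="https://scrapeme.live/shop/page/2/">Next</a></li>
</ul>
HTML

my $tree = HTML::TreeBuilder->new_from_content($html);

# Same selector as above: <a> tags whose class is exactly "page-numbers"
my @new_pagination_links = map { $_->attr('href') }
    $tree->look_down('_tag', 'a', class => 'page-numbers');

print "$_\n" for @new_pagination_links;
$tree->delete();
```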

Next, you need to define the supporting data structures to avoid visiting the same page twice. Initialize them with the URL of the first pagination page:

```perl
# First page to scrape
my $first_page = "https://scrapeme.live/shop/page/1/";

# Initialize the list of pages to scrape
my @pages_to_scrape = ($first_page);

# Initialize the list of pages discovered
my @pages_discovered = ($first_page);
```

Then, implement the crawling logic. The snippet below scrapes a web page and extracts the pagination URLs from it. Each link that hasn't been discovered yet goes into the crawling queue. The loop repeats until the queue is empty or the iteration limit is reached.

```perl
# Current iteration
my $i = 0;

# Max pages to scrape
my $limit = 5;

# Iterate while there are pages to scrape and the limit has not been reached
while (@pages_to_scrape && $i < $limit) {
    # Get the current page to scrape by popping it from the list
    my $page_to_scrape = pop @pages_to_scrape;

    # Retrieve the HTML code of the page to scrape
    my $response = $http->get($page_to_scrape);

    # Skip pages that could not be retrieved
    unless ($response->{success}) {
        warn "Skipping $page_to_scrape: $response->{status} $response->{reason}\n";
        next;
    }

    # Parse the HTML document into a fresh tree for each page
    # (reusing a single tree would append every page to the same document)
    my $tree = HTML::TreeBuilder->new();
    $tree->parse($response->{content});
    $tree->eof();

    # scraping logic...

    # Retrieve the list of pagination URLs
    my @new_pagination_links = map { $_->attr('href') } $tree->look_down('_tag', 'a', class => 'page-numbers');

    # Free the memory used by the parse tree
    $tree->delete();

    # Iterate over the list of pagination links to find new URLs
    # to scrape
    foreach my $new_pagination_link (@new_pagination_links) {
        # If the page discovered is new
        unless (grep { $_ eq $new_pagination_link } @pages_discovered) {
            push @pages_discovered, $new_pagination_link;

            # If the page discovered needs to be scraped
            unless (grep { $_ eq $new_pagination_link } @pages_to_scrape) {
                push @pages_to_scrape, $new_pagination_link;
            }
        }
    }

    # Increment the iteration counter
    $i++;
}
```
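The grep calls scan whole arrays for every link. That is fine for a handful of pages but gets slower as the crawl grows. A common Perl idiom is to track discovered URLs in a hash used as a set. The sketch below shows the idea with a hypothetical enqueue_new_links() helper and made-up links. Every queued URL is also recorded in %seen, so a single check replaces both greps. Also note that pop takes the most recently added URL (depth-first order), while shift would visit pages in discovery order.

```perl
use strict;
use warnings;

# Hypothetical helper: queue only URLs that have never been seen before.
# %seen acts as a set, so each check is a hash lookup instead of a
# linear grep over an array.
sub enqueue_new_links {
    my ($queue, $seen, @links) = @_;
    for my $link (@links) {
        next if $seen->{$link}++;   # skip URLs already discovered
        push @$queue, $link;
    }
    return scalar @$queue;
}

my $first_page      = 'https://scrapeme.live/shop/page/1/';
my @pages_to_scrape = ($first_page);
my %seen            = ($first_page => 1);

# Made-up links, including duplicates, as a page might return them
enqueue_new_links(\@pages_to_scrape, \%seen,
    'https://scrapeme.live/shop/page/1/',
    'https://scrapeme.live/shop/page/2/',
    'https://scrapeme.live/shop/page/2/',
    'https://scrapeme.live/shop/page/3/',
);

print "$_\n" for @pages_to_scrape;
```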

This is what your entire Perl web scraping script now looks like:

```perl
use strict;
use warnings;
use HTTP::Tiny;
use HTML::TreeBuilder;
use Text::CSV;

# Define a data structure where
# to store the scraped data
package PokemonProduct {
    use Moo;

    has 'url'   => (is => 'ro');
    has 'image' => (is => 'ro');
    has 'name'  => (is => 'ro');
    has 'price' => (is => 'ro');
}

# Initialize the HTTP client
my $http = HTTP::Tiny->new();

# Initialize the list of objects that will contain the scraped data
my @pokemon_products;

# First page to scrape
my $first_page = "https://scrapeme.live/shop/page/1/";

# Initialize the list of pages to scrape
my @pages_to_scrape = ($first_page);

# Initialize the list of pages discovered
my @pages_discovered = ($first_page);

# Current iteration
my $i = 0;

# Max pages to scrape
my $limit = 5;

# Iterate while there are pages to scrape and the limit has not been reached
while (@pages_to_scrape && $i < $limit) {
    # Get the current page to scrape by popping it from the list
    my $page_to_scrape = pop @pages_to_scrape;

    # Retrieve the HTML code of the page to scrape
    my $response = $http->get($page_to_scrape);

    # Skip pages that could not be retrieved
    unless ($response->{success}) {
        warn "Skipping $page_to_scrape: $response->{status} $response->{reason}\n";
        next;
    }

    # Parse the HTML document into a fresh tree for each page
    # (reusing a single tree would append every page to the same document)
    my $tree = HTML::TreeBuilder->new();
    $tree->parse($response->{content});
    $tree->eof();

    # Select all HTML product elements
    my @html_products = $tree->look_down('_tag', 'li', class => qr/product/);

    # Iterate over the list of HTML products to
    # extract data from them
    foreach my $html_product (@html_products) {
        # Extract the data of interest from the current product HTML element
        my $url   = $html_product->look_down('_tag', 'a')->attr('href');
        my $image = $html_product->look_down('_tag', 'img')->attr('src');
        my $name  = $html_product->look_down('_tag', 'h2')->as_text;
        my $price = $html_product->look_down('_tag', 'span')->as_text;

        # Store the scraped data in a PokemonProduct object
        my $pokemon_product = PokemonProduct->new(url => $url, image => $image, name => $name, price => $price);

        # Add the PokemonProduct to the list of scraped objects
        push @pokemon_products, $pokemon_product;
    }

    # Retrieve the list of pagination URLs
    my @new_pagination_links = map { $_->attr('href') } $tree->look_down('_tag', 'a', class => 'page-numbers');

    # Free the memory used by the parse tree
    $tree->delete();

    # Iterate over the list of pagination links to find new URLs
    # to scrape
    foreach my $new_pagination_link (@new_pagination_links) {
        # If the page discovered is new
        unless (grep { $_ eq $new_pagination_link } @pages_discovered) {
            push @pages_discovered, $new_pagination_link;

            # If the page discovered needs to be scraped
            unless (grep { $_ eq $new_pagination_link } @pages_to_scrape) {
                push @pages_to_scrape, $new_pagination_link;
            }
        }
    }

    # Increment the iteration counter
    $i++;
}

# Define the header row of the CSV file
my @csv_headers = qw(url image name price);

# Create a CSV file and write the header
my $csv = Text::CSV->new({ binary => 1, auto_diag => 1, eol => $/ });
open my $file, '>:encoding(utf8)', 'products.csv' or die "Failed to create products.csv: $!";
$csv->print($file, \@csv_headers);

# Populate the CSV file
foreach my $pokemon_product (@pokemon_products) {
    # PokemonProduct to CSV record
    my @row = map { $pokemon_product->$_ } @csv_headers;
    $csv->print($file, \@row);
}

# Release the file resources
close $file;
```

The output CSV file will contain all the products found on the visited pages.

Congrats! You just crawled ScrapeMe and achieved your initial scraping goal!

How to Avoid Getting Blocked

Companies know how valuable their data is. That's why many websites adopt anti-scraping measures. These systems can detect requests from automated scripts, like your Perl scraper, and block them.

For example, sites like G2 use Cloudflare to prevent bots from accessing their pages. Let's say you want to get access to https://www.g2.com/products/asana/reviews:

```perl
my $http = HTTP::Tiny->new();
my $response = $http->get('https://www.g2.com/products/asana/reviews');
my $html_content = $response->{content};
print "$html_content\n";
```

Your scraper will print the following 403 Forbidden page:

```html
<!DOCTYPE html>
<html lang="en-US">
<head>
<title>Just a moment...</title>
<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
<meta http-equiv="X-UA-Compatible" content="IE=Edge">
<meta name="robots" content="noindex,nofollow">
<meta name="viewport" content="width=device-width,initial-scale=1">
<link href="/cdn-cgi/styles/challenges.css" rel="stylesheet">
</head>
<!-- Omitted for brevity... -->
```

Anti-bot measures represent the biggest challenge when performing web scraping. But, as shown in our web scraping without getting blocked guide, there are some solutions.
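One of the simplest workarounds is replacing HTTP::Tiny's default User-Agent with a browser-like string. The default (HTTP-Tiny/ followed by the module version) openly identifies the client as a script. You can also add common browser headers. The sketch below configures both through the agent and default_headers constructor options. The header values are illustrative assumptions, and this alone is unlikely to get past protections like Cloudflare's.

```perl
use strict;
use warnings;
use HTTP::Tiny;

# Illustrative browser-like User-Agent; the exact value is an assumption
my $user_agent = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    . '(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36';

my $http = HTTP::Tiny->new(
    # Replaces the default "HTTP-Tiny/<version>" User-Agent
    agent           => $user_agent,
    # Sent with every request made by this client
    default_headers => {
        'Accept'          => 'text/html,application/xhtml+xml',
        'Accept-Language' => 'en-US,en;q=0.9',
    },
);

print 'User-Agent: ', $http->agent, "\n";
```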

At the same time, most of those workarounds are tricky and don't work consistently. An effective alternative to avoid any blocks is ZenRows, a full-featured web scraping API that provides premium proxies, headless browser capabilities, and a complete anti-bot toolkit.

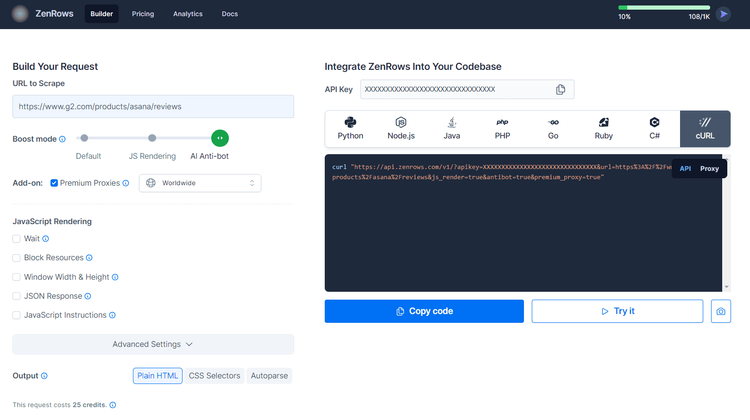

To get started with ZenRows, sign up to get your 1,000 free credits. You'll land on the Request Builder page.

Paste your target URL (https://www.g2.com/products/asana/reviews), check "Premium Proxy" and enable the "AI Anti-bot" feature (it includes advanced anti-bot bypass tools, as well as JavaScript rendering).

Next, select the "cURL" option, and then the "API" connection mode to get your auto-generated target URL.

Now, pass it to the HTTP::Tiny client:

```perl
my $http = HTTP::Tiny->new();
my $response = $http->get('https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fasana%2Freviews&js_render=true&antibot=true&premium_proxy=true');
my $html_content = $response->{content};
print "$html_content\n";
```

You'll get the following output:

```html
<!DOCTYPE html>
<head>
<meta charset="utf-8" />
<link href="https://www.g2.com/assets/favicon-fdacc4208a68e8ae57a80bf869d155829f2400fa7dd128b9c9e60f07795c4915.ico" rel="shortcut icon" type="image/x-icon" />
<title>Asana Reviews 2023: Details, Pricing, & Features | G2</title>
<!-- omitted for brevity ... -->
```

Amazing! Say goodbye to anti-bot limitations!
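Rather than pasting the encoded URL by hand, you can build it in Perl. The sketch below uses HTTP::Tiny's www_form_urlencode() method, which percent-encodes each value, to assemble the same query string used above. The zenrows_url() helper is hypothetical. Reading the key from a ZENROWS_API_KEY environment variable is also an assumption. The parameter names come from the generated URL.

```perl
use strict;
use warnings;
use HTTP::Tiny;

# Hypothetical helper: build a ZenRows API URL for a target page, reusing
# the parameters from the URL generated by the Request Builder
sub zenrows_url {
    my ($http, $api_key, $target) = @_;
    # An array reference keeps the parameters in this order
    my $query = $http->www_form_urlencode([
        apikey        => $api_key,
        url           => $target,
        js_render     => 'true',
        antibot       => 'true',
        premium_proxy => 'true',
    ]);
    return "https://api.zenrows.com/v1/?$query";
}

my $http = HTTP::Tiny->new();

# Assumption: the API key is stored in the ZENROWS_API_KEY environment variable
my $api_key = $ENV{ZENROWS_API_KEY} // 'YOUR_ZENROWS_API_KEY';

my $api_url = zenrows_url($http, $api_key, 'https://www.g2.com/products/asana/reviews');
print "$api_url\n";
```

Pass $api_url to $http->get() exactly as in the snippet above.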

Rendering JS: Headless Browser Scraping in Perl

Many modern sites rely on JavaScript to render the page or fetch data dynamically. In such a scenario, an HTML parser alone can't scrape that data, because it isn't in the HTML document returned by the server. You'll instead need a tool that can render pages in a browser, typically a headless browser.

Selenium::Remote::Driver is the Perl binding for Selenium, one of the most popular browser automation tools.

Install the Selenium::Chrome package. It allows you to control a Chrome instance and instruct it to run specific actions on a web page.

```bash
cpan Selenium::Chrome
```

Download the ChromeDriver version that matches your version of Chrome. Place the chromedriver executable in the project folder and create a Selenium::Chrome instance. Use it to extract data from ScrapeMe with the following script. It translates the data extraction logic defined earlier and produces the same result as the script in Step 4.

```perl
use strict;
use warnings;
use Selenium::Chrome;
use Text::CSV;

# Define a data structure where
# to store the scraped data
package PokemonProduct {
    use Moo;

    has 'url'   => (is => 'ro');
    has 'image' => (is => 'ro');
    has 'name'  => (is => 'ro');
    has 'price' => (is => 'ro');
}

# Initialize the Selenium driver
# (use './chromedriver.exe' on Windows)
my $driver = Selenium::Chrome->new(binary => './chromedriver');

# Visit the page to scrape
$driver->get('https://scrapeme.live/shop/');

# Select all HTML product elements
my @html_products = $driver->find_elements('li.product', 'css');

# Initialize the list of objects that will contain the scraped data
my @pokemon_products;

# Iterate over the list of HTML products to
# extract data from them
foreach my $html_product (@html_products) {
    # Extract the data of interest from the current product HTML element
    my $url   = $driver->find_child_element($html_product, 'a', 'tag_name')->get_attribute('href');
    my $image = $driver->find_child_element($html_product, 'img', 'tag_name')->get_attribute('src');
    my $name  = $driver->find_child_element($html_product, 'h2', 'tag_name')->get_text();
    my $price = $driver->find_child_element($html_product, 'span', 'tag_name')->get_text();

    # Store the scraped data in a PokemonProduct object
    my $pokemon_product = PokemonProduct->new(url => $url, image => $image, name => $name, price => $price);

    # Add the PokemonProduct to the list of scraped objects
    push @pokemon_products, $pokemon_product;
}

# Close the browser instance and stop the chromedriver process
$driver->quit();
$driver->shutdown_binary;

# Define the header row of the CSV file
my @csv_headers = qw(url image name price);

# Create a CSV file and write the header
my $csv = Text::CSV->new({ binary => 1, auto_diag => 1, eol => $/ });
open my $file, '>:encoding(utf8)', 'products.csv' or die "Failed to create products.csv: $!";
$csv->print($file, \@csv_headers);

# Populate the CSV file
foreach my $pokemon_product (@pokemon_products) {
    # PokemonProduct to CSV record
    my @row = map { $pokemon_product->$_ } @csv_headers;
    $csv->print($file, \@row);
}

# Release the file resources
close $file;
```

A headless browser can interact with a page like a human user, simulating clicks, mouse movements, and more, so it can do much more than an HTML parser. For example, before closing the browser, you can call capture_screenshot() to take a screenshot of the current viewport and save it to a file:

```perl
# Call this before $driver->quit(), while the browser session is still open
$driver->capture_screenshot('screenshot.png');
```

screenshot.png will contain the image below:

Perfect! You now know how to build a web scraping Perl script for dynamic content sites.

Conclusion

This step-by-step tutorial walked you through how to build a Perl web scraping script. You started from the basics and then explored more complex topics. You have become a Perl web scraping ninja!

Now, you know why Perl is great for efficient scraping, the basics of web scraping using this language, how to perform crawling, and how to use a headless browser to extract data from JavaScript-rendered sites.

Yet, it doesn't matter how sophisticated your Perl scraper is because anti-scraping measures can still stop it! Bypass them all with ZenRows, a scraping tool with the best built-in anti-bot bypass features. All you need to get the desired data is a single API call.
