Web scraping has become increasingly popular and is now a trending topic in the IT community. As a result, several libraries help you scrape data from a website. Here, you'll learn how to build a web scraper in PHP using one of the most popular web scraping libraries.

In this tutorial, you'll learn the basics of web scraping in PHP. You'll then see how to get around the most popular anti-scraping systems and explore more advanced techniques and concepts, such as parallel scraping and headless browsers.

Follow this tutorial and become an expert in web scraping with PHP! Let's not waste more time and build our first scraper in PHP.

Prerequisites

Here is the list of prerequisites you need for the simple scraper to work:

- PHP >= 7.29.0

- Composer >= 2

If you don't have these installed on your system, you can download them by following the links above.

Then, you also require the following Composer library:

- voku/simple_html_dom >= 4.8

You can add this to your project's dependencies with the following command:

composer require voku/simple_html_dom

You'll also need PHP's built-in cURL library. It comes with the curl-ext PHP extension, which is present and enabled by default in most PHP packages. If the PHP package you installed doesn't include curl-ext, you can install it as explained in this guide.
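To confirm that the required extensions are available before running the scraper, a quick check along these lines may help. This is a minimal sketch: the `missingExtensions()` helper is a made-up name for illustration, and checking for `dom` is an assumption based on voku/simple_html_dom relying on DOMDocument.

```php
<?php
// Hypothetical helper (not part of the tutorial's repo): returns the names
// of the required extensions that are not loaded in this PHP runtime.
function missingExtensions(array $required): array
{
    $missing = [];
    foreach ($required as $name) {
        // extension_loaded() is a built-in PHP function
        if (!extension_loaded($name)) {
            $missing[] = $name;
        }
    }
    return $missing;
}

// "curl" is needed for the HTTP requests; "dom" is assumed here because
// voku/simple_html_dom builds on DOMDocument
$missing = missingExtensions(["curl", "dom"]);

if ($missing !== []) {
    echo "Missing PHP extensions: " . implode(", ", $missing) . PHP_EOL;
}
```

If the script prints nothing, both extensions are loaded.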

Let's now learn more about the dependencies mentioned here.

Introduction

voku/simple_html_dom is a fork of the Simple HTML DOM Parser project that replaces string manipulation with DOMDocument and other modern PHP classes. With nearly two million installs, voku/simple_html_dom is a fast, reliable, and simple library for parsing HTML documents and performing web scraping in PHP.

curl-ext is a PHP extension that enables the cURL HTTP client in PHP, which allows you to perform HTTP requests in PHP.

You can find the code of the demo web scraper in this GitHub repo. Clone it and install the project's dependencies with the following commands:

git clone https://github.com/Tonel/simple-scraper-php

cd simple-scraper-php

composer update

Follow this tutorial and learn how to build a web scraper app in PHP!

Basic Web Scraping in PHP

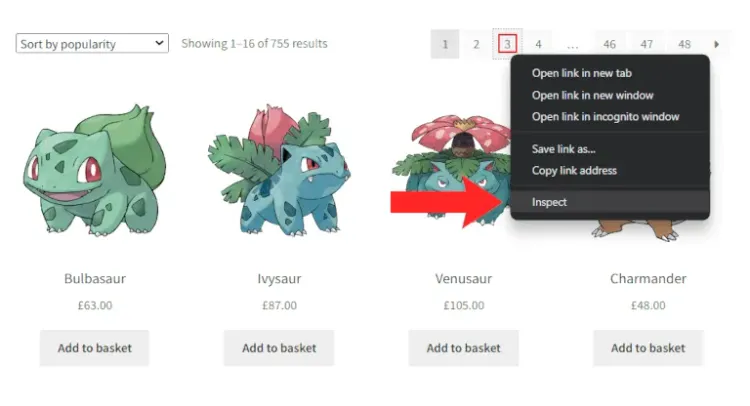

Here, you'll see how to perform web scraping on <https://scrapeme.live/shop/>, a website designed as a scraping target.

In detail, this is what the shop looks like:

As you can see, scrapeme.live is nothing more than a paginated list of Pokemon-inspired products. Let's build a simple web scraper in PHP that crawls the website and scrapes data from all these products.

First, you need to download the HTML of the page you want to scrape. You can download an HTML document in PHP with cURL as follows:

// initialize the cURL request

$curl = curl_init();

// set the URL to reach with a GET HTTP request

curl_setopt($curl, CURLOPT_URL, "https://scrapeme.live/shop/");

// get the data returned by the cURL request as a string

curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);

// make the cURL request follow any redirects

// and reach the final page of interest

curl_setopt($curl, CURLOPT_FOLLOWLOCATION, true);

// execute the cURL request and

// get the HTML of the page as a string

$html = curl_exec($curl);

// release the cURL resources

curl_close($curl);
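Note that curl_exec() returns false when the request fails, so it can be worth checking the result before parsing it. The sketch below wraps the same cURL options in a function that throws on failure. The `fetchHtml()` name, the 30-second default timeout, and the choice to treat any status of 400 or above as an error are assumptions made for illustration.

```php
<?php
// Hypothetical wrapper around the cURL calls shown above.
// Throws a RuntimeException instead of silently returning false.
function fetchHtml(string $url, int $timeoutSeconds = 30): string
{
    $curl = curl_init();

    curl_setopt_array($curl, [
        CURLOPT_URL => $url,
        CURLOPT_RETURNTRANSFER => true,
        CURLOPT_FOLLOWLOCATION => true,
        // give up if the whole request takes longer than this
        CURLOPT_TIMEOUT => $timeoutSeconds,
    ]);

    $html = curl_exec($curl);

    // collect diagnostics before releasing the handle
    $error = curl_error($curl);
    $status = curl_getinfo($curl, CURLINFO_HTTP_CODE);
    curl_close($curl);

    if ($html === false) {
        throw new RuntimeException("cURL request failed: $error");
    }

    if ($status >= 400) {
        throw new RuntimeException("Unexpected HTTP status $status for $url");
    }

    return $html;
}
```

Calling `fetchHtml("https://scrapeme.live/shop/")` inside a try/catch block would then let the script log the failure and move on instead of passing false to the HTML parser.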

You now have the HTML of the https://scrapeme.live/shop/ page stored in the $html variable. Load this into a HtmlDomParser instance with the str_get_html() function as below:

// __DIR__ has no trailing slash, so the relative path must start with "/"

require_once __DIR__ . "/../../vendor/autoload.php";

use voku\helper\HtmlDomParser;

$curl = curl_init();

curl_setopt($curl, CURLOPT_URL, "https://scrapeme.live/shop/");

curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);

curl_setopt($curl, CURLOPT_FOLLOWLOCATION, true);

$html = curl_exec($curl);

curl_close($curl);

// initialize HtmlDomParser

$htmlDomParser = HtmlDomParser::str_get_html($html);

You can now use HtmlDomParser to browse the DOM of the HTML page and start the data extraction.

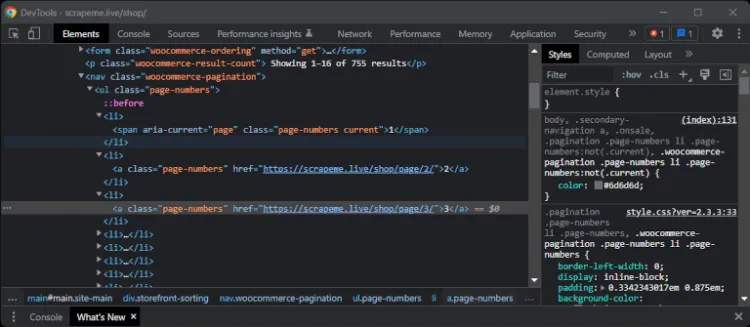

Let's now retrieve the list of all pagination links to crawl the entire website section. Right-click the pagination number HTML element and select the "Inspect" option.

At this point, the browser should open a DevTools window or section with the DOM element highlighted, as below:

In the WebTools window, you can see that the page-numbers CSS class identifies the pagination HTML elements. Note that a CSS class doesn't uniquely identify an HTML element, and many nodes could have the same class. It's precisely what happens with page-numbers in the scrapeme.live page.

Therefore, if you want to use a CSS selector to pick the elements in the DOM, you should use the CSS class along with other selectors. In particular, you can use HtmlDomParser with the .page-numbers a CSS selector to select all the pagination HTML elements on the page. Then, iterate through them to extract all the required URLs from the href attribute as follows:

// retrieve the HTML pagination elements with

// the ".page-numbers a" CSS selector

$paginationElements = $htmlDomParser->find(".page-numbers a");

$paginationLinks = [];

foreach ($paginationElements as $paginationElement) {

// populate the paginationLinks set with the URL

// extracted from the href attribute of the HTML pagination element

$paginationLink = $paginationElement->getAttribute("href");

// avoid duplicates in the list of URLs

if (!in_array($paginationLink, $paginationLinks)) {

$paginationLinks[] = $paginationLink;

}

}

// print the paginationLinks array

print_r($paginationLinks);

Note that the find() function allows you to extract DOM elements based on a CSS selector. Also, considering that the pagination element is placed twice on the web page, you need to define custom logic to avoid duplicate elements in the $paginationLinks array.
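As an alternative to the in_array() check, the duplicates can also be removed in one step once all the href values are collected. The sketch below works on a plain array of sample URLs so that it runs without the HTML parser; `uniqueLinks()` is a hypothetical helper name.

```php
<?php
// Hypothetical helper: removes duplicate URLs while keeping first-seen order.
function uniqueLinks(array $hrefs): array
{
    // array_unique() keeps the first occurrence of each value;
    // array_values() reindexes the result from 0
    return array_values(array_unique($hrefs));
}

// sample hrefs standing in for the values read from the pagination elements,
// which appear twice on the page
$hrefs = [
    "https://scrapeme.live/shop/page/2/",
    "https://scrapeme.live/shop/page/3/",
    "https://scrapeme.live/shop/page/48/",
    "https://scrapeme.live/shop/page/2/",
    "https://scrapeme.live/shop/page/3/",
];

print_r(uniqueLinks($hrefs));
```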

If executed, this script would return:

Array (

[0] => https://scrapeme.live/shop/page/2/

[1] => https://scrapeme.live/shop/page/3/

[2] => https://scrapeme.live/shop/page/4/

[3] => https://scrapeme.live/shop/page/46/

[4] => https://scrapeme.live/shop/page/47/

[5] => https://scrapeme.live/shop/page/48/

)

As shown, all URLs follow the same structure and are characterized by a final number that specifies the pagination number. If you want to iterate over all pages, you only need the number associated with the last page. Retrieve it as follows:

// remove all non-numeric characters in the last element of

// the $paginationLinks array to retrieve the highest pagination number

$highestPaginationNumber = preg_replace("/\D/", "", end($paginationLinks));

$highestPaginationNumber will contain "48".
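Stripping every non-digit character works for these URLs, but it relies on the last array element being the highest page and would merge digits found elsewhere in a URL. A slightly more defensive variant, sketched here under the assumption that every pagination URL contains a "/page/N" segment, reads the number after "/page/" and keeps the maximum. The `highestPageNumber()` name is hypothetical.

```php
<?php
// Hypothetical helper: returns the highest page number found in the links,
// or 1 when no link contains a "/page/N" segment.
function highestPageNumber(array $paginationLinks): int
{
    $highest = 1;

    foreach ($paginationLinks as $link) {
        // capture the digits that directly follow "/page/"
        if (preg_match('#/page/(\d+)#', $link, $matches) === 1) {
            $highest = max($highest, (int) $matches[1]);
        }
    }

    return $highest;
}

$paginationLinks = [
    "https://scrapeme.live/shop/page/2/",
    "https://scrapeme.live/shop/page/48/",
    "https://scrapeme.live/shop/page/3/",
];

echo highestPageNumber($paginationLinks) . PHP_EOL;
```

Unlike the preg_replace() call, this version returns an integer, which is convenient for the loop condition used later.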

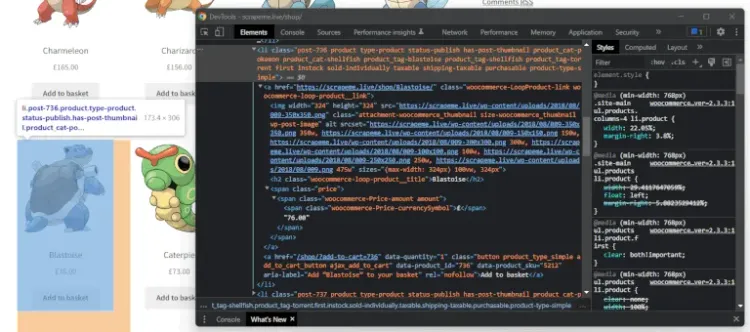

Now, let's retrieve the data associated with a single product. Again, right-click on a product and open the DevTools window with the "Inspect" option. That is what you should get:

As you can see, a product consists of a li.product HTML element containing a URL, an image, a name, and a price. This product information is placed in an a, mg, h2, and span HTML elements, respectively. You can extract this data with HtmlDomParseras below:

$productDataList = array();

// retrieve the list of products on the page

$productElements = $htmlDomParser->find("li.product");

foreach ($productElements as $productElement) {

// extract the product data

$url = $productElement->findOne("a")->getAttribute("href");

$image = $productElement->findOne("img")->getAttribute("src");

$name = $productElement->findOne("h2")->text;

$price = $productElement->findOne(".price span")->text;

// transform the product data into an associative array

$productData = array(

"url" => $url,

"image" => $image,

"name" => $name,

"price" => $price

);

$productDataList[] = $productData;

}

This logic extracts all product data on one page and saves it in the $productDataList array.

Now, you only have to iterate over each page and apply the scraping logic defined above:

// iterate over all "/shop/page/X" pages and retrieve all product data

for ($paginationNumber = 1; $paginationNumber <= $highestPaginationNumber; $paginationNumber++) {

$curl = curl_init();

curl_setopt($curl, CURLOPT_URL, "https://scrapeme.live/shop/page/$paginationNumber/");

curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);

// follow redirects, as in the first request

curl_setopt($curl, CURLOPT_FOLLOWLOCATION, true);

$pageHtml = curl_exec($curl);

curl_close($curl);

$paginationHtmlDomParser = HtmlDomParser::str_get_html($pageHtml);

// scraping logic...

}

Et voilà! You just learned how to build a simple web scraper in PHP!

If you want to look at the script's entire code, you can find it here. Run it, and you'll retrieve the following data:

[

{

"url": "https://scrapeme.live/shop/Bulbasaur/",

"image": "https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png",

"name": "Bulbasaur",

"price": "£63.00"

},

...

{

"url": "https://scrapeme.live/shop/Blacephalon/",

"image": "https://scrapeme.live/wp-content/uploads/2018/08/806-350x350.png",

"name": "Blacephalon",

"price": "£149.00"

}

]

Congratulations! You just extracted all the product data automatically!
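The JSON shown above can be produced from the $productDataList array with json_encode(). The sketch below is one possible way to do it: the `productsToJson()` helper and the products.json file name are assumptions, and the sample array contains a single product for brevity.

```php
<?php
// Hypothetical helper: serializes the scraped products as readable JSON.
function productsToJson(array $productDataList): string
{
    $json = json_encode(
        $productDataList,
        // keep "/" and "£" as-is instead of escaping them
        JSON_PRETTY_PRINT | JSON_UNESCAPED_SLASHES | JSON_UNESCAPED_UNICODE
    );

    if ($json === false) {
        throw new RuntimeException("JSON encoding failed: " . json_last_error_msg());
    }

    return $json;
}

// sample data standing in for the array filled by the scraping loop
$productDataList = [
    [
        "url" => "https://scrapeme.live/shop/Bulbasaur/",
        "image" => "https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png",
        "name" => "Bulbasaur",
        "price" => "£63.00",
    ],
];

// write the result to a file in the system's temporary directory
file_put_contents(
    sys_get_temp_dir() . "/products.json",
    productsToJson($productDataList)
);
```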

Avoiding Being Blocked

The example above uses a website designed for scraping. Extracting all data was a piece of cake, but don't be fooled by this! Scraping a website isn't always that easy, and your script may be intercepted and blocked. Find out how to prevent this from happening!

There are several possible defensive mechanisms to prevent scripts from accessing a website. These techniques try to recognize requests coming from non-human or malicious users based on their behavior and consequently block them.

Bypassing all these anti-scraping systems isn't always easy. However, you can usually avoid most of them with two solutions: common HTTP headers and web proxies. Let's now take a closer look at these two approaches.

1. Using Common HTTP Headers to Simulate a Real User

Many websites block requests that don't appear to come from real users. Browsers automatically set several HTTP headers on every request, with the exact set varying from vendor to vendor, so anti-scraping systems expect these headers to be present. You can therefore avoid many blocks by setting the appropriate HTTP headers.

Specifically, the most critical header you should always set is the User-Agent header (henceforth, UA). It's a string that identifies the application, operating system, vendor, and/or application version from which the HTTP request originates.

By default, cURL sends the curl/XX.YY.ZZ UA header, which makes the request identifiable as a script. You can manually set the UA header with cURL as follows:

curl_setopt($curl, CURLOPT_USERAGENT, "<USER_AGENT_STRING>");

Example:

curl_setopt($curl, CURLOPT_USERAGENT, "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36");

This line of code sets the UA string of Google Chrome 103 on Windows. It makes the cURL requests harder to recognize as coming from a script.
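If you keep several UA strings at hand, one option is to pick a different one for each request. The following is a minimal sketch of that idea, not something the steps above require: `pickUserAgent()` is a hypothetical helper, and the strings in the list are illustrative examples that you would replace with current ones.

```php
<?php
// Hypothetical helper: returns a random entry from a non-empty list of UA strings.
function pickUserAgent(array $userAgents): string
{
    // array_rand() returns a random key of the array
    return $userAgents[array_rand($userAgents)];
}

// illustrative UA strings; replace them with up-to-date values
$userAgents = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:102.0) Gecko/20100101 Firefox/102.0",
];

$curl = curl_init();
curl_setopt($curl, CURLOPT_URL, "https://scrapeme.live/shop/");
curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);
// use a randomly chosen UA for this request
curl_setopt($curl, CURLOPT_USERAGENT, pickUserAgent($userAgents));
curl_close($curl);
```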

You can find a list of valid, up-to-date, and trusted UA headers online. In most cases, setting the HTTP UA header is enough to avoid being blocked. If this isn't enough, you can send other HTTP headers with cURL as follows:

curl_setopt($curl, CURLOPT_HTTPHEADER,

array(

"<HTTP_HEADER_1>: <HEADER_VALUE>",

"<HTTP_HEADER_2>: <HEADER_VALUE>",

// ...

)

);

Example:

// set the Content-Language and Authorization HTTP headers

curl_setopt($curl, CURLOPT_HTTPHEADER,

array(

"Content-Language: es",

"Authorization: 32b108le1HBuSYHMuAcCrIjW72UTO3p5X78iIzq1CLuiHKgJ8fB2VdfmcS",

)

);
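Because CURLOPT_HTTPHEADER expects a list of "Name: value" strings, it can be convenient to keep the headers in an associative array and convert them just before the request. The sketch below shows this under a few assumptions: `toHeaderLines()` is a hypothetical helper, and the Accept and Accept-Language values are illustrative browser-like defaults.

```php
<?php
// Hypothetical helper: turns ["Name" => "value"] pairs into the
// "Name: value" strings that CURLOPT_HTTPHEADER expects.
function toHeaderLines(array $headers): array
{
    $lines = [];
    foreach ($headers as $name => $value) {
        $lines[] = "$name: $value";
    }
    return $lines;
}

// illustrative browser-like headers
$headers = [
    "Accept" => "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language" => "en-US,en;q=0.9",
];

$curl = curl_init();
curl_setopt($curl, CURLOPT_URL, "https://scrapeme.live/shop/");
curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);
curl_setopt($curl, CURLOPT_HTTPHEADER, toHeaderLines($headers));
curl_close($curl);
```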

Find out about what ZenRows has to offer when it comes to setting custom headers.

2. Using Web Proxies to Hide Your IP

Anti-scraping systems tend to block users who visit many pages in a short amount of time. The primary check looks at the IP address the requests come from: if the same IP makes many requests in a short time, it gets blocked. In other words, to prevent blocks on an IP, you must find a way to hide it.

One of the best ways to do it is through a proxy server. A web proxy is an intermediary server between your machine and the rest of the computers on the internet. When performing requests through a proxy, the target website will see the IP address of the proxy server instead of yours.

Several free proxies are available online, but most are short-lived, unreliable, and often unavailable. You can use them for testing. However, you shouldn't rely on them for a production script.

On the other hand, paid proxy services are more reliable and generally come with IP rotation. That means that the IP exposed by the proxy server will frequently change over time or with each request. This makes it harder for any single IP offered by the service to be banned, and even if that happens, you get a new IP quickly.

ZenRows supports premium proxies. Find out more about how you can use them to avoid blocks.

You can set a web proxy with cURL as follows:

curl_setopt($curl, CURLOPT_PROXY, "<PROXY_URL>");

curl_setopt($curl, CURLOPT_PROXYPORT, "<PROXY_PORT>");

curl_setopt($curl, CURLOPT_PROXYTYPE, "<PROXY_TYPE>");

Example:

curl_setopt($curl, CURLOPT_PROXY, "102.68.128.214");

curl_setopt($curl, CURLOPT_PROXYPORT, "8080");

curl_setopt($curl, CURLOPT_PROXYTYPE, CURLPROXY_HTTP);

With most web proxies, setting the URL of the proxy in the first line is enough. CURLOPT_PROXYTYPE can take the following values: CURLPROXY_HTTP (default), CURLPROXY_SOCKS4, CURLPROXY_SOCKS5, CURLPROXY_SOCKS4A, or CURLPROXY_SOCKS5_HOSTNAME.
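When you have more than one proxy available, the three options can be derived from a "host:port" string and applied in one call. This is a minimal sketch: `proxyOptions()` is a hypothetical helper, the "host:port" input format is an assumption, and the address is the sample one used above.

```php
<?php
// Hypothetical helper: builds the cURL proxy options from a "host:port" string.
function proxyOptions(string $proxy, int $type = CURLPROXY_HTTP): array
{
    $parts = explode(":", $proxy, 2);

    // reject strings without a numeric port
    if (count($parts) !== 2 || !ctype_digit($parts[1])) {
        throw new InvalidArgumentException("Expected host:port, got '$proxy'");
    }

    return [
        CURLOPT_PROXY => $parts[0],
        CURLOPT_PROXYPORT => (int) $parts[1],
        CURLOPT_PROXYTYPE => $type,
    ];
}

// pool of proxies to choose from (a single sample entry here)
$proxyPool = ["102.68.128.214:8080"];

$curl = curl_init();
curl_setopt($curl, CURLOPT_URL, "https://scrapeme.live/shop/");
curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);
// route this request through a randomly chosen proxy from the pool
curl_setopt_array($curl, proxyOptions($proxyPool[array_rand($proxyPool)]));
curl_close($curl);
```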

You just learned how to avoid being blocked. Let's now dig into how to make your script faster!

Parallel Scraping

Dealing with multi-threading in PHP is complex. Several libraries can support you, but the most effective solution to perform parallel scraping in PHP doesn't require any of them.

The idea behind this approach to parallel scraping is to make the scraping script ready to run as multiple instances. This is possible by using HTTP GET parameters.

Consider the paging example presented earlier. Instead of having a script that iterates over all pages, you can modify the script to work on smaller chunks and then launch several instances of the script in parallel.

All you have to do is pass some parameters to the script to define the boundaries of the chunk.

You can accomplish this by introducing two GET parameters as below:

$from = null;

$to = null;

if (isset($_GET["from"]) && is_numeric($_GET["from"])) {

$from = $_GET["from"];

}

if (isset($_GET["to"]) && is_numeric($_GET["to"])) {

$to = $_GET["to"];

}

if (is_null($from) || is_null($to) || $from > $to) {

die("Invalid from and to parameters!");

}

// scrape only the pagination pages whose number goes

// from "$from" to "$to"

for ($paginationNumber = $from; $paginationNumber <= $to; $paginationNumber++) {

// scraping logic...

}

// write the data scraped to a database/file

Now, you can launch several instances of the script by opening these links in your browser:

https://your-domain.com/scripts/scrapeme.live/scrape-products.php?from=1&to=5

https://your-domain.com/scripts/scrapeme.live/scrape-products.php?from=6&to=10

https://your-domain.com/scripts/scrapeme.live/scrape-products.php?from=41&to=45

https://your-domain.com/scripts/scrapeme.live/scrape-products.php?from=46&to=48

These instances will run in parallel and scrape the website simultaneously. You can find the entire code of this new version of the scraping script here.
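Rather than writing the chunk boundaries by hand, you could compute them from the highest pagination number. The sketch below generates the same kind of URLs as the ones listed above. The `chunkRanges()` helper and the chunk size of 5 are assumptions, and the domain is the placeholder used in this section.

```php
<?php
// Hypothetical helper: splits [firstPage, lastPage] into consecutive chunks
// of at most $chunkSize pages each.
function chunkRanges(int $firstPage, int $lastPage, int $chunkSize): array
{
    if ($chunkSize < 1) {
        throw new InvalidArgumentException("The chunk size must be at least 1");
    }

    $ranges = [];
    for ($from = $firstPage; $from <= $lastPage; $from += $chunkSize) {
        $ranges[] = [
            "from" => $from,
            // the last chunk may be shorter than the others
            "to" => min($from + $chunkSize - 1, $lastPage),
        ];
    }

    return $ranges;
}

$baseUrl = "https://your-domain.com/scripts/scrapeme.live/scrape-products.php";

// print one URL per chunk, e.g. "...?from=1&to=5"
foreach (chunkRanges(1, 48, 5) as $range) {
    echo $baseUrl . "?" . http_build_query($range) . PHP_EOL;
}
```

With 48 pages and a chunk size of 5, this yields ten ranges, the last one covering pages 46 to 48.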

And there you have it! You have just learned to extract data from a website in parallel through web scraping.

Being able to scrape a website in parallel is already a great improvement, but there are many other advanced techniques you can adopt in your PHP web scraper. Let's find out how to take your web scraping script to the next level.

Advanced Techniques

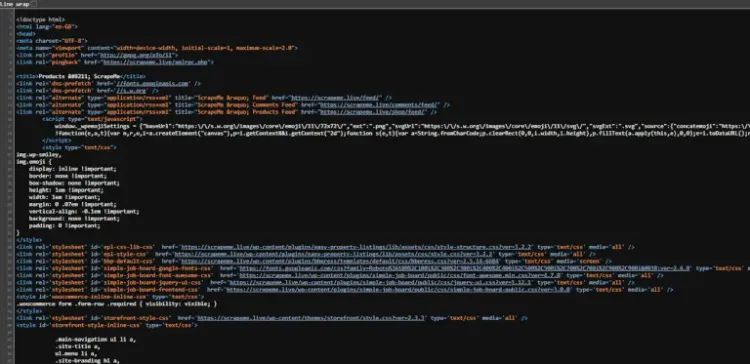

Remember that not all data of interest on a web page are directly displayed in the browser. A web page also consists of metadata and hidden elements. To access this data, right-click on an empty section of the web page and click on "View Page Source."

Here you can see the full DOM of a web page, including hidden elements. In detail, you can find metadata about the web page in the meta HTML tags. In addition, important hidden data may be stored in <input type="hidden"/> elements.

Similarly, some data may already be present on the page in hidden HTML elements that JavaScript shows only when a particular event occurs. Even though you can't see the data on the page, it's still part of the DOM. Therefore, you can retrieve these hidden HTML elements with HtmlDomParser as you would with visible nodes.
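As an illustration of reading hidden fields, the sketch below collects the name and value of every hidden input in an HTML string. To stay self-contained, it uses PHP's built-in DOMDocument and DOMXPath classes rather than HtmlDomParser. The `hiddenInputs()` helper and the sample markup are made up for this example.

```php
<?php
// Hypothetical helper: returns ["name" => "value"] for each hidden input.
// Uses PHP's built-in DOM classes instead of HtmlDomParser.
function hiddenInputs(string $html): array
{
    // collect parser warnings internally instead of printing them
    $previous = libxml_use_internal_errors(true);

    $document = new DOMDocument();
    $document->loadHTML($html);

    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $xpath = new DOMXPath($document);
    $values = [];

    // select every <input> element whose type attribute is "hidden"
    foreach ($xpath->query('//input[@type="hidden"]') as $input) {
        $values[$input->getAttribute("name")] = $input->getAttribute("value");
    }

    return $values;
}

// sample markup standing in for a downloaded page
$html = '<form>'
    . '<input type="hidden" name="csrf_token" value="abc123"/>'
    . '<input type="text" name="query" value="pikachu"/>'
    . '</form>';

print_r(hiddenInputs($html));
```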

Also, keep in mind that a web page is more than its source code. Web pages can make requests in the browser to retrieve data asynchronously via AJAX and update their DOM accordingly. These AJAX calls generally provide valuable data, and you might need to call them from your web scraping script.

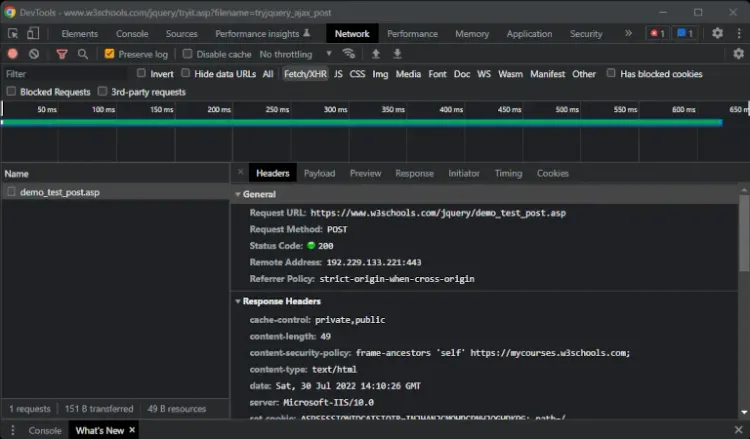

To sniff these calls, you need to use the DevTools window of your browser. Right-click on a blank section of the website, select "Inspect", and reach the "Network" tab. In the "Fetch/XHR" tab, you can see the list of AJAX calls performed by the web page, as in the example below.

Explore all the internal tabs of the selected AJAX request to understand how to perform the AJAX call. Specifically, you can replicate this POST AJAX call with cURL as below:

$curl = curl_init();

curl_setopt($curl, CURLOPT_URL, "https://www.w3schools.com/jquery/demo_test_post.asp");

// specify that the cURL request is a POST

curl_setopt($curl, CURLOPT_POST, true);

curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);

// define the body of the request

curl_setopt($curl, CURLOPT_POSTFIELDS,

// http_build_query encodes the fields as

// application/x-www-form-urlencoded, like a regular HTML form.

// For a JSON request, pass a JSON string instead

http_build_query(

array(

"name" => "Donald Duck",

"city" => "Duckburg"

)

)

);

// replicate the AJAX call

$result = curl_exec($curl);

// release the cURL resources

curl_close($curl);

Congratulations! You just performed a POST call with cURL!
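When the AJAX call you sniffed sends JSON rather than form fields, the body is a JSON string and the Content-Type header must say so. The following sketch shows one way to prepare such a request. The `jsonPostOptions()` helper and the endpoint URL are hypothetical, and the curl_exec() call is left commented out because the endpoint doesn't exist.

```php
<?php
// Hypothetical helper: builds the cURL options for a POST request with a JSON body.
function jsonPostOptions(string $url, array $payload): array
{
    return [
        CURLOPT_URL => $url,
        CURLOPT_POST => true,
        CURLOPT_RETURNTRANSFER => true,
        // send the payload as a JSON string, not as form fields
        CURLOPT_POSTFIELDS => json_encode($payload),
        // tell the server how to interpret the body
        CURLOPT_HTTPHEADER => ["Content-Type: application/json"],
    ];
}

$curl = curl_init();

curl_setopt_array($curl, jsonPostOptions(
    // placeholder endpoint, replace it with the URL seen in the "Network" tab
    "https://example.com/api/endpoint",
    ["name" => "Donald Duck", "city" => "Duckburg"]
));

// $result = curl_exec($curl);

curl_close($curl);
```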

Headless Browsers

Sniffing and replicating AJAX calls is helpful for programmatically retrieving data that a website loads in response to user interaction. This data isn't part of the source code of a web page and can't be found in the HTML document obtained from a standard GET cURL request.

However, replicating all possible interactions, sniffing the AJAX calls, and calling them in your script is a cumbersome approach. Sometimes, you need to define a script that can interact with the page via JavaScript as a human user would. You can achieve this with a headless browser.

If you aren't familiar with this concept, a headless browser is a web browser without a graphical user interface that offers automated control of a web page via code. The most popular libraries in PHP providing headless browser functionality are chrome-php and Selenium WebDriver. For more info, visit our guide on Web Scraping with Selenium.

Also, ZenRows offers web browser capabilities. Learn more about how to extract dynamically loaded data.

Other Libraries

Other useful PHP libraries you might adopt when it comes to web scraping are:

- Guzzle: an advanced HTTP client that makes it possible to send HTTP requests and is trivial to integrate with web services. You can configure proxies in Guzzle and use it as an alternative to cURL.

- Goutte: a web scraping library that provides an advanced API for crawling websites and extracting data from their HTML web pages. Since it also includes an HTTP client, you can use it as an alternative to both voku/simple_html_dom and cURL.

Conclusion

Here, you learned everything you should know about performing web scraping in PHP, from basic crawling to advanced techniques. As shown above, building a web scraper in PHP that can crawl a website and automatically extract data isn't difficult.

All you need are the correct libraries, and here we have looked at some of the most popular ones.

Also, your web scraper should be able to bypass anti-bots like Cloudflare and may have to retrieve hidden data or interact with the web page like a human user. In this tutorial, you learned how to do all this as well.

If you liked this, take a look at the JavaScript web scraping guide.

Thanks for reading! We hope that you found this tutorial helpful. Feel free to contact us with questions, comments, or suggestions.
