Web scraping in R is one of the most popular methods people use to extract data from a website. In this step-by-step tutorial, you'll learn how to do web scraping in R thanks to libraries like rvest and RSelenium.

Is R Good for Web Scraping?

Yes, it is! R is an advanced programming language designed for data science that has many data-oriented libraries to support your web scraping goals.

Prerequisites

Set up the Environment

Here are the tools we'll use in this tutorial:

- R 4+: any version of R greater than or equal to 4 will be fine. In this R web scraping tutorial, we used the R 4.2.2 version since it's the latest at the time of writing.

- An R IDE: PyCharm with the R Language for IntelliJ plugin installed and enabled is a great option to build an R web scraper. Similarly, the free Visual Studio Code IDE with the REditorSupport extension will do.

If you don't have these tools installed, download them from their official websites. Install them using the official guides and you'll have everything you need to follow this step-by-step web scraping R guide.

Set Up an R Project in PyCharm

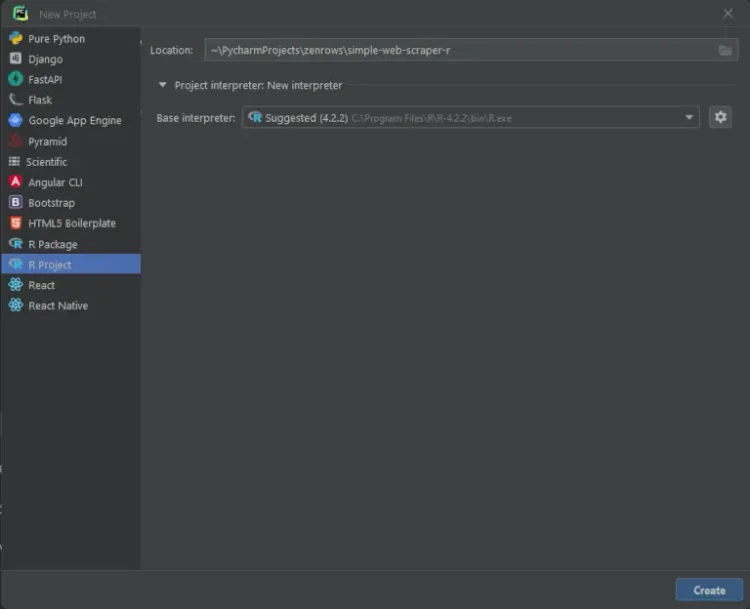

After setting up the environment, initialize an R web scraping project in PyCharm with the following three steps:

- Launch PyCharm and click on the "File > New > Project..." menu option.

- In the left sidebar of the "New Project" pop-up window, select "R Project".

- Give your R project a name and be sure to select an R interpreter as in the image below:

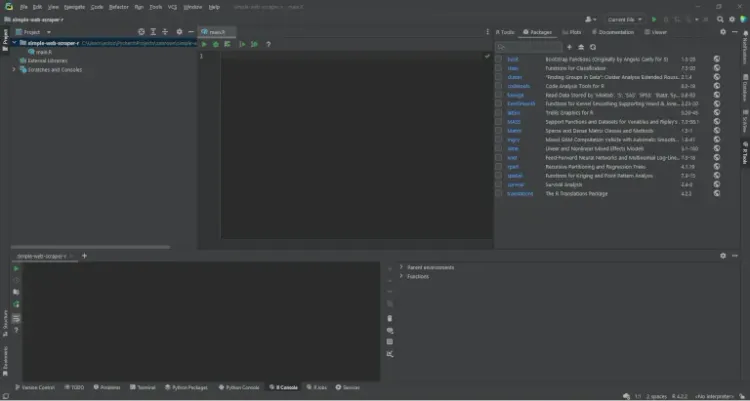

Click "Create" and wait for PyCharm to initialize your R web project. You should now have access to the following project:

PyCharm will create a blank main.R file for you. Note the R Tools section and R Console tab provided by PyCharm since they give you access to package management in R and the R shell, respectively.

We've got the basics out of the way, so let's get into the details of how to build a data scraper in R.

How to Scrape a Website in R

For this R data scraping tutorial, we'll use ScrapeMe as our target site.

Note that ScrapeMe simply contains a paginated list of Pokemon-inspired elements. The R scraper you're about to learn how to build will be able to crawl the complete website and extract all that product data.

Step 1: Install rvest

rvest is an R library that helps you scrape data from web pages through its advanced R web scraping API. This enables you to download an HTML document, parse it, select HTML elements and extract data from them.

To install rvest, open the R Console in PyCharm and launch the command below:

install.packages("rvest")

Wait for the installation process to complete. It may take a few seconds, especially if R also needs to install its dependencies. Once installed, load it into your main.R file:

library(rvest)

If PyCharm doesn't report errors, the installation process ended with success.

Step 2: Retrieve the HTML Page

Download the HTML document from its remote URL with a single line of rvest code:

# retrieving the target web page

document <- read_html("https://scrapeme.live/shop")

The read_html() function retrieves the HTML downloaded using the URL passed as a parameter, then parses it and assigns the resulting data structure to the document variable.
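read_html() is not limited to remote URLs: it also accepts a local file path or a literal HTML string, which is handy for trying selectors offline. Here is a minimal sketch, assuming a made-up HTML snippet that stands in for a downloaded page:

```r
library(rvest)

# hypothetical HTML snippet standing in for a downloaded page
html_source <- "<html><body><h1>Products</h1></body></html>"

# saving the snippet to a temporary file to mimic a page stored on disk
html_file <- tempfile(fileext = ".html")
writeLines(html_source, html_file)

# parsing the same content from a file path and from a string
document_from_file <- read_html(html_file)
document_from_string <- read_html(html_source)

# both calls return a document that rvest functions can query
page_heading <- document_from_file %>%
  html_element("h1") %>%
  html_text2()

print(page_heading)
```

Working on a saved copy like this lets you refine your selectors without sending repeated requests to the target site.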

Step 3: Identify and Select the Most Important HTML elements

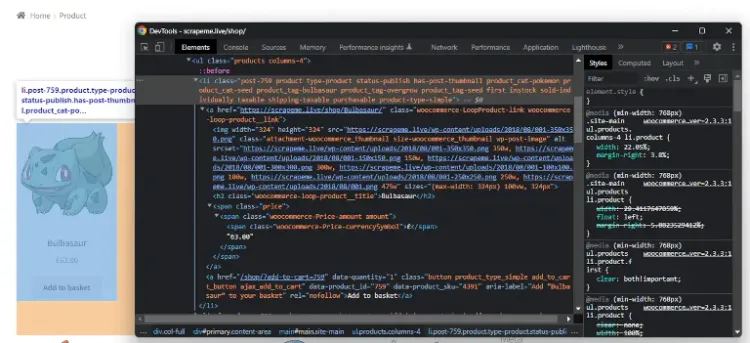

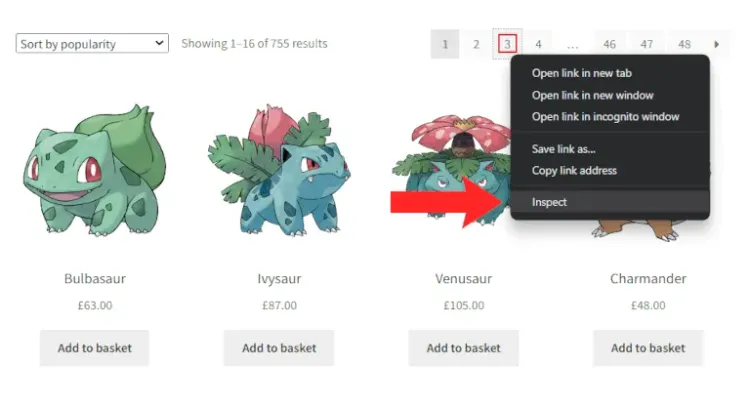

The goal of this R data scraping tutorial is to extract all product data, therefore the product HTML nodes are the most important elements. To select them, right-click on a product HTML element and choose the "Inspect" option. This will launch the following DevTools pop-up:

Notice from the HTML code that a li.product HTML element includes:

- An a that stores the product URL.

- An img that contains the product image.

- An h2 that keeps the product name.

- A span that stores the product price.

Select all product HTML elements contained in the target web page in rvest with:

# selecting the list of product HTML elements

html_products <- document %>% html_elements("li.product")

This executes the html_elements() rvest function on document by using the R %>% pipe operator. Specifically, html_elements() returns the list of HTML elements found applying a CSS selector or XPath expression.
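To see the two selection strategies side by side, the sketch below runs a CSS selector and an equivalent XPath expression against a small inline document. The markup is hypothetical and only mimics the structure of the product list:

```r
library(rvest)

# hypothetical markup that mimics the product list structure
document <- read_html('
  <ul class="products">
    <li class="product"><h2>Bulbasaur</h2></li>
    <li class="product"><h2>Ivysaur</h2></li>
    <li class="banner">Sale</li>
  </ul>
')

# selecting the product nodes with a CSS selector
products_css <- document %>% html_elements("li.product")

# selecting the same nodes with an equivalent XPath expression
products_xpath <- document %>% html_elements(xpath = "//li[@class='product']")

print(length(products_css))
print(length(products_xpath))
```

Both calls select the same two product nodes and skip the banner item. CSS selectors are usually shorter, while XPath can express conditions that CSS cannot.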

Given a single HTML product, select all four HTML nodes of interest with:

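# taking the first product as an example of a single HTML product

html_product <- html_products[[1]]

# selecting the "a" HTML element storing the product URL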

a_element <- html_product %>% html_element("a")

# selecting the "img" HTML element storing the product image

img_element <- html_product %>% html_element("img")

# selecting the "h2" HTML element storing the product name

h2_element <- html_product %>% html_element("h2")

# selecting the "span" HTML element storing the product price

span_element <- html_product %>% html_element("span")

Step 4: Extract the Data from the HTML Elements

R is inefficient when it comes to appending elements to a list, therefore you should avoid iterating over each HTML product. Instead, use rvest since it allows you to extract data from a list of HTML elements with a single operation:

# extracting data from the list of products and storing the scraped data into 4 lists

product_urls <- html_products %>%

html_element("a") %>%

html_attr("href")

product_images <- html_products %>%

html_element("img") %>%

html_attr("src")

product_names <- html_products %>%

html_element("h2") %>%

html_text2()

product_prices <- html_products %>%

html_element("span") %>%

html_text2()

rvest applies the last function of the pipe chain to each HTML element selected with html_element() from html_products. html_attr() returns the string stored in a single attribute. Similarly, html_text2() returns the text in an HTML element as it looks in a browser.
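One useful property of this vectorized approach is that html_element() returns exactly one result per input element, so the output stays aligned even when a product lacks a node. A small sketch on hypothetical markup, where the second product has no image:

```r
library(rvest)

# hypothetical product list in which the second product has no image
document <- read_html('
  <ul>
    <li class="product"><img src="/img/1.png"><h2>Bulbasaur</h2></li>
    <li class="product"><h2>Ivysaur</h2></li>
  </ul>
')

html_products <- document %>% html_elements("li.product")

# html_element() yields one result per product,
# so a missing <img> becomes NA instead of being dropped
product_images <- html_products %>%
  html_element("img") %>%
  html_attr("src")

product_names <- html_products %>%
  html_element("h2") %>%
  html_text2()

print(product_images)
print(product_names)
```

Because both vectors have the same length, they can be combined into a dataframe without misaligned rows.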

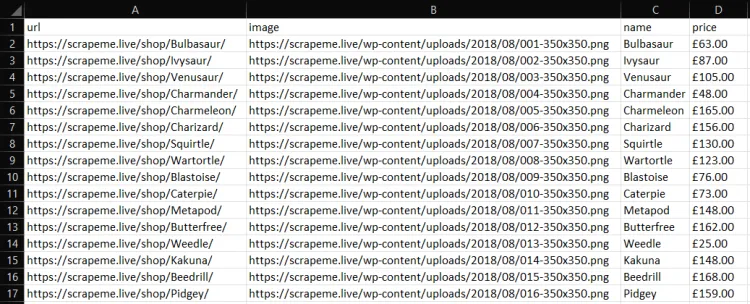

So product_urls, product_images, product_names and product_prices will store the scraped data for each of the 4 attributes of a product element. The extracted data is stored in 4 lists, so let's convert them into a more manageable dataframe:

# converting the lists containing the scraped data into a dataframe

products <- data.frame(

product_urls,

product_images,

product_names,

product_prices

)

Note that the R data.frame() function aggregates all scraped data into the products variable.
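Scraped prices arrive as text, so a common follow-up is to derive a numeric column from them. The sketch below uses hypothetical values with an assumed currency prefix, and names the columns while building the dataframe:

```r
# hypothetical scraped values, shaped like the vectors built in this step
product_names <- c("Bulbasaur", "Ivysaur")
product_prices <- c("£63.00", "£87.00")

# naming the columns directly while building the dataframe
products <- data.frame(
  name = product_names,
  price = product_prices,
  stringsAsFactors = FALSE
)

# removing everything except digits and the decimal point,
# then converting the remaining text to numeric
products$price_value <- as.numeric(gsub("[^0-9.]", "", products$price))

print(products)
```

Keeping the original price text next to the numeric column makes it easy to spot parsing mistakes later.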

Congrats! You've just learned how to scrape a web page in R using rvest. Go ahead and export the dataframe to a CSV file in the step below.

Step 5: Export the Scraped Data to CSV

Before converting the products variable into CSV format, change its column names using names(). It allows you to change the names associated with every dataframe component so that the exported CSV file will be easier to read.

# changing the column names of the data frame before exporting it into CSV

names(products) <- c("url", "image", "name", "price")

Export the dataframe object to a CSV file using the write.csv() function, which instructs your R web crawler to produce a products.csv file containing the scraped data.

# export the data frame containing the scraped data to a CSV file

write.csv(products, file = "./products.csv", fileEncoding = "UTF-8")
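By default, write.csv() also writes the row names as an extra first column. Passing row.names = FALSE, as the complete script in the next step does, avoids that. The round trip below is a small sketch on a hypothetical dataframe, written to a temporary file:

```r
# hypothetical dataframe standing in for the scraped products
products <- data.frame(
  name = c("Bulbasaur", "Ivysaur"),
  price = c("63.00", "87.00"),
  stringsAsFactors = FALSE
)

# writing to a temporary file so the example leaves no files behind
csv_path <- tempfile(fileext = ".csv")

# row.names = FALSE prevents write.csv() from adding an index column
write.csv(products, file = csv_path, fileEncoding = "UTF-8", row.names = FALSE)

# reading the file back to check that columns and rows survived
products_from_csv <- read.csv(csv_path, fileEncoding = "UTF-8", stringsAsFactors = FALSE)

print(names(products_from_csv))
print(nrow(products_from_csv))
```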

If you run your script in PyCharm, you'll find a products.csv file containing the scraped data in the root folder.

Well done!

Step 6: Put It All Together

This is what the final R data scraper looks like:

library(rvest)

httr::set_config(httr::user_agent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"))

# initializing the lists that will store the scraped data

product_urls <- list()

product_images <- list()

product_names <- list()

product_prices <- list()

# initializing the list of pages to scrape with the first pagination links

pages_to_scrape <- list("https://scrapeme.live/shop/page/1/")

# initializing the list of pages discovered

pages_discovered <- pages_to_scrape

# current iteration

i <- 1

# max pages to scrape

limit <- 1

# until there is still a page to scrape

while (length(pages_to_scrape) != 0 && i <= limit) {

# getting the current page to scrape

page_to_scrape <- pages_to_scrape[[1]]

# removing the page to scrape from the list

pages_to_scrape <- pages_to_scrape[-1]

# retrieving the current page to scrape

document <- read_html(page_to_scrape)

# extracting the list of pagination links

new_pagination_links <- document %>%

html_elements("a.page-numbers") %>%

html_attr("href")

# iterating over the list of pagination links

for (new_pagination_link in new_pagination_links) {

# if the web page discovered is new and should be scraped

if (!(new_pagination_link %in% pages_discovered) && !(new_pagination_link %in% page_to_scrape)) {

pages_to_scrape <- append(new_pagination_link, pages_to_scrape)

}

# discovering new pages

pages_discovered <- append(new_pagination_link, pages_discovered)

}

# removing duplicates from pages_discovered

pages_discovered <- pages_discovered[!duplicated(pages_discovered)]

# selecting the list of product HTML elements

html_products <- document %>% html_elements("li.product")

# appending the new results to the lists of scraped data

product_urls <- c(

product_urls,

html_products %>%

html_element("a") %>%

html_attr("href")

)

product_images <- c(

product_images,

html_products %>%

html_element("img") %>%

html_attr("src"))

product_names <- c(

product_names,

html_products %>%

html_element("h2") %>%

html_text2()

)

product_prices <- c(

product_prices,

html_products %>%

html_element("span") %>%

html_text2()

)

# incrementing the iteration counter

i <- i + 1

}

# converting the lists containing the scraped data into a data.frame

products <- data.frame(

unlist(product_urls),

unlist(product_images),

unlist(product_names),

unlist(product_prices)

)

# changing the column names of the data frame before exporting it into CSV

names(products) <- c("url", "image", "name", "price")

# export the data frame containing the scraped data to a CSV file

write.csv(products, file = "./products.csv", fileEncoding = "UTF-8", row.names = FALSE)

Advanced Techniques in Web Scraping in R

You just learned the basics of web scraping in R. It's time to dig into more advanced techniques.

Avoid Blocks

Note that there are several anti-scraping techniques your target website may implement. Many websites block requests based on their HTTP headers. For example, they tend to block HTTP requests that don't have a valid User-Agent header. We recommend ZenRows to get around all these challenges.

As explained in the official documentation, rvest uses httr behind the scenes, so you can set a global User-Agent for rvest by changing the httr config as follows:

httr::set_config(httr::user_agent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"))

This line sets an HTTP user agent for URL-based requests with httr::set_config() and httr::user_agent().
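If you would rather not depend on a global setting, one option is to set the header on an individual request and hand the response to read_html(). The sketch below assumes the httr package is installed. fetch_document() is a hypothetical helper name, not part of rvest or httr:

```r
library(rvest)

# the User-Agent string used throughout this tutorial
user_agent_string <- "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"

# hypothetical helper: downloads a page with an explicit User-Agent and parses it
fetch_document <- function(page_url, agent = user_agent_string) {
  # performing the GET request with the User-Agent set for this call only
  response <- httr::GET(page_url, httr::user_agent(agent))

  # raising an R error on 4xx/5xx responses instead of parsing an error page
  httr::stop_for_status(response)

  # read_html() accepts an httr response object
  read_html(response)
}

# usage (requires network access):
# document <- fetch_document("https://scrapeme.live/shop")
```

Setting the header per request makes it explicit which requests carry it, and stop_for_status() surfaces blocked requests as errors rather than silently returning an error page.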

Keep in mind that this is just a basic way to avoid being blocked by anti-scraping solutions. Learn more about anti-scraping techniques.

Web Crawling in R

The target website consists of several web pages. So, to scrape the entire website and retrieve all data, you have to extract the list of all pagination links. This is basically what web crawling is about.

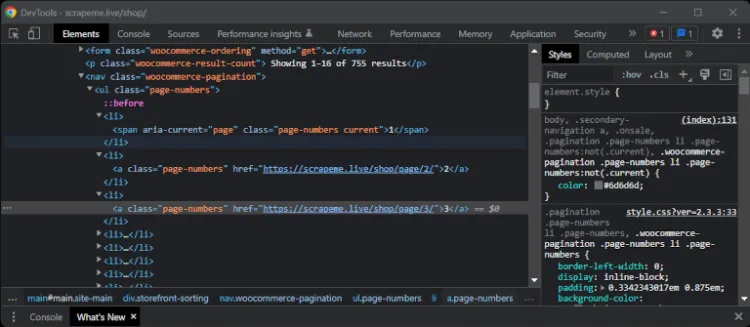

To get started, right-click on the pagination number HTML element and select "Inspect".

Your browser will open the following DevTools window:

By inspecting the pagination HTML elements, you'll notice that they all share the same page-numbers class. So, retrieve all pagination links using the html_elements() function:

pagination_links <- document %>%

html_elements("a.page-numbers") %>%

html_attr("href")

The goal is to visit all web pages and, at the same time, you may want your R crawler to stop programmatically. The best way to do this is by introducing a limit variable:

library(rvest)

httr::set_config(httr::user_agent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"))

# initializing the lists that will store the scraped data

product_urls <- list()

product_images <- list()

product_names <- list()

product_prices <- list()

# initializing the list of pages to scrape with the first pagination links

pages_to_scrape <- list("https://scrapeme.live/shop/page/1/")

# initializing the list of pages discovered

pages_discovered <- pages_to_scrape

# current iteration

i <- 1

# max pages to scrape

limit <- 5

# until there is still a page to scrape

while (length(pages_to_scrape) != 0 && i <= limit) {

# getting the current page to scrape

page_to_scrape <- pages_to_scrape[[1]]

# removing the page to scrape from the list

pages_to_scrape <- pages_to_scrape[-1]

# retrieving the current page to scrape

document <- read_html(page_to_scrape)

# extracting the list of pagination links

new_pagination_links <- document %>%

html_elements("a.page-numbers") %>%

html_attr("href")

# iterating over the list of pagination links

for (new_pagination_link in new_pagination_links) {

# if the web page discovered is new and should be scraped

if (!(new_pagination_link %in% pages_discovered) && !(new_pagination_link %in% page_to_scrape)) {

pages_to_scrape <- append(new_pagination_link, pages_to_scrape)

}

# discovering new pages

pages_discovered <- append(new_pagination_link, pages_discovered)

}

# removing duplicates from pages_discovered

pages_discovered <- pages_discovered[!duplicated(pages_discovered)]

# scraping logic...

# incrementing the iteration counter

i <- i + 1

}

# export logic...

The R web scraping script crawls a web page, looks for new pagination links and fills the crawling queue. The target website has 48 pages, so assign 48 to limit to scrape the entire website. At the end of the while loop, pages_discovered will store all 48 pagination URLs.

Run the R web scraper in PyCharm and it'll produce a products.csv file. This will contain all 755 Pokemon-inspired products.
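The queue-and-limit logic can also be tried offline. The sketch below is a simplified variant, not the script above: it replaces HTTP requests with a hypothetical site map, and uses character vectors with setdiff() to keep the queue free of duplicates:

```r
# hypothetical site map: each page maps to the pagination links found on it
site_links <- list(
  "/page/1/" = c("/page/2/", "/page/3/"),
  "/page/2/" = c("/page/1/", "/page/3/", "/page/4/"),
  "/page/3/" = c("/page/2/", "/page/4/"),
  "/page/4/" = c("/page/3/")
)

# crawls the simulated site, visiting at most `limit` pages
crawl <- function(start_page, limit) {
  pages_to_scrape <- start_page
  pages_discovered <- start_page
  pages_visited <- character(0)

  while (length(pages_to_scrape) > 0 && length(pages_visited) < limit) {
    # taking the next page from the front of the queue
    page_to_scrape <- pages_to_scrape[1]
    pages_to_scrape <- pages_to_scrape[-1]
    pages_visited <- c(pages_visited, page_to_scrape)

    # keeping only the links that were never seen before
    new_links <- setdiff(site_links[[page_to_scrape]], pages_discovered)

    # queueing the new links and remembering them as discovered
    pages_to_scrape <- c(pages_to_scrape, new_links)
    pages_discovered <- c(pages_discovered, new_links)
  }

  list(visited = pages_visited, discovered = pages_discovered)
}

result <- crawl("/page/1/", limit = 2)
print(result$visited)
print(result$discovered)
```

With limit = 2, the crawler visits only two pages but has already discovered all four. This is why pages_discovered can be larger than the number of pages actually scraped.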

Congratulations! You just crawled all the product data on ScrapeMe using R!

Parallel Web Scraping in R

If your target site has many pages or the server is slow to respond, data scraping in R can take a long time. Luckily, R supports parallelization! Let's learn how to do parallel web scraping using R.

If you aren't familiar with this, parallel scraping is about scraping many pages simultaneously. This means downloading, parsing and extracting data from several web pages at the same time, making the scraping process faster.

To get started, import the parallel package in your R data scraper:

library(parallel)

Now, use the utility functions exposed by this R library to perform parallel computation.

Assume you already have the list of web pages you want to scrape:

pages_to_scrape <- list(

"https://scrapeme.live/shop/page/1/",

"https://scrapeme.live/shop/page/2/",

"https://scrapeme.live/shop/page/3/",

# ...

"https://scrapeme.live/shop/page/48/"

)

Build a parallel web scraper with R as follows:

scrape_page <- function(page_url) {

# loading the rvest library

library(rvest)

# setting the user agent

httr::set_config(httr::user_agent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"))

# retrieving the current page to scrape

document <- read_html(page_url)

html_products <- document %>% html_elements("li.product")

product_urls <- html_products %>%

html_element("a") %>%

html_attr("href")

product_images <- html_products %>%

html_element("img") %>%

html_attr("src")

product_names <-

html_products %>%

html_element("h2") %>%

html_text2()

product_prices <-

html_products %>%

html_element("span") %>%

html_text2()

products <- data.frame(

unlist(product_urls),

unlist(product_images),

unlist(product_names),

unlist(product_prices)

)

names(products) <- c("url", "image", "name", "price")

return(products)

}

# automatically detecting the number of cores

num_cores <- detectCores()

# creating a parallel cluster

cluster <- makeCluster(num_cores)

# execute scrape_page() on each element of pages_to_scrape

# in parallel

scraped_data_list <- parLapply(cluster, pages_to_scrape, scrape_page)

# merging the list of dataframes into

# a single dataframe

products <- do.call("rbind", scraped_data_list)

# stopping the cluster to release the worker processes

stopCluster(cluster)

This R crawler script defines a scrape_page() function that is responsible for scraping a single web page. Then, it initializes an R cluster with makeCluster() to create a set of copies of R instances running in parallel and communicating through sockets.

Use parLapply() to apply the scrape_page() function over the list of web pages. Note that parLapply() is the parallel version of lapply(). R will run each scrape_page() call in one of the cluster's R instances.

So each parallel instance will have access only to what is defined inside scrape_page() and, for this reason, the first line of scrape_page() is this:

# loading the rvest library

library(rvest)

If you omitted this line, you'd get the could not find function "read_html" error. This proves that each R instance is limited to what is defined inside scrape_page().
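An alternative to calling library() inside the worker function is to prepare every worker once with clusterEvalQ() from the parallel package. The sketch below assumes a two-worker cluster and a hypothetical parse_title() helper that parses inline HTML strings instead of live pages:

```r
library(parallel)

# hypothetical helper that relies on rvest being loaded on the worker
parse_title <- function(html_source) {
  html_source %>%
    read_html() %>%
    html_element("h1") %>%
    html_text2()
}

# hypothetical inline pages standing in for downloaded documents
pages <- list(
  "<html><body><h1>Page 1</h1></body></html>",
  "<html><body><h1>Page 2</h1></body></html>"
)

# creating a small cluster with two workers
cluster <- makeCluster(2)

# loading rvest once on every worker instead of inside the function
invisible(clusterEvalQ(cluster, library(rvest)))

# running parse_title() on each page in parallel
titles <- parLapply(cluster, pages, parse_title)

# releasing the worker processes
stopCluster(cluster)

print(unlist(titles))
```

This keeps the scraping function free of setup code, at the cost of one extra step when the cluster is created.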

parLapply() returns a list of dataframes, each of which contains the data scraped from a single web page. Then, do.call() flattens out the list of dataframes by applying rbind() to the list. In detail, rbind() allows you to merge different dataframes into a single dataframe, and products will contain the data related to all scraped products in a single dataframe.
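The merging step can be checked in isolation. In this sketch, two hypothetical per-page dataframes stand in for the results returned by the workers:

```r
# hypothetical per-page results, shaped like the dataframes returned by scrape_page()
scraped_data_list <- list(
  data.frame(name = c("Bulbasaur", "Ivysaur"), price = c("63.00", "87.00")),
  data.frame(name = "Venusaur", price = "105.00")
)

# do.call() passes every dataframe in the list to rbind() as a separate argument
products <- do.call("rbind", scraped_data_list)

print(nrow(products))
print(as.character(products$name))
```

Note that rbind() expects all dataframes to share the same column names, which holds here because every page goes through the same function.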

If you implement this parallelization logic in your R data scraping script, you'll get a significant increase in performance. On the other hand, the R web scraper will require more memory resources.

Perfect! You now know how to do parallel web scraping using R! But the tutorial is not finished yet!

Scraping Dynamic Content Websites in R

In a static content website, the HTML document returned by the server already contains all the content in its source code. This means that you don't need to render the document in a web browser to retrieve data from it.

But web pages are much more than their HTML source code. When rendered in a browser, a web page can perform HTTP requests through AJAX and run JavaScript, therefore web pages dynamically retrieve data from the Web and change the DOM accordingly.

Many websites rely on API calls to perform front-end operations or asynchronously retrieve data. In other words, the HTML source code doesn't include this valuable data. The most direct way to scrape it is by rendering the target web page in a headless browser.

A headless browser lets you load a web page in a browser with no GUI. So, it enables you to instruct the browser to perform operations and replicate user interactions. Let's now see how to use a headless browser for web scraping in R.

Web Scraping with a Headless Browser in R

Using a headless browser, you can build an R web scraper that is capable of interacting with a website via JavaScript as a human user would. The most popular headless browser library in R is RSelenium, and we'll use it in this tutorial.

Install RSelenium by launching the command below in your R Console:

install.packages("RSelenium")

After installing it, run the code below to extract the data from ScrapeMe:

# load the RSelenium library

library(RSelenium)

# load the Chrome driver

# TODO: change the chromever parameter with your Chrome version

driver <- rsDriver(

browser = c("chrome"),

chromever = "108.0.5359.22",

verbose = FALSE,

# enabling the Chrome --headless mode

extraCapabilities = list("chromeOptions" = list(args = list("--headless")))

)

web_driver <- driver[["client"]]

# navigating to the target web page in the headless Chrome instance

web_driver$navigate("https://scrapeme.live/shop/")

# initializing the lists that will contain the scraped data

product_urls <- list()

product_images <- list()

product_names <- list()

product_prices <- list()

# retrieving all product HTML elements

html_products <- web_driver$findElements(using = "css selector", value = "li.product")

# iterating over the product list

for (html_product in html_products) {

# scraping the data of interest from each product while populating the 4 scraping data lists

product_urls <- append(

product_urls,

html_product$findChildElement(using = "css selector", value = "a")$getElementAttribute("href")[[1]]

)

product_images <- append(

product_images,

html_product$findChildElement(using = "css selector", value = "img")$getElementAttribute("src")[[1]]

)

product_names <- append(

product_names,

html_product$findChildElement(using = "css selector", value = "h2")$getElementText()[[1]]

)

product_prices <- append(

product_prices,

html_product$findChildElement(using = "css selector", value = "span")$getElementText()[[1]]

)

}

# converting the lists containing the scraped data into a data.frame

products <- data.frame(

unlist(product_urls),

unlist(product_images),

unlist(product_names),

unlist(product_prices)

)

# changing the column names of the data frame before exporting it into CSV

names(products) <- c("url", "image", "name", "price")

# export the data frame containing the scraped data to a CSV file

write.csv(products, file = "./products.csv", fileEncoding = "UTF-8", row.names = FALSE)

Note that the findElements() method allows you to select HTML elements with RSelenium. Then, call findChildElement() to select a child element from an RSelenium HTML element. Finally, use methods like getElementAttribute() and getElementText() to extract data from the selected HTML elements.

The approach to web scraping in R using rvest and RSelenium is very similar. The main difference is that RSelenium runs the scraping process in a browser, giving you access to all browser features.

So if you want to scrape data from a new page with rvest, you need to perform a new HTTP request first. In contrast, RSelenium allows you to directly navigate to a new web page in the browser as follows:

pagination_element <- web_driver$findElement(using = "css selector", value = "a.page-numbers")

# navigating to a new web page

pagination_element$clickElement()

# waiting for the page to load...

print(web_driver$getTitle()[[1]]) # prints "Products – Page 2 – ScrapeMe"

RSelenium makes it easier to scrape a web page without getting blocked since it drives a real browser and can interact with a page much like a human user would. This makes it one of the best available options when it comes to data scraping in R.
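The "waiting for the page to load" step in the navigation snippet can be made explicit with a small polling helper. The sketch below is plain R and does not rely on any RSelenium waiting feature. wait_until() is a hypothetical name, and the timeout values are arbitrary:

```r
# hypothetical helper: polls `condition` until it returns TRUE
# or until `timeout` seconds have passed
wait_until <- function(condition, timeout = 10, interval = 0.5) {
  deadline <- Sys.time() + timeout
  repeat {
    # treating errors raised by the condition as "not ready yet"
    ready <- tryCatch(isTRUE(condition()), error = function(e) FALSE)
    if (ready) {
      return(TRUE)
    }
    if (Sys.time() >= deadline) {
      return(FALSE)
    }
    Sys.sleep(interval)
  }
}

# usage with RSelenium (assumes the web_driver object created earlier):
# page_loaded <- wait_until(function() {
#   length(web_driver$findElements(using = "css selector", value = "li.product")) > 0
# })

# offline demonstration with a condition that becomes TRUE on the third check
attempts <- 0
became_ready <- wait_until(function() {
  attempts <<- attempts + 1
  attempts >= 3
}, timeout = 5, interval = 0.1)

print(became_ready)
```

Polling for a specific element is generally more reliable than sleeping for a fixed time, because it adapts to how long the page actually takes to render.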

Other Web Scraping Libraries for R

Other useful libraries for web scraping in R are:

- ZenRows: a web scraping API that bypasses anti-bot and anti-scraping systems for you, offering rotating proxies, headless browsers, CAPTCHA bypass and more.

- Rcrawler: an R package for crawling websites and performing web scraping. It offers a large variety of features to extract structured data from a web page.

- XML: an R package to parse XML/HTML files or strings. Its xmlTreeParse() function generates an R structure representing the XML/HTML tree and allows you to select elements from it.

Conclusion

In this step-by-step tutorial, you learned the basic pieces you need to know to get started with R web scraping.

As a recap, you learned:

- How to perform basic data scraping using R with rvest.

- How to implement crawling logic to visit an entire website.

- Why you might need an R headless browser solution.

- How to scrape and crawl dynamic websites with RSelenium.

Web scraping with R can get stressful due to anti-scraping systems integrated into several websites, and most of these libraries find it hard to bypass them. One way to solve this is by making use of a web scraping API, like ZenRows. With a single API call, it bypasses all anti-bot systems for you.

Frequent Questions

How Do You Scrape Data from a Website in R?

You can scrape a website in R as you can in any other programming language. First, you require a web scraping R library. Then you have to use it to connect to your target website, extract HTML elements and retrieve the data from them.

What Is the rvest Package in R?

rvest is one of the most popular web scraping R libraries available, offering several functions to make R web crawling easier. rvest wraps the xml2 and httr packages, allowing you to download HTML documents and extract data from them.
