Headless browser scraping is one of the best methods for extracting data from web pages. Traditional browser-based scraping requires you to run your code inside a full browser, which is inconvenient because you need an environment with a graphical interface.

The browser also needs time and resources to render the page you're trying to scrape, which slows the process down. If your project only involves basic data extraction, you might be able to get by without all that rendering. That is where headless browser web scraping comes in.

In this guide, we'll discuss what headless browsers are, their benefits, and the best options available.

Let's get started!

What Is Headless Browser Scraping?

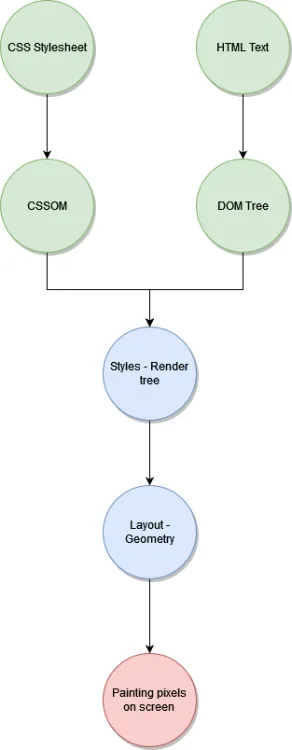

Headless browser scraping is web scraping done with a headless browser, that is, a browser without a user interface. For example, this is what happens when you scrape through a normal web browser:

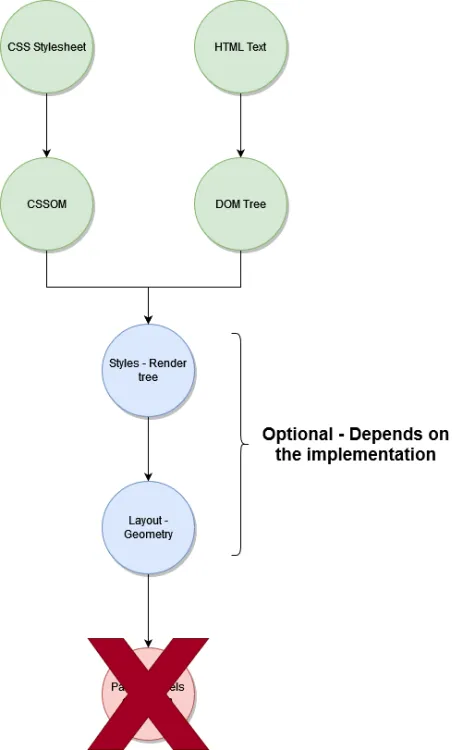

So what if you use a headless browser instead? You skip the rendering step entirely.

Results vary depending on the headless browser, but at a high level, that is what happens when you go headless.

You can do this in most programming languages, such as JavaScript (Node.js), PHP, Java, Ruby, Python, and others. The only requirement is at least one library or package that lets you control a headless browser, such as Selenium or Puppeteer.

Is Headless Scraping Faster?

Yes, because it requires fewer resources and fewer steps to get the information you need.

When you use a headless browser, you skip the entire UI rendering.

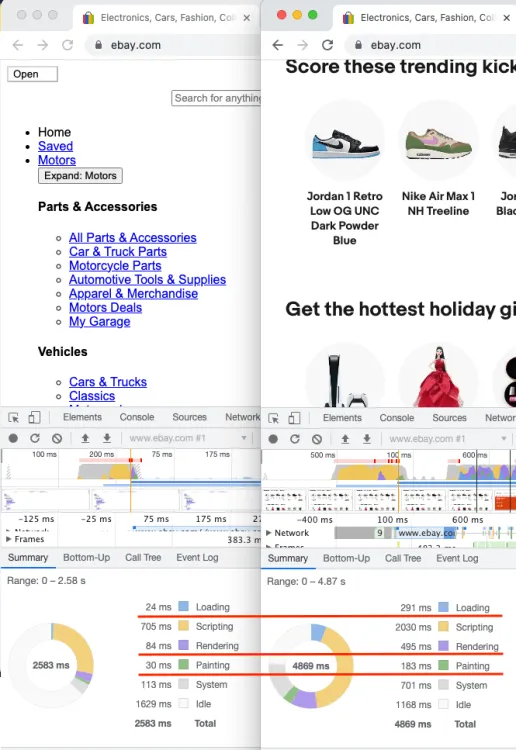

We can use Puppeteer (a Node.js library that automates Chromium-based browsers) to open the Performance tab in DevTools and compare a normal page load against one configured to skip all images and CSS styles.
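In Puppeteer itself, skipping those resources is usually done with request interception: call page.setRequestInterception(true), then, in a "request" handler, abort requests whose request.resourceType() is "image" or "stylesheet" and continue the rest. The Python sketch below only reproduces that decision logic so it can run without a browser; the helper names and sample URLs are hypothetical.

```python
from typing import Iterable, List, Tuple

# Resource types to skip. The strings match what Puppeteer's
# request.resourceType() reports for images and CSS files.
BLOCKED_TYPES = frozenset({"image", "stylesheet"})


def should_block(resource_type: str) -> bool:
    """Return True if a request of this type should be aborted."""
    return resource_type in BLOCKED_TYPES


def split_requests(requests: Iterable[Tuple[str, str]]) -> Tuple[List[str], List[str]]:
    """Split (url, resource_type) pairs into URLs to load and URLs to abort."""
    kept: List[str] = []
    aborted: List[str] = []
    for url, resource_type in requests:
        (aborted if should_block(resource_type) else kept).append(url)
    return kept, aborted


# Hypothetical requests made while loading a product listing page.
sample = [
    ("https://www.example.com/search?q=camera", "document"),
    ("https://www.example.com/app.js", "script"),
    ("https://www.example.com/styles.css", "stylesheet"),
    ("https://www.example.com/img/camera.jpg", "image"),
]
kept, aborted = split_requests(sample)
print(kept)     # the document and script URLs
print(aborted)  # the stylesheet and image URLs
```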

We'll use eBay, a site that relies heavily on images, which makes it a perfect candidate for this optimization.

Look at that! Two seconds off when we load the page without images and CSS styles.

The time spent painting the page also drops: something still has to be painted, but the page is notably less complex.

Consider a more realistic scenario: say you have 100 clients, each making 100 scraping requests a day. If you save two seconds on each of those 10,000 requests, that's 20,000 seconds, or roughly five and a half hours less per day, just by not rendering all those resources.
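The arithmetic behind that estimate, as a quick Python check. It assumes the two-second saving from the eBay comparison applies equally to every request, which real workloads won't match exactly.

```python
def hours_saved_per_day(clients: int, requests_per_client: int, seconds_saved: float) -> float:
    """Hours saved per day when every request finishes `seconds_saved` sooner."""
    total_requests = clients * requests_per_client  # 100 * 100 = 10,000
    return total_requests * seconds_saved / 3600    # seconds -> hours


print(f"{hours_saved_per_day(100, 100, 2.0):.2f} hours")  # 5.56 hours
```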

Is that number big enough for you now?

Can Headless Browsers Be Detected?

Just because you can scrape a website using the latest technologies doesn't mean you should. Some site owners see web scraping as harmful, and some developers go the extra mile to block crawlers from their content.

That said, here are some techniques websites use to detect headless browser scraping:

1. Request Frequency

Request frequency is a clear indicator, and this is where performance becomes a double-edged sword.

On the one hand, it's great to be able to send more requests at the same time. On the other, a website that doesn't want to be scraped will quickly notice that a single IP is sending too many requests per second and block it.

So what can you do about it? If you're coding your own scraper, you can throttle the number of requests you send per second, especially if they come from the same IP. That way, you can mimic a real user more closely.
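A minimal throttling sketch in Python, assuming one scraper process per IP: it enforces a minimum delay between requests plus optional random jitter so the timing looks less mechanical. The Throttle class and its parameters are illustrative, not from any library; the clock and sleep functions are injectable only to make the logic easy to test.

```python
import random
import time
from typing import Callable, Optional


class Throttle:
    """Enforce a minimum, slightly randomized delay between consecutive requests."""

    def __init__(
        self,
        min_delay: float,
        jitter: float = 0.0,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_delay = min_delay  # seconds that must pass between requests
        self.jitter = jitter        # extra random delay in [0, jitter] seconds
        self.clock = clock
        self.sleep = sleep
        self._last: Optional[float] = None

    def wait(self) -> float:
        """Sleep until the next request may be sent; return the seconds slept."""
        slept = 0.0
        if self._last is not None:
            target = self.min_delay + random.uniform(0, self.jitter)
            remaining = target - (self.clock() - self._last)
            if remaining > 0:
                self.sleep(remaining)
                slept = remaining
        self._last = self.clock()
        return slept
```

In a scraping loop, you would create something like Throttle(min_delay=2.0, jitter=1.0) and call throttle.wait() before each request; the right values depend on the target site.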

How many requests per second can you send? That depends on the limits each website sets, and you'll have to find them through trial and error.

2. IP Filtering

Another common way to tell a real user from a bot is an updated blocklist of IPs. These IPs aren't trusted because scraping activity has been detected from them in the past.
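On the website's side, the check can be as simple as testing the client address against a list of networks. A minimal sketch with Python's standard ipaddress module; the blocklist is hypothetical and uses the documentation-only ranges from RFC 5737 in place of real data.

```python
import ipaddress

# Hypothetical blocklist. These are documentation-only ranges (RFC 5737),
# standing in for a real, regularly updated list of untrusted addresses.
BLOCKLIST = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_blocked(client_ip: str) -> bool:
    """Return True if the client's address falls inside any blocklisted network."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in BLOCKLIST)


print(is_blocked("192.0.2.15"))   # True
print(is_blocked("203.0.113.7"))  # False
```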

Bypassing IP filtering usually comes down to routing requests through rotating proxies, and ZenRows offers excellent premium proxies for web scraping.
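On the scraper's side, proxy rotation can be sketched with itertools.cycle and urllib's ProxyHandler. The proxy URLs are placeholders, not real endpoints; a provider would give you its own addresses and credentials.

```python
import itertools
import urllib.request
from typing import Tuple

# Placeholder proxy endpoints; replace them with your provider's addresses.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]
_proxy_cycle = itertools.cycle(PROXIES)


def next_opener() -> Tuple[str, urllib.request.OpenerDirector]:
    """Return the next proxy in the rotation and an opener that routes through it."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)
```

Each call to next_opener() moves on to the next proxy, so calling opener.open(url) on successive openers spreads requests across several IPs.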

3. CAPTCHAs

Developers use CAPTCHAs to filter out bots. These little guys pose a simple challenge that humans can easily solve but that takes machines extra work. Computers can solve them too; it just requires more effort on your part.

There are many ways to bypass CAPTCHAs, but one of the easiest we've found is ZenRows' API.

4. User-Agent Detection

All browsers send a special header called "User-Agent," containing a string that identifies the browser and its version. A website trying to protect itself from crawlers and scrapers will look at that header and check whether its value looks like a real browser's.

For example, this would be the User-Agent sent by a normal Google Chrome browser: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36

You can see the string specifies the browser, its version and even the OS that it's using.

Now look at a typical Headless Chrome User-Agent (Headless Chrome is the headless mode of the Chrome browser): Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/76.0.3803.0 Safari/537.36

It carries similar information (here, Linux instead of Windows), but it also includes the HeadlessChrome token and its version. That's an immediate sign we're not real human beings. Busted!
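A naive version of that server-side check might look like this in Python. It only illustrates the idea and is not how any particular anti-bot product works; real systems combine many more signals.

```python
from typing import Optional


def looks_like_headless(user_agent: Optional[str]) -> bool:
    """Naive check: flag missing User-Agents and ones advertising HeadlessChrome."""
    if not user_agent:
        return True  # regular browsers always send a User-Agent
    return "HeadlessChrome" in user_agent


CHROME = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
          "(KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36")
HEADLESS = ("Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
            "(KHTML, like Gecko) HeadlessChrome/76.0.3803.0 Safari/537.36")

print(looks_like_headless(CHROME))    # False
print(looks_like_headless(HEADLESS))  # True
```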

Of course, as with most request headers, we can fake the User-Agent through whatever tool we use to scrape the website. But if you forget to, you'll get blocked by any target that filters by User-Agent.
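With Python's urllib, for instance, faking the header means passing it explicitly; otherwise urllib sends its default Python-urllib/3.x User-Agent, which is just as easy to spot. (In Puppeteer, the equivalent is page.setUserAgent().) The target URL below is a placeholder.

```python
import urllib.request

# The desktop Chrome User-Agent shown earlier in this section.
CHROME_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
             "(KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36")


def build_request(url: str, user_agent: str = CHROME_UA) -> urllib.request.Request:
    """Create a request that sends a browser-like User-Agent instead of urllib's default."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


req = build_request("https://www.example.com/")  # placeholder target
# urllib normalizes header names with str.capitalize(), hence "User-agent".
print(req.get_header("User-agent"))
# To actually send it: urllib.request.urlopen(req)
```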

5. Browser Fingerprinting

Browser fingerprinting is a technique that involves gathering different characteristics of your system and making a unique hash out of them all.
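Conceptually, the hashing step looks like the Python sketch below: serialize the collected traits in a canonical order and hash them, so the same setup always yields the same identifier. The trait names and values are hypothetical; real fingerprinting scripts run in the browser and collect far more data.

```python
import hashlib
import json
from typing import Any, Dict


def fingerprint(traits: Dict[str, Any]) -> str:
    """Hash a set of browser/system traits into one stable identifier."""
    # sort_keys makes the result independent of the order traits were collected in.
    canonical = json.dumps(traits, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical traits a fingerprinting script might collect.
traits = {
    "platform": "Linux x86_64",
    "screen": "1920x1080",
    "timezone": "UTC",
    "canvasHash": "3f9a1c",  # stand-in for the result of a canvas rendering test
    "mediaDeviceCount": 3,
}
print(fingerprint(traits))
```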

There is no single way of doing this, but done correctly, fingerprinting can identify you and your browser even if you try to mask your identity. One common technique is canvas fingerprinting: the site draws an image in the background and measures the distinct distortions caused by your particular setup (graphics card, browser version, etc.).

Other sites read the list of media devices installed on your system, which can give your identity away in future sessions. Some even use sound: the Web Audio API can measure specific distortions in a sound wave generated by an oscillator.

Avoiding detection on these sites isn't trivial. If your scraping doesn't require JavaScript, though, you can avoid running the site's scripts altogether and prevent them from fingerprinting your browser.

If you keep running into problems, you have two choices: keep chipping away at them through research and trial and error, or go for a paid scraping service.

After all, if you're trying to scrape a website with many limitations, then you're probably serious about it. If that's your case, consider investing some money and letting the pros handle all these problems.

Which Browser Is Best for Web Scraping?

Is there a "best headless browser for scraping" out there? No, there isn't. After all, the concept of "best" is only valid within the context of the problem you're trying to solve.

That being said, some alternatives might be right for you, at least as a starting point. Some of the most popular tools for headless web scraping are:

1. ZenRows

ZenRows is an API with a built-in headless browser. It handles both static and dynamic data scraping with a single API call. It works with any language that can send HTTP requests, and it also offers SDKs for Python and Node.js. For testing, you can start with its graphical interface or cURL requests, then scale up with your preferred language.
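As an illustration of the single-call approach, the sketch below builds a ZenRows request URL with Python's standard library. The endpoint (https://api.zenrows.com/v1/) and the apikey, url, and js_render parameter names are assumptions to check against ZenRows' current documentation; the API key is a placeholder.

```python
from urllib.parse import urlencode

# Assumed endpoint and parameter names; verify them in ZenRows' documentation.
ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/"


def zenrows_url(api_key: str, target_url: str, js_render: bool = False) -> str:
    """Build a single GET URL asking the API to fetch (and optionally render) a page."""
    params = {"apikey": api_key, "url": target_url}
    if js_render:
        params["js_render"] = "true"  # ask for headless-browser rendering
    return f"{ZENROWS_ENDPOINT}?{urlencode(params)}"


print(zenrows_url("YOUR_API_KEY", "https://www.ebay.com/", js_render=True))
```

Fetching the page is then an ordinary GET request, for example urllib.request.urlopen() on the returned URL.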

2. Puppeteer

Puppeteer is a Node.js library for controlling Chrome or Chromium, which it runs headless by default. It has a great API and is relatively easy to use: with a few lines of code, you can start scraping.

3. Selenium

Selenium is widely used for headless testing and provides a very intuitive API for specifying the user actions to perform on the scraping target.

4. HTMLUnit

HTMLUnit is a great option for Java developers. Many popular projects use this headless browser, and it's actively maintained.

What Are the Downsides of Headless Browsers for Web Scraping?

If you're web scraping using a headless browser for fun, then chances are nobody will care about your activities. But if you're doing large-scale web scraping, you'll start making enough noise and risk getting detected. The following are some of the downsides of web scraping with a headless browser:

Headless browsers are harder to debug.

To scrape is basically to extract data from a source. To achieve it, we have to reference parts of the DOM, like capturing a certain class name or looking for an input with a specific name.

When the website's structure changes (i.e., its HTML changes), our code can stop working. That happens because our logic can't adapt the way we would through visual inspection.
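A small Python demonstration of that fragility, using the standard html.parser module: an extractor keyed on a hypothetical "price" class works on one version of the markup and silently returns None once the class is renamed. The extractor is deliberately simplified and only grabs the first text it sees after the matching tag.

```python
from html.parser import HTMLParser
from typing import Optional


class FirstTextByClass(HTMLParser):
    """Capture the first text found after an element carrying a given CSS class."""

    def __init__(self, css_class: str) -> None:
        super().__init__()
        self.css_class = css_class
        self._capturing = False
        self.result: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.result is None and self.css_class in classes:
            self._capturing = True

    def handle_data(self, data):
        if self._capturing and data.strip():
            self.result = data.strip()
            self._capturing = False


def extract_by_class(html: str, css_class: str) -> Optional[str]:
    parser = FirstTextByClass(css_class)
    parser.feed(html)
    parser.close()
    return parser.result


# Hypothetical markup before and after a site redesign.
before = '<div class="item"><span class="price">$19.99</span></div>'
after = '<div class="item"><span class="item-price">$19.99</span></div>'

print(extract_by_class(before, "price"))  # $19.99
print(extract_by_class(after, "price"))   # None: same data, but the selector broke
```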

In other words, if your scraper's data suddenly comes back wrong or empty (because the HTML of the scraped page changed), you'll have to review and debug the code manually to understand what's happening.

Without a UI to look at, that's harder, so you have to resort to workarounds or use a tool that supports both headless and headful modes, like Puppeteer, so you can switch the UI on and off when needed.

They do have a significant learning curve.

Browsing a website through code requires you to see it differently. We're used to navigating websites using visual cues or descriptions (in the case of assistive technologies such as screen readers).

Now you have to read the website's code and understand its structure to get the information you want in a way that resists change, so you don't have to update the browsing logic every few weeks.
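One way to make extraction more change-resistant, when a site offers it, is to read the schema.org JSON-LD metadata many pages embed in script tags of type "application/ld+json" instead of relying on visual class names. A sketch with Python's standard library; the sample page is hypothetical, and not every site publishes this data.

```python
import json
from html.parser import HTMLParser
from typing import Any, List


class JsonLdExtractor(HTMLParser):
    """Collect the parsed contents of every <script type="application/ld+json"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self._inside = False
        self._chunks: List[str] = []
        self.items: List[Any] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside = True
            self._chunks = []

    def handle_data(self, data):
        if self._inside:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._inside:
            self.items.append(json.loads("".join(self._chunks)))
            self._inside = False


def extract_jsonld(html: str) -> List[Any]:
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return parser.items


# Hypothetical page: visible markup uses obfuscated class names, but the
# embedded metadata keeps stable, documented keys.
page = """<html><head>
<script type="application/ld+json">
{"@type": "Product", "name": "Vintage Camera",
 "offers": {"@type": "Offer", "price": "149.00", "priceCurrency": "USD"}}
</script>
</head><body><div class="x9f3k">Vintage Camera</div></body></html>"""

# This sketch assumes one JSON object per tag (JSON-LD can also be a list or use @graph).
products = [item for item in extract_jsonld(page) if item.get("@type") == "Product"]
print(products[0]["name"], products[0]["offers"]["price"])  # Vintage Camera 149.00
```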

What Are the Benefits of Headless Scraping?

The benefits of using a headless browser for scraping include the following:

1. Automation

It's possible to automate tasks during headless browser scraping. That's a real time-saver, especially when the website you're trying to crawl isn't protecting itself or changing its internal architecture too often. That way, you won't be bothered by constant updates to the browsing logic.

2. Increase in Speed

The speed increase is considerable: you use fewer resources per website and can reduce each page's load time, which adds up to a huge time saving.

3. Delivery of Structured Data from a Seemingly Unstructured Source

Websites can seem unstructured because, after all, they're designed to be read by people, and people have no problem with gathering information from unstructured sources. But because websites do have an internal architecture, we can leverage it and take the information we want.

We can then save that information in a machine-readable form (like JSON, YAML, or any other data storage format) for later processing.
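A minimal Python sketch of that idea: parse a hypothetical listing where each product is an li element with class "product" containing "title" and "price" children, then serialize the result as JSON. The markup and class names are assumptions for illustration; a real page needs its own selectors.

```python
import json
from html.parser import HTMLParser
from typing import Dict, List, Optional


class ProductCardParser(HTMLParser):
    """Turn hypothetical <li class="product"> cards into dictionaries."""

    FIELDS = {"title", "price"}  # hypothetical class names used inside each card

    def __init__(self) -> None:
        super().__init__()
        self.products: List[Dict[str, str]] = []
        self._field: Optional[str] = None  # field whose text comes next, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.products.append({})  # a new card starts a new record
        elif self.products:
            for name in classes:
                if name in self.FIELDS:
                    self._field = name

    def handle_data(self, data):
        if self._field and data.strip():
            self.products[-1][self._field] = data.strip()
            self._field = None


def parse_products(html: str) -> List[Dict[str, str]]:
    parser = ProductCardParser()
    parser.feed(html)
    parser.close()
    return parser.products


listing = """
<ul>
  <li class="product"><h3 class="title">USB-C Cable</h3><span class="price">$9.99</span></li>
  <li class="product"><h3 class="title">Wireless Mouse</h3><span class="price">$24.50</span></li>
</ul>
"""
print(json.dumps(parse_products(listing), indent=2))
```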

4. Potential Savings in Bandwidth Fees

Headless browsing can be set up to skip some of a web page's largest resources (in kilobytes). That translates directly into a speed increase, and it also keeps a lot of data from being transferred to the server where the scraping takes place.
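To estimate the savings for your own target, you can add up the transfer size of each resource type (for example, from the DevTools Network tab) and see what share the blocked types represent. The Python sketch below uses invented sizes purely to show the calculation.

```python
from typing import Iterable, Tuple


def bytes_skipped(resources: Iterable[Tuple[str, int]], blocked: frozenset) -> Tuple[int, int]:
    """Return (total_bytes, skipped_bytes) for (resource_type, size_in_bytes) pairs."""
    total = skipped = 0
    for resource_type, size in resources:
        total += size
        if resource_type in blocked:
            skipped += size
    return total, skipped


# Hypothetical page; the sizes are invented for illustration, not measured.
page = [
    ("document", 120_000),
    ("script", 450_000),
    ("stylesheet", 180_000),
    ("image", 1_900_000),
    ("image", 650_000),
    ("font", 90_000),
]
total, skipped = bytes_skipped(page, frozenset({"image", "stylesheet", "font"}))
print(f"Skipped {skipped / total:.0%} of {total / 1_000_000:.2f} MB")  # Skipped 83% of 3.39 MB
```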

That can mean a considerably lower data-transfer bill from proxy services or cloud-provider gateways that charge per transferred byte.

5. Scraping Dynamic Content

Several of the benefits above also apply to faster, lighter tools like Python's requests library. But those lack an important feature that headless browsers have: extracting data from dynamic pages or SPAs (Single Page Applications).

You can wait for content to appear and even interact with the page, such as navigating or filling in forms. The options are almost unlimited; after all, you're interacting with a browser.
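Waiting for content usually means polling until a condition holds or a timeout expires, which is the idea behind helpers like Selenium's WebDriverWait and Puppeteer's page.waitForSelector(). The generic Python sketch below shows the pattern without a browser; the wait_for name and its parameters are hypothetical, and in practice the condition would query the page.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_for(
    condition: Callable[[], Optional[T]],
    timeout: float = 10.0,
    interval: float = 0.5,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Poll `condition` until it returns something other than None, or time out."""
    deadline = clock() + timeout
    while True:
        result = condition()
        if result is not None:
            return result
        if clock() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        sleep(interval)
```

With a browser driver, the condition would be a function that returns the target element once it exists and None until then.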

Conclusion

Web scraping, in general, is a great tool for capturing data from multiple web sources and centralizing it wherever you need it. It's a cost-effective way of crawling because you build the scraper once and then automate subsequent runs at a very low cost.

Headless web scraping is a way to perform scraping with a special version of a browser with no UI, making it even faster and cheaper to run.

In this guide, you've learned the basics of headless browser web scraping, including how it works, how it can be detected, its benefits and downsides, and some tools.

As a recap, these are the headless scraping tools we mentioned:

- ZenRows.

- Puppeteer.

- Selenium.

- HTMLUnit.

Or you could use an all-in-one API-based solution such as ZenRows for smooth web scraping. It provides access to premium proxies, anti-bot protection and CAPTCHA bypass systems. Try ZenRows for free.
