In this Java web scraping tutorial, you'll learn everything you need to know about web scraping in Java. Follow this step-by-step tutorial, and you'll become a web scraping expert. In detail, you'll learn how to master the web scraping basics as well as the most advanced aspects.

Let's not waste more time! Learn how to build a web scraper in Java. This script will be able to crawl an entire website and automatically extract data from it. Cool, isn't it?

Can You Web Scrape With Java?

The short answer is "Yes, you can!"

Java is one of the most reliable object-oriented programming languages available. Thus, Java can count on a wide range of libraries. This means that there are several Java web scraping libraries you can choose from.

Two examples are Jsoup and Selenium. These libraries allow you to connect to a web page. Also, they come with many functions to help you extract the data that interests you. In this Java web scraping tutorial, you'll learn how to use both.

How Do You Scrape a Page in Java?

You can scrape a web page in Java just as you would in any other programming language. You need a web scraping Java library that allows you to visit a web page, retrieve HTML elements, and extract data from them.

You can easily install a Java web scraping library with Maven or Gradle. These are the two most popular Java dependency management tools. Follow this web scraping Java tutorial to learn more about how to do web scraping in Java.

Getting Started

Before starting to build your Java web scraper, you need to meet the following requirements:

- Java LTS 8+: any version of Java LTS (Long-Term Support) greater than or equal to 8 will do. In detail, this Java web scraping tutorial refers to Java 21. At the time of writing, this is the latest LTS version of Java.

- Gradle or Maven: choose one of the two build automation tools. You'll need one of them for its dependency management features to install your Java web scraping library.

- A Java IDE: any IDE that supports Java and can integrate with Maven and Gradle will do. IntelliJ IDEA is one of the best options available.

If you don't meet these prerequisites, follow the links above. Download and install Java, Gradle and Maven, and the Java IDE in order. If you encounter problems, follow the official installation guides. Then, you can verify everything went as expected with the following terminal command:

java -version

This should return something like this:

openjdk version "21" 2023-09-19 LTS

OpenJDK Runtime Environment Temurin-21+35 (build 21+35-LTS)

OpenJDK 64-Bit Server VM Temurin-21+35 (build 21+35-LTS, mixed mode, sharing)

As you can see, that represents the info related to the version of Java installed on your machine.

Then, if you're a Gradle user, type in your terminal:

gradle -v

Again, this should return the version of Gradle you installed, as follows:

------------------------------------------------------------

Gradle 8.4

------------------------------------------------------------

Build time: 2023-10-04 20:52:13 UTC

Revision: e9251e572c9bd1d01e503a0dfdf43aedaeecdc3f

Kotlin: 1.9.10

Groovy: 3.0.17

Ant: Apache Ant(TM) version 1.10.13 compiled on January 4 2023

JVM: 21 (Eclipse Adoptium 21+35-LTS)

OS: Linux 6.2.0-34-generic amd64

Or, if you're a Maven user, launch the command below:

mvn -v

If the Maven installation process worked as expected, this should return something like this:

Apache Maven 3.9.5 (57804ffe001d7215b5e7bcb531cf83df38f93546)

You're ready to follow this step-by-step web scraping Java tutorial. In detail, you're going to learn how to perform web scraping in Java on https://scrapeme.live/shop/. This is a special website designed to be scraped.

Have a look at https://scrapeme.live/shop/.

Note that scrapeme.live/shop/ is just a simple paginated list of Pokemon-inspired products. The goal of the Java web scraper will be to crawl the entire website and retrieve all product data.

You can find the web scraping Java source code in the GitHub repository that supports this tutorial. Clone it with the following command and take a look at the code while reading the tutorial:

git clone https://github.com/Tonel/simple-web-scraper-java

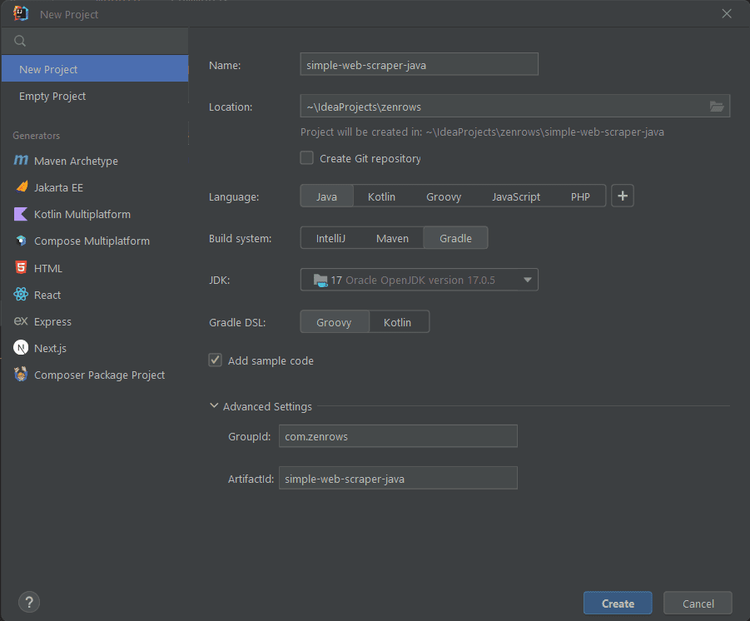

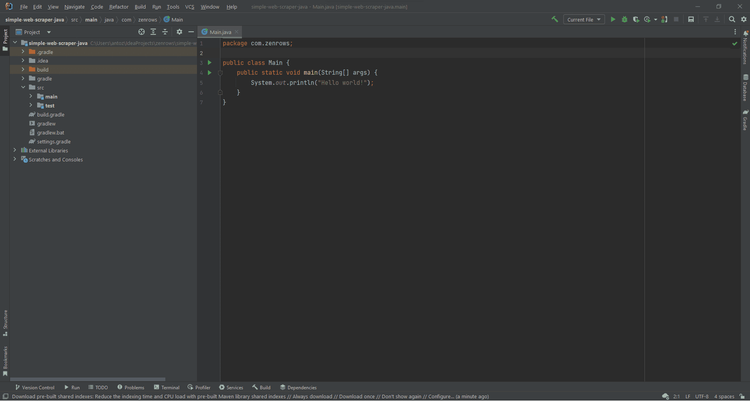

Select Maven or Gradle based on the build automation tool you installed or want to use. Then, click "Create" to initialize your Java project. Wait for the setup process to end, and you should now have access to the following Java web scraping project:

Let's now learn the basics of web scraping using Java!

Basic Web Scraping in Java

The first thing you need to learn is how to scrape a static website in Java. You can think of a static website as a collection of pre-built HTML documents. Each of these HTML pages will have its CSS and JavaScript files. A static website relies on server-side rendering.

In a static web page, the content is embedded in the HTML document provided by the server. So, you don't need a web browser to extract data from it. In detail, static web scraping is about:

- Downloading a web page.

- Parsing the HTML document retrieved from the server.

- Selecting the HTML elements containing the data of interest from the web page.

- Extracting the data from them.

In Java, scraping web pages isn't difficult, especially when it comes to static web scraping. Let's now learn the basics of web scraping with Java.

Step #1: Install Jsoup

First, you need a web scraping Java library. Jsoup is a Java library that makes web scraping easy. In detail, Jsoup comes with an advanced Java web scraping API. This allows you to connect to a web page with its URL, select HTML elements with CSS selectors, and extract data from them.

In other terms, Jsoup offers you almost everything you need to perform static web scraping with Java. If you're a Gradle user, add jsoup to the dependencies section of your build.gradle file:

implementation "org.jsoup:jsoup:1.16.1"

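Otherwise, if you're a Maven user, add the following lines to the dependencies section of your pom.xml file:

<dependency>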

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.16.1</version>

</dependency>

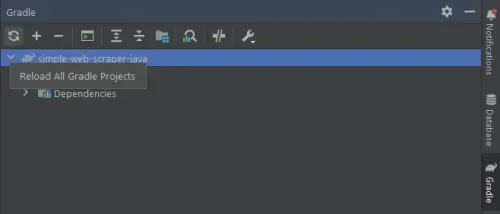

Then, if you're an IntelliJ user, don't forget to click on the Gradle/Maven reload button to install the new dependencies.

Jsoup is now installed and ready to use. Import it in your Main.java file as follows:

import org.jsoup.*;

import org.jsoup.nodes.*;

import org.jsoup.select.*;

Let's write your first web scraping Java script!

Step #2: Connect to your target website

You can use Jsoup to connect to a website using its URL with the following lines:

// initializing the HTML Document page variable

Document doc;

try {

// fetching the target website

doc = Jsoup.connect("https://scrapeme.live/shop").get();

} catch (IOException e) {

throw new RuntimeException(e);

}

This snippet uses the connect() method from Jsoup to connect to the target website. Note that if the connection fails, Jsoup throws an IOException. This is why you need the try ... catch block. Then, the get() method returns a Jsoup HTML Document object you can use to explore the DOM.

Keep in mind that many websites automatically block requests that don't have a set of some expected HTTP headers. This is one of the most basic anti-scraping systems. Thus, you can simply avoid being blocked by manually setting these HTTP headers.

In general, the most important header you should always set is the User-Agent header. This is a string that helps the server identify the application, operating system, and vendor from which the HTTP request comes.

You can set the User-Agent header and other HTTP headers in Jsoup as follows:

doc = Jsoup

.connect("https://scrapeme.live/shop")

.userAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36")

.header("Accept-Language", "*")

.get();

Specifically, you can set the User-Agent with the Jsoup userAgent() method. Similarly, you can specify any other HTTP header with header().

Step #3: Select the HTML elements of interest

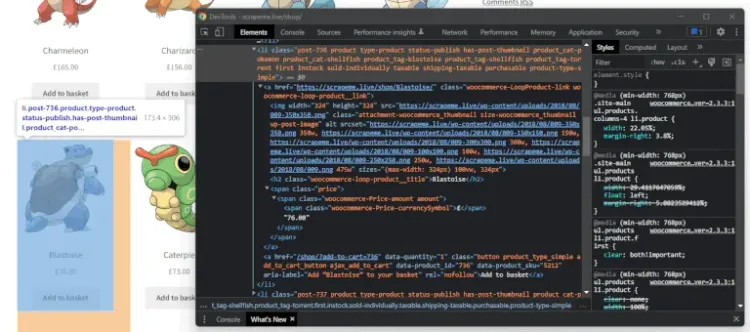

Open your target web page in the browser and identify the HTML elements of interest. In this case, you want to scrape all product HTML elements. Right-click on a product HTML element and select the "Inspect" option. This should open the DevTools window below:

As you can see, a product is a li.product HTML element. This includes:

- An a HTML element: contains the URL associated with the product.

- An img HTML element: contains the product image.

- An h2 HTML element: contains the product name.

- A span HTML element: contains the product price.

Now, let's learn how to extract data from a product HTML element with web scraping in Java.

Step #4: Extracting data from the HTML elements

First, you need a Java object in which to store the scraped data. Create a data folder in the main package and define a PokemonProduct.java class as follows:

package com.zenrows.data;

public class PokemonProduct {

private String url;

private String image;

private String name;

private String price;

// getters and setters omitted for brevity...

@Override

public String toString() {

return "{ \"url\":\"" + url + "\", "

+ " \"image\": \"" + image + "\", "

+ "\"name\":\"" + name + "\", "

+ "\"price\": \"" + price + "\" }";

}

}

Note that the toString() method produces a string in JSON format. This will come in handy later.
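Keep in mind that concatenating raw field values only yields valid JSON as long as those values contain no quotes, backslashes, or control characters. As a minimal sketch, assuming you want to stay dependency-free, a small escaping helper could be applied to each field inside toString(). The JsonStrings class and its escape() method are hypothetical names and not part of the tutorial's repository:

```java
// Hypothetical helper for illustration; a JSON library would be the more robust choice.
final class JsonStrings {
    private JsonStrings() {}

    /** Escapes the characters JSON does not allow unescaped inside a string literal. */
    static String escape(String value) {
        if (value == null) {
            return ""; // assumption: a missing value is treated as an empty string
        }
        StringBuilder sb = new StringBuilder(value.length() + 8);
        for (int i = 0; i < value.length(); i++) {
            char c = value.charAt(i);
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n");  break;
                case '\r': sb.append("\\r");  break;
                case '\t': sb.append("\\t");  break;
                default:
                    if (c < 0x20) {
                        // remaining control characters become four-digit hex escapes
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // a product name containing a double quote no longer breaks the JSON string
        System.out.println("{\"name\":\"" + escape("Farfetch\"d") + "\"}");
    }
}
```

With such a helper, toString() would build each pair as "\"name\":\"" + JsonStrings.escape(name) + "\"" instead of appending name directly.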

Now, let's retrieve the list of li.product HTML products on the target web page. You can achieve this with Jsoup as below:

Elements products = doc.select("li.product");

What this snippet does is simple. The Jsoup select() function applies the CSS selector strategy to retrieve all li.product elements on the web page. In detail, Elements extends ArrayList<Element>. So, you can easily iterate over it.

Thus, you can iterate over products to extract the info of interest and store it in PokemonProduct objects:

// initializing the list of Java object to store

// the scraped data

List<PokemonProduct> pokemonProducts = new ArrayList<>();

// retrieving the list of product HTML elements

Elements products = doc.select("li.product");

// iterating over the list of HTML products

for (Element product : products) {

PokemonProduct pokemonProduct = new PokemonProduct();

// extracting the data of interest from the product HTML element

// and storing it in pokemonProduct

pokemonProduct.setUrl(product.selectFirst("a").attr("href"));

pokemonProduct.setImage(product.selectFirst("img").attr("src"));

pokemonProduct.setName(product.selectFirst("h2").text());

pokemonProduct.setPrice(product.selectFirst("span").text());

// adding pokemonProduct to the list of the scraped products

pokemonProducts.add(pokemonProduct);

}

This logic uses the Java web scraping API offered by Jsoup to extract all the data of interest from each product HTML element. Then, it initializes a PokemonProduct with this data and adds it to the list of scraped products.

Congrats! You just learned how to scrape data from a web page with Jsoup. Let's now convert this data into a more useful format.

Step #5: Export the data to JSON

Don't forget that the toString() method of PokemonProduct returns a JSON string. So, simply call toString() on the List<PokemonProduct> object:

pokemonProducts.toString();

System.out.println(pokemonProducts);

toString() on an ArrayList calls the toString() method on each element of the list. Then, it embeds the result in square brackets.

In other words, this will produce the following JSON data (printed on a single line, formatted here for readability):

[

{

"url": "https://scrapeme.live/shop/Bulbasaur/",

"image": "https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png",

"name": "Bulbasaur",

"price": "£63.00"

},

// ...

{

"url": "https://scrapeme.live/shop/Pidgey/",

"image": "https://scrapeme.live/wp-content/uploads/2018/08/016-350x350.png",

"name": "Pidgey",

"price": "£159.00"

}

]
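If you'd rather store this output than print it, the same string can be written to a file. The snippet below is a minimal sketch under stated assumptions: JsonFileExport, toJsonArray(), and export() are hypothetical names, the stand-in strings play the role of PokemonProduct objects, and the result is valid JSON only if every element's toString() returns valid JSON:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper for illustration only.
final class JsonFileExport {
    private JsonFileExport() {}

    /** List.toString() joins each element's toString() with ", " and wraps the result in [ ]. */
    static String toJsonArray(List<?> items) {
        return items.toString();
    }

    /** Writes the list to the target file as UTF-8 and returns the path written. */
    static Path export(List<?> items, Path target) {
        try {
            return Files.write(target, toJsonArray(items).getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // stand-in elements; in the tutorial these would be PokemonProduct objects
        List<String> items = Arrays.asList("{\"name\":\"Bulbasaur\"}", "{\"name\":\"Pidgey\"}");
        Path written = export(items, Paths.get("products.json"));
        System.out.println("Wrote " + written.toAbsolutePath());
    }
}
```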

Et voilà! You just performed web scraping using Java! Yet, the website consists of several web pages. Let's see how to scrape them all.

Web Crawling in Java

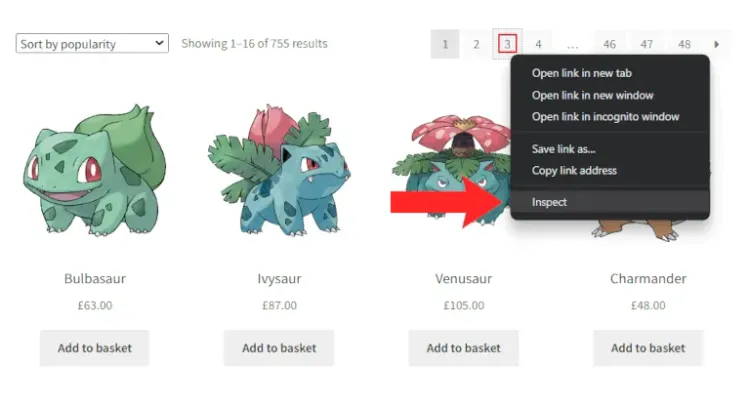

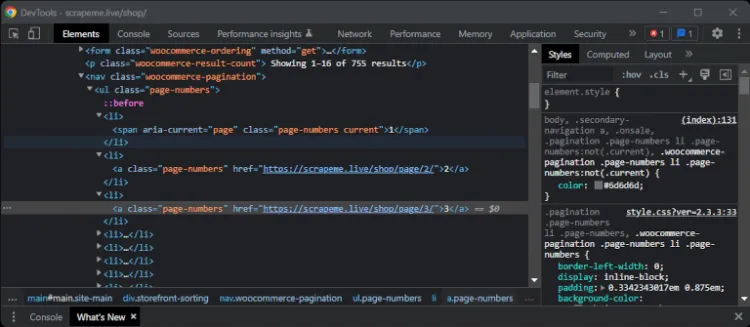

Let's now retrieve the list of all pagination links to scrape the entire website. This is what web crawling is about. Right-click on the pagination number HTML element and choose the "Inspect" option.

The browser should open the DevTools section and highlight the selected DOM element, as below:

From here, note that you can extract all the pagination number HTML elements with the a.page-numbers CSS selector. These elements contain the links you want to scrape. You can retrieve them all with Jsoup as below:

Elements paginationElements = doc.select("a.page-numbers");

If you want to scrape all web pages, you have to implement some crawling logic. Also, you'll need to rely on some lists and sets to avoid scraping a web page twice. You can implement web crawling logic that visits a limited number of web pages, controlled by the limit variable, as follows:

package com.zenrows;

import com.zenrows.data.PokemonProduct;

import org.jsoup.*;

import org.jsoup.nodes.*;

import org.jsoup.select.*;

import java.io.IOException;

import java.util.*;

public class Main {

public static void scrapeProductPage(

List<PokemonProduct> pokemonProducts,

Set<String> pagesDiscovered,

List<String> pagesToScrape

) {

// the current web page is about to be scraped and

// should no longer be part of the scraping queue

String url = pagesToScrape.remove(0);

pagesDiscovered.add(url);

// scraping logic omitted for brevity...

Elements paginationElements = doc.select("a.page-numbers");

// iterating over the pagination HTML elements

for (Element pageElement : paginationElements) {

// the new link discovered

String pageUrl = pageElement.attr("href");

// if the web page discovered is new and should be scraped

if (!pagesDiscovered.contains(pageUrl) && !pagesToScrape.contains(pageUrl)) {

pagesToScrape.add(pageUrl);

}

// adding the link just discovered

// to the set of pages discovered so far

pagesDiscovered.add(pageUrl);

}

}

public static void main(String[] args) {

// initializing the list of Java object to store

// the scraped data

List<PokemonProduct> pokemonProducts = new ArrayList<>();

// initializing the set of web page urls

// discovered while crawling the target website

Set<String> pagesDiscovered = new HashSet<>();

// initializing the queue of urls to scrape

List<String> pagesToScrape = new ArrayList<>();

// initializing the scraping queue with the

// first pagination page

pagesToScrape.add("https://scrapeme.live/shop/page/1/");

// the number of iteration executed

int i = 0;

// to limit the number to scrape to 5

int limit = 5;

while (!pagesToScrape.isEmpty() && i < limit) {

scrapeProductPage(pokemonProducts, pagesDiscovered, pagesToScrape);

// incrementing the iteration number

i++;

}

System.out.println(pokemonProducts.size());

// writing the scraped data to a db or export it to a file...

}

}

scrapeProductPage() scrapes a web page, discovers new links to scrape, and adds their URLs to the scraping queue. If you increase limit to 48, at the end of the while loop, pagesToScrape will be empty and pagesDiscovered will contain all 48 pagination URLs.
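To see the queue-and-set mechanics in isolation, here is a minimal sketch that runs the same kind of crawl loop over an in-memory map of links instead of real web pages. CrawlQueueSketch and crawl() are hypothetical names, and the links map is a stand-in for what doc.select("a.page-numbers") would return on each page:

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical simulation of the crawling logic; no network access involved.
final class CrawlQueueSketch {
    private CrawlQueueSketch() {}

    /** Visits pages in discovery order starting from seed, stopping after at most limit pages. */
    static List<String> crawl(Map<String, List<String>> links, String seed, int limit) {
        List<String> visited = new ArrayList<>();
        Set<String> discovered = new HashSet<>();
        Deque<String> toScrape = new ArrayDeque<>();

        discovered.add(seed);
        toScrape.add(seed);

        while (!toScrape.isEmpty() && visited.size() < limit) {
            String url = toScrape.poll();
            visited.add(url); // a real scraper would extract the products here

            for (String next : links.getOrDefault(url, Collections.emptyList())) {
                // Set.add() returns false for known URLs, so each page is queued only once
                if (discovered.add(next)) {
                    toScrape.add(next);
                }
            }
        }
        return visited;
    }

    public static void main(String[] args) {
        Map<String, List<String>> links = new HashMap<>();
        links.put("/page/1/", Arrays.asList("/page/2/", "/page/3/"));
        links.put("/page/2/", Arrays.asList("/page/1/", "/page/3/"));
        links.put("/page/3/", Arrays.asList("/page/1/", "/page/2/"));
        System.out.println(crawl(links, "/page/1/", 5)); // [/page/1/, /page/2/, /page/3/]
    }
}
```

This sketch uses an ArrayDeque for the queue because poll() removes the head in constant time, whereas remove(0) on an ArrayList shifts every remaining element. For a few dozen pages the difference is negligible, so treat it as a design option rather than a required change.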

If you're an IntelliJ IDEA user, click on the run icon to run the web scraping Java example. Wait for the process to end. This will take some seconds. At the end of the process, pokemonProducts will contain all 755 pokemon products.

Congratulations! You just extracted all the product data automatically!

Parallel Web Scraping in Java

Web scraping in Java can become a time-consuming process. This is especially true if your target website consists of many web pages and/or the server takes time to respond. Also, Java isn't known for being the fastest programming language.

At the same time, Java 8 introduced a lot of features to make parallelism easier. So, transforming your Java web scraper to work in parallel takes only a few updates. Let's see how you can perform parallel web scraping in Java:

package com.zenrows;

import com.zenrows.data.PokemonProduct;

import org.jsoup.*;

import org.jsoup.nodes.*;

import org.jsoup.select.*;

import java.io.IOException;

import java.util.*;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import java.util.concurrent.TimeUnit;

public class Main {

public static void scrapeProductPage(

List<PokemonProduct> pokemonProducts,

Set<String> pagesDiscovered,

List<String> pagesToScrape

) {

// omitted for brevity...

}

public static void main(String[] args) throws InterruptedException {

// initializing the list of Java object to store

// the scraped data

List<PokemonProduct> pokemonProducts = Collections.synchronizedList(new ArrayList<>());

// initializing the set of web page urls

// discovered while crawling the target website

Set<String> pagesDiscovered = Collections.synchronizedSet(new HashSet<>());

// initializing the queue of urls to scrape

List<String> pagesToScrape = Collections.synchronizedList(new ArrayList<>());

// initializing the scraping queue with the

// first pagination page

pagesToScrape.add("https://scrapeme.live/shop/page/1/");

// initializing the ExecutorService to run the

// web scraping process in parallel on 4 pages at a time

ExecutorService executorService = Executors.newFixedThreadPool(4);

// launching the web scraping process to discover some

// urls and take advantage of the parallelization process

scrapeProductPage(pokemonProducts, pagesDiscovered, pagesToScrape);

// the number of iteration executed

int i = 1;

// to limit the number of pages to scrape to 10

int limit = 10;

while (!pagesToScrape.isEmpty() && i < limit) {

// registering the web scraping task

executorService.execute(() -> scrapeProductPage(pokemonProducts, pagesDiscovered, pagesToScrape));

// adding a 200ms delay to avoid overloading the server

TimeUnit.MILLISECONDS.sleep(200);

// incrementing the iteration number

i++;

}

// waiting up to 300 seconds for all pending tasks to end

executorService.shutdown();

executorService.awaitTermination(300, TimeUnit.SECONDS);

System.out.println(pokemonProducts.size());

}

}

Keep in mind that ArrayList and HashSet are not thread-safe in Java. This is why you need to wrap your collections with Collections.synchronizedList() and Collections.synchronizedSet(), respectively. These methods will turn them into thread-safe collections you can then use in threads.
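One caveat: the synchronized wrappers make each individual call thread-safe, but a contains() check followed by a separate add() is still two calls, so two threads could both decide that the same URL is new. A minimal sketch of one way around this, assuming you're free to change the collection types, relies on the boolean returned by add() on a concurrent set. AtomicDiscoverySketch, discover(), and raceOnSameUrl() are hypothetical names:

```java
import java.util.Queue;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CountDownLatch;

// Hypothetical sketch: queue each URL exactly once, even under contention.
final class AtomicDiscoverySketch {
    private final Set<String> pagesDiscovered = ConcurrentHashMap.newKeySet();
    private final Queue<String> pagesToScrape = new ConcurrentLinkedQueue<>();

    /** Queues the URL only if no thread has seen it before; returns true if it was queued. */
    boolean discover(String url) {
        // add() checks and inserts in one atomic step, unlike contains() followed by add()
        if (pagesDiscovered.add(url)) {
            pagesToScrape.add(url);
            return true;
        }
        return false;
    }

    int queueSize() {
        return pagesToScrape.size();
    }

    /** Lets several threads discover the same URL at once and returns the resulting queue size. */
    static int raceOnSameUrl(int threads) {
        AtomicDiscoverySketch sketch = new AtomicDiscoverySketch();
        CountDownLatch start = new CountDownLatch(1);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                try {
                    start.await(); // all workers wait here so they start together
                    sketch.discover("https://scrapeme.live/shop/page/2/");
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            workers[i].start();
        }
        start.countDown();
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return sketch.queueSize();
    }

    public static void main(String[] args) {
        System.out.println(raceOnSameUrl(8)); // 1
    }
}
```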

Then, you can use an ExecutorService to run tasks asynchronously. Thanks to ExecutorService, you can execute and manage parallel tasks with little effort. Specifically, newFixedThreadPool() allows you to initialize an ExecutorService backed by a pool of as many threads as the number passed to the method, so that many tasks can run simultaneously.
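Stripped of the scraping details, the pattern boils down to a few lines. The following is a minimal sketch, where FixedPoolSketch and processPages() are hypothetical names and the task body is only a placeholder for a call such as scrapeProductPage():

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch of the fixed thread pool pattern on its own.
final class FixedPoolSketch {
    private FixedPoolSketch() {}

    /** Runs one task per page number on a pool of poolSize threads and returns the collected labels. */
    static List<String> processPages(int pages, int poolSize) {
        List<String> results = Collections.synchronizedList(new ArrayList<>());
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);

        for (int page = 1; page <= pages; page++) {
            final int current = page;
            // placeholder task; the scraper would fetch and parse a page here
            pool.execute(() -> results.add("page-" + current));
        }

        pool.shutdown(); // no new tasks accepted, queued ones still run
        try {
            pool.awaitTermination(30, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return results;
    }

    public static void main(String[] args) {
        List<String> results = processPages(10, 4);
        System.out.println(results.size() + " tasks completed");
    }
}
```

Note that the order of the labels in the result list depends on thread scheduling, so don't rely on it.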

You don't want to overload the target server or your local machine. This is why you need to add a delay of a few milliseconds between task submissions with sleep(). Your goal is to perform web scraping, not a DoS attack.

Then, always remember to shut down your ExecutorService and release its resources. Since some tasks may still be running when the code exits the while loop, you should use the awaitTermination() method.

You must call this method after a shutdown request. In detail, awaitTermination() blocks the calling thread and waits for all tasks to complete within the interval of time passed as a parameter. It returns false if the timeout elapses first.
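Since awaitTermination() reports whether the tasks actually finished, you may want to react when they didn't. Here is a minimal sketch of a shutdown helper, where ShutdownSketch and shutdownAndAwait() are hypothetical names, that falls back to shutdownNow() on timeout:

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical helper for illustration only.
final class ShutdownSketch {
    private ShutdownSketch() {}

    /** Returns true if all tasks completed within the timeout, false otherwise. */
    static boolean shutdownAndAwait(ExecutorService pool, long timeout, TimeUnit unit) {
        pool.shutdown(); // stop accepting new tasks; already submitted tasks keep running
        try {
            if (pool.awaitTermination(timeout, unit)) {
                return true;
            }
            pool.shutdownNow(); // timeout elapsed: interrupt the tasks still running
            return false;
        } catch (InterruptedException e) {
            pool.shutdownNow();
            Thread.currentThread().interrupt(); // preserve the interrupt status
            return false;
        }
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        pool.execute(() -> System.out.println("scraping..."));
        System.out.println("finished in time: " + shutdownAndAwait(pool, 5, TimeUnit.SECONDS));
    }
}
```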

Run this Java web scraping example script and you'll experience a noticeable increase in performance compared to before. You just learned how to speed up web scraping with Java.

Well done! You now know how to do parallel web scraping with Java! But there are still a few lessons to learn!

Scraping Dynamic Content Websites in Java

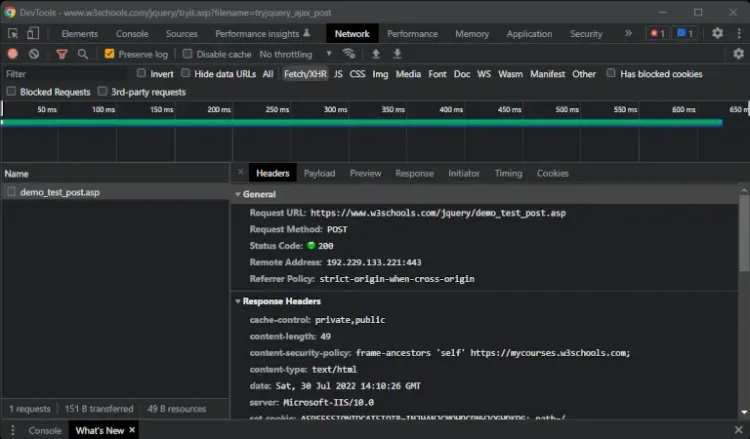

Don't forget that a web page is more than its corresponding HTML document. Web pages can perform HTTP requests in the browser via AJAX. This mechanism allows web pages to retrieve data asynchronously and update the content shown to the user accordingly.

Most websites now rely on frontend API requests to retrieve data. These requests are AJAX calls. So, these API calls provide valuable data you can't ignore when it comes to web scraping. You can sniff these calls, replicate them in your scraping script, and retrieve this data.

To sniff an AJAX call, use your browser's DevTools. Right-click on a web page, choose "Inspect", and select the "Network" tab. In the "Fetch/XHR" tab, you'll find the list of AJAX calls the web page executed, as below.

Here, you can retrieve all the info you need to replicate these calls in your web scraping script. Yet, this isn't the best approach.
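For reference, replicating such a call could look like the minimal sketch below. It assumes Java 11 or later for the built-in java.net.http client, and everything specific in it is hypothetical: the AjaxCallSketch class, the example.com endpoint, and the two headers, which you'd replace with the URL and headers you actually see in the "Network" tab:

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical sketch; the endpoint and headers are placeholders, not a real API.
final class AjaxCallSketch {
    private AjaxCallSketch() {}

    /** Builds a GET request that mimics a call copied from the browser's Network tab. */
    static HttpRequest buildRequest(String endpoint) {
        return HttpRequest.newBuilder(URI.create(endpoint))
                .header("Accept", "application/json")
                // assumption: some frontends send this header with their AJAX calls
                .header("X-Requested-With", "XMLHttpRequest")
                .GET()
                .build();
    }

    /** Sends the request and returns the raw response body, typically a JSON string. */
    static String fetch(String endpoint) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response =
                client.send(buildRequest(endpoint), HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) {
        // only builds and prints the request; call fetch() against a real endpoint to send it
        HttpRequest request = buildRequest("https://example.com/api/products?page=2");
        System.out.println(request.method() + " " + request.uri());
    }
}
```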

Web Scraping With a Headless Browser

Web pages perform most of the AJAX calls in response to user interaction. This is why you need a tool to load a web page in a browser and replicate user interaction. This is what a headless browser is about.

In detail, a headless browser is a web browser with no GUI that enables you to programmatically control a web page. In other terms, a headless browser allows you to instruct a web browser to perform some tasks.

Thanks to a headless browser, you can interact with a web page through JavaScript as a human being would. One of the most popular libraries in Java offering headless browser functionality is Selenium WebDriver.

Note that ZenRows API comes with headless browser capabilities. Learn more about how to extract dynamically loaded data.

If you use Gradle, add selenium-java with the line below in the dependencies section of your build.gradle file:

implementation "org.seleniumhq.selenium:selenium-java:4.14.1"

Otherwise, if you use Maven, insert the following lines in your pom.xml file:

<dependency>

<groupId>org.seleniumhq.selenium</groupId>

<artifactId>selenium-java</artifactId>

<version>4.14.1</version>

</dependency>

Make sure to install your new dependency by running the update command from the terminal or in your IDE. Ensure you have the latest version of Chrome installed. Older versions of Selenium required you to download and configure a browser driver manually, but since version 4.6, Selenium Manager takes care of that automatically. To verify the installation, check the dependencies section of your build.gradle file if you use Gradle, or your pom.xml file if you use Maven. You're now ready to start using Selenium.

You can replicate the web scraping logic seen above on a single page with the following script:

import org.openqa.selenium.*;

import org.openqa.selenium.chrome.ChromeOptions;

import org.openqa.selenium.chrome.ChromeDriver;

import org.openqa.selenium.support.ui.WebDriverWait;

import java.util.*;

import com.zenrows.data.PokemonProduct;

public class Main {

public static void main(String[] args) {

// defining the options to run Chrome in headless mode

ChromeOptions options = new ChromeOptions();

options.addArguments("--headless");

// initializing a Selenium WebDriver ChromeDriver instance

// to run Chrome in headless mode

WebDriver driver = new ChromeDriver(options);

// connecting to the target web page

driver.get("https://scrapeme.live/shop/");

// initializing the list of Java object to store

// the scraped data

List<PokemonProduct> pokemonProducts = new ArrayList<>();

// retrieving the list of product HTML elements

List<WebElement> products = driver.findElements(By.cssSelector("li.product"));

// iterating over the list of HTML products

for (WebElement product : products) {

PokemonProduct pokemonProduct = new PokemonProduct();

// extracting the data of interest from the product HTML element

// and storing it in pokemonProduct

pokemonProduct.setUrl(product.findElement(By.tagName("a")).getAttribute("href"));

pokemonProduct.setImage(product.findElement(By.tagName("img")).getAttribute("src"));

pokemonProduct.setName(product.findElement(By.tagName("h2")).getText());

pokemonProduct.setPrice(product.findElement(By.tagName("span")).getText());

// adding pokemonProduct to the list of the scraped products

pokemonProducts.add(pokemonProduct);

}

// ...

driver.quit();

}

}

As you can see, the web scraping logic isn't that different from that seen before. What truly changes is that Selenium runs the web scraping logic in the browser. This means that Selenium has access to all features offered by a browser.

For example, you can click on a pagination element to directly navigate to a new page as below:

WebElement paginationElement = driver.findElement(By.cssSelector("a.page-numbers"));

// navigating to a new web page

paginationElement.click();

// wait for the page to load...

System.out.println(driver.getTitle()); // "Products – Page 2 – ScrapeMe"

In other words, Selenium allows you to perform web crawling by interacting with the elements in a web page, just like a human being would. This makes a web scraper based on a headless browser harder to detect and block. Learn more on how to perform web scraping without getting blocked.

Other Web Scraping Libraries For Java

Other useful Java libraries for web scraping are:

- HtmlUnit: a GUI-less/headless browser for Java. HtmlUnit can perform all browser-specific operations on a web page. Like Selenium, it was born for testing but you can use it for web crawling and scraping.

- Playwright: an end-to-end testing library for web apps developed by Microsoft. Again, it enables you to instruct a browser. So, you can use it for web scraping like Selenium.

Conclusion

In this web scraping Java tutorial, you learned everything you should know about performing professional web scraping with Java. In detail, you saw:

- Why Java is a good programming language when it comes to web scraping

- How to perform basic web scraping in Java with Jsoup

- How to crawl an entire website in Java

- Why you might need a headless browser

- How to use Selenium to perform scraping in Java on dynamic content websites

What you should never forget is that your web scraper needs to be able to bypass anti-scraping systems. This is why you need a complete web scraping Java API. ZenRows offers that and much more.

In detail, ZenRows is a tool that offers many services to help you perform web scraping. ZenRows also gives access to a headless browser with just a simple API call. Try ZenRows for free and start scraping data from the web with no effort.
