Data is the world's most valuable asset. Companies know this well, which is why they try to protect their own at all costs. Some of it is publicly accessible through the web. But they don't want competitors to steal it with web scraping. That's why more and more websites are adopting anti-scraping measures.

In this article, you'll learn everything you need to know about the most popular anti-scraping techniques. Of course, you'll also see how to defeat them.

Let's get started!

What Is Anti-Scraping?

Anti-scraping refers to all the techniques, tools, and approaches used to protect online data against scraping. In other words, anti-scraping makes it more difficult to automatically extract data from a web page. Specifically, it's about identifying and blocking requests from bots or malicious users.

For this reason, anti-scraping also includes anti-bot protection and anything you can do to block and restrict scrapers. If you aren't familiar with this, anti-bot is a technology that aims to block unwanted bots. The word "unwanted" matters because not all bots are bad. For example, Googlebot crawls your website so that Google can index it.

Now, you might be asking the following:

What Is the Difference Between Anti-Scraping and Scraping?

Scraping and anti-scraping are two opposite concepts. The former is about extracting data from web pages using scripts. In contrast, the latter is concerned with protecting the information contained in a web page.

The two concepts are inherently connected. Anti-scraping techniques evolve based on the methods scrapers use to retrieve web data. At the same time, scraping technologies evolve to prevent their spiders from being recognized and blocked.

Now, the following question should arise.

How Do You Stop Scraping?

There are several techniques behind anti-scraping technology, as well as many anti-scraping software products and services. They're becoming increasingly complex and effective against web scrapers.

At the same time, keep in mind that preventing web scraping isn't an easy task. As anti-scraping techniques evolve, so do ways to bypass them. But it's fundamental to know what challenges await you.

How Do You Bypass Anti-Scraping?

Bypassing anti-scraping means finding a way to overcome all data protection systems that a website implements. The best way to skip them is to know how they work and what to expect.

Only this way can you equip your spider with the necessary tools.

To understand how these techniques work, let's have a look at the most popular anti-scraping approaches.

Top Seven Anti-Scraping Techniques

If you want your spider to be effective, you need to address all the obstacles you may face. So, let's dig into the seven most popular and widely adopted anti-scraping techniques and how to overcome them.

1. Auth Wall or Login Wall

Does the following image look familiar to you?

Many websites, such as LinkedIn, hide their data behind an auth wall, also called a login wall. That's especially true when it comes to social platforms like Twitter and Facebook. When a website implements a login wall, only authenticated users can access its data.

A server identifies a request as authenticated based on its HTTP headers. In detail, some cookies store the values to send as authentication headers. If you aren't familiar with this concept, an HTTP cookie is a small piece of data stored in the browser. The browser creates the login cookies based on the response obtained from the server after login.

So, to crawl sites that adopt a login wall, your crawler must first have access to those cookies. The values contained in them are sent as HTTP headers. After logging in, you can retrieve the values by looking at a request in the DevTools.

Similarly, your spider can use a headless browser to simulate the login operation and then navigate the site. This could make the logic of your scraping process more complex. Luckily, ZenRows API handles headless browsers for you.

Note that, in this case, you must have valid credentials for your target website if you want to scrape it.
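As a minimal sketch of the cookie-based approach, the Python snippet below builds a request that carries session cookies copied from DevTools. The cookie names (`sessionid`, `csrftoken`), their values, and the URL are placeholders rather than what any real site uses, so swap in whatever your target sets after login.

```python
from urllib.request import Request


def build_authenticated_request(url: str, cookies: dict[str, str]) -> Request:
    """Return a request that sends the given cookies in a Cookie header."""
    # A Cookie header is a list of name=value pairs separated by "; ".
    cookie_header = "; ".join(f"{name}={value}" for name, value in cookies.items())
    return Request(url, headers={"Cookie": cookie_header})


# Hypothetical cookie names and values; copy the real ones from DevTools.
request = build_authenticated_request(
    "https://example.com/profile",
    {"sessionid": "abc123", "csrftoken": "xyz789"},
)
print(request.get_header("Cookie"))
```

Passing the request to `urllib.request.urlopen` would then send it with those cookies attached. Keep in mind that session cookies expire, so a long-running spider has to refresh them from time to time.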

2. IP Address Reputation

One of the simplest techniques involves blocking requests from a particular IP. Let's elaborate:

The website tracks the requests it receives. When too many come from the same IP, the website bans it.

At the same time, the site might block the IP because it makes requests at regular intervals, something that's unlikely for a human user. Thus, these get marked as generated by a bot. That's one of the most common anti-bot protection systems.
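To make the idea concrete, here's a rough sketch of how a site might count requests per IP over a sliding time window. The class name, the limit of 5 requests, and the 10-second window are illustrative assumptions. Real anti-bot systems are considerably more sophisticated.

```python
from collections import defaultdict, deque


class IpRateTracker:
    """Flag an IP that sends more than max_requests within window_seconds."""

    def __init__(self, max_requests: int = 5, window_seconds: float = 10.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # IP -> timestamps of recent requests

    def is_blocked(self, ip: str, now: float) -> bool:
        """Record a request at time `now` and report whether the IP is over the limit."""
        hits = self._hits[ip]
        hits.append(now)
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] > self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests


tracker = IpRateTracker(max_requests=5, window_seconds=10.0)
# Six requests in under three seconds from one IP: only the sixth exceeds the limit.
results = [tracker.is_blocked("203.0.113.7", now=t * 0.5) for t in range(6)]
print(results)
```

A scraper that spaces its requests out so that fewer than the limit fall inside any window would never trip this particular check.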

Keep in mind that such bans can damage your IP address's reputation permanently. Check here if an IP has already been compromised. Remember this as a general rule: avoid using your own IP when scraping.

One way to avoid IP-based blocking is to introduce random delays between requests. Another is to use an IP rotation system via premium proxy servers. Note that ZenRows offers an excellent premium proxy service at an affordable price.
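Random delays and proxy rotation can be sketched in a few lines of Python. The proxy URLs below are placeholders and the delay bounds are arbitrary assumptions. The snippet only plans which proxy and delay to use for each request and doesn't send anything.

```python
import itertools
import random


def plan_requests(urls, proxies, min_delay=1.0, max_delay=5.0):
    """Pair each URL with the next proxy in rotation and a random delay in seconds."""
    proxy_cycle = itertools.cycle(proxies)
    return [
        (url, next(proxy_cycle), random.uniform(min_delay, max_delay))
        for url in urls
    ]


# Placeholder proxy addresses; replace them with the proxies you actually use.
proxies = ["http://proxy1.example:8080", "http://proxy2.example:8080"]
urls = [f"https://example.com/page/{n}" for n in range(1, 4)]

for url, proxy, delay in plan_requests(urls, proxies):
    print(f"wait {delay:.2f}s, then fetch {url} via {proxy}")
```

In a real scraper, you'd call `time.sleep(delay)` before each request and route it through the chosen proxy.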

3. User Agent and/or Other HTTP Headers

Just like IP-based banning, anti-scraping technologies can use some HTTP headers to identify malicious requests and block them. Again, the website keeps track of the last requests received. If these don't contain an accepted set of values in some header, it blocks them.

In detail, the most relevant header you should take into account is the User-Agent. This is a string that identifies the application, operating system, vendor, and/or version of the client the request comes from. So, your crawlers should always set a real User-Agent.

Similarly, the anti-scraping system may block requests that don't have a Referer header (the HTTP specification spells it with a single "r"). This header is a string that contains an absolute or partial address of the page that makes the request.
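Here's a small sketch of setting both headers with Python's standard library. The User-Agent string mimics a desktop Chrome browser and the Referer value is just an example; both are assumptions to replace with values that make sense for your target. Note that the HTTP header name is spelled `Referer`.

```python
from urllib.request import Request

# Example header values; the User-Agent string mimics a desktop Chrome browser
# and is an assumption, so use one that matches a current real browser.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "Referer": "https://www.google.com/",
}


def build_browser_like_request(url: str) -> Request:
    """Return a request carrying browser-like User-Agent and Referer headers."""
    return Request(url, headers=BROWSER_HEADERS)


request = build_browser_like_request("https://example.com/products")
# urllib normalizes header names with str.capitalize(), hence "User-agent".
print(request.get_header("User-agent"))
print(request.get_header("Referer"))
```

Without an explicit User-Agent, `urllib` identifies itself as `Python-urllib`, which is an easy signal for an anti-scraping system to block.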

4. Honeypots

A honeypot is a decoy system designed to look like a legitimate one. Honeypots tend to have deliberate security weaknesses. Their goal is to divert malicious users and bots from real targets. Also, through these honeypots, protection systems can study how attackers act.

When it comes to anti-scraping, a honeypot can be a fake website that doesn't implement any protection measures. It generally provides false or wrong data. Also, it may be collecting info from the requests it receives to train the anti-scraping systems.

The only way to avoid a honeypot trap is to make sure that the data on the target website is real. Otherwise, you can limit the threat by protecting your IP address behind a proxy server.

A web proxy acts as an intermediary between your computer and the rest of the internet. When you use it to perform requests, the target website will see the IP address of the proxy server instead of yours. **This stops the honeypot trap from being effective.**

You should also avoid following hidden links (those marked with the display: none or visibility: hidden CSS rule) when crawling a website. That's because honeypot pages typically come from links that are contained in the page but invisible to the user.
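As a simple illustration, the sketch below collects links from a page while skipping anchors hidden with inline CSS. It's a simplified assumption of how a crawler might filter links: it only inspects the `style` attribute of the `<a>` tag itself, so links hidden through stylesheets or parent elements would need a real browser to detect. The class name and sample HTML are made up for the example.

```python
from html.parser import HTMLParser


class VisibleLinkCollector(HTMLParser):
    """Collect href values from <a> tags that aren't hidden by inline CSS."""

    HIDDEN_RULES = ("display:none", "visibility:hidden")

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        # Normalize the inline style so "display: none" matches "display:none".
        style = (attributes.get("style") or "").replace(" ", "").lower()
        if any(rule in style for rule in self.HIDDEN_RULES):
            return  # likely a honeypot link, so skip it
        if attributes.get("href"):
            self.links.append(attributes["href"])


html = """
<a href="/products">Products</a>
<a href="/trap" style="display: none">Secret</a>
<a href="/trap2" style="visibility: hidden;">Hidden</a>
<a href="/about">About</a>
"""

collector = VisibleLinkCollector()
collector.feed(html)
print(collector.links)
```

Only `/products` and `/about` end up in `collector.links`, so the crawler never requests the two hidden URLs.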

5. JavaScript Challenges

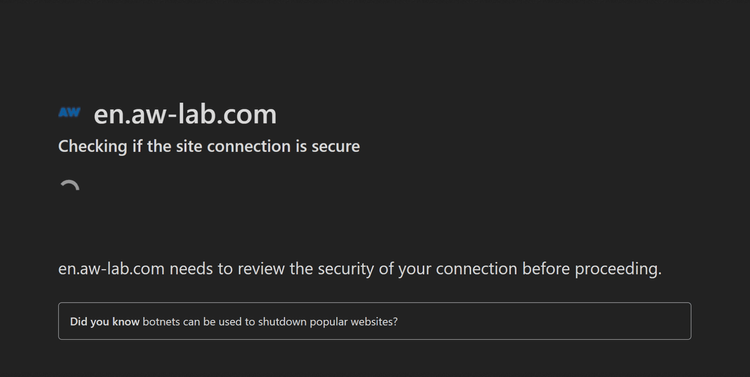

A JavaScript challenge is a mechanism that anti-scraping systems use to prevent bots from accessing a webpage.

Every user, even legitimate ones, can face hundreds of JS challenges for a single page. Any browser with JavaScript enabled will be automatically able to understand and execute them.

The challenge adds a delay of a few seconds. That's the time the anti-bot system needs to perform its checks. Everything happens automatically, without the user even realizing it.

So, any spider that isn't equipped with a JavaScript stack won't be able to pass the challenge. And since crawlers generally perform server-to-server requests without a browser, they won't be able to bypass the anti-scraping system.

If you want to overcome such a challenge, you need a browser. Your scraper could control a headless browser through an automation tool such as Selenium. These tools run a real browser behind the scenes, without the graphical interface.

Cloudflare and Akamai offer some of the most difficult JavaScript challenges on the market. Avoiding them isn't easy, but it's definitely possible. Check our step-by-step guide to learn how to bypass Cloudflare and Akamai.

6. CAPTCHAs

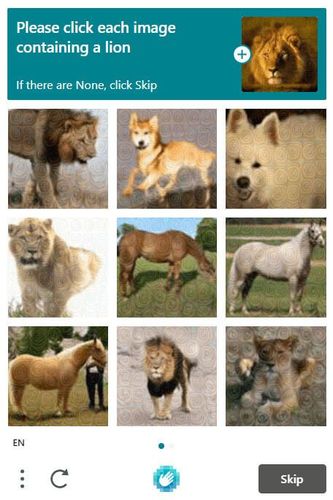

A CAPTCHA is a type of challenge-response test used to determine whether a user is human. It involves solving a problem that is meant to be easy for humans but hard for machines. For example, a CAPTCHA may ask you to select images of a particular animal or object.

CAPTCHAs are one of the most popular anti-bot protection systems. That's especially true considering that many CDN (Content Delivery Network) services now offer them as built-in anti-bot solutions.

They prevent non-human automated systems from accessing and browsing a site. In other words, they prevent scrapers from crawling a website. At the same time, there are ways to overcome them automatically.

Learn more about the ways to automate CAPTCHA solving.

7. User Behavior Analysis

UBA (User Behavior Analytics) is about collecting, tracking, and processing user data through monitoring systems. Then, a user behavior analysis process determines whether the current user is a human or a bot.

During this process, anti-scraping software uses UBA to look for patterns of human behavior. If it doesn't find them, the system labels the user as a bot and blocks it. This is because any anomaly represents a potential threat.

Bypassing these systems can be very challenging as they evolve based on the data they collect about users. Since they depend on artificial intelligence and machine learning, the current solutions may not work in the future. Only advanced scraping services, like ZenRows, offer a solution that can keep up with the challenge.

Conclusion

You've got an overview of everything essential you should know about anti-scraping techniques, from basic to advanced approaches. As shown above, there are lots of ways you might get blocked. However, there are also several methods and tools to counteract those anti-scraping measures.

At the end of the day, you have to be aware of what you're up against if you want to have a chance to beat it. Let's see a quick recap of the knowledge you now possess. Today, you learned:

- What anti-scraping is and how it differs from scraping.

- How anti-scraping systems work.

- What the most popular and widely adopted anti-scraping techniques are, and how to bypass them.

If you liked this, take a look at our guide on web scraping without getting blocked. Or just use ZenRows to save yourself the trouble. Try it for free today!
