Did your scraper get blocked again? That's frustrating, but we've been there and will share with you ten easy solutions to get the data you want.

Here's a short overview of what to try to succeed at web scraping without getting blocked:

- Set real request headers.

- Use proxies.

- Use premium proxies.

- Use headless browsers.

- Outsmart honeypot traps.

- Avoid fingerprinting.

- Bypass anti-bot systems.

- Automate CAPTCHA solving.

- Use APIs to your advantage.

- Stop repeated failed attempts.

Types of Techniques to Avoid Getting Blocked

You need to make your scraper undetectable to be able to extract data from a webpage, and the main types of techniques for that are imitating a real browser and simulating human behavior. For example, a normal user wouldn't make 100 requests to a website in one minute.

You'll learn proven tips and discover some tools to quickly implement them into your codebase.

1. Set Real Request Headers

As we mentioned, your scraper activity should look as similar as possible to a regular user browsing the target website. Web browsers usually send a lot of information that HTTP clients or libraries don't.

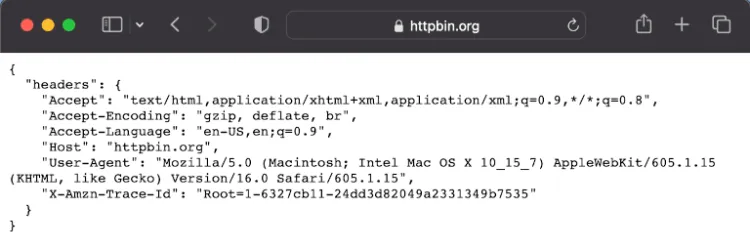

Luckily, this is easy to solve. First, go to Httpbin and check the request headers your current browser sends. In our case, we got this:

One of the most important headers for web scraping is User-Agent. That string informs the server about the operating system, vendor, and version of the requesting user agent.

Then, using the library of your preference, set these headers, so the target website thinks your web scraper is a regular web browser.

For specific instructions, you can check our guides on how to set headers for JavaScript, PHP, and Python.
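As a rough illustration of setting browser-like headers, here's a minimal Node.js sketch. The header values are assumptions modeled on a desktop Chrome browser, and `buildHeaders` and `fetchWithBrowserHeaders` are hypothetical helper names, so swap in the values Httpbin reports for your own browser.

```javascript
// Example header set modeled on a desktop Chrome browser.
// The exact values are assumptions; replace them with what Httpbin
// reports for your own browser.
const browserHeaders = {
  'User-Agent':
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 ' +
    '(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
  Accept: 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
  'Accept-Language': 'en-US,en;q=0.9',
  'Upgrade-Insecure-Requests': '1',
};

// Merges the default browser-like headers with per-request overrides.
function buildHeaders(extraHeaders = {}) {
  return { ...browserHeaders, ...extraHeaders };
}

// Sends a GET request with browser-like headers and returns the body as text.
// Relies on the global fetch available in Node.js 18 and later.
async function fetchWithBrowserHeaders(url, extraHeaders = {}) {
  const response = await fetch(url, { headers: buildHeaders(extraHeaders) });
  return response.text();
}
```

Calling `fetchWithBrowserHeaders('https://httpbin.org/headers')` lets you compare what the server receives against what your real browser sends.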

2. Use Proxies

If your scraper makes too many requests from an IP address, websites can block that IP. In that case, you can use a proxy server with a different IP. It'll act as an intermediary between your web scraping script and the website host.

There are many types of proxies. Using a free proxy, you can start testing how to integrate proxies with your scraper or crawler. You can find one in the Free Proxy List.

But keep in mind that they are usually slow and unreliable. They can also keep track of your activities and connections, self-identify as a proxy, or use IPs on banned lists.

There are better alternatives if you're serious about web scraping without getting blocked. For example, ZenRows offers an excellent premium proxies service.

Ideally, you want rotating IPs, so your activity seems to come from different users and does not look suspicious. That also helps if one IP gets banned or blacklisted because you can use others.

Another essential distinction between proxies is that some use a data center IP while others rely on a residential IP.

Data center IPs are reliable but are easy to identify and block. Residential IP proxies are harder to detect since they belong to an Internet Service Provider (ISP) that might assign them to an actual user.

How to Configure Your Scraper to Use a Proxy?

Once you get a proxy to use with your scraper, you need to connect the two. The exact process depends on the type of scraper you have.

If you're coding your web scraper with Python, we have a detailed guide on rotating proxies.

If your web scraper runs on Node.js, you can configure Axios or another HTTP client to use a proxy in the following way.

    const axios = require('axios');

    const proxy = {
      protocol: 'http',
      host: '202.212.123.44', // Free proxy from the list
      port: 80,
    };

    (async () => {
      const { data } = await axios.get('https://httpbin.org/ip', { proxy });
      console.log(data);
      // { origin: '202.212.123.44' }
    })();
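To get the rotating IPs mentioned earlier, one option is a small round-robin rotator that skips banned proxies. This is only a sketch: `createProxyRotator` is a hypothetical helper, and the pool uses placeholder addresses from a documentation-only IP range, so they won't work as real proxies.

```javascript
// Hypothetical proxy pool; the addresses come from a documentation-only
// IP range and must be replaced with proxies you actually control or rent.
const proxyPool = [
  { protocol: 'http', host: '203.0.113.10', port: 8080 },
  { protocol: 'http', host: '203.0.113.11', port: 8080 },
  { protocol: 'http', host: '203.0.113.12', port: 8080 },
];

// Returns an object that hands out proxies in round-robin order and
// skips any proxy that has been marked as banned.
function createProxyRotator(proxies) {
  const banned = new Set();
  let index = 0;

  return {
    // Returns the next usable proxy, or throws if every proxy is banned.
    next() {
      for (let tried = 0; tried < proxies.length; tried++) {
        const proxy = proxies[index];
        index = (index + 1) % proxies.length;
        if (!banned.has(proxy.host)) return proxy;
      }
      throw new Error('No usable proxies left in the pool');
    },
    // Marks a proxy as banned so next() no longer returns it.
    ban(proxy) {
      banned.add(proxy.host);
    },
  };
}
```

Each request can then take its `proxy` option from `rotator.next()`, and a proxy that starts returning block pages can be dropped with `rotator.ban(proxy)`.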

3. Use Premium Proxies for Web Scraping

High-speed, reliable web scraping proxies with residential IPs are sometimes referred to as premium proxies. Production crawlers and scrapers commonly use this type of proxy.

When selecting a proxy service, check that it works well for web scraping. If you pay for a high-speed, private proxy and your target website blocks its only IP, you might have just flushed your money down the toilet.

Companies like ZenRows provide premium proxies tailored for web scraping and web crawling. Another advantage is that ZenRows works as an API service with integrated proxies, so you don't have to tie the scraper and the proxy rotator together.

4. Use Headless Browsers

To avoid being blocked when web scraping, you want your interactions with the target website to look like regular users visiting the URLs. One of the best ways to achieve that is to use a headless web browser, which is a real web browser that works without a graphical user interface.

Most popular web browsers like Google Chrome and Firefox support headless mode. However, even if you use an official browser in headless mode, you need to make its behavior look real. It's common to add some special request headers to achieve that, like a User-Agent.

Selenium and other browser automation suites allow you to combine headless browsers with proxies. That will enable you to hide your IP and decrease the risk of being blocked.

To learn more about using headless browsers to prevent having your web scraper blocked, check out our detailed guides for Selenium, Playwright and Puppeteer.
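To show how a headless browser, a proxy, and a custom User-Agent can fit together, here's a small sketch that builds launch options for a Chromium-based browser. `buildLaunchOptions` is a hypothetical helper, and the proxy URL, User-Agent, and window size are assumptions you'd supply yourself.

```javascript
// Builds launch options for a Chromium-based headless browser.
// `proxyUrl`, `userAgent`, and the window size are caller-supplied
// assumptions; nothing here is specific to one target website.
function buildLaunchOptions({ proxyUrl, userAgent, width = 1366, height = 768 } = {}) {
  // Chromium command-line switches for window size, proxy, and User-Agent.
  const args = [`--window-size=${width},${height}`];
  if (proxyUrl) args.push(`--proxy-server=${proxyUrl}`);
  if (userAgent) args.push(`--user-agent=${userAgent}`);
  return { headless: true, args };
}
```

With Puppeteer, for instance, the returned object could be passed to `puppeteer.launch()`, which accepts `headless` and `args` options.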

5. Outsmart Honeypot Traps

Some websites will set up honeypot traps. These are mechanisms designed to attract bots while being unnoticed by real users. They can confuse crawlers and scrapers by making them work with fake data.

Let's learn how to get the honey without falling into the trap!

Some of the most basic honeypot traps are links that exist in the website's HTML code but are invisible to humans. Make your crawler or scraper identify links with CSS properties that make them invisible.

Ideally, your scraper shouldn't follow text links that have the same color as the background or are hidden from users on purpose. You can see a basic JavaScript snippet that identifies some invisible links in the DOM below.

    // Returns only the links that are not hidden with CSS.
    function filterLinks() {
      const allLinks = Array.from(document.querySelectorAll('a[href]'));
      console.log('There are ' + allLinks.length + ' total links');

      const visibleLinks = allLinks.filter(link => {
        const linkCss = window.getComputedStyle(link);
        const isDisplayed = linkCss.getPropertyValue('display') !== 'none';
        const isVisible = linkCss.getPropertyValue('visibility') !== 'hidden';
        return isDisplayed && isVisible;
      });

      console.log('There are ' + visibleLinks.length + ' visible links');
      return visibleLinks;
    }

Another fundamental way to avoid honeypot traps is to respect the robots.txt file. It's written only for bots and contains instructions about which parts of a website can be crawled or scraped and which should be avoided.
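As a rough idea of what respecting robots.txt can look like in code, here's a deliberately simplified sketch. It only reads the `Disallow` rules that apply to all bots and treats them as path prefixes, ignoring `Allow` rules, wildcards, and bot-specific groups. `parseDisallowRules` and `isPathAllowed` are hypothetical helper names.

```javascript
// Extracts the Disallow rules that apply to all bots ("User-agent: *").
// Simplification: Allow rules, wildcards, and bot-specific groups are ignored.
function parseDisallowRules(robotsTxt) {
  const rules = [];
  let groupAgents = [];
  let lastWasAgent = false;

  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split('#')[0].trim(); // drop comments
    const separator = line.indexOf(':');
    if (separator === -1) continue;

    const field = line.slice(0, separator).trim().toLowerCase();
    const value = line.slice(separator + 1).trim();

    if (field === 'user-agent') {
      // Consecutive User-agent lines belong to the same group.
      if (!lastWasAgent) groupAgents = [];
      groupAgents.push(value);
      lastWasAgent = true;
    } else {
      if (field === 'disallow' && value && groupAgents.includes('*')) {
        rules.push(value);
      }
      lastWasAgent = false;
    }
  }
  return rules;
}

// Returns true when no collected Disallow rule is a prefix of the path.
function isPathAllowed(path, disallowRules) {
  return !disallowRules.some(rule => path.startsWith(rule));
}
```

Your crawler could fetch `/robots.txt` once per site, parse it, and check `isPathAllowed` before queuing each URL. For production use, a full robots.txt parser is a safer choice than this sketch.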

Honeypot traps usually come together with tracking systems designed to fingerprint automated requests. This way, the website can identify similar requests in the future, even if they don't come from the same IP.

6. Avoid Fingerprinting

If you change a lot of parameters in your requests but your scraper is still blocked, you might've been fingerprinted. In other words, the anti-bot system uses some mechanism to identify you and block your activity.

To overcome fingerprinting mechanisms, make it more difficult for websites to identify your scraper. Unpredictability is key, so you should follow the tips below.

- Don't make the requests at the same time every day. Instead, send them at random times.

- Change IPs often.

- Forge and rotate TLS fingerprints. You can learn more about this in our article on bypassing Cloudflare.

- Use different request headers, including other User-Agents.

- Configure your headless browser to use different screen sizes, resolutions, and installed fonts.

- Use different headless browsers.
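A couple of the tips above, random timing and rotating User-Agents, can be sketched in a few lines. The User-Agent strings and the delay range are assumptions, and `fetchPage` is a hypothetical callback you'd implement with your HTTP client or headless browser.

```javascript
// Returns a random integer delay in milliseconds within [minMs, maxMs].
function randomDelay(minMs, maxMs) {
  return Math.floor(Math.random() * (maxMs - minMs + 1)) + minMs;
}

// Picks a random element from a non-empty array.
function pickRandom(items) {
  return items[Math.floor(Math.random() * items.length)];
}

// Resolves after the given number of milliseconds.
function sleep(ms) {
  return new Promise(resolve => setTimeout(resolve, ms));
}

// Example User-Agent strings; these values are assumptions and should be
// kept in sync with browsers that are actually in use.
const userAgents = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.1 Safari/605.1.15',
  'Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0',
];

// Visits each URL after a random pause, with a randomly chosen User-Agent.
// `fetchPage` is a hypothetical callback supplied by the caller.
async function crawlUnpredictably(urls, fetchPage, minMs = 2000, maxMs = 10000) {
  const results = [];
  for (const url of urls) {
    await sleep(randomDelay(minMs, maxMs));
    results.push(await fetchPage(url, pickRandom(userAgents)));
  }
  return results;
}
```

Keep in mind that a rotated User-Agent should stay consistent with the other headers you send, since a mismatch can itself be a fingerprinting signal.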

7. Bypass Anti-bot Systems

If your target website uses Cloudflare, Akamai, DataDome, PerimeterX, or a similar anti-bot service, your scraper has probably been blocked when requesting the URL. Bypassing these systems is challenging but possible.

Cloudflare, for example, uses different bot-detection methods. One of their most essential tools to block bots is the "waiting room". Even if you're not a bot, you should be familiar with this type of screen:

While you wait, some JavaScript code checks to ensure the visitor isn't a bot. The good news is that this code runs on the client side, so we can tamper with it. The bad news is that it's obfuscated and isn't always the same script.

We have a comprehensive guide on bypassing Cloudflare, but be warned: it's a long and difficult process. The best way to scrape and automatically overcome this type of protection is to use a web scraping API such as ZenRows.

8. Automate CAPTCHA Solving

Bypassing CAPTCHAs is among the most difficult obstacles when scraping a URL. These computer challenges are specifically made to tell humans apart from bots. Usually, they're placed in sections with sensitive information.

You should consider if you can still get the information you want, even if you leave out the protected sections, as it's tough to code a solution.

On the bright side, some companies offer to solve CAPTCHAs for you. They employ real humans for the job and charge per solved test. Some examples are 2Captcha and Anti Captcha.

Overall, CAPTCHAs are slow and expensive to solve. Wouldn't it be better to avoid them altogether? ZenRows' Anti-CAPTCHA will help you if you're after content protected by CAPTCHA. It'll get the content without any action on your side.

9. Use APIs to Your Advantage

Currently, much of the information that websites display comes from APIs. This data is difficult to scrape because it's usually requested dynamically with JavaScript after the user has executed some action.

Let's say you're trying to collect data from posts that appear on a website with "infinite scroll". In this case, static web scraping isn't the best option because you'll always get the results from the first page.

You can use headless browsers or a scraping service to configure user actions for these websites. ZenRows provides a web scraping API to do just that without complicated headless browser configurations.

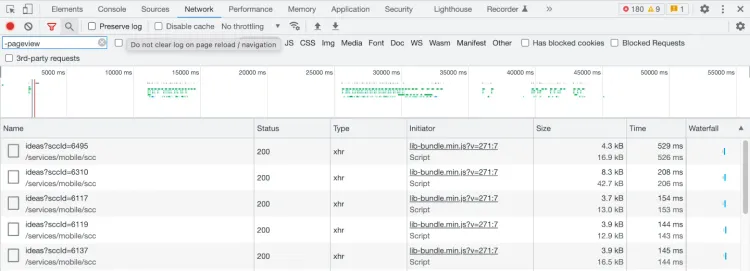

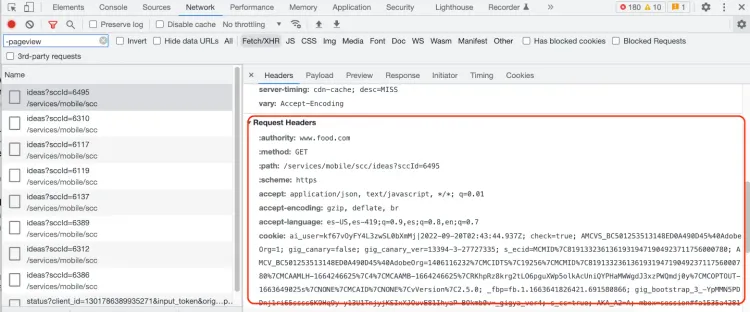

Alternatively, you can reverse engineer the APIs of the website. The first step is to use the network inspector of your preferred browser and check the XHR (XMLHttpRequest) requests that the page is making.

Then you should check the parameters sent, for example, page numbers, dates, or reference IDs. Sometimes these parameters use simple encodings to prevent the APIs from being used by third parties. In that case, you can find out how to send the appropriate parameters with trial and error.

Other times, you'll have to obtain authentication parameters with real users and browsers and send this information to the server as headers or cookies. In any case, you'll need to study carefully the requests the website makes to its API.

Sometimes, figuring out how a private API works can be a complex task, but if you manage to do it, the parsing job will be much simpler, as you'll get the information already organized and structured, usually in JSON format.
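Once you know the parameters, calling the API directly might look like the sketch below. The `page` and `limit` parameter names and the array-shaped JSON response are assumptions for illustration; use whatever the network inspector shows for your target site. `buildPageUrl` and `fetchAllPages` are hypothetical helpers.

```javascript
// Builds the URL for one page of a hypothetical JSON API.
// The `page` and `limit` parameter names are assumptions; use whatever
// the network inspector shows for the real site.
function buildPageUrl(baseUrl, page, limit = 20) {
  const url = new URL(baseUrl);
  url.searchParams.set('page', String(page));
  url.searchParams.set('limit', String(limit));
  return url.toString();
}

// Requests pages one by one until the API returns an empty list.
// Assumes each response body is a JSON array of items.
// Relies on the global fetch available in Node.js 18 and later.
async function fetchAllPages(baseUrl, headers = {}, maxPages = 50) {
  const items = [];
  for (let page = 1; page <= maxPages; page++) {
    const response = await fetch(buildPageUrl(baseUrl, page), { headers });
    if (!response.ok) {
      throw new Error(`Request failed with status ${response.status}`);
    }
    const batch = await response.json();
    if (!Array.isArray(batch) || batch.length === 0) break;
    items.push(...batch);
  }
  return items;
}
```

The `headers` argument is where any authentication headers or cookies captured from a real browser session would go.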

10. Stop Repeated Failed Attempts

One of the most suspicious situations for a webmaster is to see a large number of failed requests. Initially, they may not suspect that a bot is the cause, but they'll start investigating.

However, if they find that the errors come from a bot trying to scrape their data, they'll block your web scraper. That's why it's best to detect and log failed attempts and get notified when they happen so you can suspend scraping.

These errors usually happen because there have been changes to the website. Before continuing with data scraping, you'll need to adjust your scraper to accommodate the new website structure. This way, you'll avoid triggering alarms that can lead to being blocked.
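One way to detect and log failed attempts is a small guard that counts consecutive failures and tells the scraper when to pause. This is a sketch under assumptions: the threshold of five failures and the rule that any status of 400 or above counts as a failure are arbitrary choices, and `createFailureGuard` is a hypothetical helper.

```javascript
// Tracks consecutive failed responses and signals when scraping should pause.
// The default threshold of 5 is an arbitrary assumption; tune it per site.
function createFailureGuard(maxConsecutiveFailures = 5) {
  let consecutiveFailures = 0;
  const log = [];

  // True once the number of failures in a row reaches the threshold.
  function shouldStop() {
    return consecutiveFailures >= maxConsecutiveFailures;
  }

  // Records one response; any status of 400 or above counts as a failure.
  // A successful response resets the consecutive-failure counter.
  function record(url, status) {
    if (status >= 400) {
      consecutiveFailures++;
      log.push({ url, status, at: new Date().toISOString() });
    } else {
      consecutiveFailures = 0;
    }
    return shouldStop();
  }

  // Returns a copy of the failure log for reporting or notifications.
  function failures() {
    return [...log];
  }

  return { record, shouldStop, failures };
}
```

In a scraping loop, you'd call `guard.record(url, response.status)` after every request and stop, then send yourself a notification, as soon as it returns `true`.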

Conclusion

As you can see, some websites use multiple mechanisms to block you from scraping their content. Using only one technique to avoid being blocked might not be enough for successful scraping.

Let's recap the anti-block tips we saw in this post:

| Anti-scraper block | Workaround | Supported by ZenRows |
|---|---|---|
| Requests limit by IP | Rotating proxies | ✅ |
| Data center IPs blocked | Premium proxies | ✅ |
| Cloudflare and other anti-bot systems | Avoid suspicious requests and reverse-engineer JavaScript Challenge | ✅ |
| Browser fingerprinting | Rotating headless browsers | ✅ |
| Honeypot traps | Skipping invisible links and circular references | ✅ |
| CAPTCHAs on suspicious requests | Premium proxies and user-like requests | ✅ |
| Always-on CAPTCHAs | CAPTCHA-solving tools and services | ❌ |

Remember that even after applying these tips, you can be blocked. Save time on all of that!

At ZenRows, we use all the anti-block techniques discussed here and more. That's why our web scraping API can handle thousands of requests per second without being blocked.

On top of that, we can even create custom scrapers suited to your needs. You can try it for free today.

Frequent Questions

How Do I Scrape a Website Without Being Blocked?

Websites employ various techniques to prevent bot traffic from accessing their pages. That's why you're likely to run into firewalls, waiting rooms, JavaScript challenges, and other obstacles while web scraping.

Fortunately, you can minimize the risk of getting blocked by trying the following:

- Set real request headers.

- Use proxies.

- Use premium proxies for web scraping.

- Use headless browsers.

- Outsmart honeypot traps.

- Avoid fingerprinting.

- Bypass anti-bot systems.

- Automate CAPTCHA solving.

- Use APIs to your advantage.

- Stop repeated failed attempts.

Why Is Web Scraping Not Allowed?

Web scraping is legal but not always allowed because even publicly available data is often protected by copyright law and requires written authorization for commercial use. Luckily, you can scrape data legitimately by following fair use guidelines.

Also, a website may contain data protected by international regulations, like personal and confidential information, that requires explicit consent from the data subjects.

Can a Website Block You From Web Scraping?

Yes, if a website detects that your tool is breaching the rules outlined in its robots.txt file, or if your tool triggers an anti-bot measure, it'll block your scraper.

Some basic precautions you can take to avoid bans are to use proxies with rotating IPs and to ensure your request headers appear real. Moreover, your scraper should behave like a human as much as possible without sending out too many requests too fast.

Why Do Websites Block Scraping?

Websites have many reasons to prevent bot access to their pages. For example, many companies sell data, so they block scrapers to protect their income. Furthermore, security measures against hackers and unauthorized data use ban all bots, including scrapers.

Another concern is that poorly designed scrapers can overload the site's servers with requests, causing monetary costs and disrupting the user experience.
