The internet is a vast source of data if you know how to extract it. Thus, the demand for web scraping has grown rapidly in recent years, and Python has become one of the most popular programming languages for the job.

In this step-by-step tutorial, you'll learn how to retrieve information using popular libraries such as Requests and Beautiful Soup.

Let's dive into the world of web scraping with Python!

What Is Web Scraping in Python

Web scraping is the process of retrieving data from the web. Even copying and pasting content from a page is a form of scraping! Yet, the term usually refers to a task performed by automated software, essentially a script (also called a bot, crawler, or spider) that visits a website and extracts the data of interest from its pages. In our case, that script is written in Python.

Many sites implement anti-scraping techniques for different reasons. But don't worry, because we'll show you how to get around them!

If you practice scraping responsibly, you're unlikely to run into legal issues. Just make sure that you're not violating the Terms of Service or extracting sensitive information, especially before building a large-scale project. That's one of the several web crawling best practices you must be aware of.

Roadmap for Python Web Scraping 101

Wondering what's ahead in your journey to learn web scraping with Python? Keep reading, and be assured we'll take you by the hand in your initial steps.

What You Need to Learn

Scraping is a step-by-step process that involves four main tasks. These are:

- Inspect the target site: Get a general idea of what information you can extract. To do this task:
  - Visit the target website to get familiar with its content and structure.
  - Study how HTML elements are positioned on the pages.
  - Find out where the most important data is and in which format it appears.

- Get the page's HTML code: Access the HTML content by downloading the page's documents. To accomplish that:
  - Use an HTTP client library, like Requests, to connect to your target website.
  - Make HTTP GET requests to the server with the URLs of the pages to scrape.
  - Verify that the server returned the HTML document successfully.

- Extract your data from the HTML document: Obtain the information you're after, usually a specific piece of data or a list of items. To achieve this:
  - Parse the HTML content with a data parsing library, like Beautiful Soup.
  - Select the HTML elements of interest with the same library.
  - Write the scraping logic to extract information from these elements.
  - If the website consists of many pages and you want to scrape them all:
    - Extract the links to follow from the current page and add them to a queue.
    - Take the next link from the queue, scrape that page, and repeat until the queue is empty.

- Store the extracted data: Once extracted, transform and store the data in a format that makes it easier to use. To do so:
  - Convert the scraped content to CSV, JSON, or similar formats and export it to a file.
  - Write it to a database.
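The link-following steps in the list above boil down to a queue-based loop. Here's a minimal sketch: get_links() and the FAKE_SITE link graph are hypothetical stand-ins for downloading a page and extracting its links (the pagination URLs are made up for illustration), and the visited set is an extra safeguard, not part of the steps above, that keeps the crawler from scraping the same page twice.

```python
from collections import deque

# Hypothetical link graph standing in for real pages: each key is a page
# URL, each value lists the links found on that page. In a real scraper,
# these links would come from parsing the downloaded HTML.
FAKE_SITE = {
    "https://scrapeme.live/shop/": [
        "https://scrapeme.live/shop/page/2/",
        "https://scrapeme.live/shop/page/3/",
    ],
    "https://scrapeme.live/shop/page/2/": [
        "https://scrapeme.live/shop/",
        "https://scrapeme.live/shop/page/3/",
    ],
    "https://scrapeme.live/shop/page/3/": [],
}


def get_links(url):
    # placeholder for "download the page and extract its links"
    return FAKE_SITE.get(url, [])


def crawl(start_url, max_pages=100):
    queue = deque([start_url])  # links waiting to be visited
    visited = set()             # pages already scraped
    order = []                  # visit order, for illustration

    while queue and len(order) < max_pages:
        url = queue.popleft()   # take the oldest link in the queue
        if url in visited:
            continue            # skip pages that were already scraped
        visited.add(url)
        order.append(url)
        # scraping logic for the current page would go here...
        for link in get_links(url):
            if link not in visited:
                queue.append(link)
    return order


print(crawl("https://scrapeme.live/shop/"))
```

Using a deque with popleft() makes the crawl breadth-first: pages are visited in the order their links were discovered. The max_pages cap is an arbitrary limit to keep a runaway crawl in check.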

Remark: Don't forget that online data isn't static! Websites keep changing and evolving, and the same goes for their content. Thus, to keep the scraped information up-to-date, you need to review and repeat this process periodically.

Scraping Use Cases

Web scraping in Python comes in handy in a variety of circumstances. Some of the most relevant use cases include:

- Competitor Analysis: Gather data from your competitors' sites about their products/services, features, and marketing approaches, to monitor them and gain a competitive edge.

- Price Comparison: Collect prices from several e-commerce platforms to compare them and find the best deals.

- Lead Generation: Extract contact information from websites, such as names, email addresses, and phone numbers, to create targeted marketing lists for businesses. But be aware of the laws in your country when personal information is involved.

- Sentiment Analysis: Retrieve news and posts from social media to track public opinion about a topic or a brand.

- Social Media Analytics: Collect data from social platforms, such as Twitter, Instagram, Facebook, and Reddit, to track the popularity and engagement of specific hashtags, keywords, or influencers.

Keep in mind that these are but a few examples; there are plenty of scenarios where retrieving information can make a difference. Take a look at our guide on web scraping use cases to learn more!

Challenges in Web Scraping

Anyone with internet access has the ability to create a site, which has made the web a chaotic environment characterized by ever-changing technologies and styles. Due to this, you'll have to deal with some challenges while scraping:

- Variety: The diversity of layouts, styles, content and data structures available online makes it impossible to write a single spider to scrape it all. Each website is unique. Thus, each web crawling script must be custom-built for the specific target.

- Longevity: Scraping involves extracting data from the HTML elements of a website, so its logic depends on the site's structure. But web pages can change their structure and content without notice! That can break your scraper and force you to adapt the data retrieval logic accordingly.

- Scalability: As the amount of data to collect increases, the performance of your Python web crawler becomes a concern. However, there are several solutions available to make your Python scraping process more scalable: you can use distributed systems, adopt parallel scraping, or optimize code performance.

Then, there's another category of challenges to consider: anti-bot technologies. These are the measures that sites take to protect themselves against malicious or unwanted bots, and scrapers fall into that category by default.

Some of these approaches are IP blocking, JavaScript challenges, and CAPTCHAs, which make data extraction less straightforward. Yet, you can bypass them using rotating proxies and headless browsers, for example. Or you can just use ZenRows to save yourself the hassle and easily get around them.

Alternatives to Web Scraping: APIs and Datasets

Some sites provide an official Application Programming Interface (API) to request and retrieve data. This approach is more stable because APIs don't change as often as page layouts, and you don't need to get around anti-scraping measures. At the same time, the data you can access through them is limited, and only a small fraction of websites offer an API.

Another option is buying ready-to-use datasets online, but you might not find one suitable for your specific needs on the market.

That's why web scraping remains the winning approach in most cases.

How to Scrape a Website in Python

Ready to get started? You'll build a real spider that retrieves data from ScrapeMe, a Pokémon-themed e-commerce site built for learning web scraping.

At the end of this step-by-step tutorial, you'll have a Python web scraper that:

- Downloads some target pages from ScrapeMe using Requests.

- Selects the HTML elements containing the data of interest.

- Scrapes the desired information with Beautiful Soup.

- Exports the information to a file.

What you'll see is just an example to get an understanding of how web scraping in Python works. Yet, keep in mind that you can apply what you'll learn here to any other website. The process may be more complex, but the key concepts to follow are always the same.

We'll also cover how to solve common errors and provide exercises to help you get a strong foundation.

Before getting your hands dirty with some code, you'll need to meet some prerequisites. Tackle them all now!

Set Up the Environment

To build a data scraper in Python, you need to download and install the following tools:

- Python 3.11+: This tutorial refers to Python 3.11.2, the latest at the time of writing.

- pip: The package installer for Python, which you can use to install libraries from the Python Package Index (PyPI) with a single command.

- A Python IDE: Any IDE that supports Python is ok. The free PyCharm Community Edition is the one we used.

Note: If you are a Windows user, don't forget to check the Add python.exe to PATH option in the installation wizard. This way, Windows will be able to use the python and pip commands in the terminal. Since Python 3.4 and later versions include pip by default, you don't need to install it manually.

You now have everything required to build your first web scraper in Python. Let's go!

Initialize a Python Project

Launch PyCharm and select the File > New Project... option on the menu bar.

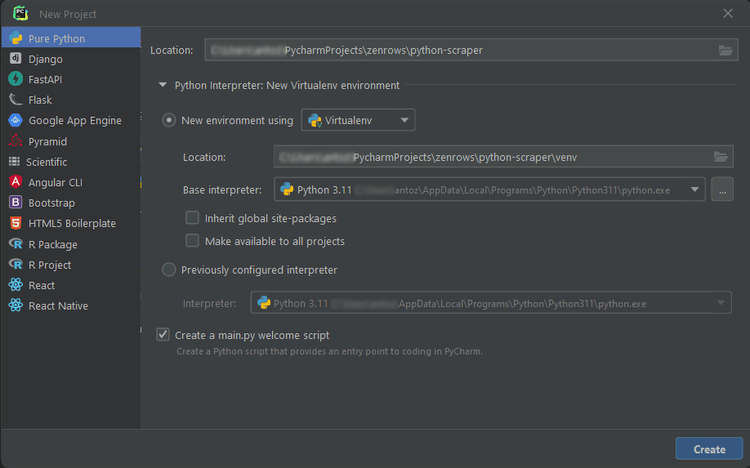

It'll open a pop-up. Select Pure Python from the left menu, and set up your project as below:

Make a folder for your project called python-scraper. Check the Create a main.py welcome script option, and click the Create button.

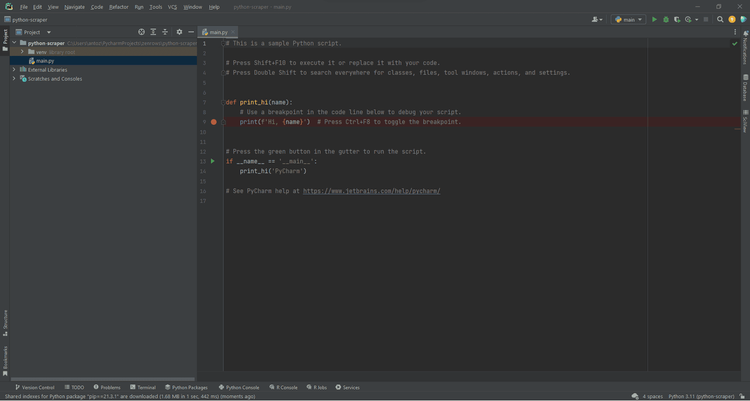

After you wait a bit for PyCharm to set up your project, you should see the following:

To verify that everything works, open the Terminal tab at the bottom of the screen and type:

```shell
python main.py
```

Launch this command, and you should get:

```
Hi, PyCharm
```

Fantastic! Your Python project works like a charm!

Rename main.py to scraper.py and remove all lines of code from it. You'll soon learn how to populate that file with some Beautiful Soup scraping logic in Python!

Step 1: Inspect Your Target Website

You might be tempted to go straight to coding, but that's not the best approach. First, you need to spend some time getting to know your target website. It may sound tedious or unnecessary, but it's the only way to study the site structure and understand how to scrape data from it. Every scraping project starts this way.

Make some coffee and open ScrapeMe in your favorite browser.

Browse the Website

Interact with the site as any ordinary user would. Explore different product list pages, try search features, enter a single product page, and add items to your shopping cart. Study how the site reacts and how its pages are structured.

Play with the site's buttons, icons, and other elements to see what happens. In particular, look at how the URL changes when you move between pages.

Analyze the URL Structure

A web server returns an HTML document based on the request URL, and each URL is associated with a specific page. Consider the Venusaur product page URL:

https://scrapeme.live/shop/Venusaur/

You can deconstruct any of them into two main parts:

- Base URL: The path to the shop section of the website. Here it's https://scrapeme.live/shop/.

- Specific page location: The path to the specific product. The URL may end with .html, .php, or have no extension at all.

All products available on the website will have the same base URL. What changes between each page is the latter part of it, containing a string that specifies what product page the server should return. Typically, URLs of same-type pages share a similar format overall.
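Python's standard library can assemble such URLs for you. This sketch uses urllib.parse.urljoin() to combine the base URL with the specific part of each product page; the slugs in product_slugs are assumptions for illustration, modeled on the Venusaur URL above.

```python
from urllib.parse import urljoin

BASE_URL = "https://scrapeme.live/shop/"

# hypothetical product slugs, modeled on the Venusaur URL
product_slugs = ["Bulbasaur/", "Venusaur/"]

# join the base URL with the specific part of each page
product_urls = [urljoin(BASE_URL, slug) for slug in product_slugs]
print(product_urls)
# ['https://scrapeme.live/shop/Bulbasaur/', 'https://scrapeme.live/shop/Venusaur/']
```

The trailing slash in BASE_URL matters: urljoin("https://scrapeme.live/shop", "Venusaur/") replaces the shop segment and returns https://scrapeme.live/Venusaur/.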

Additionally, URLs can contain extra information:

- Path parameters: These are used to capture specific values in a RESTful approach (e.g., in https://www.example.com/users/14, 14 is the path param).

- Query parameters: These are added to the end of a URL after a question mark (?). They generally encode filter values to send to the server when performing a search (e.g., in https://www.example.com/search?search=blabla&sort=newest, search=blabla and sort=newest are the query params).

Note that any query parameter string consists of:

- ?: It marks the beginning.

- A list of key=value parameters separated by &: key is the name of one parameter, while value is its value.

In other words, URLs are more than simple location strings to HTML documents. They can contain information in parameters, which the server uses to run queries and fill a page with specific data.

Take a look at the one below to understand how all this works:

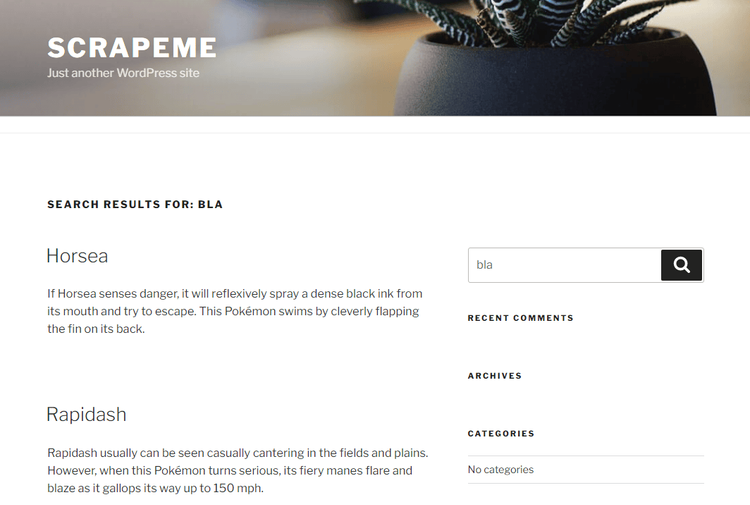

https://scrapeme.live/page/3/?s=bla

This corresponds to the URL of the third page in the list of search results for the bla query.

In that example, 3 is the path parameter, while bla is the value of the s query parameter. This URL instructs the server to run a paginated search query for all products that contain the string bla and to return only page three of the results.
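You can take such a URL apart, or rebuild it for another page, with urllib.parse. This is a sketch: build_search_url() is a hypothetical helper that simply follows the /page/<number>/?s=<query> pattern of the example URL, which may not hold for every page on the site.

```python
from urllib.parse import parse_qs, urlencode, urlparse

url = "https://scrapeme.live/page/3/?s=bla"

# split the URL into its components
parts = urlparse(url)
print(parts.path)             # /page/3/
print(parse_qs(parts.query))  # {'s': ['bla']}

# the page number is the last non-empty path segment
page_number = int(parts.path.strip("/").split("/")[-1])
print(page_number)            # 3


def build_search_url(page, query):
    # hypothetical helper: rebuild a paginated search URL
    # following the pattern observed above
    return f"https://scrapeme.live/page/{page}/?" + urlencode({"s": query})


print(build_search_url(3, "bla"))  # https://scrapeme.live/page/3/?s=bla
```

urlencode() also escapes special characters, so a query such as "red shirt" becomes s=red+shirt.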

📘 Exercise: Play around with the URL above. Change the path parameter and query string to see what happens. Similarly, play with the search input to see how the URL reacts.

📘 Solution: If you update the string in the search box and submit, the s query parameter in the URL will change accordingly. Vice versa, manual changes to that URL lead to different results displayed on the website.

Examine your target website to figure out the structure of its pages and how to retrieve data from the server.

Now that you know how ScrapeMe's page structure works, it's time to inspect the HTML code of its pages.

Use Developer Tools to Inspect the Site

You're now familiar with the website. The next step is to delve into the pages' HTML code to study its structure and content to learn how to extract data from it.

All modern browsers come with a suite of advanced developer tools, and most offer nearly the same features. These enable you to explore the HTML code of a web page and play with it. In this Python web scraping tutorial, you'll see Chrome's DevTools in action.

Right-click on an HTML element and select Inspect to open the DevTools window. If the site disabled the right-click menu, then do this:

- On macOS: Select View > Developer > Developer tools in the menu bar.

- On Windows and Linux: Click the top-right ⋮ menu button, and select the More Tools > Developer tools option.

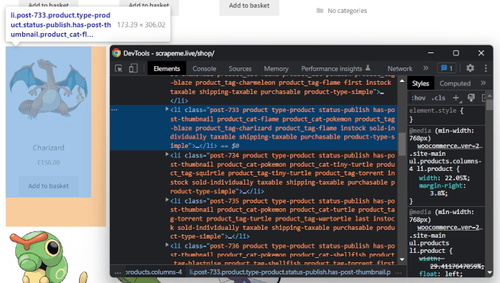

They let you inspect the structure of the Document Object Model (DOM) of a web page. This, in turn, helps you gain deeper insight into the source code. In DevTools, open the Elements tab to access the DOM.

As you can see, this shows the structure as a clickable tree of HTML nodes. The node selected on the right represents the code of the element highlighted on the left. Note that you can expand, collapse, and even edit these nodes directly in the DevTools.

To locate the position of an HTML element in the DOM, right-click on it and select Inspect. Hover over the HTML code to your right, and you'll see the corresponding elements light up on the page.

If you struggle to grasp the difference between the DOM and the HTML:

- The HTML code represents the content of a web page document written by a developer.

- The DOM is a dynamic, in-memory representation of HTML code created by the browser. In JavaScript, you can manipulate the DOM of a page to change its content, structure, and styles.

📘 Exercise: What's the first class of the li product element containing Bulbasaur? And in which HTML tag is the product price contained? Explore the DOM with the DevTools to find out!

📘 Solution: The first class is post-759, while the HTML tag wrapping the price is a <span>.

Experiment and play around! The better you dig into the target pages, the easier it'll become to scrape them.

Buckle up! You're about to build a Python spider that navigates HTML code and extracts relevant data from it.

Step 2: Download HTML Pages

Get ready to ignite your Python engine! You're all set to write some code!

Assume you want to scrape data from:

https://scrapeme.live/shop/

You first need to retrieve the target page's HTML code. In other words, you have to download the HTML document associated with the page URL. To achieve this, use the Python requests library.

In the Terminal tab of your PyCharm project, launch the command below to install requests:

```shell
pip install requests
```

Open the scraper.py file and initialize it with the following lines of code:

```python
import requests

# download the HTML document
# with an HTTP GET request
response = requests.get("https://scrapeme.live/shop/")

# print the HTML code
print(response.text)
```

This snippet imports the requests library. Then, it uses the requests get() function to perform an HTTP GET request to the target page URL. get() returns a Response object, the Python representation of the server response, which contains the HTML document.

Print the text attribute of the response, and you'll get access to the code of the page you inspected earlier:

```html
<!doctype html>
<html lang="en-GB">
<head>
<meta charset="UTF-8">
<meta name="viewport" content="width=device-width, initial-scale=1, maximum-scale=2.0">
<link rel="profile" href="http://gmpg.org/xfn/11">
<link rel="pingback" href="https://scrapeme.live/xmlrpc.php">
<title>Products – ScrapeMe</title>
<!-- rest of the page omitted for brevity... -->
```

Common error: Forgetting the error handling logic!

The GET request to the server may fail for several reasons. The server may be temporarily unavailable, the URL may be wrong, or your IP might have been blocked. That's why you may want to handle errors as follows:

```python
import requests

response = requests.get("https://scrapeme.live/sh1op/")

# response.ok is True for status codes below 400
if response.ok:
    # scraping logic here...
    pass
else:
    # log the error response
    # in case of 4xx or 5xx
    print(response)
```

This way, the script won't crash on a 4xx or 5xx response and will run the scraping logic only when the request succeeds.
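Checking response.ok only covers HTTP error statuses. Network-level failures, such as timeouts, refused connections, or malformed URLs, make requests.get() raise an exception instead of returning a response. The sketch below catches those too; fetch_html() is a hypothetical helper, and the 10-second timeout is an arbitrary choice rather than a library default (requests waits indefinitely unless you pass timeout).

```python
import requests


def fetch_html(url, timeout=10):
    """Download a page and return its raw bytes, or None on failure."""
    try:
        response = requests.get(url, timeout=timeout)
        # turn 4xx and 5xx status codes into a requests.HTTPError
        response.raise_for_status()
    except requests.RequestException as error:
        # base class for connection errors, timeouts,
        # invalid URLs, and HTTP errors
        print(f"Request to {url!r} failed: {error}")
        return None
    return response.content


if __name__ == "__main__":
    html = fetch_html("https://scrapeme.live/shop/")
    if html is not None:
        # scraping logic here...
        print(f"Downloaded {len(html)} bytes")
```

Returning None on failure lets the caller decide whether to skip the page, retry, or stop, instead of letting one bad request crash a long scraping run.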

Fantastic! You just learned how to fetch the HTML code of a site's page from your Python scraping script!

Before writing some data scraping Python logic, let's see an overview of the types of sites you may come across.

Static-Content Websites

Static-content web pages don't perform AJAX requests to dynamically get other data. ScrapeMe is a static-content site. That means that the server sends back HTML pages that already contain all the content.

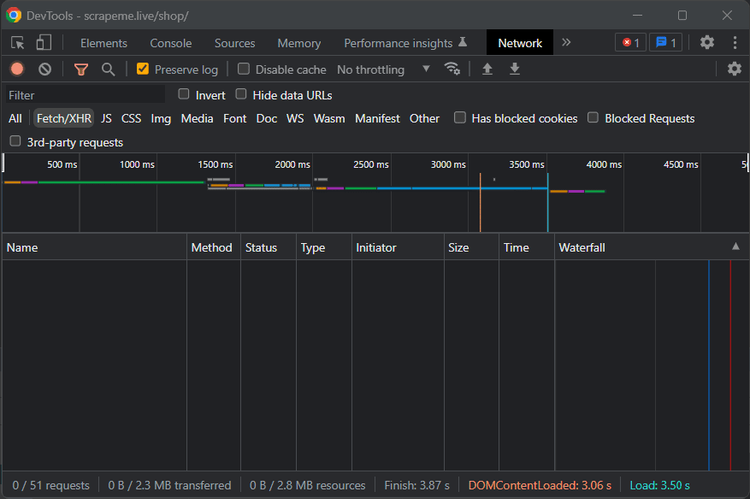

To check that, take a look at the Fetch/XHR filter in the Network tab of DevTools. Reload the page, and the list of requests should remain empty, as in the screenshot:

This means that the target page doesn't make AJAX calls to retrieve data at render time. Thus, what you get from the server is what you see on the page.

With static-content sites, you don't need complex logic to gain access to their content. All you have to do is make an HTTP web request to their server with the URL of the page. A piece of cake!

The requests Python library is enough to retrieve the HTML content. Then, you can parse the HTML response and start collecting relevant data immediately. Static content websites are the easiest to scrape.

Yet, there are far more challenging types to analyze!

Dynamic-Content Sites

Here, the server returns only a small part of the HTML content the user will see on the page. The browser generates the remaining content through JavaScript code, which makes API requests to get the data and builds the rest of the HTML dynamically.

With some technologies, the server barely sends any HTML. What you get instead is a ton of JavaScript code. This instructs the browser to create the desired HTML at render time based on the data it dynamically retrieved via API.

The code you get in the server response and what you see inspecting a page with developer tools are completely different. That's pretty common in apps developed in React, Vue, or Angular. These technologies use the clients' browser capabilities to offload work from the server.

Instead of loading new pages from the server, the app keeps the same page loaded. When the user navigates the web app, JavaScript takes care of getting the new information and updating the DOM. That's why these apps are called Single Page Applications (SPAs).

Keep in mind that HTTP clients like requests can't run JavaScript. For this reason, scraping sites with dynamic content requires tools with browser capabilities. With requests, you would only get what the server sends, which would be a lot of JS code you could not run.

To extract data from dynamic-content sites in Python, you can use requests-html. That's a project created by the author of requests that adds the ability to run JavaScript. Another choice is Selenium, one of the most popular browser automation libraries, which can control browsers in headless mode.

For those unfamiliar with the concept, a headless browser is one without a graphical user interface (GUI). With libraries like Selenium, you can instruct it to run tasks. They make for an ideal solution to run JavaScript code in Python!

Digging into how scraping dynamic-content sites works isn't the goal of this tutorial. If you want to learn more, check out our guide on headless browsers with Python!

Login-Wall Sites

Some websites restrict information behind a login wall. Only authorized users can overcome it and access particular pages.

To scrape data from those, you need an account. Logging in through the browser is one thing, but doing it programmatically in Python is another.

Since ScrapeMe isn't protected by a login, this tutorial will not cover authentication. But we have a solution for you! Follow our guide on how to scrape a website that requires a login with Python to learn more!

Step 3: Parse HTML Content With Beautiful Soup

In the previous step, you retrieved an HTML document from the server. If you take a look at it, you'll see a long string of code, and the only way to make sense of it is by extracting the desired data through HTML parsing.

Time to learn how to use Beautiful Soup!

Beautiful Soup is a Python library for parsing XML and HTML content that exposes an API to explore HTML code. In other words, it allows you to select HTML elements and easily extract data from them.

To install the library, launch the command below in the terminal:

```shell
pip install beautifulsoup4
```

Then, use it to parse the content retrieved with requests this way:

```python
import requests
from bs4 import BeautifulSoup

# download the target page
response = requests.get("https://scrapeme.live/shop/")

# parse the HTML content of the page
soup = BeautifulSoup(response.content, "html.parser")
```

The BeautifulSoup() constructor takes the content to parse and a string that specifies the parser to use. "html.parser" instructs Beautiful Soup to use Python's built-in HTML parser.

Common error: Passing response.text instead of response.content to BeautifulSoup().

The content attribute of the response object holds the HTML data as raw bytes, while the text attribute holds a string that requests has already decoded using its own guess of the character encoding. Passing the bytes lets Beautiful Soup detect the encoding itself, for example from the page's <meta charset> tag. To avoid character encoding issues, prefer response.content to response.text with BeautifulSoup().

Keep in mind that websites present data in many formats. Individual elements, lists, and tables are just a few examples. If you want your Python scraper to be effective, you need to know how to use Beautiful Soup in many scenarios. Let's see how to solve the most common challenges!
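The difference is easy to reproduce offline. In this sketch, raw_bytes is a hand-written UTF-8 page (hypothetical, not ScrapeMe's markup) standing in for response.content. Decoding it as ISO-8859-1, which is what requests falls back to for text/* responses whose Content-Type header declares no charset, garbles the accented character, while passing the bytes lets Beautiful Soup pick up the <meta charset> declaration.

```python
from bs4 import BeautifulSoup

# raw bytes of a tiny UTF-8 page, as response.content would hold them
raw_bytes = (
    '<html><head><meta charset="utf-8"></head>'
    "<body><p>Café</p></body></html>"
).encode("utf-8")

# decoding with the wrong encoding garbles non-ASCII characters
wrong_text = raw_bytes.decode("iso-8859-1")
print(BeautifulSoup(wrong_text, "html.parser").p.get_text())  # CafÃ©

# passing bytes lets Beautiful Soup honor the <meta charset> declaration
soup = BeautifulSoup(raw_bytes, "html.parser")
print(soup.p.get_text())  # Café
```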

Extract Data From a Single Element

Beautiful Soup offers several ways to select HTML elements from the DOM, and the id attribute is the most effective way to select a single element. As its name suggests, the id should uniquely identify an HTML node on the page.

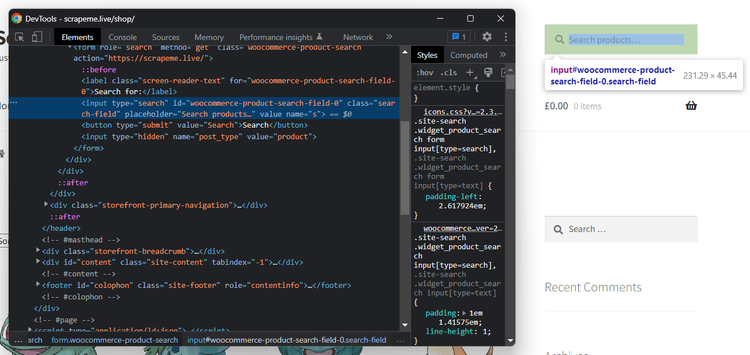

Identify the input element to search for products on ScrapeMe. Right-click and inspect it with the DevTools:

As you can see, the <input> element has the following id:

woocommerce-product-search-field-0

You can use this information to select the product search element:

```python
product_search_element = soup.find(id="woocommerce-product-search-field-0")
```

The find() function returns the first HTML element in the DOM that matches the given criteria.

Keep in mind that id is an optional attribute. That's why there are also other approaches to selecting elements:

- By tag: pass the tag name to find():

```python
# get the first <h1> element
# on the page
h1_element = soup.find("h1")
```

- By class: through the class_ parameter of find():

```python
# find the first element on the page
# with the "search-field" class
search_input_element = soup.find(class_="search-field")
```

- By attribute: through the attrs parameter of find():

```python
# find the first element on the page
# with the name="s" HTML attribute
search_input_element = soup.find(attrs={"name": "s"})
```

You can also retrieve HTML nodes by CSS selector with select() and select_one():

```python
# find the first element identified
# by the "input.search-field" CSS selector
search_input_element = soup.select_one("input.search-field")
```

To extract the text contained in an HTML element, use get_text():

```python
site_title = soup.select_one(".beta.site-title").get_text()

print(site_title)
```

This snippet would print:

```
ScrapeMe
```

Common error: Not checking for None.

When find() and select_one() don't manage to find the desired element, they return None. Since pages change over time, you should always perform a non-None check as follows:

```python
product_search_element = soup.find(id="woocommerce-product-search-field-0")

# making sure product_search_element is present on the page
# before trying to access its data
if product_search_element is not None:
    placeholder_string = product_search_element["placeholder"]
```

That simple if check can save you from silly errors like this:

```
TypeError: 'NoneType' object is not subscriptable
```
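To try these selection methods without downloading anything, you can parse a small HTML string. The markup below is hypothetical, loosely modeled on a WooCommerce search form rather than copied from ScrapeMe, so its class and attribute values are assumptions.

```python
from bs4 import BeautifulSoup

# hand-written markup (hypothetical, not copied from ScrapeMe)
html = """
<h1>Products</h1>
<form role="search">
  <input type="search" id="woocommerce-product-search-field-0"
         class="search-field" placeholder="Search products" name="s">
</form>
"""

soup = BeautifulSoup(html, "html.parser")

by_id = soup.find(id="woocommerce-product-search-field-0")
by_tag = soup.find("h1")
by_class = soup.find(class_="search-field")
by_attr = soup.find(attrs={"name": "s"})
by_css = soup.select_one("input.search-field")

# the four <input> queries return the very same element object
print(by_id is by_class is by_attr is by_css)  # True
print(by_tag.get_text())                       # Products

# a selector that matches nothing returns None instead of raising
missing = soup.select_one("input.does-not-exist")
placeholder = missing["placeholder"] if missing is not None else None
print(placeholder)                             # None
```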

Select Nested Elements

The DOM is a nested tree structure. Defining a selection strategy that gets the desired HTML elements in one step isn't always easy, which is why Beautiful Soup offers an alternative approach for searching nodes.

First, select the parent containing many nested nodes. Next, call the Beautiful Soup search functions directly on it. This limits the search to the descendants of the parent element.

Let's dig into this with an example!

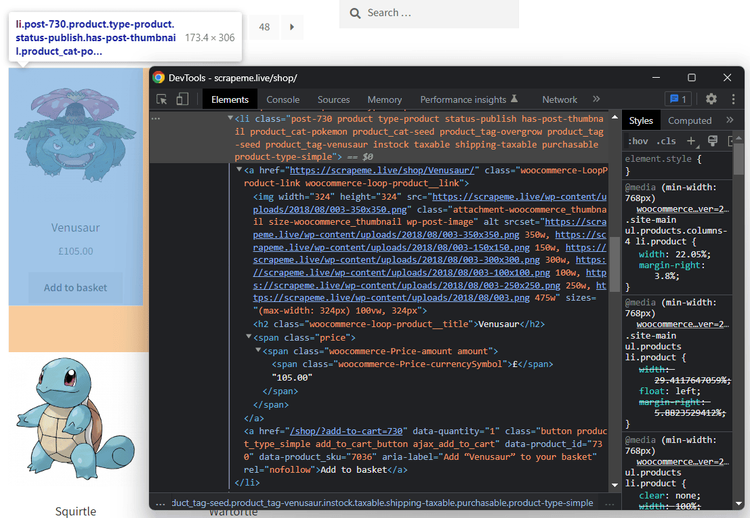

Assume you want to retrieve some data from the Venusaur product. By inspecting it, you'll see that its HTML elements don't have an ID. This means you can't select them by ID, and reaching them in one step would require a complex CSS selector.

Start by selecting the parent element:

```python
venusaur_element = soup.find(class_="post-730")
```

Then, apply the find() and select_one() functions on venusaur_element:

```python
# extracting data from the nested nodes
# inside the Venusaur product element
venusaur_image_url = venusaur_element.find("img")["src"]
venusaur_price = venusaur_element.select_one(".amount").get_text()

print(venusaur_image_url)
print(venusaur_price)
```

This will print:

```
https://scrapeme.live/wp-content/uploads/2018/08/003-350x350.png
£105.00
```

Great! You're now able to scrape data from nested elements with no effort!

📘 Exercise: Get the page URL and complete name related to the Wartortle product.

📘 Solution: Not too different from what you did before.

```python
wartortle_element = soup.find(class_="post-735")

wartortle_url = wartortle_element.find("a")["href"]
wartortle_name = wartortle_element.find("h2").get_text()
```

Look for Hidden Elements

HTML elements styled with the display: none or visibility: hidden CSS properties aren't visible. Since the browser doesn't show them on the page, they are also known as hidden elements.

Typically, these contain data to show only after a particular interaction. Also, they allow web developers to store information that shouldn't be visible to the end user. This information is often used to provide additional functionality to the page or for debugging.

They're still part of the DOM. Thus, you can select and scrape data from them as from all other HTML elements. Let's see an example:

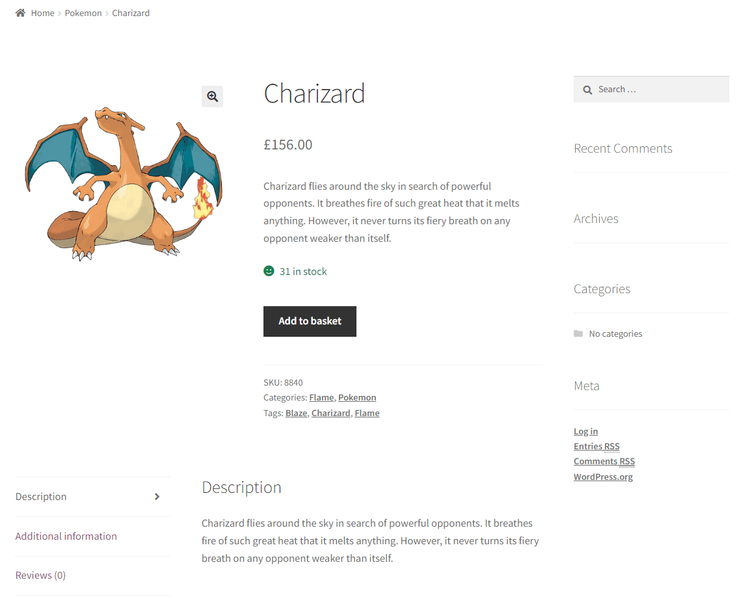

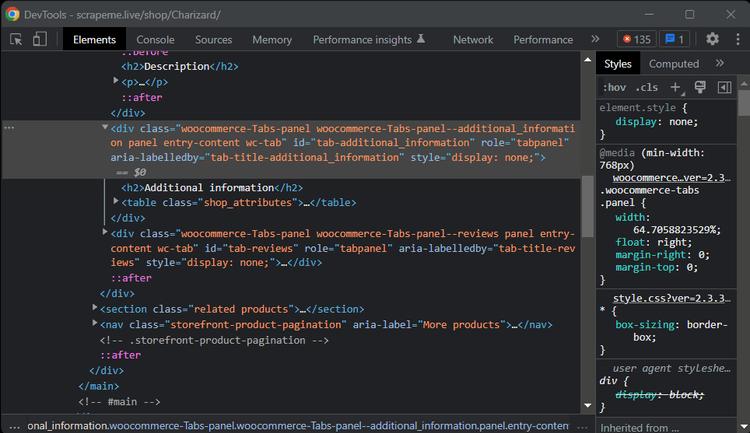

Explore the page of a particular product, such as Charizard:

```python
response = requests.get("https://scrapeme.live/shop/Charizard/")
soup = BeautifulSoup(response.content, "html.parser")
```

Take a look at the "Description" section, and you'll see that there are some clickable options on the left. Clicking on them activates elements on the page that weren't visible before:

Inspect the HTML page with the developer tools in this section. You'll notice that the "Additional Information" and "Reviews" parent <div>s are hidden with the display: none CSS property.

When you click on the corresponding entry in the left menu, JavaScript changes that style and makes the panel visible. But you don't have to wait for an element to appear to scrape data from it!

Select the "Additional Information" content <div> with:

```python
additional_info_div = soup.select_one(".woocommerce-Tabs-panel--additional_information")
```

Now, print the node to verify that Beautiful Soup found it. Additionally, run prettify() on the resulting node to get its HTML content in an easy-to-read format.

```python
print(additional_info_div.prettify())
```

This will return:

```html
<div aria-labelledby="tab-title-additional_information" class="woocommerce-Tabs-panel woocommerce-Tabs-panel--additional_information panel entry-content wc-tab" id="tab-additional_information" role="tabpanel">
 <h2>
  Additional information
 </h2>
 <table class="shop_attributes">
  <tr>
   <th>
    Weight
   </th>
   <td class="product_weight">
    199.5 kg
   </td>
  </tr>
  <tr>
   <th>
    Dimensions
   </th>
   <td class="product_dimensions">
    5 x 5 x 5 cm
   </td>
  </tr>
 </table>
</div>
```

That's precisely the HTML code contained in the "Additional Information" <div>. Since the result isn't None, Beautiful Soup was able to find it even though it's a hidden element.
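Beautiful Soup doesn't apply CSS, so visibility plays no role in what it can select. This sketch shows that with a hypothetical pair of tab panels, one of them hidden through an inline style attribute (the markup is illustrative, not ScrapeMe's).

```python
from bs4 import BeautifulSoup

# hypothetical markup: the second panel is hidden with an inline style
html = """
<div class="panel" id="tab-description">Visible description</div>
<div class="panel" id="tab-reviews" style="display: none;">Hidden reviews</div>
"""

soup = BeautifulSoup(html, "html.parser")

# the hidden panel is selected just like the visible one
panels = soup.select("div.panel")
print([panel.get_text() for panel in panels])
# ['Visible description', 'Hidden reviews']

hidden = soup.select_one("#tab-reviews")
print(hidden["style"])  # display: none;
```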

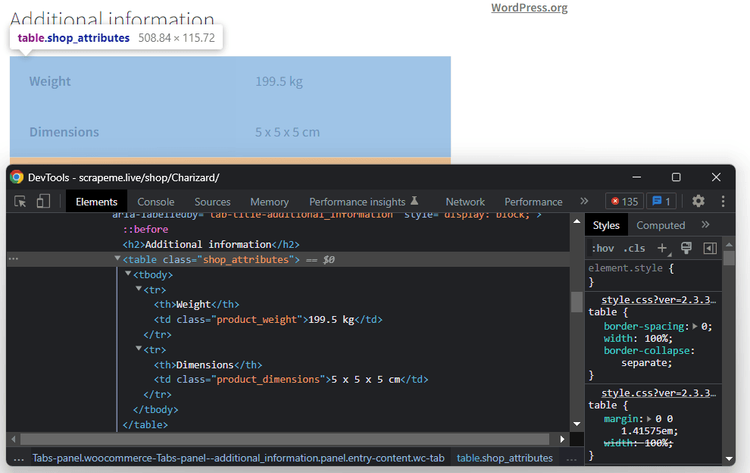

Get Data From a Table

Let's continue the example to learn how to scrape data from a <table> HTML element.

An HTML table is a structured set of data made of <tr> rows, which contain <th> header cells and <td> data cells. Retrieve all of its information:

```python
# get the table contained inside the
# "Additional Information" div
additional_info_table = additional_info_div.find("table")

# iterate over each row of the table
for row in additional_info_table.find_all("tr"):
    category_name = row.find("th").get_text()
    cell_value = row.find("td").get_text()
    print(category_name, cell_value)
```

Note the use of find_all(). That's a Beautiful Soup function to get the list of all HTML elements that match the selection query.

Run it, and you'll see:

```
Weight 199.5 kg
Dimensions 5 x 5 x 5 cm
```

That's all the content contained in the table!

That was an easy example, but you can simply expand the scraping logic above to larger and more complex scenarios.
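For instance, you could collect the table rows into a dictionary keyed by the header cell. This sketch parses the table markup from the prettify() output above as a string so it runs offline; get_text(strip=True) and skipping rows with a missing cell are design choices, not part of the original example.

```python
from bs4 import BeautifulSoup

# the "Additional information" table from the prettify() output above
html = """
<table class="shop_attributes">
 <tr>
  <th>Weight</th>
  <td class="product_weight">199.5 kg</td>
 </tr>
 <tr>
  <th>Dimensions</th>
  <td class="product_dimensions">5 x 5 x 5 cm</td>
 </tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")
table = soup.find("table")

attributes = {}
for row in table.find_all("tr"):
    header = row.find("th")
    value = row.find("td")
    # skip malformed rows that lack either cell
    if header is None or value is None:
        continue
    # strip=True removes surrounding whitespace and newlines
    attributes[header.get_text(strip=True)] = value.get_text(strip=True)

print(attributes)
# {'Weight': '199.5 kg', 'Dimensions': '5 x 5 x 5 cm'}
```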

📘 Exercise: Extract the information about all the episodes of SpongeBob's first season from its Wikipedia page:

https://en.wikipedia.org/wiki/List_of_SpongeBob_SquarePants_episodes

📘 Solution: There are several ways to solve this exercise. This is one of the many possible solutions:

```python
import requests
from bs4 import BeautifulSoup

response = requests.get("https://en.wikipedia.org/wiki/List_of_SpongeBob_SquarePants_episodes")
soup = BeautifulSoup(response.content, "html.parser")

episode_table = soup.select_one(".wikitable.plainrowheaders.wikiepisodetable")

# skip the header row
for row in episode_table.find_all("tr")[1:]:
    # to store cell values
    cell_values = []
    # get all row cells
    cells = row.find_all("td")
    # iterate over the list of cells in
    # the current row
    for cell in cells:
        # extract the cell content
        cell_values.append(cell.get_text())
    print("; ".join(cell_values))
```

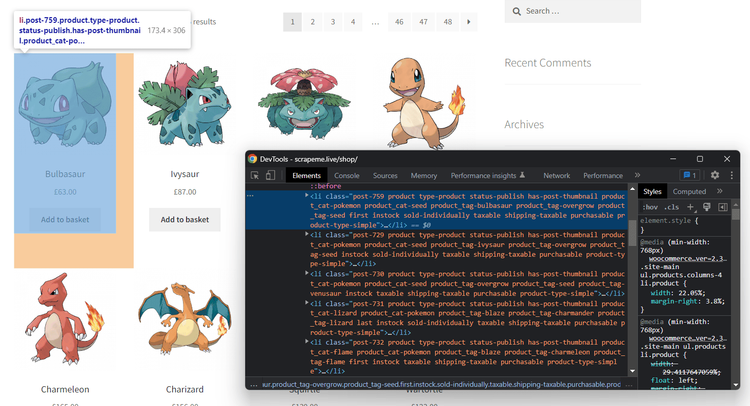

Scrape a List of Elements

Web pages often contain lists of elements, like the product list of an e-commerce site. Collecting that data by hand would take a lot of time, but this is where Beautiful Soup comes into play!

Head back to https://scrapeme.live/shop/:

```python
response = requests.get("https://scrapeme.live/shop/")
soup = BeautifulSoup(response.content, "html.parser")
```

That contains a list of Pokemon-inspired products in <li> elements:

Note that all <li> nodes have the product class. Select them all:

```python
product_elements = soup.select("li.product")
```

Iterate over them to extract all product data as follows:

```python
for product_element in product_elements:
    name = product_element.find("h2").get_text()
    url = product_element.find("a")["href"]
    image = product_element.find("img")["src"]
    price = product_element.select_one(".amount").get_text()
    print(name, url, image, price)
```

When dealing with a list of elements, you should store the scraped data in a list of dictionaries. A Python dictionary is a collection of key-value pairs (since Python 3.7, it preserves the insertion order of its keys), and you can use it as below:

```python
# the list of dictionaries containing the
# scraped data
pokemon_products = []

for product_element in product_elements:
    name = product_element.find("h2").get_text()
    url = product_element.find("a")["href"]
    image = product_element.find("img")["src"]
    price = product_element.select_one(".amount").get_text()

    # define a dictionary with the scraped data
    new_pokemon_product = {
        "name": name,
        "url": url,
        "image": image,
        "price": price
    }

    # add the new product dictionary to the list
    pokemon_products.append(new_pokemon_product)
```

pokemon_products now contains one dictionary with the scraped data for each product on the page.
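The prices in pokemon_products are strings such as "£63.00", which is fine for export but awkward for sorting or arithmetic. A minimal sketch, assuming every price is a single number with an optional leading currency symbol and comma thousands separators, converts them to Decimal (which avoids float rounding on money values). The parse_price helper and the price_value key are hypothetical additions, not part of the scraper built above.

```python
import re
from decimal import Decimal


def parse_price(price_text: str) -> Decimal:
    """Hypothetical helper: turn a scraped price like "£63.00" into a Decimal.

    Assumes the text contains one number, optionally preceded by a currency
    symbol and using commas as thousands separators.
    """
    # keep only digits and the decimal point, dropping "£" and ","
    digits = re.sub(r"[^\d.]", "", price_text)
    if not digits:
        raise ValueError(f"no price found in {price_text!r}")
    return Decimal(digits)


# sample data shaped like the pokemon_products entries
pokemon_products = [
    {"name": "Bulbasaur", "price": "£63.00"},
    {"name": "Ivysaur", "price": "£87.00"},
]

# add a numeric copy of the price next to the original string
for product in pokemon_products:
    product["price_value"] = parse_price(product["price"])

cheapest = min(pokemon_products, key=lambda p: p["price_value"])
print(cheapest["name"], cheapest["price_value"])  # Bulbasaur 63.00
```

Keeping the original string and adding a separate numeric field means the exported files still show prices exactly as they appeared on the page.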

Wow! You now have all the building blocks required to build a data scraper in Python with Beautiful Soup. But keep going; the tutorial isn't over yet!

Step 4: Export the Scraped Data

Retrieving web content is usually the first step in a larger process, and the scraped information then serves many different purposes. That's why it's critical to convert it into a format that makes it easy to read and explore, such as CSV or JSON.

You have the product info in the pokemon_products list presented earlier. Now, find out how to convert it to a new format and export it to a file in Python!

Export to CSV

CSV is a popular format for data exchange, storage, and analysis, especially when it comes to large datasets. A CSV file stores the information in a tabular form, with values separated by commas. This makes it compatible with spreadsheet programs, such as Microsoft Excel.

Convert a list of dictionaries to CSV in Python:

import csv

# scraping logic...

# create the "products.csv" file
csv_file = open('products.csv', 'w', encoding='utf-8', newline='')

# initialize a writer object for CSV data
writer = csv.writer(csv_file)

# convert each element of pokemon_products
# to CSV and add it to the output file
for pokemon_product in pokemon_products:
    writer.writerow(pokemon_product.values())

# release the file resources
csv_file.close()

This snippet exports the scraped data in pokemon_products to a products.csv file. It creates the file with open() and then iterates over each product, passing its dictionary values to writerow(). That writes the values as one comma-separated row of the output file, in the order the keys were inserted (name, url, image, price).

Common error: Looking for an external dependency to deal with CSV files in Python.

The csv module provides classes and functions to work with CSV files conveniently, and it's part of the Python Standard Library. So, you can import and use it without installing any extra dependencies.

Launch the Python spider:

python scraper.py

In the root folder of your project, you'll see a products.csv file containing the following:

Bulbasaur,https://scrapeme.live/shop/Bulbasaur/,https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png,£63.00
Ivysaur,https://scrapeme.live/shop/Ivysaur/,https://scrapeme.live/wp-content/uploads/2018/08/002-350x350.png,£87.00
...
Beedrill,https://scrapeme.live/shop/Beedrill/,https://scrapeme.live/wp-content/uploads/2018/08/015-350x350.png,£168.00
Pidgey,https://scrapeme.live/shop/Pidgey/,https://scrapeme.live/wp-content/uploads/2018/08/016-350x350.png,£159.00

Fantastic! That's CSV data!
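The file above has no header row, and its column order simply follows each dictionary's key order. A possible variant uses csv.DictWriter, also part of the standard library, which writes a header from an explicit list of field names and looks up each value by key. The write_products_csv function name is illustrative. As with csv.writer, values containing commas are quoted automatically, so they stay in a single column.

```python
import csv
import io

# column order for the output file
FIELDNAMES = ["name", "url", "image", "price"]


def write_products_csv(products, file_obj):
    """Write product dictionaries as CSV rows, preceded by a header row.

    By default, DictWriter raises ValueError if a dictionary has keys
    that are not listed in FIELDNAMES.
    """
    writer = csv.DictWriter(file_obj, fieldnames=FIELDNAMES)
    writer.writeheader()  # writes: name,url,image,price
    writer.writerows(products)


# an in-memory buffer shows the output; for a real file, use
# open("products.csv", "w", encoding="utf-8", newline="") in a with block
buffer = io.StringIO()
sample_products = [
    {
        "name": "Bulbasaur",
        "url": "https://scrapeme.live/shop/Bulbasaur/",
        "image": "https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png",
        "price": "£63.00",
    },
]
write_products_csv(sample_products, buffer)
print(buffer.getvalue())
```

Passing pokemon_products instead of sample_products would produce the same rows as before, with a header line on top that spreadsheet programs can use as column titles.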

Export to JSON

JSON is a lightweight, versatile data interchange format that's especially popular in web applications. It's generally used to transfer information between servers, or between clients and servers through APIs. Many programming languages support it, and you can also import it into Excel.

Export a list of dictionaries to JSON in Python with this code:

import json

# scraping logic...

# create the "products.json" file
json_file = open('products.json', 'w', encoding='utf-8')

# convert pokemon_products to JSON and write it into the output file,
# keeping characters like "£" as-is and indenting for readability
json.dump(pokemon_products, json_file, ensure_ascii=False, indent=4)

# release the file resources
json_file.close()

The export logic above revolves around the json.dump() function. It comes from the json module of the Python Standard Library and serializes a Python object into a JSON-formatted file.

json.dump() takes two required arguments:

- The Python object to convert to JSON format.

- A file object, created with open(), to write the JSON data to.
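One detail matters for the scraped prices: by default, json.dump() and json.dumps() escape every non-ASCII character, so the £ sign is written as \u00a3. The result is still valid JSON, just harder to read. Passing ensure_ascii=False keeps the character as-is (the output file then needs a UTF-8 encoding), and indent pretty-prints the result. The quick comparison below uses json.dumps(), which returns a string instead of writing to a file; the product dictionary is sample data.

```python
import json

product = {"name": "Bulbasaur", "price": "£63.00"}

# default behavior: non-ASCII characters are escaped
escaped = json.dumps(product)
print(escaped)  # {"name": "Bulbasaur", "price": "\u00a363.00"}

# ensure_ascii=False keeps "£" as a literal character
readable = json.dumps(product, ensure_ascii=False)
print(readable)  # {"name": "Bulbasaur", "price": "£63.00"}

# both strings decode back to the same Python data
assert json.loads(escaped) == json.loads(readable) == product

# indent=4 spreads the object over several indented lines
print(json.dumps(product, ensure_ascii=False, indent=4))
```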

Launch the scraping script:

python scraper.py

A products.json file will appear in the project folder. Open it, and you'll see this:

[
    {
        "name": "Bulbasaur",
        "url": "https://scrapeme.live/shop/Bulbasaur/",
        "image": "https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png",
        "price": "£63.00"
    },
    {
        "name": "Ivysaur",
        "url": "https://scrapeme.live/shop/Ivysaur/",
        "image": "https://scrapeme.live/wp-content/uploads/2018/08/002-350x350.png",
        "price": "£87.00"
    },
    ...
    {
        "name": "Beedrill",
        "url": "https://scrapeme.live/shop/Beedrill/",
        "image": "https://scrapeme.live/wp-content/uploads/2018/08/015-350x350.png",
        "price": "£168.00"
    },
    {
        "name": "Pidgey",
        "url": "https://scrapeme.live/shop/Pidgey/",
        "image": "https://scrapeme.live/wp-content/uploads/2018/08/016-350x350.png",
        "price": "£159.00"
    }
]

Great! That's JSON data!
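To reuse the exported data later, for example in a separate analysis script, json.load() parses the file back into the same list of dictionaries. The sketch below assumes the file is written in UTF-8; save_products and load_products are illustrative names. It also uses with blocks, a common alternative to calling close() manually, since they close the file even if an error occurs. The round trip runs in a temporary folder so the example leaves no files behind.

```python
import json
import tempfile
from pathlib import Path


def save_products(products, path):
    """Write products as pretty-printed UTF-8 JSON."""
    with open(path, "w", encoding="utf-8") as json_file:
        json.dump(products, json_file, ensure_ascii=False, indent=4)


def load_products(path):
    """Read a JSON export back into a list of product dictionaries."""
    with open(path, encoding="utf-8") as json_file:
        return json.load(json_file)


# sample data shaped like the pokemon_products entries
products = [{"name": "Bulbasaur", "price": "£63.00"}]

with tempfile.TemporaryDirectory() as folder:
    path = Path(folder) / "products.json"
    save_products(products, path)
    loaded = load_products(path)

print(loaded == products)  # True
```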

Next Steps

This Python web scraping tutorial ends here, but that doesn't mean that your learning does too! Check out the following collection of useful resources to learn more, improve your skills, and challenge yourself.

More Exercises to Improve Your Skills

Here's a list of exercises that will help you improve your data-scraping skills in Python:

- Crawl the entire website: Expand the spider built here to crawl the entire website and retrieve all product data. It should start from the first page of the paginated list of products. Follow our guide on web crawling in Python.

- Implement parallel scraping: Update the previous script to scrape data from many pages simultaneously. Use the concurrent.futures module or any other parallel processing library. Take a look at our tutorial on parallel scraping in Python.

- Write the scraped data to a database: Expand the Python script to write the info stored in pokemon_products to a database. Use any database management system of your choice, such as MySQL or PostgreSQL. Make sure to keep the information structured to support future retrieval.
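For the crawling and parallel-scraping exercises, a starting point could look like the sketch below. It assumes the shop's paginated list follows the WooCommerce-style pattern https://scrapeme.live/shop/page/N/ (check this in your browser first), and it takes the per-page scraping function as a parameter: fake_scrape_page is a hypothetical stand-in for the Requests and Beautiful Soup logic built in this tutorial, so the sketch runs offline. ThreadPoolExecutor from concurrent.futures fits here because scraping spends most of its time waiting on the network. Keep max_workers low so you don't overload the target server.

```python
from concurrent.futures import ThreadPoolExecutor


def build_page_urls(last_page):
    """Assumed URL pattern: page 1 is /shop/, later pages are /shop/page/N/."""
    urls = ["https://scrapeme.live/shop/"]
    urls += [f"https://scrapeme.live/shop/page/{n}/" for n in range(2, last_page + 1)]
    return urls


def scrape_all(urls, scrape_page, max_workers=4):
    """Call scrape_page(url) on several threads and merge the product lists.

    scrape_page must return a list of product dictionaries.
    executor.map() yields results in the same order as urls.
    """
    products = []
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        for page_products in executor.map(scrape_page, urls):
            products.extend(page_products)
    return products


def fake_scrape_page(url):
    """Hypothetical stand-in: replace with real requests + Beautiful Soup code."""
    return [{"url": url}]


all_products = scrape_all(build_page_urls(3), fake_scrape_page)
print(len(all_products))  # 3
```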

Best Courses

Online courses are a very effective way to learn new things, and many of them award a certificate of completion you can add to your resume. These are two of the most recommended Python web scraping courses currently available:

- Web Scraping and API Fundamentals in Python: This covers the basics of web scraping and APIs in Python. It'll teach you how to extract content from websites and APIs using the popular Requests library and how to parse HTML and JSON data.

- Web Scraping In Python: Master The Fundamentals: This course addresses the basics of web scraping in Python. It explains how to extract data using the Beautiful Soup and Requests libraries and how to parse HTML and XML data.

Other Useful Resources

Here's a list of follow-up tutorials you should read to become an expert in Python web scraping:

- 5 Best Python Web Scraping Libraries in 2023: An overview of the most popular Python libraries for web scraping, including BeautifulSoup, Scrapy, and Selenium.

- How to Scrape JavaScript Rendered Web Pages with Python: A guide on how to extract data from web pages that use JavaScript to load content, including a comparison of the different methods and tools available.

- Stealth Web Scraping in Python: Avoid Blocking like a Ninja: A guide on how to avoid being detected or blocked while scraping, including tips on rotating IP addresses, using HTTP headers and cookies, and overcoming CAPTCHAs.

- How to Rotate Proxies in Python: An in-depth, step-by-step guide on how to use multiple proxy IP addresses to avoid being detected or blocked.

- How to Bypass Cloudflare in Python: A guide on how to access web pages protected by Cloudflare, a popular security and performance optimization service, using Python and various scraping techniques.

Conclusion

This step-by-step tutorial covered everything you need to know to get started on web scraping in Python. First, we introduced you to the terminology. Then, we walked through the most popular Python data scraping concepts together.

You now know:

- What web scraping is and when it's useful.

- The basics of scraping in Python with Beautiful Soup and Requests.

- How to extract data with Beautiful Soup from single nodes, lists, tables, and more.

- How to export the scraped data to several formats, such as JSON and CSV.

- How to address the most common challenges when it comes to data scraping using Python.

The hardest challenge you'll face is being blocked by anti-bot systems. Bypassing them all means finding several workarounds, which is complex and cumbersome. But you can avoid them by using a Python web scraping API like ZenRows. With it, you run your scraping through API requests and no longer have to deal with anti-bot measures yourself.

Frequently Asked Questions

Is Python Best for Web Scraping?

Python is considered one of the best programming languages for web scraping. That's due to its simplicity, readability, wide range of libraries and tools designed for the purpose, and large community. It's not the only option, but its characteristics probably make it the best choice for most developers.

Its versatility also makes it an excellent choice for those looking to expand their skills beyond scraping, since the same language is used for many other purposes, including data analysis and machine learning.

Can You Use Python for Data Scraping?

Yes, you can! Python is one of the most popular languages when it comes to retrieving data. That's thanks to its versatility, ease of use, and extensive library support. There are several tools for sending HTTP requests, parsing HTML, and extracting information. This makes Python the perfect solution for data-scraping projects.

How Do You Use Python for Web Scraping?

You can scrape data from a website in Python, as you can in any other programming language. That gets easier if you take advantage of one of the many web scraping libraries available in Python. Use them to connect to the target website, select HTML elements from its pages, and extract data from them.
