Scrapy allows you to scrape paginated websites easily. Wondering how to implement that?

This article shows you how to use Python's Scrapy to scrape paginated websites, whether they use a navigation bar, infinite scrolling, or a "Load More" button.

When You Have a Navigation Page Bar

Page navigation bars are among the most common and simplest forms of pagination.

There are two common ways to scrape websites with navigation bars in Scrapy: follow the next page link or change the page number in the URL.

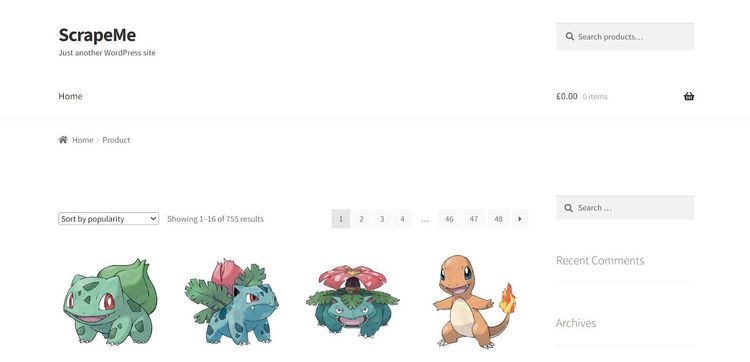

Let's see how each works with examples where Scrapy retrieves the names and prices of products across all pages on ScrapeMe, a demo website with a page navigation bar.

ScrapeMe's product catalog spans 48 pages, and you're about to scrape all of them with Scrapy. Let's get started with each method.

Use the Next Page Link

With this method, Scrapy scrapes the current page, then follows the "next page" link if one exists. We'll do this with Scrapy's response.follow method, which accepts a relative or absolute URL and returns a new Request for it.

Let's set up our spider request with a callback:

```python
# import the required modules
from scrapy.spiders import Spider
from scrapy import Request


class MySpider(Spider):
    # specify the spider name
    name = 'product_scraper'
    start_urls = ['https://scrapeme.live/shop/']

    def start_requests(self):
        # start with the initial page
        for url in self.start_urls:
            yield Request(url=url, callback=self.parse)

    # ...
```

All the products are list items (li.product) inside an unordered list (ul.products). The products variable selects each product from that parent ul element.

The following parse function loops through the products and retrieves each product's name and price. It then appends each result to the data list and logs the list.

```python
class MySpider(Spider):
    # ...

    # parse HTML page as response
    def parse(self, response):
        # extract text content from the ul element
        products = response.css('ul.products li.product')

        # declare an empty array to collect data
        data = []

        for product in products:
            # get children element from the ul
            product_name = product.css('h2.woocommerce-loop-product__title::text').get()
            price = product.css('span.woocommerce-Price-amount::text').get()

            # append the scraped data into the empty data array
            data.append({
                'product_name': product_name,
                'price': price,
            })

        self.log(data)

    # ...
```

Running the spider logs the content of the first page only, because the code doesn't follow the other pages yet:

```
[{'product_name': 'Bulbasaur', 'price': '63.00'}, {'product_name': 'Ivysaur', 'price': '87.00'}, {'product_name': 'Venusaur', 'price': '105.00'}, {'product_name': 'Charmander', 'price': '48.00'}, {'product_name': 'Charmeleon', 'price': '165.00'}, {'product_name': 'Charizard', 'price': '156.00'}, {'product_name': 'Squirtle', 'price': '130.00'}, ...]
```

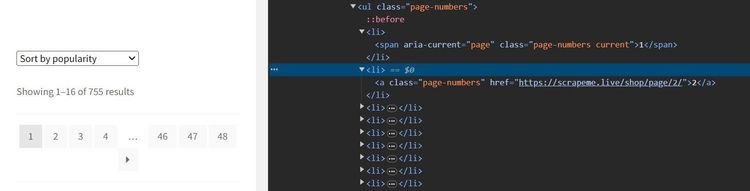

Next, let's write the logic for retrieving content from all the pages. Inspecting the pagination bar in your browser's developer tools shows that it's an unordered list (ul.page-numbers) of page links. That list includes an a.next link to the following page.

The code below gets each successive page from that ul by reading the href attribute of a.next.

If the next href exists, Scrapy visits that page using response.follow and scrapes its content. Otherwise, no new request is scheduled, and the crawl ends after the last page.

Extend the previous code with the following:

```python
def parse(self, response):
    # ...

    # follow the next page link
    next_page = response.css('ul.page-numbers li a.next::attr(href)').get()
    if next_page:
        yield response.follow(next_page, self.parse)
```

Let's get the code together:

```python
# import the required modules
from scrapy.spiders import Spider
from scrapy import Request


class MySpider(Spider):
    # specify the spider name
    name = 'product_scraper'
    start_urls = ['https://scrapeme.live/shop/']

    def start_requests(self):
        # start with the initial page
        for url in self.start_urls:
            yield Request(url=url, callback=self.parse)

    # parse HTML page as response
    def parse(self, response):
        # extract text content from the ul element
        products = response.css('ul.products li.product')

        # declare an empty array to collect data
        data = []

        for product in products:
            # get children element from the ul
            product_name = product.css('h2.woocommerce-loop-product__title::text').get()
            price = product.css('span.woocommerce-Price-amount::text').get()

            # append the scraped data into the empty data array
            data.append({
                'product_name': product_name,
                'price': price,
            })

        # follow the next page link
        next_page = response.css('ul.page-numbers li a.next::attr(href)').get()
        if next_page:
            yield response.follow(next_page, self.parse)

        self.log(data)
```

This crawls all available pages and outputs the product names and prices:

```
[{'product_name': 'Bulbasaur', 'price': '63.00'}, {'product_name': 'Ivysaur', 'price': '87.00'}, {'product_name': 'Venusaur', 'price': '105.00'},...]
[{'product_name': 'Pidgeotto', 'price': '84.00'}, {'product_name': 'Pidgeot', 'price': '185.00'}, {'product_name': 'Rattata', 'price': '128.00'},...]
[{'product_name': 'Clefairy', 'price': '160.00'}, {'product_name': 'Clefable', 'price': '188.00'}, {'product_name': 'Vulpix', 'price': '85.00'},...]
#... other products omitted for brevity
```

Congratulations! You just scraped content from every page on a paginated website.

Alternatively, you can take a more custom approach and change the page number in the URL.

Change the Page Number in the URL

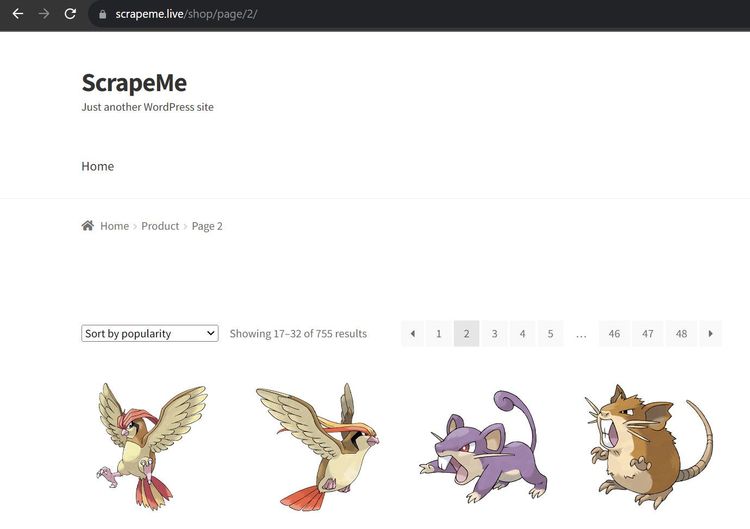

Paginated websites with navigation bars often include the current page number in the URL.

For example, the second page URL on ScrapeMe is https://scrapeme.live/shop/page/2/, and every other page follows the same /page/<PAGE_NUMBER>/ pattern.

The idea is to increment the page number in the URL and have Scrapy visit and scrape each resulting page.

Let's see how that works, starting with the spider setup and initial request below. The page_count attribute sets the initial page number to 1.

By default, Scrapy's HttpErrorMiddleware drops unsuccessful responses, such as a 404, before they reach your callback. Setting handle_httpstatus_list = [404] lets 404 responses through to parse. The spider can then detect when it has gone past the last available page and stop.

```python
# import the required modules
from scrapy.spiders import Spider
from scrapy import Request


class MySpider(Spider):
    # specify the spider name
    name = 'product_scraper'

    # specify the target URL
    start_urls = ['https://scrapeme.live/shop/']

    # handle HTTP 404 response
    handle_httpstatus_list = [404]

    # set the initial page count to 1
    page_count = 1

    def start_requests(self):
        # start with the initial page
        for url in self.start_urls:
            yield Request(url=url, callback=self.parse)
```

The code below parses each page, then requests the next one by incrementing the page number in the URL.

Specifically, it adds one to page_count on each request. It then builds the next URL from the first entry in start_urls (self.start_urls[0]), which is https://scrapeme.live/shop/.

The next page URL therefore has the format https://scrapeme.live/shop/page/<PAGE_NUMBER>/. Scrapy visits each incremented page number in order until it passes the last page.

At that point, the server returns a 404 error. Because of handle_httpstatus_list, that response reaches parse. The method logs it and returns without requesting another page, so the crawl ends.

```python
class MySpider(Spider):
    # ...

    # parse HTML page as response
    def parse(self, response):
        # get response status
        status = response.status

        # terminate the crawl when you exceed the available page numbers
        if status == 404:
            self.log(f'Ignoring 404 response for URL: {response.url}')
            return

        # extract text content from the ul element
        products = response.css('ul.products li.product')

        # declare an empty array to collect data
        data = []

        for product in products:
            # get children element from the ul
            product_name = product.css('h2.woocommerce-loop-product__title::text').get()
            price = product.css('span.woocommerce-Price-amount::text').get()

            # append the scraped data into the empty data array
            data.append({
                'product_name': product_name,
                'price': price,
            })

        self.page_count += 1
        next_page = f'{self.start_urls[0]}page/{self.page_count}/'
        yield Request(url=next_page, callback=self.parse)

        self.log(data)
```

Let's put the code in one piece:

```python
# import the required modules
from scrapy.spiders import Spider
from scrapy import Request


class MySpider(Spider):
    # specify the spider name
    name = 'product_scraper'

    # specify the target URL
    start_urls = ['https://scrapeme.live/shop/']

    # handle HTTP 404 response
    handle_httpstatus_list = [404]

    # set the initial page count to 1
    page_count = 1

    def start_requests(self):
        # start with the initial page
        for url in self.start_urls:
            yield Request(url=url, callback=self.parse)

    # parse HTML page as response
    def parse(self, response):
        # get response status
        status = response.status

        # terminate the crawl when you exceed the available page numbers
        if status == 404:
            self.log(f'Ignoring 404 response for URL: {response.url}')
            return

        # extract text content from the ul element
        products = response.css('ul.products li.product')

        # declare an empty array to collect data
        data = []

        for product in products:
            # get children element from the ul
            product_name = product.css('h2.woocommerce-loop-product__title::text').get()
            price = product.css('span.woocommerce-Price-amount::text').get()

            # append the scraped data into the empty data array
            data.append({
                'product_name': product_name,
                'price': price,
            })

        self.page_count += 1
        next_page = f'{self.start_urls[0]}page/{self.page_count}/'
        yield Request(url=next_page, callback=self.parse)

        self.log(data)
```

This outputs the product names and prices for all available pages, as shown:

```
[{'product_name': 'Bulbasaur', 'price': '63.00'}, {'product_name': 'Ivysaur', 'price': '87.00'}, {'product_name': 'Venusaur', 'price': '105.00'},...]
[{'product_name': 'Pidgeotto', 'price': '84.00'}, {'product_name': 'Pidgeot', 'price': '185.00'}, {'product_name': 'Rattata', 'price': '128.00'},...]
[{'product_name': 'Clefairy', 'price': '160.00'}, {'product_name': 'Clefable', 'price': '188.00'}, {'product_name': 'Vulpix', 'price': '85.00'},...]
#... other products omitted for brevity
```

Nice! Your custom Scrapy code for scraping paginated content works.

But many modern websites use JavaScript to load content dynamically, for example as you scroll. Let's handle that in the next section.

When JavaScript-Based Pagination is Required

Websites that use JavaScript for pagination may load more content with infinite scrolling or require you to click a button to load more. In these cases, Scrapy needs a headless browser to render the JavaScript.

Let's consider each scenario with examples that use Splash, a headless browser with an HTTP API, through the scrapy-splash plugin. The infinite scroll example scrapes product images, names, prices, and links from ScrapingClub, a demo website that uses infinite scrolling. The "Load More" example scrapes Skechers.

The ScrapingClub demo page loads more products dynamically as you scroll down.

First, install scrapy-splash using pip:

```bash
pip install scrapy-splash
```
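Installing the package isn't enough on its own. scrapy-splash sends requests to a running Splash service, commonly started with `docker run -p 8050:8050 scrapinghub/splash`, and it needs a few project settings. The values below follow the configuration documented in the scrapy-splash README. Adjust SPLASH_URL if your Splash instance runs somewhere other than localhost:8050.

```python
# settings.py: scrapy-splash configuration (as documented in the scrapy-splash README)

# address of the running Splash service
SPLASH_URL = 'http://localhost:8050'

DOWNLOADER_MIDDLEWARES = {
    'scrapy_splash.SplashCookiesMiddleware': 723,
    'scrapy_splash.SplashMiddleware': 725,
    'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 810,
}

SPIDER_MIDDLEWARES = {
    'scrapy_splash.SplashDeduplicateArgsMiddleware': 100,
}

# make duplicate filtering and HTTP caching aware of Splash arguments
DUPEFILTER_CLASS = 'scrapy_splash.SplashAwareDupeFilter'
HTTPCACHE_STORAGE = 'scrapy_splash.SplashAwareFSCacheStorage'
```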

Now, let’s scrape this website!

Infinite Scroll to Load More Content

Infinite scrolling is common on social media and e-commerce websites. One way to handle it in Scrapy is to let Splash render the JavaScript: Splash loads the page, scrolls it, and returns the rendered HTML to Scrapy.

The following code demonstrates how to use Scrapy Splash to access and scrape data rendered by infinite scrolling.

The lua_script tells Splash how to interact with the web page. It sets how many times to scroll and pauses after each scroll so more items can load.

SplashRequest accepts a URL, a callback, a Splash endpoint, and an args dictionary. To run a Lua script, use Splash's execute endpoint and pass the script in args as lua_source.

```python
# import the required libraries
import scrapy
from scrapy_splash import SplashRequest

lua_script = """
function main(splash, args)
    splash:go(args.url)

    local num_scrolls = 8
    local wait_after_scroll = 1.0

    local scroll_to = splash:jsfunc('window.scrollTo')
    local get_body_height = splash:jsfunc(
        'function() { return document.body.scrollHeight; }'
    )

    -- scroll to the end 'num_scrolls' times
    for _ = 1, num_scrolls do
        scroll_to(0, get_body_height())
        splash:wait(wait_after_scroll)
    end

    return splash:html()
end
"""


class ScrapingClubSpider(scrapy.Spider):
    name = 'scraping_club'
    start_urls = ['https://scrapingclub.com/exercise/list_infinite_scroll/']

    def start_requests(self):
        for url in self.start_urls:
            # Lua scripts run on Splash's 'execute' endpoint
            yield SplashRequest(url, callback=self.parse, endpoint='execute', args={'lua_source': lua_script})

    def parse(self, response):
        # iterate over the product elements
        for product in response.css('.post'):
            url = product.css('a').attrib['href']
            image = product.css('.card-img-top').attrib['src']
            name = product.css('h4 a::text').get()
            price = product.css('h5::text').get()

            # yield the scraped product data as an item
            yield {
                'url': url,
                'image': image,
                'name': name,
                'price': price,
            }
```

This scrapes the desired content successfully, as shown:

```
{'url': '/exercise/list_basic_detail/90008-E/', 'image': '/static/img/90008-E.jpg', 'name': 'Short Dress', 'price': '$24.99'}
{'url': '/exercise/list_basic_detail/96436-A/', 'image': '/static/img/96436-A.jpg', 'name': 'Patterned Slacks', 'price': '$29.99'}
{'url': '/exercise/list_basic_detail/93926-B/', 'image': '/static/img/93926-B.jpg', 'name': 'Short Chiffon Dress', 'price': '$49.99'}
{'url': '/exercise/list_basic_detail/90882-B/', 'image': '/static/img/90882-B.jpg', 'name': 'Off-the-shoulder Dress', 'price': '$59.99'}
#... other products omitted for brevity
```

The code works!

But what if the pagination style requires a user to click a "Load More" button to view more content? Let's see how to tackle that in the following section.

Click on a Button to Load More Content

A "Load More" button is another pagination style you might encounter while scraping paginated websites with Scrapy. With this style, Splash has to click the button to get more content.

In this example, we'll use Splash with Scrapy to retrieve product information from Skechers. The site uses a "Load More" button to show more products dynamically.

The code below obtains the product names and prices from the target website. The lua_script does most of the work: it tells Splash how to scroll the page and when to click.

num_scrolls in the lua_script determines how many times Splash scrolls the page. After each scroll, the script checks whether the "Load More" button exists and is visible, and clicks it if so.

Paste the lua_script in your spider file, as shown:

```python
# importing necessary libraries
import scrapy
from scrapy_splash import SplashRequest

lua_script = """
function main(splash, args)
    -- pass the Scrapy request headers (including USER_AGENT) on to the page request
    splash:go{args.url, headers=args.headers}

    local num_scrolls = 10
    local wait_after_scroll = 1.0
    local wait_after_click = 5.0

    local scroll_to = splash:jsfunc('window.scrollTo')
    local get_body_height = splash:jsfunc(
        'function() { return document.body.scrollHeight; }'
    )

    -- return true if the 'Load More' button (second button.btn.btn-primary) is visible
    local load_more_visible = splash:jsfunc([[
        function() {
            var button = document.querySelectorAll('button.btn.btn-primary')[1];
            return !!(button && button.offsetHeight > 0);
        }
    ]])

    -- click the 'Load More' button inside the page
    local click_load_more = splash:jsfunc([[
        function() {
            document.querySelectorAll('button.btn.btn-primary')[1].click();
        }
    ]])

    -- scroll to the end and click 'Load More' for 'num_scrolls' times
    for _ = 1, num_scrolls do
        scroll_to(0, get_body_height())
        splash:wait(wait_after_scroll)

        if load_more_visible() then
            click_load_more()
            splash:wait(wait_after_click)
        end
    end

    return splash:html()
end
"""

# ...
```

Next, write your scraper class and pass the lua_script to SplashRequest.

```python
# ...

class SkechersSpider(scrapy.Spider):
    name = 'crutch'
    start_urls = ['https://www.skechers.com/men/shoes/']

    custom_settings = {
        'USER_AGENT': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    }

    def start_requests(self):
        for url in self.start_urls:
            # Lua scripts run on Splash's 'execute' endpoint
            yield SplashRequest(url, callback=self.parse, endpoint='execute', args={'lua_source': lua_script})

    def parse(self, response):
        # each product tile sits in a div.col-6 inside the product grid
        products = response.css('div.product-grid div.col-6')
        for product in products:
            item = {
                'name': product.css('a.link.c-product-tile__title::text').get(),
                'price': product.css('span.value::text').get(),
            }
            yield item
```

We've added a user agent to this spider to mimic a real browser and increase the success rate of the scraper.

Here's the code combined:

```python
# importing necessary libraries
import scrapy
from scrapy_splash import SplashRequest

lua_script = """
function main(splash, args)
    -- pass the Scrapy request headers (including USER_AGENT) on to the page request
    splash:go{args.url, headers=args.headers}

    local num_scrolls = 10
    local wait_after_scroll = 1.0
    local wait_after_click = 5.0

    local scroll_to = splash:jsfunc('window.scrollTo')
    local get_body_height = splash:jsfunc(
        'function() { return document.body.scrollHeight; }'
    )

    -- return true if the 'Load More' button (second button.btn.btn-primary) is visible
    local load_more_visible = splash:jsfunc([[
        function() {
            var button = document.querySelectorAll('button.btn.btn-primary')[1];
            return !!(button && button.offsetHeight > 0);
        }
    ]])

    -- click the 'Load More' button inside the page
    local click_load_more = splash:jsfunc([[
        function() {
            document.querySelectorAll('button.btn.btn-primary')[1].click();
        }
    ]])

    -- scroll to the end and click 'Load More' for 'num_scrolls' times
    for _ = 1, num_scrolls do
        scroll_to(0, get_body_height())
        splash:wait(wait_after_scroll)

        if load_more_visible() then
            click_load_more()
            splash:wait(wait_after_click)
        end
    end

    return splash:html()
end
"""


class SkechersSpider(scrapy.Spider):
    name = 'crutch'
    start_urls = ['https://www.skechers.com/men/shoes/']

    custom_settings = {
        'USER_AGENT': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    }

    def start_requests(self):
        for url in self.start_urls:
            # Lua scripts run on Splash's 'execute' endpoint
            yield SplashRequest(url, callback=self.parse, endpoint='execute', args={'lua_source': lua_script})

    def parse(self, response):
        # each product tile sits in a div.col-6 inside the product grid
        products = response.css('div.product-grid div.col-6')
        for product in products:
            item = {
                'name': product.css('a.link.c-product-tile__title::text').get(),
                'price': product.css('span.value::text').get(),
            }
            yield item
```

The code outputs the product names and prices of the items on that page:

```
{'name': 'Skechers Slip-ins: Snoop Flex - Velvet', 'price': '$100.00'}
{'name': 'Skechers Slip-ins: Snoop One - Boss Life Velvet', 'price': '$115.00'}
{'name': 'SKX RESAGRIP', 'price': '$150.00'}
{'name': 'SKX FLOAT', 'price': '$150.00'}
{'name': 'Skechers Slip-ins: Max Cushioning AF - Fortuitous', 'price': '$120.00'}
{'name': 'Skechers Slip-ins: Max Cushioning AF - Game', 'price': '$120.00'}
#...other content omitted for brevity
```

That's it! Your code works and is now scraping content dynamically after clicking a "Load More" button on a paginated website.
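One thing to watch with this script is Splash's render timeout. According to Splash's documentation, the timeout argument defaults to 30 seconds and is capped at 90 seconds unless Splash is started with --max-timeout. In the worst case, the loop above waits 10 × (1.0 s + 5.0 s) = 60 s, before counting the initial page load, so a render could be cut off. The sketch below computes that wait and picks a timeout to pass in the SplashRequest args. The helper names and the 15-second page-load margin are illustrative assumptions.

```python
# Worst-case waiting time of the "Load More" Lua script, and a Splash timeout that covers it.
# The 30 s default and 90 s cap reflect Splash's documented defaults; check your Splash version.
SPLASH_DEFAULT_TIMEOUT = 30
SPLASH_DEFAULT_MAX_TIMEOUT = 90


def worst_case_wait(num_scrolls, wait_after_scroll, wait_after_click):
    # each iteration waits after scrolling and, if the button is visible, again after clicking
    return num_scrolls * (wait_after_scroll + wait_after_click)


def render_timeout(num_scrolls, wait_after_scroll, wait_after_click, page_load_margin=15):
    # add a margin for the initial page load, staying between Splash's default and its cap
    needed = worst_case_wait(num_scrolls, wait_after_scroll, wait_after_click) + page_load_margin
    return min(max(needed, SPLASH_DEFAULT_TIMEOUT), SPLASH_DEFAULT_MAX_TIMEOUT)


timeout = render_timeout(10, 1.0, 5.0)
# Lua scripts run on the 'execute' endpoint; pass the timeout next to the script, e.g.:
# SplashRequest(url, callback=self.parse, endpoint='execute',
#               args={'lua_source': lua_script, 'timeout': timeout})
```

If the computed value hits the 90-second cap, you either have to reduce num_scrolls or the waits, or start Splash with a higher --max-timeout.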

But that only solves half the problem: many websites use anti-bot measures to block scrapers.

How can you avoid this while scraping with Scrapy?

Getting Blocked when Scraping Multiple Pages with Scrapy

Your scraper can get blocked if a website uses anti-bot measures. These may, for example, flag you as a bot if you request a lot of content too quickly. Getting past them can mean solving CAPTCHAs, rotating proxies, and more.
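Scrapy's built-in settings can at least soften the "too many requests too quickly" signal by slowing the crawl down. They won't solve CAPTCHAs or defeat browser fingerprinting, though. The settings below are real Scrapy options, but the values are illustrative starting points rather than tuned recommendations. You can put them in settings.py or in a spider's custom_settings dictionary.

```python
# settings.py: slow the crawl down (illustrative values, not tuned recommendations)

# base delay in seconds between requests to the same website
DOWNLOAD_DELAY = 1.0
# wait a random 0.5x to 1.5x of DOWNLOAD_DELAY instead of a fixed interval
RANDOMIZE_DOWNLOAD_DELAY = True
# limit parallel requests to a single domain
CONCURRENT_REQUESTS_PER_DOMAIN = 2
# let AutoThrottle adjust the delay based on the server's response times
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 1.0
AUTOTHROTTLE_MAX_DELAY = 10.0
```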

Thankfully, a solution like ZenRows makes your scraping job much easier and integrates with Scrapy to handle all those complexities. It equips you with premium proxies, JavaScript interactions, and everything you need to avoid getting blocked.

Try ZenRows with Scrapy for free and scrape any website.

Check ZenRows' JavaScript instructions documentation for interacting with dynamic web pages.

Conclusion

This article showed you how to use Scrapy for multi-page scraping, covering both traditional and JavaScript-based pagination.

You now know how to:

- Scrape websites with a navigation bar by following the next page link or changing the page number in the URL.

- Use Splash to scrape JavaScript-based pagination, including infinite scrolling and "Load More" buttons.

- Address common web scraping barriers, such as anti-bot measures.

The power of Scrapy is clear, yet barriers like anti-bot measures can present challenges. ZenRows integrates seamlessly with Scrapy, providing an easy solution to scrape any website. Try ZenRows for free!
