Companies and individuals increasingly need to scrape data from the web, and Ruby is a solid language for the job. Web scraping in Ruby means writing a script that automatically retrieves data from web pages so that you can use the extracted data however you like.

In this step-by-step tutorial, you'll learn how to do web scraping with Ruby using libraries like Nokogiri and Selenium.

Ready? Let's dig in!

Prerequisites

Set Up the Environment

Here are the tools we'll use in this data scraping tutorial:

- Ruby 3+: any version greater than or equal to 3 will do. We'll use 3.2.2 since it's the latest version at the time of writing.

- An IDE supporting Ruby: IntelliJ IDEA with the Ruby Language for IntelliJ plugin is a great choice. Or, if you prefer a free option, Visual Studio Code with the VSCode Ruby extension will do.

If you don't have these tools installed, download them from their official websites and follow the official installation guides. You'll then have everything you need to follow this web scraping Ruby guide.

Once installed, you can verify that Ruby works correctly by launching the command below in your terminal:

ruby -v

It should return the version of Ruby available on your machine, something like this:

ruby 3.2.2 (2023-03-30 revision e51014f9c0) [x86_64-linux]

Initialize a Web Scraping Ruby Project

Create a folder for your Ruby project and then enter it with the commands below:

mkdir simple-web-scraper-ruby

cd simple-web-scraper-ruby

Now, create a scraper.rb file and initialize it as follows:

puts "Hello, World!"

In the terminal, run the command ruby scraper.rb to launch the script. This should print:

Hello, World!

This means your Ruby data scraping script works correctly!

Note that scraper.rb will contain the scraper logic. Import the simple-web-scraper-ruby folder in your Ruby IDE, and you're now ready to put the basics of data scraping with Ruby into practice!

How to Scrape a Website in Ruby

Let's use ScrapeMe as our target website, and we'll use our Ruby spider to visit each page and retrieve the product data from them. The target website only contains a list of Pokemon-inspired elements paginated on several pages.

Let's install some Ruby gems and start scraping.

Step 1: Install HTTParty and Nokogiri

The Net::HTTP library is the standard HTTP client API for Ruby, and you can use it to perform HTTP requests. But its syntax isn't the most convenient, and it may not be the best option for beginners. A more user-friendly HTTP client, like HTTParty, is a better choice.

HTTParty is an intuitive HTTP client that tries to make HTTP fun. And considering how important these requests are when it comes to web crawling, HTTParty will come in handy.

Install HTTParty via the httparty gem with:

gem install httparty

You can now perform HTTP requests to retrieve HTML documents easily. All you need now is an HTML parser! Nokogiri makes it easy to deal with XML and HTML documents in Ruby. It offers a powerful and easy-to-use API that lets you parse HTML pages, select HTML elements, and extract their data.

In other words, Nokogiri helps you perform web scraping in Ruby. You can install it via the nokogiri gem:

gem install nokogiri

Add the following lines on top of your scraper.rb file:

require "httparty"

require "nokogiri"

If your Ruby IDE doesn't report errors, you installed the two gems correctly. Let's go ahead and see how to use HTTParty and Nokogiri!

Step 2: Download Your Target Web Page

Use HTTParty to perform an HTTP GET request to download the web page you want to scrape:

# downloading the target web page

response = HTTParty.get("https://scrapeme.live/shop/")

The HTTParty get() method performs a GET request to the URL passed as a parameter, and the response.body contains the HTML document returned by the server as a response.

Don't flip the table if you get an error at this stage. Many websites block HTTP requests based on their headers. Specifically, they reject requests that don't include a valid User-Agent header as part of their anti-scraping measures. You can set a User-Agent in HTTParty as follows:

response = HTTParty.get("https://scrapeme.live/shop/", {

headers: {

"User-Agent" => "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

},

})
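
For comparison, the same header can be attached with Ruby's built-in Net::HTTP client. The sketch below only builds the request and inspects it, so it runs without touching the network. The USER_AGENT constant name is an illustrative choice, and the commented-out lines show one way you might send the request.

```ruby
require "net/http"
require "uri"

# same User-Agent string used in the HTTParty example above
USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

uri = URI("https://scrapeme.live/shop/")

# building a GET request object without sending it
request = Net::HTTP::Get.new(uri)
request["User-Agent"] = USER_AGENT

# inspecting the header that would be sent
puts request["User-Agent"]

# sending the request performs a real network call:
# response = Net::HTTP.start(uri.host, uri.port, use_ssl: true) do |http|
#   http.request(request)
# end
# puts response.code
```

Compared with this, the HTTParty call needs a single method and a headers hash, which is why the rest of the tutorial sticks with it.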

Step 3: Parse the HTML Document

Use Nokogiri to parse the HTML document contained in response.body.

# parsing the HTML document returned by the server

document = Nokogiri::HTML(response.body)

The Nokogiri::HTML() method accepts an HTML string and returns a Nokogiri document, which has many methods that give you access to all the Nokogiri web scraping features. Let's see how to use them to select HTML elements from the target web page.

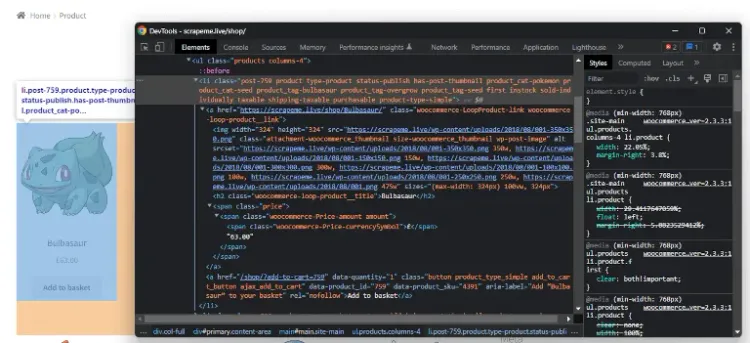

Step 4: Identify the Most Important HTML Elements

To find the HTML elements that hold the data you want, right-click on a product HTML element and select the "Inspect" option to open the DevTools window:

Here you can notice that a li.product HTML element contains:

- An a HTML element that contains the product URL.

- An img HTML element that contains the product image.

- An h2 HTML element that wraps the product name.

- A span HTML element that stores the product price.

Let's scrape data from the product HTML elements with Ruby.

Step 5: Extract Data from those HTML Elements

First, you need a Ruby object to store the scraped data. You can do this by creating a PokemonProduct class with a Ruby Struct:

# defining a data structure to store the scraped data

PokemonProduct = Struct.new(:url, :image, :name, :price)
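
For illustration, here's a short sketch of how the Struct defined above behaves once it holds data. The product values are hypothetical placeholders, not data scraped from the site.

```ruby
# same definition as in the tutorial
PokemonProduct = Struct.new(:url, :image, :name, :price)

# creating an instance with made-up values
product = PokemonProduct.new(
  "https://example.com/bulbasaur",
  "https://example.com/bulbasaur.png",
  "Bulbasaur",
  "63.00"
)

# each attribute gets a reader method
puts product.name

# a Struct converts to an array (handy for CSV rows)
puts product.to_a.inspect

# and to a hash keyed by attribute name
puts product.to_h.inspect
```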

A Struct lets you bundle several attributes in the same data structure without writing a new class from scratch. PokemonProduct has four attributes corresponding to the data to scrape from each product HTML element. Now go ahead and retrieve the list of all li.product HTML elements, like this:

# selecting all HTML product elements

html_products = document.css("li.product")

Thanks to the css() method exposed by Nokogiri, you can select HTML elements based on a CSS selector. Here, css() applies the li.product CSS selector strategy to retrieve all product HTML elements.

Iterate over the list of HTML products and extract the data of interest from each product as follows:

# initializing the list of objects

# that will contain the scraped data

pokemon_products = []

# iterating over the list of HTML products

html_products.each do |html_product|

# extracting the data of interest

# from the current product HTML element

url = html_product.css("a").first.attribute("href").value

image = html_product.css("img").first.attribute("src").value

name = html_product.css("h2").first.text

price = html_product.css("span").first.text

# storing the scraped data in a PokemonProduct object

pokemon_product = PokemonProduct.new(url, image, name, price)

# adding the PokemonProduct to the list of scraped objects

pokemon_products.push(pokemon_product)

end

Fantastic! You just learned how to perform data scraping in Ruby. The snippet above relies on the Ruby web scraping API offered by Nokogiri to scrape the data of interest from each product HTML element. Then it creates a PokemonProduct instance with this data and stores it in the list of scraped products.

It's time to convert the scraped data into a more convenient format.

Step 6: Convert the Scraped Data to CSV

You can easily export the scraped data to a CSV file in Ruby this way:

# defining the header row of the CSV file

csv_headers = ["url", "image", "name", "price"]

CSV.open("output.csv", "wb", write_headers: true, headers: csv_headers) do |csv|

# adding each pokemon_product as a new row

# to the output CSV file

pokemon_products.each do |pokemon_product|

csv << pokemon_product

end

end

The CSV library is part of the default gems, so you can deal with CSV files in Ruby without installing an extra library. If your script doesn't already load it, add require "csv" at the top of the file. The snippet here initializes an output.csv file with a header row, then populates it with the data contained in the list of scraped products.
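
If you'd like to check the CSV output without writing a file, a minimal sketch is to build the same content in memory with CSV.generate. The two products below are hypothetical sample rows.

```ruby
require "csv"

PokemonProduct = Struct.new(:url, :image, :name, :price)

# hypothetical sample data standing in for the scraped products
pokemon_products = [
  PokemonProduct.new("https://example.com/bulbasaur", "https://example.com/bulbasaur.png", "Bulbasaur", "63.00"),
  PokemonProduct.new("https://example.com/ivysaur", "https://example.com/ivysaur.png", "Ivysaur", "87.00")
]

csv_headers = ["url", "image", "name", "price"]

# CSV.generate builds the CSV as a string instead of writing to disk
csv_text = CSV.generate(write_headers: true, headers: csv_headers) do |csv|
  pokemon_products.each do |pokemon_product|
    # converting the Struct to a plain array of field values
    csv << pokemon_product.to_a
  end
end

puts csv_text
```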

Run the scraper.rb data scraping Ruby script in your IDE or with the command below:

ruby scraper.rb

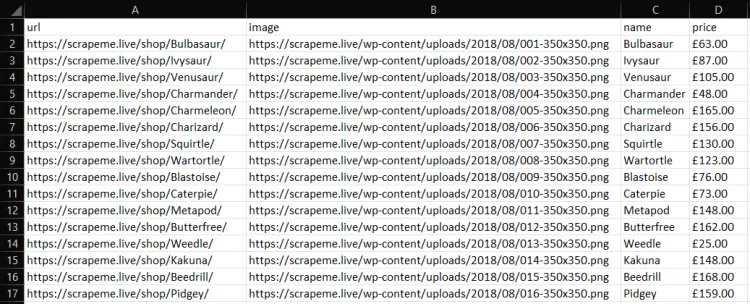

When the script ends, you'll find an output.csv file in the root folder of your Ruby project. Open it and you'll see the following data:

Way to go! You just scraped a web page using Ruby, and the whole scraper takes fewer than 100 lines of code.

Advanced Web Scraping in Ruby

You just learned the basics of web scraping with Ruby. Let's now dig deeper into more advanced approaches.

Web Crawling in Ruby

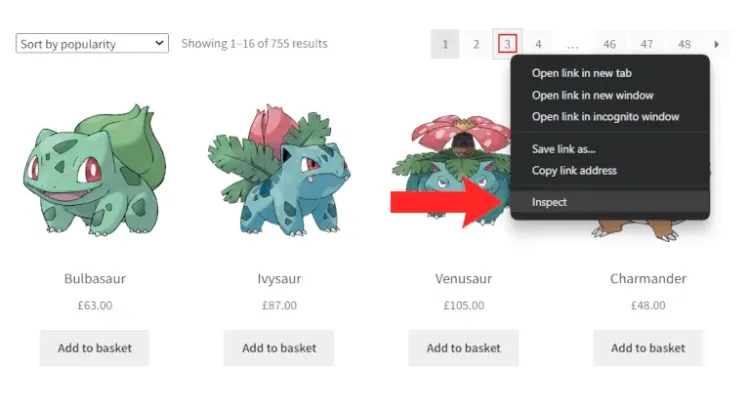

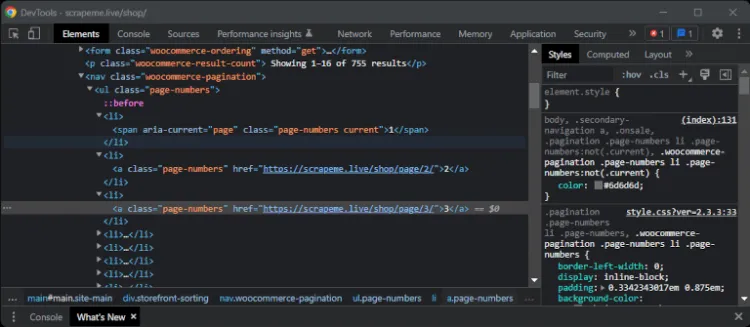

Since the target website involves several web pages, let's do web crawling to visit all the target website pages by following all pagination links. To achieve this, right-click on the pagination number HTML element and select "Inspect":

If you're a Chrome user, your DevTools window will look like this:

Chrome will automatically highlight the HTML element you right-clicked on. Note that you can select all pagination elements with the a.page-numbers CSS selector. Go ahead and extract all pagination links with Nokogiri:

# extracting the list of URLs from

# the pagination elements

pagination_links = document

.css("a.page-numbers")

.map{ |a| a.attribute("href").value }

This snippet returns the list of URLs extracted from the href attribute of each pagination HTML element.

To scrape all pages from a website, you need to write some crawling logic. You'll need some data structures to keep track of the pages visited and avoid crawling a page twice, as well as a limit variable to prevent your Ruby crawler from visiting too many pages:

# initializing the list of pages to scrape with the

# pagination URL associated with the first page

pages_to_scrape = ["https://scrapeme.live/shop/page/1/"]

# initializing the list of pages discovered

# with a copy of pages_to_scrape

pages_discovered = ["https://scrapeme.live/shop/page/1/"]

# current iteration

i = 0

# max pages to scrape

limit = 5

# iterate while there are pages left to scrape

# and the limit has not been reached

while pages_to_scrape.length != 0 && i < limit do

# getting the current page to scrape and removing it from the list

page_to_scrape = pages_to_scrape.pop

# retrieving the current page to scrape

response = HTTParty.get(page_to_scrape, {

headers: {

"User-Agent" => "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

},

})

# parsing the HTML document returned by the server

document = Nokogiri::HTML(response.body)

# extracting the list of URLs from the pagination elements

pagination_links = document

.css("a.page-numbers")

.map{ |a| a.attribute("href").value }

# iterating over the list of pagination links

pagination_links.each do |new_pagination_link|

# if the web page discovered is new and should be scraped

if !(pages_discovered.include? new_pagination_link) && !(pages_to_scrape.include? new_pagination_link)

pages_to_scrape.push(new_pagination_link)

end

# discovering new pages

pages_discovered.push(new_pagination_link)

end

# removing the duplicated elements

pages_discovered = pages_discovered.uniq

# scraping logic...

# incrementing the iteration counter

i = i + 1

end

# exporting to CSV...

This Ruby data scraper analyzes a web page, searches for new links, adds them to the crawling queue and scrapes data from the current page. Then it repeats this logic for each page.

If you set limit to 48, the script will visit the entire website and pages_discovered will store all 48 pagination URLs. As a result, output.csv will contain the data of all 755 Pokemon-inspired products on the website.
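
To see the crawling bookkeeping in isolation, here's a small sketch that runs the same idea against an in-memory link map instead of a live site. The LINKS hash and the crawl method are hypothetical stand-ins for the download and link extraction steps. Note two deliberate differences from the snippet above: it uses a Set for discovered pages and takes pages from the front of the queue with shift rather than pop.

```ruby
require "set"

# hypothetical site: each page maps to the pagination links found on it
LINKS = {
  "/page/1/" => ["/page/2/", "/page/3/"],
  "/page/2/" => ["/page/1/", "/page/3/", "/page/4/"],
  "/page/3/" => ["/page/2/", "/page/4/"],
  "/page/4/" => ["/page/3/"]
}

# visits pages starting from `start`, never visiting a page twice,
# and stops after `limit` pages
def crawl(start, links, limit)
  pages_to_scrape = [start]
  pages_discovered = Set[start]
  visited = []

  while !pages_to_scrape.empty? && visited.length < limit
    # taking the oldest queued page (breadth-first order)
    page = pages_to_scrape.shift
    visited << page

    links.fetch(page, []).each do |link|
      # Set#add? returns nil when the link was already discovered
      pages_to_scrape << link if pages_discovered.add?(link)
    end
  end

  visited
end

puts crawl("/page/1/", LINKS, 10).inspect
puts crawl("/page/1/", LINKS, 2).inspect
```

Because Set#add? both records the link and reports whether it was new, the separate duplicate-removal step is no longer needed in this variant.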

Put It All Together

This is what the entire Ruby data scraper looks like:

require "httparty"

require "nokogiri"

# defining a data structure to store the scraped data

PokemonProduct = Struct.new(:url, :image, :name, :price)

# initializing the list of objects

# that will contain the scraped data

pokemon_products = []

# initializing the list of pages to scrape with the

# pagination URL associated with the first page

pages_to_scrape = ["https://scrapeme.live/shop/page/1/"]

# initializing the list of pages discovered

# with a copy of pages_to_scrape

pages_discovered = ["https://scrapeme.live/shop/page/1/"]

# current iteration

i = 0

# max pages to scrape

limit = 5

# iterate while there are pages left to scrape

# and the limit has not been reached

while pages_to_scrape.length != 0 && i < limit do

# getting the current page to scrape and removing it from the list

page_to_scrape = pages_to_scrape.pop

# retrieving the current page to scrape

response = HTTParty.get(page_to_scrape, {

headers: {

"User-Agent" => "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

},

})

# parsing the HTML document returned by the server

document = Nokogiri::HTML(response.body)

# extracting the list of URLs from the pagination elements

pagination_links = document

.css("a.page-numbers")

.map{ |a| a.attribute("href").value }

# iterating over the list of pagination links

pagination_links.each do |new_pagination_link|

# if the web page discovered is new and should be scraped

if !(pages_discovered.include? new_pagination_link) && !(pages_to_scrape.include? new_pagination_link)

pages_to_scrape.push(new_pagination_link)

end

# discovering new pages

pages_discovered.push(new_pagination_link)

end

# removing the duplicated elements

pages_discovered = pages_discovered.uniq

# selecting all HTML product elements

html_products = document.css("li.product")

# iterating over the list of HTML products

html_products.each do |html_product|

# extracting the data of interest

# from the current product HTML element

url = html_product.css("a").first.attribute("href").value

image = html_product.css("img").first.attribute("src").value

name = html_product.css("h2").first.text

price = html_product.css("span").first.text

# storing the scraped data in a PokemonProduct object

pokemon_product = PokemonProduct.new(url, image, name, price)

# adding the PokemonProduct to the list of scraped objects

pokemon_products.push(pokemon_product)

end

# incrementing the iteration counter

i = i + 1

end

# defining the header row of the CSV file

csv_headers = ["url", "image", "name", "price"]

CSV.open("output.csv", "wb", write_headers: true, headers: csv_headers) do |csv|

# adding each pokemon_product as a new row to the output CSV file

pokemon_products.each do |pokemon_product|

csv << pokemon_product

end

end

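Parallel Web Scraping in Ruby

Visiting the pages one at a time is slow. To scrape several pages concurrently, install the parallel gem:

gem install parallel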

And import it by adding this line to your Ruby file:

require "parallel"

You can now access several utility functions to perform parallel computation in Ruby.

Now, you have the list of web pages to scrape stored in an array:

pages_to_scrape = [

"https://scrapeme.live/shop/page/1/",

"https://scrapeme.live/shop/page/2/",

# ...

"https://scrapeme.live/shop/page/48/"

]

The next step is to build a parallel web scraper with Ruby. To do this, first instantiate a Mutex to implement a semaphore for coordinating access to shared data from multiple concurrent threads. You'll need it because arrays aren't thread-safe in Ruby. Then, use the map() method exposed by Parallel to run parallel web scraping on in_threads pages at a time.

# initializing a semaphore

semaphore = Mutex.new

Parallel.map(pages_to_scrape, in_threads: 4) do |page_to_scrape|

# retrieving the current page to scrape

response = HTTParty.get(page_to_scrape, {

headers: {

"User-Agent" => "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

},

})

# parsing the HTML document returned by server

document = Nokogiri::HTML(response.body)

# scraping logic...

# since arrays are not thread-safe in Ruby

semaphore.synchronize {

# adding the PokemonProduct to the list of scraped objects

pokemon_products.push(pokemon_product)

}

end
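
To show why the Mutex matters without needing the parallel gem or a network connection, here's a sketch of the same pattern built on plain Ruby threads. This swaps Parallel.map for a hand-rolled pool of four workers reading from a Queue, and the scrape_page lambda is a hypothetical stand-in for the download and parse step.

```ruby
# pages to process (only the URL strings are used, no request is made)
pages = (1..8).map { |n| "https://scrapeme.live/shop/page/#{n}/" }

# filling a thread-safe queue with the work items
queue = Queue.new
pages.each { |page| queue << page }
# closing the queue makes pop return nil once it is empty
queue.close

# shared array guarded by a mutex
results = []
mutex = Mutex.new

# hypothetical stand-in for downloading and parsing a page
scrape_page = lambda do |page|
  sleep(0.01)
  "scraped #{page}"
end

# starting 4 worker threads, mirroring in_threads: 4
workers = Array.new(4) do
  Thread.new do
    while (page = queue.pop)
      item = scrape_page.call(page)
      # only one thread at a time may touch the shared array
      mutex.synchronize { results << item }
    end
  end
end

# waiting for all workers to finish
workers.each(&:join)

puts results.length
```

The order of results depends on thread scheduling, so sort them if you need a stable order in the output file.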

Incredible! You now know how to make your Ruby web scraping script lightning fast! Note that this is just a simple snippet. Here is the complete parallel Ruby spider:

require "httparty"

require "nokogiri"

require "parallel"

# defining a data structure to store the scraped data

PokemonProduct = Struct.new(:url, :image, :name, :price)

# initializing the list of objects

# that will contain the scraped data

pokemon_products = []

# initializing the list of pages to scrape

pages_to_scrape = [

"https://scrapeme.live/shop/page/2/",

"https://scrapeme.live/shop/page/3/",

"https://scrapeme.live/shop/page/4/",

"https://scrapeme.live/shop/page/5/",

"https://scrapeme.live/shop/page/6/",

# ...

"https://scrapeme.live/shop/page/48/"

]

# initializing a semaphore

semaphore = Mutex.new

Parallel.map(pages_to_scrape, in_threads: 4) do |page_to_scrape|

# retrieving the current page to scrape

response = HTTParty.get(page_to_scrape, {

headers: {

"User-Agent" => "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

},

})

# parsing the HTML document returned by server

document = Nokogiri::HTML(response.body)

# selecting all HTML product elements

html_products = document.css("li.product")

# iterating over the list of HTML products

html_products.each do |html_product|

# extracting the data of interest

# from the current product HTML element

url = html_product.css("a").first.attribute("href").value

image = html_product.css("img").first.attribute("src").value

name = html_product.css("h2").first.text

price = html_product.css("span").first.text

# storing the scraped data in a PokemonProduct object

pokemon_product = PokemonProduct.new(url, image, name, price)

# since arrays are not thread-safe in Ruby

semaphore.synchronize {

# adding the PokemonProduct to the list of scraped objects

pokemon_products.push(pokemon_product)

}

end

end

# defining the header row of the CSV file

csv_headers = ["url", "image", "name", "price"]

CSV.open("output.csv", "wb", write_headers: true, headers: csv_headers) do |csv|

# adding each pokemon_product as a new row

# to the output CSV file

pokemon_products.each do |pokemon_product|

csv << pokemon_product

end

end

Scraping Dynamic Content Websites with a Headless Browser in Ruby

Many websites use JavaScript to perform HTTP requests through AJAX for retrieving data asynchronously. They can then use this data to update the DOM accordingly and, similarly, they can use JavaScript to manipulate the DOM dynamically.

In this case, the HTML document returned by the server may not include all the data of interest. If you want to scrape dynamic content websites, you need a tool that can run JavaScript, like a headless browser.

A headless browser is a technology that enables you to load web pages in a browser with no GUI. One of the most reliable Ruby libraries that provides headless browser functionality is the Ruby binding for Selenium.

To use it to scrape a dynamic webpage, install the selenium-webdriver Selenium gem in Ruby with:

gem install selenium-webdriver

After installing Selenium, run the Ruby scraper below to retrieve the data from ScrapeMe:

require "selenium-webdriver"

# defining a data structure to store the scraped data

PokemonProduct = Struct.new(:url, :image, :name, :price)

# initializing the list of objects

# that will contain the scraped data

pokemon_products = []

# configuring Chrome to run in headless mode

options = Selenium::WebDriver::Chrome::Options.new

options.add_argument("--headless")

# initializing the Selenium Web Driver for Chrome

driver = Selenium::WebDriver.for :chrome, options: options

# visiting a web page in the browser opened

# by Selenium behind the scenes

driver.navigate.to "https://scrapeme.live/shop/"

# selecting all HTML product elements

html_products = driver.find_elements(:css, "li.product")

# iterating over the list of HTML products

html_products.each do |html_product|

# extracting the data of interest

# from the current product HTML element

url = html_product.find_element(:css, "a").attribute("href")

image = html_product.find_element(:css, "img").attribute("src")

name = html_product.find_element(:css, "h2").text

price = html_product.find_element(:css, "span").text

# storing the scraped data in a PokemonProduct object

pokemon_product = PokemonProduct.new(url, image, name, price)

# adding the PokemonProduct to the list of scraped objects

pokemon_products.push(pokemon_product)

end

# closing the driver

driver.quit

# exporting logic

Selenium allows you to select HTML elements in Ruby with the find_elements() method. When you call find_element() on a Selenium HTML element, it searches that element's descendants. Then, with methods like attribute() and text(), you can extract data from HTML nodes.

This approach to web scraping in Ruby removes the need for HTTParty and Nokogiri because Selenium uses a browser to perform the GET request and render the resulting HTML document. So you can directly visit a new web page in Selenium with:

# selecting a pagination element

pagination_element = driver.find_element(:css, "a.page-numbers")

# visiting a new page

# directly in the browser

pagination_element.click

# waiting for the web page to load...

puts driver.title # prints "Products – Page 2 – ScrapeMe"

When you call the click method, Selenium loads the linked page in the headless browser, letting you do web crawling in Ruby as a human user would. Because the requests come from a real browser, this can make your scraper harder to block.

Other Web Scraping Libraries in Ruby

Other useful libraries for web scraping with Ruby are:

- ZenRows: a web scraping API that can easily extract data from web pages and automatically bypass any anti-bot or anti-scraping system. ZenRows also offers headless browsers, rotating proxies and a 99% uptime guarantee.

- OpenURI: a Ruby gem that wraps the Net::HTTP library to make it easier to use. With OpenURI, you can easily perform HTTP requests in Ruby.

- Kimurai: an open-source and modern data scraping Ruby framework that works with headless browsers, like Chromium, headless Firefox and PhantomJS, to let you interact with websites that require JavaScript. It also supports simple HTTP requests for static content websites.

- Watir: an open-source Ruby library for performing automated testing. Watir allows you to instruct a browser and offers headless browser functionality.

- Capybara: a high-level Ruby library to build tests for web apps. Capybara is web-driver agnostic and supports Rack::Test and Selenium.

Conclusion

This step-by-step tutorial discussed the basic things you need to know to get started on Ruby web scraping.

As a recap, you learned:

- How to do basic web scraping in Ruby with Nokogiri.

- How to define web crawling logic to scrape an entire website.

- Why you may require a Ruby headless browser library.

- How to scrape and crawl dynamic content websites with the Ruby binding for Selenium.

Web data scraping using Ruby can get troublesome because there are many websites out there with anti-scraping technologies, and bypassing them can be challenging. The best way to avoid these headaches is to use a Ruby web scraping API like ZenRows. It's capable of handling anti-bot bypasses with just a single API call.

You can get started for free and try ZenRows' scraping features, like rotating proxies and a headless browser.

Frequent Questions

How Do You Scrape Data from a Website in Ruby?

You can scrape data from a website in Ruby, just as in any other programming language. This becomes easier if you adopt one or more Ruby web scraping libraries. Use them to connect to your target website, select HTML elements from its pages and extract data from them.

Can You Scrape with Ruby?

Yes, you can! Ruby is a general-purpose programming language with an unbelievable number of libraries. There are several Ruby gems to support your web scraping project, like httparty, nokogiri, open-uri, selenium-webdriver, watir and capybara.

What Is the Most Popular Web Scraping Library in Ruby?

Nokogiri is the most popular web scraping library in Ruby. It's a gem that allows you to easily parse HTML and XML documents and extract data from them. Nokogiri is generally used with an HTTP client, like OpenURI or HTTParty.
