Most modern websites rely on client-side rendering to display content. So when you make a Scrapy request to such websites, the data you need is often not in the response, because Scrapy doesn't execute JavaScript on its own. To access client-side data, you must render JavaScript with Scrapy.

In this tutorial, we'll walk you through the five best Scrapy JavaScript rendering middlewares for handling dynamic content.

Does Scrapy Work with JavaScript?

Scrapy works with JavaScript, but you must use a headless browser middleware for that. And besides rendering content, you gain the capability to interact with dynamic web pages.

Here's a quick comparison table of the five most popular Scrapy middlewares to render JavaScript:

| Tool | Language | Ease of Use | Browser Support | Anti-bot Bypass | Scale-up Costs |
|---|---|---|---|---|---|
| Scrapy-splash | Lua | Moderate | Splash | Can integrate with other services for some bypass techniques | Expensive |
| Scrapy with ZenRows | Python, NodeJS, Java, Ruby, PHP, Go, and any other | Easy | All modern rendering engines | Bypasses all anti-bot solutions, regardless of their level of complexity | Cost-effective |
| Scrapy-playwright | Python, Java, .NET, JavaScript, and TypeScript | Moderate | All modern rendering engines, including Chromium, Webkit, and Firefox | Playwright Stealth plugin and customizations | Expensive |
| Scrapy-puppeteer | JavaScript, plus unofficial Python support via Pyppeteer | Moderate | Chrome or Chromium | Puppeteer stealth plugin and customizations | Expensive |
| Scrapy-selenium | JavaScript and Python | Moderate | Chrome, Firefox, Edge, and Safari | Undetected ChromeDriver plugin, Selenium Stealth and customizations | Expensive |

How to Run JavaScript in Scrapy: Headless Browser Options

Let's get an overview of the five best middlewares for JavaScript rendering with Scrapy and see a quick example of each one using Angular.io as a target page (its page title relies on JS rendering).

Ready? Let's dive in.

1. Scrapy with ZenRows

ZenRows is a web scraping API with built-in headless browser functionality for rendering JavaScript and extracting dynamic content. It also provides a complete toolkit for web scraping without getting blocked by anti-bot measures.

Integrating Scrapy with ZenRows gives you rotating premium proxies, anti-CAPTCHA, auto-set User Agent rotation, fingerprinting bypass, and everything necessary to access your desired data.

ZenRows Example: Setting it up

Follow the steps below to render JavaScript with Scrapy using ZenRows.

To get started, sign up to get your ZenRows API key.

Then, create a function that generates the API URL you'll call to retrieve the rendered content from the ZenRows service. This function takes two arguments: url (your target website) and api_key.

To create this function, import the urlencode function from urllib.parse and define the request parameters in a payload dictionary. These parameters include js_render, js_instructions, premium_proxy, and antibot. The js_instructions parameter lets you tell ZenRows to wait for a set time (here, {"wait": 500}) so the JS-rendered content can load.

import scrapy
from urllib.parse import urlencode

def get_zenrows_api_url(url, api_key):
    # Define the necessary parameters
    payload = {
        'url': url,
        'js_render': 'true',
        'js_instructions': '[{"wait": 500}]',
        'premium_proxy': 'true',
        'antibot': 'true',
    }

The urlencode function URL-encodes data, which means converting special characters to their percent-encoded representations. This is useful for creating valid URLs that can be sent in HTTP requests.
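To see what that encoding looks like in practice, here is a small standard-library sketch. The two-key payload is a trimmed-down, illustrative version of the one used in this tutorial.

```python
from urllib.parse import urlencode

# Trimmed-down payload for illustration (keys taken from the tutorial).
payload = {
    'url': 'https://angular.io/docs',
    'js_instructions': '[{"wait": 500}]',
}

encoded = urlencode(payload)
print(encoded)
# url=https%3A%2F%2Fangular.io%2Fdocs&js_instructions=%5B%7B%22wait%22%3A+500%7D%5D
```

Characters such as `:`, `/`, `[`, and `"` are replaced with percent escapes, and the space becomes `+`, so the whole payload can travel safely inside a query string.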

Lastly, construct the API URL by combining the ZenRows base API URL https://api.zenrows.com/v1/, the api_key, and the encoded payload. Then, return the generated API URL, and you'll have your function.

import scrapy
from urllib.parse import urlencode

def get_zenrows_api_url(url, api_key):
    # Define the necessary parameters
    payload = {
        'url': url,
        'js_render': 'true',
        'js_instructions': '[{"wait": 500}]',
        'premium_proxy': 'true',
        'antibot': 'true',
    }

    # Construct the API URL
    api_url = f'https://api.zenrows.com/v1/?apikey={api_key}&{urlencode(payload)}'
    return api_url
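As a possible variation, the sketch below builds js_instructions with json.dumps instead of a hand-written string and places apikey inside the payload so it gets URL-encoded as well. The helper name `build_zenrows_url` and the `wait_ms` argument are hypothetical; the parameter names and base URL are the ones used in this tutorial.

```python
import json
from urllib.parse import urlencode, urlparse, parse_qs

ZENROWS_BASE_URL = 'https://api.zenrows.com/v1/'  # base URL quoted in the tutorial


def build_zenrows_url(url, api_key, wait_ms=500):
    """Hypothetical helper: same parameters as the tutorial, without hand-written JSON."""
    payload = {
        'apikey': api_key,
        'url': url,
        'js_render': 'true',
        # json.dumps keeps the instructions valid JSON if more steps are added later.
        'js_instructions': json.dumps([{'wait': wait_ms}]),
        'premium_proxy': 'true',
        'antibot': 'true',
    }
    return f'{ZENROWS_BASE_URL}?{urlencode(payload)}'


# Decode the query string again to check that nothing was lost in encoding.
api_url = build_zenrows_url('https://angular.io/docs', 'Your_API_Key')
params = parse_qs(urlparse(api_url).query)
print(params['url'][0])              # https://angular.io/docs
print(params['js_instructions'][0])  # [{"wait": 500}]
```

Parsing the generated URL back into its parameters is a quick way to confirm the request carries what you intended before spending API credits on it.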

ZenRows Example: Scraping Dynamic Data

Using the previous code and adding a TestSpider class, the script below is the complete code to render JavaScript using ZenRows:

import scrapy
from urllib.parse import urlencode

def get_zenrows_api_url(url, api_key):
    # Define the necessary parameters
    payload = {
        'url': url,
        'js_render': 'true',
        'js_instructions': '[{"wait": 500}]',
        'premium_proxy': 'true',
        'antibot': 'true',
    }

    # Construct the API URL
    api_url = f'https://api.zenrows.com/v1/?apikey={api_key}&{urlencode(payload)}'
    return api_url

class TestSpider(scrapy.Spider):
    name = 'test'

    def start_requests(self):
        urls = [
            'https://angular.io/docs',
        ]
        api_key = 'Your_API_Key'
        for url in urls:
            # Make a GET request using the ZenRows API URL
            api_url = get_zenrows_api_url(url, api_key)
            yield scrapy.Request(api_url, callback=self.parse)

    def parse(self, response):
        # Extract and yield the title tag
        title = response.css('title::text').get()
        yield {'title': title}

Run it, and you should get the following result:

{'title': 'Angular - Introduction to the Angular docs'}

Awesome!

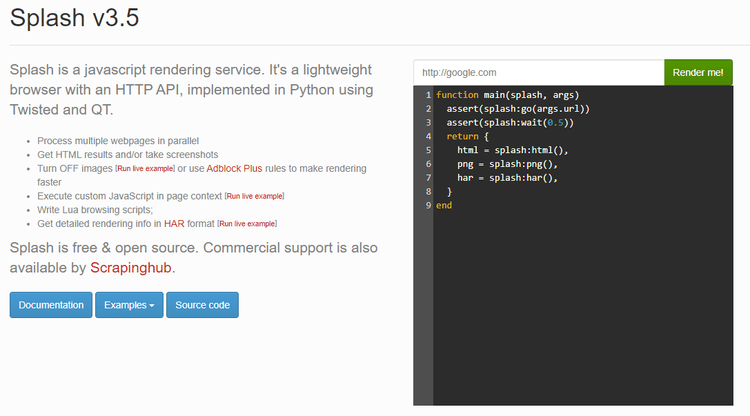

2. Scrapy Splash

Scrapy-splash integrates Scrapy with Splash, a lightweight headless browser. It allows you to load web pages with JavaScript in Scrapy and simulate user behavior by sending requests to the Splash server's HTTP API.

While Splash doesn't hold the same level of popularity as Puppeteer and Playwright, it's actively maintained by the developers behind Scrapy. So, its JavaScript rendering and web scraping capabilities are up to date.

As a plus, Splash is asynchronous. That means it can process multiple web pages in parallel. It can also turn off images or block ads using the Adblock feature to reduce load time.

Setting up this middleware isn't very straightforward, but let's get into it.

Scrapy Splash Example: Setting it up

Splash is available as a Docker image. Thus, to get started, install and run Docker version 17 or newer. Then, pull the Docker image using the following command.

docker pull scrapinghub/splash

Next, create and start a new Splash container. It'll host the Splash service on localhost (your IP address) at port 8050 (HTTP) and make it accessible via HTTP requests.

docker run -it -p 8050:8050 --rm scrapinghub/splash

You can access it by making requests to the appropriate endpoint, which is usually http://localhost:8050/. To confirm everything is running correctly, visit the URL above using a browser. You should see the Splash web interface.

Next is integrating with Scrapy for JavaScript rendering. To do that, you need both the Scrapy-splash library and Scrapy. So, install both using pip.

pip install scrapy scrapy-splash

Then, create a Scrapy project and generate your spider using the following commands.

$ scrapy startproject project_name

$ scrapy genspider spider_name start_url

This will initialize a new Scrapy project and create a Scrapy spider with the specified name. Remember to replace project_name and spider_name.

After that, configure Scrapy to use Splash. Start by adding the Splash API URL to your settings.py file.

SPLASH_URL = 'http://[host]:8050'

Replace [host] with your machine's IP address.

Configure the Scrapy-splash middleware by adding it to the DOWNLOADER_MIDDLEWARES settings and changing the HttpCompressionMiddleware priority to 810. This priority adjustment is necessary to avoid response decompression by the Splash service. In other words, it enables Splash to handle requests without interruptions.

DOWNLOADER_MIDDLEWARES = {
    'scrapy_splash.SplashCookiesMiddleware': 723,
    'scrapy_splash.SplashMiddleware': 725,
    'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 810,
}

Add SplashDeduplicateArgsMiddleware to the SPIDER_MIDDLEWARES setting. This middleware helps to save space by avoiding duplicate Splash arguments in a disk request queue.

SPIDER_MIDDLEWARES = {
    'scrapy_splash.SplashDeduplicateArgsMiddleware': 100,
}

Set a custom DUPEFILTER_CLASS to specify the class Scrapy should use as its duplication filter.

DUPEFILTER_CLASS = 'scrapy_splash.SplashAwareDupeFilter'

There are other settings you can add to change the default settings. You can find them on the Scrapy-splash GitHub page. But the above is all you need to integrate Scrapy with Splash.

Scrapy Splash Example: Scraping Dynamic Data

Now, let's use Splash in a spider to render dynamic content and retrieve the necessary data.

To do that, use the scrapy_splash.SplashRequest instead of the default scrapy.Request.

import scrapy
from scrapy_splash import SplashRequest

class SplashSpider(scrapy.Spider):
    name = 'splash_spider'
    start_urls = ['https://angular.io/docs']

    def start_requests(self):
        # Generate SplashRequests to the specified start URLs with a 2-second delay.
        for url in self.start_urls:
            yield SplashRequest(url, self.parse, args={'wait': 2})

    def parse(self, response):
        # Extract the title from the response HTML using a CSS selector.
        title = response.css('title::text').get()
        yield {'title': title}

The code block above defines a Scrapy spider that sends requests to the URLs specified in the start_urls list. Each request is a SplashRequest, which utilizes the Splash rendering service. The requests are processed with a specified delay of 2 seconds using the args parameter, and the parse method extracts the title text from the response HTML using a CSS selector.

Run the code using scrapy crawl spider_name, and you'll get the page title.

{'title': 'Angular - Introduction to the Angular docs'}

Did it work? If yes, congrats! Otherwise, it's important to note that Splash doesn't always render content for websites that rely on Angular JS and React. So, this code might not work for you.
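One way to check what Splash itself returns, independently of Scrapy, is to request the page from Splash's `render.html` endpoint, which takes `url` and `wait` arguments. The sketch below only builds that URL; it assumes Splash is listening on http://localhost:8050 as set up earlier, and `splash_render_url` is a hypothetical helper name.

```python
from urllib.parse import urlencode


def splash_render_url(target_url, wait=2, splash_base='http://localhost:8050'):
    """Build a URL for Splash's render.html endpoint (hypothetical helper)."""
    query = urlencode({'url': target_url, 'wait': wait})
    return f'{splash_base}/render.html?{query}'


print(splash_render_url('https://angular.io/docs'))
# http://localhost:8050/render.html?url=https%3A%2F%2Fangular.io%2Fdocs&wait=2
```

Opening that URL in a browser while the container is running shows the HTML Splash produced. If the title is missing there too, the problem lies with Splash's rendering of the site rather than with your spider.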

Check out our complete tutorial on Scrapy Splash to learn more.

3. Scrapy Playwright

The Scrapy-playwright library is a download handler that allows you to leverage Playwright's services in Scrapy to get JavaScript capabilities.

Playwright is a powerful headless browser that automates browser interactions using a few lines of code. Compared to other known tools like Puppeteer and Selenium, its ease of use makes it a popular web scraping solution and the least difficult to scale.

Like most headless browsers, Playwright can imitate natural user interactions, including clicking page elements and scrolling through a page. Also, Playwright supports multiple browsers, including Firefox, Chrome, and Webkit.

Although Playwright is primarily a Node JS library, you can use its API in TypeScript, Python, .NET, and Java. This makes it easy to integrate Playwright with Scrapy.

Here's how:

Scrapy Playwright Example: Setting It Up

Integrating asyncio-based projects like Playwright requires Scrapy 2.0 or newer.

To get started, install Scrapy-playwright using the following command.

pip install scrapy-playwright

Here, Playwright works as a dependency, so it gets installed when you run the above command. However, you might need to install the browsers. You can do this by running this command.

playwright install

You can also choose to install the specific browsers you want to use. For example:

playwright install firefox chromium

Next, configure Scrapy to use Playwright by replacing the default Download Handlers with Scrapy Playwright Download Handlers and setting the asyncio-based Twisted reactor.

DOWNLOAD_HANDLERS = {
    "http": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
    "https": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
}

TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"
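If you installed a specific browser in the previous step, the fragment below is a sketch of how you might select it. `PLAYWRIGHT_BROWSER_TYPE` and `PLAYWRIGHT_LAUNCH_OPTIONS` are scrapy-playwright settings; the values shown are assumptions chosen for illustration.

```python
# settings.py (additions; values are illustrative)

# Which Playwright browser to launch: "chromium", "firefox", or "webkit".
PLAYWRIGHT_BROWSER_TYPE = "firefox"

# Keyword arguments passed to the browser's launch call.
PLAYWRIGHT_LAUNCH_OPTIONS = {
    "headless": True,
}
```

Leaving these out keeps the library's defaults, so they are only needed when you want a browser or launch behavior other than the default one.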

Note that the ScrapyPlaywrightDownloadHandler class inherits the default Scrapy Handlers. So, you must explicitly mark requests you want to run through the Playwright Handler. Otherwise, the regular Scrapy handler will handle all requests.

Scrapy Playwright Example: Scraping Dynamic Data

Once everything is set up, you can render JavaScript with Playwright by setting the "playwright" key in Request.meta to True. This instructs Scrapy to route the request through Playwright, which renders the page's JavaScript before returning the response.

import scrapy

class PlaywrightSpider(scrapy.Spider):
    name = 'playwright_spider'
    start_urls = ['https://angular.io/docs']

    def start_requests(self):
        for url in self.start_urls:
            # Set the playwright Request.meta key
            yield scrapy.Request(url, meta={"playwright": True})

    def parse(self, response):
        # Extract the page title
        title = response.css('title::text').get()
        yield {'title': title}

This spider sends a request to the target URL and uses Playwright to handle the page rendering before parsing the response and extracting the page title.

When you run the code, you'll get the following outcome.

{'title': 'Angular - Introduction to the Angular docs'}

Congrats on rendering JavaScript with Scrapy through Playwright. Discover more in our in-depth Scrapy Playwright guide.

4. Scrapy Selenium

Scrapy-selenium is a middleware that merges Scrapy with the popular Selenium headless browser.

Selenium is a browser automation framework, available as a Python library, for automating web scraping tasks and rendering dynamic content. Using the WebDriver protocol, the Selenium API can control local and remote instances of different browsers like Chrome, Firefox, and Safari. Besides Python, Selenium supports other languages, including C#, JavaScript, Java, and Ruby.

You can use Selenium with Scrapy for JavaScript by directly importing the Python library or through the Scrapy-selenium middleware. However, the first approach can get a bit complex. Therefore, we'll use the middleware option, which is much easier to implement.

Here's how:

Scrapy Selenium: Setting It Up

Start by installing Scrapy-selenium using the following command.

pip install scrapy-selenium

Note that Scrapy-selenium requires Python 3.6 or newer. So, ensure you have that installed alongside a Selenium-supported browser. We'll use Chrome for this tutorial.

As implied earlier, Selenium needs a Webdriver to control a browser. So, download one that matches the version of the browser you have installed on your machine. Since we're using Chrome 115.0.5790.171, we need the corresponding Chromedriver. For Firefox, you'll need to install the corresponding Geckodriver.

You can discover your Chrome version by navigating to settings and clicking About Chrome.

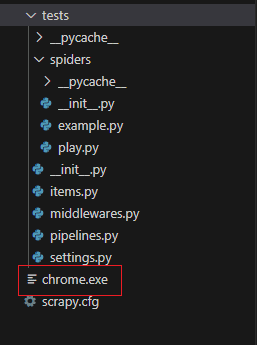
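A quick way to sanity-check a downloaded driver against your browser is to compare version numbers. The sketch below compares only the major version (the part before the first dot), which is a simplifying assumption; the function names and the driver version strings are hypothetical examples.

```python
def major_version(version):
    """Return the leading number of a dotted version string, e.g. '115'."""
    return version.split('.')[0]


def versions_match(browser_version, driver_version):
    """True when browser and driver share the same major version."""
    return major_version(browser_version) == major_version(driver_version)


print(versions_match('115.0.5790.171', '115.0.5790.170'))  # True
print(versions_match('115.0.5790.171', '114.0.5735.90'))   # False
```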

Once you've unzipped your Chromedriver file, add the Chromedriver executable to your Scrapy project.

Next, configure Scrapy to use Selenium by adding the browser, the path to the Chromedriver executable, and arguments to pass to the executable, like in the example below.

# Selenium Chromedriver settings
from shutil import which

SELENIUM_DRIVER_NAME = 'chrome'
# Path to the Chromedriver executable (not the Chrome browser itself)
SELENIUM_DRIVER_EXECUTABLE_PATH = which('chromedriver')
SELENIUM_DRIVER_ARGUMENTS = ['--headless']
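Keep in mind that shutil.which returns None when the executable isn't on your PATH, which would leave the driver path setting empty. A small guard like the sketch below can surface that early; `require_executable` is a hypothetical helper, and the driver name in the comment is an assumption about how the binary is named on your system.

```python
from shutil import which


def require_executable(name):
    """Return the full path to `name`, or raise a readable error if it is not on PATH."""
    path = which(name)
    if path is None:
        raise FileNotFoundError(
            f'{name!r} was not found on PATH; install it or set the path explicitly.'
        )
    return path


# In settings.py you could then write (assuming the binary is named 'chromedriver'):
# SELENIUM_DRIVER_EXECUTABLE_PATH = require_executable('chromedriver')
```

Failing at settings load time with a clear message is usually easier to debug than a middleware error raised when the first request is sent.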

After that, add the Selenium middleware to your DOWNLOADER_MIDDLEWARES setting.

DOWNLOADER_MIDDLEWARES = {
    'scrapy_selenium.SeleniumMiddleware': 800
}

Scrapy Selenium Example: Scraping Dynamic Data

You can use Selenium to render JavaScript by replacing the regular scrapy.Request with SeleniumRequest.

import scrapy
from scrapy_selenium import SeleniumRequest

class SeleniumSpider(scrapy.Spider):
    name = 'selenium_spider'
    start_urls = ['https://angular.io/docs']

    # Initiate requests using Selenium for the start URL
    def start_requests(self):
        for url in self.start_urls:
            yield SeleniumRequest(url=url, callback=self.parse)

    # Parse the response using Selenium
    def parse(self, response):
        # Extract the page title using CSS selector
        title = response.css('title::text').get()
        yield {'title': title}

The spider above uses the SeleniumRequest class to initiate the request. The parse function then processes the responses from Selenium, extracting the page title using a CSS selector.

Run the code to extract the page title.

{'title': 'Angular - Introduction to the Angular docs'}

Bingo!

Note that Scrapy-selenium is no longer maintained and is broken with newer versions of Selenium, so this code might not work for you. However, a workaround is possible.

The workaround is using the Selenium Python library directly in Scrapy. For that, import the necessary modules (webdriver, Service, and Options), issue regular Scrapy requests from start_requests, and drive the Selenium Webdriver from the parse callback for each request.

import scrapy
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options

class SeleniumSpider(scrapy.Spider):
    name = 'selenium_spider'
    start_urls = ['https://angular.io/docs']

    # Generate normal scrapy request
    def start_requests(self):
        for url in self.start_urls:
            yield scrapy.Request(url, self.parse, meta={'url': url})

    def parse(self, response):
        url = response.meta['url']

        # Create a Service object for chromedriver
        service = Service()

        # Create Chrome options
        options = Options()
        options.add_argument("--headless")  # Set the browser to run in headless mode

        # Create a new Selenium webdriver instance with the specified service and options
        driver = webdriver.Chrome(service=service, options=options)

        # Use the driver to navigate to the URL
        driver.get(url)

        # Extract the page title using Selenium
        title = driver.title

        # Close the driver after use
        driver.quit()

        yield {'title': title}

In the code block above, the Webdriver instance is created within the parse method, ensuring that each request has its own instance.
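Because every request starts its own browser, it's worth making sure the browser is closed even when navigation fails. One possible pattern is a small context manager, sketched below. `managed_driver` is a hypothetical helper, and `FakeDriver` is a stand-in used only so the example runs without a browser installed.

```python
from contextlib import contextmanager


@contextmanager
def managed_driver(factory):
    """Create a driver with `factory` and always call quit(), even on errors."""
    driver = factory()
    try:
        yield driver
    finally:
        driver.quit()


class FakeDriver:
    """Stand-in for webdriver.Chrome so the sketch runs without a browser."""

    def __init__(self):
        self.closed = False
        self.title = None

    def get(self, url):
        self.title = f'Title of {url}'

    def quit(self):
        self.closed = True


with managed_driver(FakeDriver) as driver:
    driver.get('https://angular.io/docs')
    title = driver.title

print(title)          # Title of https://angular.io/docs
print(driver.closed)  # True
```

In the spider, you would pass a factory such as `lambda: webdriver.Chrome(service=service, options=options)` instead of `FakeDriver`.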

You'll get the same result as Scrapy-selenium when you run this code.

Check out our detailed tutorial on Scrapy Selenium to learn more.

5. Scrapy Puppeteer

Last on this list is Puppeteer, a powerful headless browser for automating web browsers and rendering dynamic content. Because Puppeteer is a Node.js library with no official Python support, Scrapy can only integrate with its unofficial Python port, Pyppeteer.

Scrapy-pyppeteer grants you access to Puppeteer's functionalities. However, it's not actively maintained, and its GitHub repository recommends Scrapy-playwright for headless browser integrations.

But if you're still interested, here's how to integrate Scrapy with Pyppeteer.

Scrapy Puppeteer: Setting It Up

To get started, install Scrapy-pyppeteer using the following command.

pip install scrapy-pyppeteer

Next, configure Scrapy to use Pyppeteer by adding the following Download Handlers in your settings.py file.

DOWNLOAD_HANDLERS = {
    "http": "scrapy_pyppeteer.handler.ScrapyPyppeteerDownloadHandler",
    "https": "scrapy_pyppeteer.handler.ScrapyPyppeteerDownloadHandler",
}

Like Scrapy-playwright, you must explicitly enable the Pyppeteer Handler for requests you want Pyppeteer to process.

Scrapy Puppeteer Example: Scraping Dynamic Data

To use Pyppeteer in your request, set the "pyppeteer" key in Request.meta to True, that is, meta={"pyppeteer": True}.

import scrapy

class PyppeteerSpider(scrapy.Spider):
    name = 'pyppeteer_spider'
    start_urls = ['https://angular.io/docs']

    def start_requests(self):
        for url in self.start_urls:
            # Set the pyppeteer Request.meta key
            yield scrapy.Request(url, meta={'pyppeteer': True})

    def parse(self, response):
        # Extract the page title
        title = response.css('title::text').get()
        yield {'title': title}

The code snippet above sends a request to the target URL using Pyppeteer and parses the returned response to extract the page title.

Execute the code, and the output will be as follows.

{'title': 'Angular - Introduction to the Angular docs'}

Awesome! However, as stated earlier, Scrapy-pyppeteer is no longer maintained. So, this might not work for you.

Avoid Getting Blocked with Scrapy Headless Browser Middleware for JavaScript Rendering

While integrating Scrapy with headless browsers enables you to render dynamic content before retrieving the response, it doesn't automatically prevent you from getting blocked. This is a major web scraping challenge.

One error that's more specific to Scrapy is the Scrapy 403 Forbidden error, which occurs when your target website recognizes you as a bot and denies you access.

A great first step to overcoming this challenge is using proxies, which allow you to route your requests through different IP addresses. Read our guide on how to use proxies with Scrapy to learn more.

Also, websites often use the User Agent (UA) information that web clients share to identify and block requests. Therefore, rotating realistic UAs while sending matching headers can also help you avoid detection. Our guide on Scrapy User Agents explains this in detail.
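As a rough illustration of User Agent rotation, the sketch below picks one UA at random for each call. The UA strings are illustrative examples that you should replace with current browser values, and `random_headers` is a hypothetical helper name.

```python
import random

# Illustrative User-Agent strings; replace them with current, real browser values.
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 '
    '(KHTML, like Gecko) Version/16.5 Safari/605.1.15',
    'Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0',
]


def random_headers(rng=random):
    """Pick one User-Agent at random and return it as a headers dict."""
    return {'User-Agent': rng.choice(USER_AGENTS)}


headers = random_headers()
print(headers['User-Agent'] in USER_AGENTS)  # True
```

In a spider, the returned dictionary can be passed as the `headers` argument of `scrapy.Request` so that each request goes out with a different UA.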

However, these techniques, alongside many others you may have encountered, are not foolproof and may not work in isolation. Fortunately, ZenRows offers an all-in-one solution that includes everything you need to bypass any anti-bot challenge. Moreover, you can leverage ZenRows functionality in Scrapy with a single API call.

Conclusion

Scrapy is a powerful web scraping tool. However, it requires integrations with headless browsers or web engines to render dynamic content that relies on JavaScript.

Selenium, Puppeteer, Playwright, and Splash are great tools for executing JavaScript, but they can get complex and expensive to scale. Of the options discussed in this article, ZenRows is the most reliable and easiest to scale.

In addition to rendering JavaScript, ZenRows handles all anti-bot bypass needs, allowing you to focus on extracting the necessary data. Sign up now to try ZenRows for free.

Frequent Questions

Can We Use Scrapy for JavaScript?

We can use Scrapy for JavaScript but must integrate with headless browsers. Scrapy, by itself, doesn't have JavaScript rendering functionality. However, with tools like Playwright, Splash, Selenium, and the all-in-one ZenRows, we can use Scrapy to render JavaScript.

What Is the Alternative to Scrapy JavaScript?

The alternatives to Scrapy JavaScript are web scraping solutions with headless browser functionality. Tools like Selenium, Puppeteer, and Playwright are great for making HTTP requests and rendering responses before parsing. That said, for an all-around Scrapy alternative that executes JavaScript while handling all anti-bot bypasses, use ZenRows.

Can Scrapy Replace Selenium?

Scrapy can replace Selenium by integrating with headless browsers like Splash and Playwright. While they're both Python libraries, Selenium is a headless browser that can render JavaScript and simulate user interactions but is expensive to scale. On the other hand, Scrapy can do the same but better by integrating with scalable solutions like ZenRows.
