Web scraping with Scrapy enables quick data extraction from the web, but developers frequently get blocked by target websites. The reason? Websites use a variety of measures to detect bots, such as inspecting the User-Agent request header.

In this tutorial, you'll learn why the Scrapy User Agent matters, how to change it, and how to rotate it automatically to increase your chances of success while scraping.

Let's get started!

What Is a User Agent in Scrapy?

When making an HTTP request, the client sends additional data to the server, known as request headers, which contain information about the client and the expected response.

HTTP request headers are represented as key-value pairs, where the key is the header name and the value is the data associated with that header. For web scraping, the User Agent (UA) is a critical header: websites can flag you as a bot based on the information it contains, or notice that the same client is making an unusually large number of requests.
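To make the key-value structure concrete, here's a minimal sketch that uses Python's standard-library `urllib.request` purely for illustration (it builds a request object without sending it). The target URL and the extra `Accept` header are example values, not something Scrapy requires.

```python
from urllib.request import Request

UA = (
    "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
)

# Each header is a name (the key) mapped to its data (the value)
headers = {
    "User-Agent": UA,
    "Accept": "application/json",
}

# Building the Request object does not send anything over the network
req = Request("https://httpbin.io/user-agent", headers=headers)

# urllib normalizes header names with str.capitalize(), e.g. "User-agent"
for name, value in req.header_items():
    print(f"{name}: {value}")
```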

UAs typically include your operating system, browser version, and more. They look like this:

Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36
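As a rough illustration of what's inside that string, the sketch below pulls out the platform section (the first parenthesized group) and the Chrome version with two regular expressions. The `describe_user_agent()` helper is hypothetical and deliberately naive; dedicated UA parsers handle far more browser formats.

```python
import re

UA = (
    "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
)


def describe_user_agent(ua):
    """Return the first parenthesized platform token and the Chrome version, if any."""
    platform_match = re.search(r"\(([^)]*)\)", ua)
    chrome_match = re.search(r"Chrome/([\d.]+)", ua)
    return {
        "platform": platform_match.group(1) if platform_match else None,
        "chrome_version": chrome_match.group(1) if chrome_match else None,
    }


print(describe_user_agent(UA))
# {'platform': 'Windows NT 10.0; WOW64', 'chrome_version': '109.0.0.0'}
```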

However, the default Python Scrapy User Agent is different.

What Is the Default User Agent in Scrapy?

Scrapy provides a default User-Agent header that is used when making HTTP requests to websites. It looks like this:

Scrapy/2.9.0 (+https://scrapy.org)

2.9.0 represents the Scrapy version.

As you might expect, the default Scrapy User Agent makes it easy for websites to flag you as a bot and block you. Luckily, the library lets you customize your UA, and that's what we're going to do next.
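To see why, consider a deliberately naive server-side check. The `looks_like_bot()` function and its marker list are hypothetical, made up for this sketch; real anti-bot systems combine many more signals, but even a simple substring test like this one catches the default Scrapy UA.

```python
# Hypothetical markers a site might look for in the User-Agent header
BOT_MARKERS = ("scrapy", "python-requests", "curl/", "wget/")


def looks_like_bot(user_agent):
    """Flag empty UAs or UAs that name a known HTTP library or tool."""
    ua = (user_agent or "").lower()
    return ua == "" or any(marker in ua for marker in BOT_MARKERS)


print(looks_like_bot("Scrapy/2.9.0 (+https://scrapy.org)"))  # True
print(looks_like_bot(
    "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
))  # False
```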

How Do You Set a Custom User Agent in Scrapy?

In this section, you'll learn how to customize your User Agent and then rotate it so that you aren't flagged as a bot.

As a prerequisite, create a spider.

Start by installing Scrapy via pip if you don't already have it:

```bash
pip install scrapy
```

Then, create a new Scrapy project (for example, name it scraper):

```bash
# Syntax: scrapy startproject <project_name>
scrapy startproject scraper
```

Now, generate a basic spider that targets https://httpbin.io/user-agent, an endpoint that returns the User Agent it receives, so you can check your new UA later. To do so, run the genspider command in your project directory:

```bash
# Syntax: scrapy genspider <spider_name> <target_website>
scrapy genspider headers httpbin.io/user-agent
```

Update the content of the generated spider file (spiders/headers.py) to log the response from your target URL:

```python
import scrapy


class HeadersSpider(scrapy.Spider):
    name = 'headers'
    allowed_domains = ['httpbin.io']
    start_urls = ['https://httpbin.io/user-agent']

    def parse(self, response):
        # httpbin echoes back the User-Agent it received as JSON
        self.logger.info(f'RESPONSE: {response.text}')
```

Run the spider in your project root:

```bash
# Syntax: scrapy crawl <spider_name>
scrapy crawl headers
```

You'll get a response like the one below in the Scrapy logs in your terminal:

"user-agent": "Scrapy/2.9.0 (+https://scrapy.org)"

This is the response from HTTPBin that displays our starting point: the default Scrapy User Agent. Let's change it.
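If you'd rather log just the UA instead of the whole body, you can parse the JSON that httpbin returns. The sketch below uses the standard `json` module on a sample body; the helper name `extract_user_agent()` is hypothetical. Inside a Scrapy callback, `response.json()["user-agent"]` (available on text responses since Scrapy 2.2) should do the same.

```python
import json


def extract_user_agent(body):
    """Return the "user-agent" field from an httpbin /user-agent JSON body."""
    return json.loads(body)["user-agent"]


# Sample body shaped like the httpbin response shown above
body = '{\n  "user-agent": "Scrapy/2.9.0 (+https://scrapy.org)"\n}'
print(extract_user_agent(body))  # Scrapy/2.9.0 (+https://scrapy.org)
```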

Step 1: Generate a Random UA

Using a realistic User Agent is essential, yet many scrapers get the small details wrong, which makes them easier to detect.

Check out our guide for an in-depth technical overview, or just grab a working UA from its list. For now, we'll use this one:

Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36

Step 2: Set a New User Agent in Scrapy with Middleware

You can set a new UA in Scrapy in one of two ways: globally for all spiders in your project or locally for specific spiders. We recommend the first approach, but we'll show you both.

Global Scope

To set the User Agent globally, define the USER_AGENT variable in the settings.py file inside your project's package folder (scraper/settings.py within the project root created above):

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36'

Run the crawl command again, and you should see your custom UA in the logged response this time:

"user-agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

Alternatively, you can specify the User-Agent key of the DEFAULT_REQUEST_HEADERS dictionary in the same settings file to get the same result:

```python
DEFAULT_REQUEST_HEADERS = {
    # ... Other headers
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
}
```
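Why do both settings work, and which wins if you set both? In Scrapy's default configuration, DefaultHeadersMiddleware (priority 400) applies DEFAULT_REQUEST_HEADERS before UserAgentMiddleware (priority 500) applies USER_AGENT, and both only fill in headers that are still missing. The sketch below is a simplified model of that ordering using plain dicts and `setdefault()`; the function names are hypothetical and not Scrapy's real classes.

```python
def default_headers_mw(headers, default_request_headers):
    # Models DefaultHeadersMiddleware (priority 400): only fills missing headers
    for name, value in default_request_headers.items():
        headers.setdefault(name, value)


def user_agent_mw(headers, user_agent):
    # Models UserAgentMiddleware (priority 500): only fills a missing User-Agent
    if user_agent:
        headers.setdefault("User-Agent", user_agent)


def resolve_headers(default_request_headers, user_agent):
    headers = {}
    # Lower priority numbers run first when a request goes out
    default_headers_mw(headers, default_request_headers)
    user_agent_mw(headers, user_agent)
    return headers


print(resolve_headers({}, "UA from USER_AGENT"))
# {'User-Agent': 'UA from USER_AGENT'}
print(resolve_headers({"User-Agent": "UA from DEFAULT_REQUEST_HEADERS"}, "UA from USER_AGENT"))
# {'User-Agent': 'UA from DEFAULT_REQUEST_HEADERS'}
```

In this model, a User-Agent in DEFAULT_REQUEST_HEADERS takes precedence over USER_AGENT because it's applied first.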

Local Scope

If you want to set the User Agent for a specific spider instead, add USER_AGENT to the custom_settings dictionary, a class attribute of that spider:

```python
# ...

class HeadersSpider(scrapy.Spider):
    name = 'headers'
    allowed_domains = ['httpbin.io']
    start_urls = ['https://httpbin.io/user-agent']

    # Spider-level settings override the ones in settings.py
    custom_settings = {
        'USER_AGENT': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    }

    def parse(self, response):
        self.logger.info(f'RESPONSE: {response.text}')
```

You should get the same result as with the global scope approach.
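If USER_AGENT is defined in several places, Scrapy keeps the value with the highest settings priority. The sketch below is a simplified model using the priority numbers from Scrapy's SETTINGS_PRIORITIES (default 0, project 20, spider 30, cmdline 40, where "cmdline" means a `-s USER_AGENT=...` option on `scrapy crawl`); the `effective_user_agent()` function is hypothetical and only illustrates the precedence rule.

```python
# Simplified model of Scrapy's settings precedence: higher number wins
PRIORITY = {"default": 0, "project": 20, "spider": 30, "cmdline": 40}


def effective_user_agent(layers):
    """layers maps a level name to the USER_AGENT set at that level, or None."""
    winner, best = None, -1
    for level, value in layers.items():
        if value is not None and PRIORITY[level] > best:
            best, winner = PRIORITY[level], value
    return winner


print(effective_user_agent({
    "default": "Scrapy/2.9.0 (+https://scrapy.org)",  # Scrapy's built-in default
    "project": "UA from settings.py",
    "spider": "UA from custom_settings",
}))  # UA from custom_settings
```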

Step 3: Build a User Agent Rotator in Scrapy

Rotating UAs is crucial to avoid being identified as the single source of many requests and therefore being blocked. So, let's look into how to do that.

We'll use Scrapy's downloader middleware feature to create a User Agent middleware that picks a random UA from a pool for each request.

Start by grabbing a couple of extra UAs from our list of User Agents for web scraping that you'll rotate through with Scrapy. Then, create a new USER_AGENTS list in your settings.py file and include your chosen UAs:

```python
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
]
```

Next, create a new rotator middleware in your middlewares.py file. It implements the process_request() method, which Scrapy calls for every request passing through the downloader middlewares, so it can overwrite the User-Agent header of each request:

```python
# ...
import random

# ...

class UARotatorMiddleware:
    def __init__(self, user_agents):
        self.user_agents = user_agents

    @classmethod
    def from_crawler(cls, crawler):
        # Read the USER_AGENTS list defined in settings.py
        user_agents = crawler.settings.get('USER_AGENTS', [])
        return cls(user_agents)

    def process_request(self, request, spider):
        # Pick a random UA for every outgoing request (skip if the list is empty)
        if self.user_agents:
            request.headers['User-Agent'] = random.choice(self.user_agents)
```
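If you'd prefer every UA in the pool to be used evenly rather than at random, a round-robin variant is one option. The sketch below is a hypothetical `UACycleMiddleware` built on `itertools.cycle`; since Scrapy processes requests in a single thread, sharing one iterator across requests is fine. The offline check at the end uses `SimpleNamespace` stand-ins instead of real Scrapy crawler and request objects.

```python
import itertools
from types import SimpleNamespace


class UACycleMiddleware:
    """Hypothetical variant of UARotatorMiddleware: round-robin instead of random."""

    def __init__(self, user_agents):
        # itertools.cycle walks the pool in order and starts over at the end
        self._pool = itertools.cycle(user_agents) if user_agents else None

    @classmethod
    def from_crawler(cls, crawler):
        # Same USER_AGENTS setting as the random rotator
        return cls(crawler.settings.get("USER_AGENTS", []))

    def process_request(self, request, spider):
        if self._pool is not None:
            request.headers["User-Agent"] = next(self._pool)
        # Returning None tells Scrapy to keep processing the request normally
        return None


# Offline check with stand-in objects (not real Scrapy classes)
crawler = SimpleNamespace(settings={"USER_AGENTS": ["UA-1", "UA-2", "UA-3"]})
mw = UACycleMiddleware.from_crawler(crawler)
seen = []
for _ in range(4):
    req = SimpleNamespace(headers={})
    mw.process_request(req, spider=None)
    seen.append(req.headers["User-Agent"])
print(seen)  # ['UA-1', 'UA-2', 'UA-3', 'UA-1']
```

To try it, you would register `'scraper.middlewares.UACycleMiddleware'` in DOWNLOADER_MIDDLEWARES in place of the random rotator.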

Now, register it as a downloader middleware in your settings.py file (the path starts with your project name, scraper in this tutorial):

```python
# ...

DOWNLOADER_MIDDLEWARES = {
    # ... Other middlewares
    'scraper.middlewares.UARotatorMiddleware': 400,
}
```

Finally, update your headers spider to look like this:

```python
import scrapy


class HeadersSpider(scrapy.Spider):
    name = "headers"
    allowed_domains = ["httpbin.io"]
    start_urls = ["https://httpbin.io/user-agent"]

    def parse(self, response):
        self.logger.info(f'RESPONSE: {response.text}')
```

To test that it works, run scrapy crawl headers about three times, and you should see responses similar to these in your logs:

```
2023-07-16 17:01:45 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://httpbin.io/user-agent> (referer: None)
2023-07-16 17:01:45 [headers] INFO: RESPONSE: {
  "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"
}

====

2023-07-16 17:04:02 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://httpbin.io/user-agent> (referer: None)
2023-07-16 17:04:02 [headers] INFO: RESPONSE: {
  "user-agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"
}

====

2023-07-16 17:04:29 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://httpbin.io/user-agent> (referer: None)
2023-07-16 17:04:29 [headers] INFO: RESPONSE: {
  "user-agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"
}
```

Great! Each request got a UA picked at random from your list, which confirms that your Scrapy User Agent rotator works with the strings you set manually. Because the pick is random, the same UA can show up more than once, as in the last two runs. You're better equipped as a web scraper now.
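If back-to-back repeats bother you, one option is to exclude the previous pick before choosing. The `NoRepeatPicker` class below is a hypothetical helper (you'd call `picker.pick()` inside process_request()); note that each `scrapy crawl` run creates a fresh middleware, so it only prevents consecutive repeats within a single crawl, not across separate runs like the ones above. It assumes a non-empty pool.

```python
import random


class NoRepeatPicker:
    """Hypothetical helper: random UA choice that never repeats the previous pick."""

    def __init__(self, user_agents, rng=None):
        # Assumes a non-empty pool; random.choice raises IndexError otherwise
        self.user_agents = list(user_agents)
        self.rng = rng or random.Random()
        self.last = None

    def pick(self):
        # Drop the last UA from the candidates; fall back to the full pool
        # when it holds only one UA
        candidates = [ua for ua in self.user_agents if ua != self.last] or self.user_agents
        self.last = self.rng.choice(candidates)
        return self.last


picker = NoRepeatPicker(["UA-1", "UA-2", "UA-3"], rng=random.Random(42))
picks = [picker.pick() for _ in range(10)]
print(picks)
```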

Easy Way to Get a Random User Agent in Scrapy At Scale

Incorrectly constructed or outdated UA strings are a real problem. Fortunately, you can use the community-maintained scrapy-user-agents middleware, which rotates User Agents in Scrapy from a pool of 2,200 strings. That's just fine for starters.

Quickly install it using pip install scrapy-user-agents and register it as a downloader middleware in settings.py like this:

```python
# ...

DOWNLOADER_MIDDLEWARES = {
    # ... Other middlewares
    # 'scraper.middlewares.UARotatorMiddleware': 400,
    # Disable Scrapy's built-in UA middleware so only the random one sets the header
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
    'scrapy_user_agents.middlewares.RandomUserAgentMiddleware': 400,
}
```

Rerun the command scrapy crawl headers several times, and you'll get a different UA each time:

```
2023-07-16 17:10:25 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://httpbin.io/user-agent> (referer: None)
2023-07-16 17:10:25 [headers] INFO: RESPONSE: {
  "user-agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36"
}

====

2023-07-16 17:11:33 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://httpbin.io/user-agent> (referer: None)
2023-07-16 17:11:34 [headers] INFO: RESPONSE: {
  "user-agent": "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36"
}

====

2023-07-16 17:12:18 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://httpbin.io/user-agent> (referer: None)
2023-07-16 17:12:18 [headers] INFO: RESPONSE: {
  "user-agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.118 Safari/537.36"
}
```

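The UA changed on every run because the pool provided by scrapy-user-agents is much larger than your hand-picked list. Notice, however, that these strings point to old Chrome releases (41 to 66 in the runs above).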
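If you'd rather keep your own UARotatorMiddleware and control how current the strings are, one approach is to load USER_AGENTS from a text file that you refresh yourself. The `load_user_agents()` helper and the `user_agents.txt` file name below are hypothetical; the demo writes a throwaway file to a temporary directory.

```python
import tempfile
from pathlib import Path


def load_user_agents(path):
    """Return one UA per non-empty line, skipping lines that start with '#'."""
    user_agents = []
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            user_agents.append(line)
    return user_agents


# Hypothetical usage in settings.py, with user_agents.txt next to it:
# USER_AGENTS = load_user_agents(Path(__file__).with_name("user_agents.txt"))

with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "user_agents.txt"
    sample.write_text("# Chrome on Windows\nUA-1\n\nUA-2\n", encoding="utf-8")
    loaded = load_user_agents(sample)
print(loaded)  # ['UA-1', 'UA-2']
```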

Still, this isn't enough for scraping at scale. Besides header-based detection, web scrapers face other major anti-scraping challenges, such as IP bans, CAPTCHAs, browser fingerprinting, and even machine-learning-based techniques.

As a first step, you might want to learn about using proxies with Scrapy.
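Proxy rotation can follow the same middleware pattern as UA rotation. Scrapy's built-in HttpProxyMiddleware (default priority 750) routes a request through whatever proxy URL is stored in `request.meta["proxy"]`, so a middleware registered with a lower number (for example 350) can set that key first. In the sketch below, `RandomProxyMiddleware`, the `PROXIES` setting, and the proxy hosts are all hypothetical placeholders, and the check uses `SimpleNamespace` stand-ins rather than real Scrapy objects.

```python
import random
from types import SimpleNamespace


class RandomProxyMiddleware:
    """Hypothetical downloader middleware that assigns a random proxy per request."""

    def __init__(self, proxies):
        self.proxies = proxies

    @classmethod
    def from_crawler(cls, crawler):
        # PROXIES is a made-up setting name; you'd define it in settings.py
        return cls(crawler.settings.get("PROXIES", []))

    def process_request(self, request, spider):
        if self.proxies:
            # HttpProxyMiddleware reads the "proxy" meta key later in the chain
            request.meta["proxy"] = random.choice(self.proxies)


# Offline check with stand-in objects and placeholder proxy URLs
proxies = ["http://proxy1.example.com:8000", "http://proxy2.example.com:8000"]
mw = RandomProxyMiddleware.from_crawler(SimpleNamespace(settings={"PROXIES": proxies}))
req = SimpleNamespace(meta={})
mw.process_request(req, spider=None)
print(req.meta["proxy"])
```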

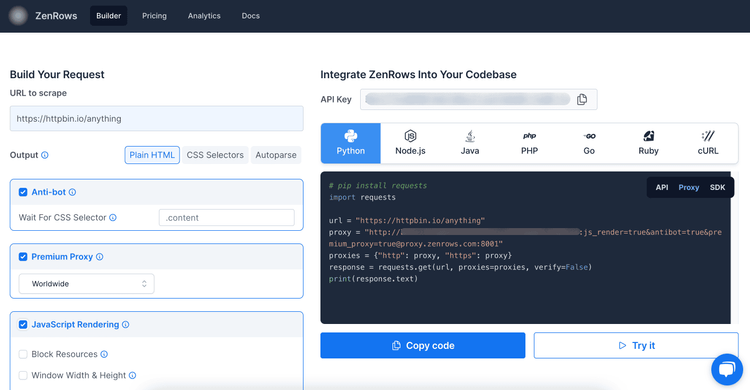

The good news is there's an easy way to get around those challenges: using a web scraping API like ZenRows, an all-in-one tool that deals with all anti-bot measures for you. It automatically rotates your User Agent, provides you with premium proxies, and gives you everything you're going to need.

You can integrate it with Scrapy or use it as an alternative. Let's quickly see ZenRows in action with Scrapy!

Sign up for an account and copy your free API key from the Request Builder. You'll send your scraping requests through the ZenRows API.

In your headers spider file (headers.py), create a get_zenrows_api_url() function that takes your target URL and ZenRows API key and enables the recommended parameters (js_render, antibot, and premium_proxy):

```python
import scrapy
from urllib.parse import urlencode


# Creates a ZenRows API URL for a given target URL using the provided API key
def get_zenrows_api_url(url, api_key):
    payload = {
        'url': url,
        'js_render': 'true',
        'antibot': 'true',
        'premium_proxy': 'true',
    }
    # Construct the API URL by appending the encoded payload to the base URL with the API key
    api_url = f'https://api.zenrows.com/v1/?apikey={api_key}&{urlencode(payload)}'
    return api_url
```
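Here, urlencode() percent-encodes the target URL (`:` becomes `%3A`, `/` becomes `%2F`), so its own query string can't be confused with the API's parameters. The sketch below is a hypothetical variant, `build_zenrows_api_url()`, that also passes the API key through urlencode() and then decodes the result with parse_qs() to confirm the target URL survives the round trip. It uses the same parameters as the function above and nothing else.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_zenrows_api_url(url, api_key):
    """Variant of get_zenrows_api_url() that URL-encodes every parameter, including apikey."""
    params = {
        "apikey": api_key,
        "url": url,
        "js_render": "true",
        "antibot": "true",
        "premium_proxy": "true",
    }
    return "https://api.zenrows.com/v1/?" + urlencode(params)


api_url = build_zenrows_api_url("https://httpbin.io/user-agent", "YOUR_KEY")
print(api_url)
# https://api.zenrows.com/v1/?apikey=YOUR_KEY&url=https%3A%2F%2Fhttpbin.io%2Fuser-agent&js_render=true&antibot=true&premium_proxy=true

# Decoding the query string gives back the original target URL
print(parse_qs(urlsplit(api_url).query)["url"])  # ['https://httpbin.io/user-agent']
```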

Now, add a start_requests() method to the headers spider so that it makes its requests via ZenRows instead. Replace <YOUR_ZENROWS_API_KEY> with your API key; the target URL (https://httpbin.io/user-agent) is already set:

```python
# ...

class HeadersSpider(scrapy.Spider):
    name = "headers"

    def start_requests(self):
        url = 'https://httpbin.io/user-agent'
        api_key = '<YOUR_ZENROWS_API_KEY>'
        api_url = get_zenrows_api_url(url, api_key)
        yield scrapy.Request(url=api_url, callback=self.parse)

    def parse(self, response):
        self.logger.info(f'RESPONSE: {response.text}')
```

Here's the complete code of the headers spider:

```python
import scrapy
from urllib.parse import urlencode


# Creates a ZenRows API URL for a given target URL using the provided API key
def get_zenrows_api_url(url, api_key):
    payload = {
        'url': url,
        'js_render': 'true',
        'antibot': 'true',
        'premium_proxy': 'true',
    }
    # Construct the API URL by appending the encoded payload to the base URL with the API key
    api_url = f'https://api.zenrows.com/v1/?apikey={api_key}&{urlencode(payload)}'
    return api_url


class HeadersSpider(scrapy.Spider):
    name = "headers"

    def start_requests(self):
        url = 'https://httpbin.io/user-agent'
        api_key = '<YOUR_ZENROWS_API_KEY>'
        api_url = get_zenrows_api_url(url, api_key)
        yield scrapy.Request(url=api_url, callback=self.parse)

    def parse(self, response):
        self.logger.info(f'RESPONSE: {response.text}')
```

Run scrapy crawl headers several times to see your UA change from request to request.

ZenRows bypasses the header settings from your Scrapy project (whether set globally or locally) and sets more robust headers for you, maximizing your scraping success. Awesome!

You can also try a heavily protected web page as the target URL, such as https://www.g2.com/products/jira/reviews, and you should be able to access it.

Happy scraping!

Conclusion

Using a custom, randomized User Agent in Scrapy is a must for real-world web scraping. However, you need to follow best practices, and you may need a dedicated library to do it at scale.

Additionally, there are many other anti-bot challenges you need to overcome, such as CAPTCHAs, honeypot traps, and IP rate limiting.

ZenRows is a popular complement to Scrapy that will help you get the job done and save you tons of time and effort against anti-bot measures. Try it free now!
