Using the wrong user agent when scraping, or ignoring the best practices around it, is a recipe for getting blocked. To help you avoid that, here's a list of the best user agents for scraping and some tips on how to use them.

Ready? Let's go!

What Is a User Agent?

A User Agent (UA) is a string that the user's web browser sends to a server. It travels in the User-Agent HTTP request header and identifies the browser type and version as well as the operating system. On the client side, it can be read with JavaScript through the navigator.userAgent property. The remote web server uses this information to identify the client and render the content in a way that's compatible with the user's specifications.

While the exact structure and details vary, most web browsers follow the same general format:

Mozilla/5.0 (<system-information>) <platform> (<platform-details>) <extensions>

For example, a user agent string for Chrome (Chromium) might be Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36. Let's break it down: it contains the name of the browser (Chrome), the version number (109.0.0.0) and the operating system the browser is running on (Windows NT 10.0, 64-bit processor).
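To make that breakdown concrete, here's a minimal sketch that pulls the same pieces out of the string with regular expressions. The helper name parse_user_agent and the two patterns are our own illustration, not a standard API, and they only cover the common browser format shown above:

```python
import re

# Hypothetical helper for illustration: extracts the "product/version" tokens
# and the first parenthesised group (the system information) from a UA string.
UA_TOKEN = re.compile(r"([A-Za-z]+)/([\d.]+)")
UA_SYSTEM = re.compile(r"\(([^)]*)\)")


def parse_user_agent(ua: str) -> dict:
    system = UA_SYSTEM.search(ua)
    return {
        "system": system.group(1) if system else None,
        "products": dict(UA_TOKEN.findall(ua)),
    }


ua = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
)
info = parse_user_agent(ua)
print(info["system"])              # Windows NT 10.0; Win64; x64
print(info["products"]["Chrome"])  # 109.0.0.0
```

Real-world UA strings are messier than this, so treat the sketch as a way to read the format rather than as a robust parser.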

Why Is a User Agent Important for Web Scraping?

Since UA strings help web servers identify the type of browser (or bot) making a request, sending a browser's UA string can help disguise your spider as a web browser.

Beware that a malformed user agent can get your data extraction script blocked.
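To see why this matters, it helps to look at what an HTTP client sends when you don't set the header yourself. The sketch below uses Python's standard-library urllib, whose default opener announces itself as Python-urllib followed by a version number. The requests library behaves similarly with a python-requests/<version> string. BROWSER_UA is simply one of the example strings from this article:

```python
import urllib.request

# An unconfigured opener carries a default User-agent header
# that clearly identifies the client as a script.
opener = urllib.request.build_opener()
default_ua = dict(opener.addheaders)["User-agent"]
print(default_ua)  # e.g. Python-urllib/3.12 (the exact version varies)

# Example browser string taken from this article.
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36"
)

# Replace the default so requests made through this opener present a browser UA.
opener.addheaders = [("User-Agent", BROWSER_UA)]
```

A default like that is trivial for a server to filter, which is why setting a browser-like UA is usually the first step.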

What Are the Best User Agents for Scraping?

We compiled a list of the best ones to use while scraping. They can help you emulate a browser and avoid getting blocked:

- Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36

- Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:124.0) Gecko/20100101 Firefox/124.0

- Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36 Edg/123.0.2420.81

- Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36 OPR/109.0.0.0

- Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36

- Mozilla/5.0 (Macintosh; Intel Mac OS X 14.4; rv:124.0) Gecko/20100101 Firefox/124.0

- Mozilla/5.0 (Macintosh; Intel Mac OS X 14_4_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.4.1 Safari/605.1.15

- Mozilla/5.0 (Macintosh; Intel Mac OS X 14_4_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36 OPR/109.0.0.0

- Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36

- Mozilla/5.0 (X11; Linux i686; rv:124.0) Gecko/20100101 Firefox/124.0

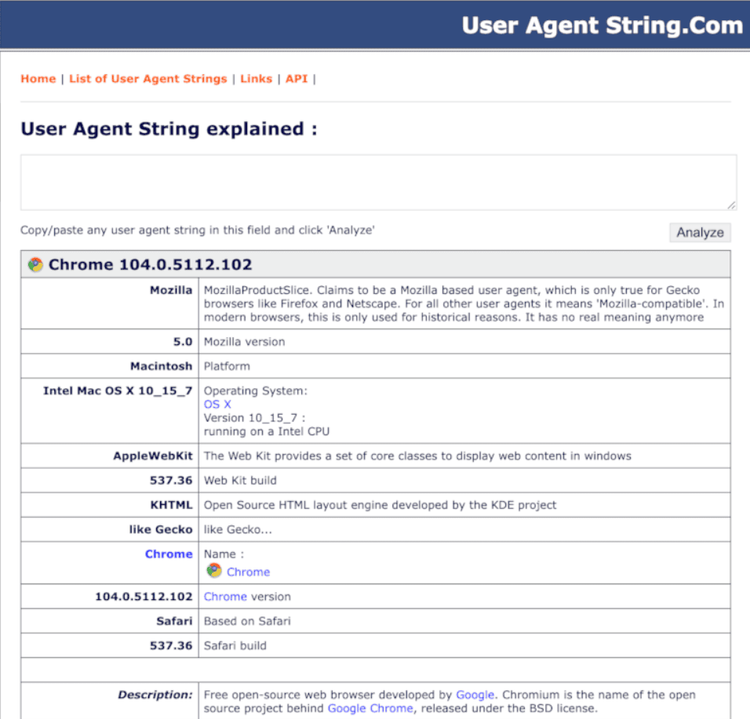

How to Check User Agents and Understand Them

The easiest way to do so is to visit UserAgentString.com. It automatically displays the user agent of your web browsing environment. You can also get detailed information on other user agents: just paste any string into the input field and click "Analyze."

How to Set a New User Agent Header in Python?

Let's run a quick example of changing a scraper user agent using Python requests. We'll use a string associated with Chrome:

Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36

Use the following code snippet to set the User-Agent header while sending the request:

import requests

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"}

# You can test if your web scraper is sending the correct header by sending a request to HTTPBin

r = requests.get("https://httpbin.org/headers", headers=headers)

print(r.text)

Its output will look like this:

{

  "headers": {

    "Accept": "*/*",

    "Accept-Encoding": "gzip, deflate",

    "Host": "httpbin.org",

    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",

    "X-Amzn-Trace-Id": "Root=1-63c42540-1a63b1f8420b952f1f0219f1"

  }

}

And that's it! You now have a new user agent for scraping.

How to Avoid Getting Your UA Banned

Although employing a user agent can reduce your chances of getting blocked, sending too many requests from the same UA can trigger the anti-bot system, eventually leading to the same result. The best way to avoid this is to use browser UAs, rotate through a list of user agents for scraping and keep them up to date.

1. Rotate User Agents

Rotating scraping user agents means changing the UA string between web requests. That way, your traffic looks like it comes from several different browsers, and no single UA accumulates a suspicious number of requests. This can reduce the chances of your scraper, and the IP address it runs from, getting blocked and blacklisted.

How to Rotate User Agents

Start by obtaining a list of user agents for scraping. You can get some real ones from WhatIsMyBrowser.

We'll use these three strings and put them in a Python list:

user_agent_list = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

]

Use a for loop and random.choice() to select a random one from the list:

for i in range(1, 4):

    user_agent = random.choice(user_agent_list)

Inside the loop, set the UA header and then send the request:

    headers = {'User-Agent': user_agent}

    response = requests.get(url, headers=headers)

Here's what the complete code should look like:

import requests

import random

user_agent_list = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

]

url = 'https://httpbin.org/headers'

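for i in range(1, 4):

    # Pick a random user agent for this request
    user_agent = random.choice(user_agent_list)

    headers = {'User-Agent': user_agent}

    response = requests.get(url, headers=headers)

    # HTTPBin echoes back the headers it received
    received_ua = response.json()['headers']['User-Agent']

    print("Request #%d\nUser-Agent Sent: %s\nUser-Agent Received: %s\n" % (i, user_agent, received_ua))

And this is your result after running the script. Because the strings are picked at random, your output may show them in a different order or repeat one of them: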

Request #1

User-Agent Sent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36

User-Agent Received: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36

Request #2

User-Agent Sent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36

User-Agent Received: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36

Request #3

User-Agent Sent: Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15

User-Agent Received: Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15
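Note that random.choice() can return the same string several times in a row. If you'd rather use every UA once before any of them repeats, one possible variation is to shuffle the list and walk through it. The generator name ua_rotation below is our own, and this is a sketch of the idea rather than the only way to do it:

```python
import random

user_agent_list = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
]


def ua_rotation(user_agents):
    """Yield user agents forever, reshuffling after each full pass."""
    pool = list(user_agents)
    while True:
        random.shuffle(pool)
        yield from pool


rotation = ua_rotation(user_agent_list)

# One full pass uses each string exactly once, in random order.
first_pass = [next(rotation) for _ in range(len(user_agent_list))]
for user_agent in first_pass:
    headers = {'User-Agent': user_agent}
    print(headers['User-Agent'])
```

A string can still appear twice in a row at the boundary between two passes, but within a pass each one is used exactly once.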

2. Keep Random Intervals Between Requests

Maintain random intervals between requests to prevent your spider from getting detected and blocked.
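Here's a minimal sketch of that idea using time.sleep() and random.uniform(). The function names, the fetch callback and the 1 to 5 second range are assumptions chosen for illustration, so tune the bounds to the site you're scraping:

```python
import random
import time


def random_delay(min_seconds=1.0, max_seconds=5.0):
    """Return a random pause length between the two bounds (in seconds)."""
    return random.uniform(min_seconds, max_seconds)


def fetch_with_pauses(urls, fetch, sleep=time.sleep, min_seconds=1.0, max_seconds=5.0):
    """Call fetch(url) for each URL, pausing a random interval between calls.

    `fetch` is any callable you supply; `sleep` is injectable so the
    pauses can be skipped or recorded when testing.
    """
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first request, a random one before the rest
            sleep(random_delay(min_seconds, max_seconds))
        results.append(fetch(url))
    return results
```

With requests, you could call it as fetch_with_pauses(urls, lambda u: requests.get(u, headers=headers)), where urls and headers are your own values.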

You might be interested in reading our guide on how to bypass rate limits while web scraping.

3. Use Up-to-date User Agents

To keep your scraping experience smooth and uninterrupted, ensure that your spider's user agents are regularly updated. Outdated ones can get your IP blocked!
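One way to catch stale strings is to check the browser version they claim. The sketch below flags Chrome-based UAs whose major version falls under a threshold. MIN_CHROME_MAJOR is a made-up value for illustration, so set it according to the Chrome releases that are current when you run your scraper:

```python
import re

# Hypothetical threshold: adjust it to match current Chrome releases.
MIN_CHROME_MAJOR = 120


def chrome_major_version(ua):
    """Return the Chrome major version claimed by a UA string, or None."""
    match = re.search(r"Chrome/(\d+)\.", ua)
    return int(match.group(1)) if match else None


def is_outdated(ua, min_major=MIN_CHROME_MAJOR):
    """True if the UA claims a Chrome version older than min_major."""
    major = chrome_major_version(ua)
    return major is not None and major < min_major


old_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
new_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36"

print(is_outdated(old_ua))  # True with the threshold above
print(is_outdated(new_ua))  # False with the threshold above
```

Edge and Opera strings also carry a Chrome/ token, so this check covers them too. Firefox and Safari strings return None from chrome_major_version() and would need their own pattern.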

Conclusion

A user agent in web scraping lets you mimic the behavior of a web browser. This, in turn, helps you access a website as a human user and avoid getting blocked.

Today you learned some of the best UA strings for scraping and tips for using them. Here's a recap of how to use them to help your scraping project:

- Rotate your user agent to avoid bot detection.

- Keep random intervals between requests.

- Keep your user agents updated.

Modern websites rely on different anti-scraping techniques to detect web scraping bots. Sure, using the best scraping user agent lowers the risk of getting blocked, but it may not always work. To avoid uncertainties and headaches, many people use a web scraping API, such as ZenRows.

ZenRows is capable of bypassing anti-bots and CAPTCHAs while scraping. It has rotating premium proxies and up to a 99.9% uptime guarantee. Oh, and you can get started for free. Sign up for free today!
