Web scraping is essential for collecting data on the internet. The process can get pretty complex, especially given all the challenges you have to overcome and the hoops you have to jump through. One of those is the rate limit, which can easily get you blocked if you're not careful.

So, how does it work, and how can your spider avoid it? In this article, we'll cover what a rate limit is and how to bypass it while scraping.

Let's jump straight in:

What Is a Rate Limit in Web Scraping?

The rate limit is the hard cap on the number of requests you can send in a particular time window. In the case of APIs, it's the maximum number of calls you can make. In other words, once you've used up your quota, any further requests are rejected until the window resets.

If you go beyond, you can get the following error responses:

- Slow down, too Many Requests from this IP Address.

- IP Address Has Reached Rate Limit.

WAF service providers, e.g., Cloudflare, Akamai, and DataDome, use rate limiting to strengthen their security. Meanwhile, API providers like Amazon use it to control the data flow and prevent overuse.

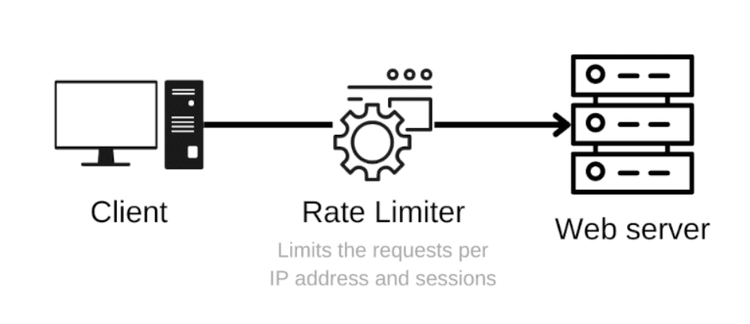

Let's see how it works:

Say a web server has a rate limit in place. When your scraper exceeds it, the server responds with 429: Too Many Requests.
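
To make the 429 case concrete, here is a minimal sketch of how a scraper could decide how long to pause after such a response. It assumes the server may send the standard `Retry-After` header, which not every site does. The function name `retry_delay` and the 5-second fallback are our own choices for illustration.

```python
import time
from email.utils import parsedate_to_datetime


def retry_delay(status_code, headers, default=5.0):
    """Return the number of seconds to wait before retrying (0.0 if no wait is needed)."""
    if status_code != 429:
        return 0.0
    value = headers.get("Retry-After")
    if value is None:
        # The server gave no hint, so fall back to an assumed default
        return default
    value = value.strip()
    if value.isdigit():
        # Retry-After expressed as a number of seconds
        return float(value)
    try:
        # Retry-After expressed as an HTTP date
        retry_at = parsedate_to_datetime(value)
        return max(0.0, retry_at.timestamp() - time.time())
    except (TypeError, ValueError):
        # Unparseable value: use the assumed default
        return default
```

With the requests module, you could call it as `retry_delay(r.status_code, r.headers)` and sleep for the returned number of seconds before trying again.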

There are various rate-limiting methods, but this article focuses on the ones you'll meet in practice. Here are the popular types:

- IP Rate Limiting: The most common way of rate limiting. It simply ties the number of requests to the user's IP address.

- API Rate Limits: API providers generally require you to use an API key. Then they can limit the number of calls you can make in a specific time frame.

- Geographic Rate Limit: It's also possible to set limits for a specific region or country.

- Rate Limiting based on User Session: WAF vendors such as Akamai set session cookies and then restrict your request rate.

- Rate Limiting based on HTTP requests: Cloudflare supports rate limiting for specific HTTP headers and cookies. It's also possible to implement a restriction by TLS fingerprints.
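
To see why IP-based limiting is so easy to trigger, here is a toy sketch of what a server might do with a fixed time window. It's a simplified illustration under our own assumptions, not how Cloudflare, Akamai, or any other vendor implements it. Real systems often use sliding windows or token buckets. The class name `FixedWindowLimiter` is hypothetical.

```python
import time


class FixedWindowLimiter:
    """Toy IP-based limiter: at most `limit` requests per `window` seconds per IP."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the behavior can be tested without sleeping
        self.counters = {}  # ip -> (window_start, request_count)

    def allow(self, ip):
        """Return the status a server might send: 200 if allowed, 429 if over the limit."""
        now = self.clock()
        start, count = self.counters.get(ip, (now, 0))
        if now - start >= self.window:
            # The previous window has expired, so start counting again
            start, count = now, 0
        count += 1
        self.counters[ip] = (start, count)
        return 200 if count <= self.limit else 429
```

Notice that the counter is keyed only by the IP address. A request from a different address starts with a fresh count, which is exactly why proxies help against this type of limit.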

Why Are APIs Rate Limited?

Many APIs are rate limited to prevent overloading the web server. Rate limiting also provides further protection from malicious bots and DDoS attacks, which can block legitimate users from the API or shut down the service completely.

Why Do Websites Use Rate Limiting?

The primary reason is, once again, to avoid server overloading and mitigate potential attacks. However, even if your intent is not malicious, you can still hit a limit while scraping, because the server applies it to all traffic in order to control the data flow.

Bypassing the Rate Limits While Web Scraping

What can you do to avoid rate limits in web scraping? Here are some methods and tricks that can help:

- Using Proxy Servers

- Using Specific Request Headers

- Changing HTTP Request Headers

You now know that the most widely used limiting approach is the IP-based one. Thus, we suggest using proxy servers. Let's first explore all the options:

Using Specific Headers in Request

There are several headers we can use to spoof the client IP address that the backend sees. You can also try them when a CDN delivers the content:

- X-Forwarded-Host: This one identifies the original host requested by the client in the Host HTTP request header. It's possible to get around rate limiting using an extensive list of hostnames. You can pass a URL in this header.

- X-Forwarded-For: It identifies the originating IP address of a client connecting to a web server through a proxy. Its value is a comma-separated list that starts with the client address, followed by the addresses of any proxies the request passed through. You can pass a single address or brute-force with a list of IPs.

The headers below specify the IP address of the client. However, they might not be implemented in every service. You can change the address and try your luck!

- X-Client-IP

- X-Remote-IP

- X-Remote-Addr
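
As a rough sketch, you could build these headers with a small helper like the one below. The names `SPOOF_HEADERS`, `random_ipv4`, and `spoofed_ip_headers` are ours, and the random addresses are purely illustrative. Keep in mind this only has an effect when the backend trusts client-supplied headers, which many services don't.

```python
import random

# IP-related headers from the lists above
SPOOF_HEADERS = ("X-Forwarded-For", "X-Client-IP", "X-Remote-IP", "X-Remote-Addr")


def random_ipv4(rng=random):
    """Build a random dotted-quad address (illustrative only, it may not be routable)."""
    return ".".join(str(rng.randint(1, 254)) for _ in range(4))


def spoofed_ip_headers(ip=None):
    """Return a header dict that sets every IP-related header to the same address."""
    ip = ip or random_ipv4()
    return {name: ip for name in SPOOF_HEADERS}
```

The resulting dict can be passed to the requests module, e.g. `requests.get(url, headers=spoofed_ip_headers())`.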

Changing HTTP Request Headers

Sending requests with randomized HTTP headers can be used to bypass rate limiting. Many websites and WAF vendors use headers to block malicious bots. You can randomize headers such as User-Agent to avoid the limitations. That's a widely used scraping practice. Learn more about it in our article, where we share the 10 best tips to avoid getting blocked!

Python fans can also check our guide on how to avoid blocking in Python like a pro!
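
Here's a minimal sketch of header randomization. The User-Agent and Accept-Language values are sample strings we picked for illustration, and `random_headers` is a hypothetical helper. In practice you'd want a larger, up-to-date pool.

```python
import random

# Sample values for illustration only; swap in your own up-to-date list
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]
ACCEPT_LANGUAGES = ["en-US,en;q=0.9", "en-GB,en;q=0.8"]


def random_headers(rng=random):
    """Pick a random User-Agent and Accept-Language for the next request."""
    return {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": rng.choice(ACCEPT_LANGUAGES),
    }
```

Call `random_headers()` before each request and pass the result as the `headers` argument of your HTTP client.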

Ultimate Solution: Proxy Servers

When you use a proxy server, your request is routed to the proxy, which forwards it to the target, gets the response, and passes the data back to you. If one proxy gets rate limited, you can always switch to another one.

**This makes using proxies the ultimate solution to bypass IP rate limiting.** While there are public and free-to-use servers, those are generally blocked by the WAF vendors and websites.

Let's see what the two types of proxy servers are:

- Residential Proxies: IP addresses are assigned by an ISP. They're much more reliable than datacenter ones since they're attached to an actual address. The primary drawback is the price: high-quality servers cost more.

- Datacenter Proxies: Datacenter proxies are commercially assigned. They usually don't have a unique address and are generally flagged by websites and WAF services. This means they are more affordable but less reliable than the former option.

You can also use a smart rotating proxy, which will automatically use a random residential proxy server every time you send a request.

Proxy Rotation in Python

What happens if your proxy's IP gets blocked?

You can build a list of proxy servers and rotate those while sending requests! Since this will let you use multiple addresses, you won't get blocked because of IP rate-limiting algorithms.

However, rotating the proxy on every request doesn't help when the site relies on an authenticated session. The server can track the session itself, so switching IP addresses in the middle of it isn't a proper solution.
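
For requests that share a login session, one option is to keep the same proxy for the whole session and only switch once that proxy is blocked. The sketch below shows the idea. `StickyProxyPool` and its method names are hypothetical, and how you detect a block is left to your scraper.

```python
import random


class StickyProxyPool:
    """Keeps one proxy per session key and rotates only when that proxy is marked blocked."""

    def __init__(self, proxies, rng=random):
        self.available = list(proxies)
        self.assigned = {}  # session key -> proxy currently in use
        self.rng = rng

    def proxy_for(self, session_key):
        """Return the proxy tied to this session, assigning a random one on first use."""
        if session_key not in self.assigned:
            if not self.available:
                raise RuntimeError("no proxies left")
            self.assigned[session_key] = self.rng.choice(self.available)
        return self.assigned[session_key]

    def mark_blocked(self, session_key):
        """Drop the session's proxy from the pool so the next call picks a different one."""
        proxy = self.assigned.pop(session_key, None)
        if proxy in self.available:
            self.available.remove(proxy)
```

Each proxy is an `ip:port` string, so the value returned by `proxy_for()` can be used wherever a single proxy is expected.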

Before going further, we have to get a proxy list!

Keep in mind that most public servers are short-lived and unreliable. This means that some of the examples below may not work properly when you get to them.

138.68.60.8:3128

54.66.104.168:80

80.48.119.28:8080

157.100.26.69:80

198.59.191.234:8080

198.49.68.80:80

169.57.1.85:8123

219.78.228.211:80

88.215.9.208:80

130.41.55.190:8080

88.210.37.28:80

128.199.202.122:8080

2.179.154.157:808

165.154.226.12:80

200.103.102.18:80

First, save the proxy servers in a text file (proxies.txt). Second, read the ip:port pairs from the file and check whether they are working. Then, code simple synchronous functions to send requests from random proxy servers.

**If your application works at scale, you'll want to use an asynchronous proxy rotator.** See our guide on building a fully-fledged proxy rotator in Python.

In order to check the proxy servers, we will send requests to httpbin. If they aren't working, we will receive an error in the response.

Now, let's dive in!

To start, we need to read the proxies from the text file:

with open("proxies.txt") as f:  # close the file automatically and skip blank lines
    proxies = [line.strip() for line in f if line.strip()]

Then, we send requests to the URL using the requests module. Unfortunately, public proxies don't generally support SSL. So we have to use HTTP rather than HTTPS.

The get() function uses the given proxy server to send the GET request to the target URL. When there's an error, the returned status code will be 400 or higher. The status codes from 400 to 499 indicate client-side errors, while 500 and above point to server-side ones. If that's the case, the function returns None.

Finally, it returns the actual response if there isn't an error at all.

import requests


def get(url, proxy):
    """
    Sends a GET request to the given url using the given proxy server.
    The proxy server is used without SSL, so the URL should be HTTP.

    Args:
        url - string: HTTP URL to send the GET request with proxy
        proxy - string: proxy server in the form of {ip}:{port} to use while sending the request

    Returns:
        Response of the server if the request is sent successfully. Returns `None` otherwise.
    """
    try:
        # A timeout keeps dead proxies from hanging the script
        r = requests.get(url, proxies={"http": f"http://{proxy}"}, timeout=10)
        if r.status_code < 400:  # client-side and server-side error codes are 400 and above
            return r
        else:
            print(r.status_code)
    except Exception as e:
        print(e)
    return None

Now, it's possible to check our proxies. The check_proxy() function returns True or False by sending a GET request to httpbin. We can then filter out the available proxy servers from our list.

def check_proxy(proxy):
    """
    Checks the proxy server by sending a GET request to httpbin.
    Returns False if there is an error from the `get` function
    """
    return get("http://httpbin.org/ip", proxy) is not None


available_proxies = list(filter(check_proxy, proxies))

Afterward, we can use the random module to select a random server from those available. Then, we send a request with the help of the get function.
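
A minimal sketch of that last step could look like the following. The helper name `get_with_random_proxy` and the `max_attempts` parameter are our own additions. The `fetch` argument is expected to be a function like the get() defined above, which returns None on failure.

```python
import random


def get_with_random_proxy(url, proxies, fetch, max_attempts=3, rng=random):
    """Try up to `max_attempts` randomly chosen proxies and return the first response, or None."""
    candidates = list(proxies)
    for _ in range(min(max_attempts, len(candidates))):
        proxy = rng.choice(candidates)
        candidates.remove(proxy)  # do not retry a proxy that just failed
        response = fetch(url, proxy)
        if response is not None:
            return response
    return None
```

With the functions above, you would call it as `get_with_random_proxy("http://httpbin.org/ip", available_proxies, get)`. Passing the fetch function as an argument also makes it easy to swap in a different HTTP client later.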

Conclusion

Congratulations, you now have a snippet to send requests from random IP addresses!

Here's a quick recap of everything you learned today:

- While there are different approaches to rate limiting, the most popular one is IP-based.

- Hard limits can sometimes be bypassed by sending different HTTP headers.

- The most convenient solution is usually based on proxy rotation.

- Public and free proxy servers are not reliable; the better option is to use residential ones.

However, this implementation wouldn't be enough for scaled applications. There's lots of room for improvement. To learn more, check out our Python guide for a scalable proxy rotation.

Implementing a full-fledged proxy rotator suitable for scraping is difficult. To save you some pain, you can use ZenRows's API. It includes smart rotating proxies that can be used automatically by specifying a single URL. Sign up and get your free API key today!
