Scrapy Selenium is a library to extend Scrapy with JavaScript rendering capabilities. It uses Selenium as a headless browser to interact with dynamic web pages and simulate human behavior to reduce the chances of your spiders getting blocked.

This tutorial will show you the basics and then guide you to more advanced interactions through code examples.

Let's dive in!

Can I Use Selenium with Scrapy?

Yes, you can, thanks to the scrapy-selenium Python package. This Scrapy middleware allows spiders to load pages in Selenium, adding JavaScript rendering capabilities to the scraping tool.

The main problem with this solution is that the middleware hasn't been maintained since 2020. This means it still relies on Selenium 3 and no longer works with the most recent browser versions, so you might prefer Scrapy Splash or Scrapy Playwright.

However, we devised a workaround to make scrapy-selenium work with Selenium 4! Keep reading to find out.

Why Use Selenium with Scrapy?

Using Selenium with Scrapy provides two main advantages: it enables Scrapy to render JavaScript, and it lets you mimic human behavior through browser interaction to avoid getting detected as a bot.

The main pros of Selenium are its vast community, extensive documentation, and cross-language compatibility. This makes it more popular than other headless browsers for browser automation in both testing and scraping.

You might be interested in our guide on how to avoid bot detection with Selenium.

Getting Started

Follow this step-by-step section to set up Selenium in Scrapy.

Set Up a Scrapy Project

You need Python 3 to follow this tutorial. Check whether your system already has it installed with this command:

python --version

If yours does, you'll get a message like this:

Python 3.11.4

If you receive an error or version 2.x, your system doesn't meet the prerequisites. Download the Python 3.x installer, launch it, and follow the wizard.

You now have everything you need to set up a Scrapy project.

Use the commands below to create a scrapy-selenium-project folder with a Python virtual environment:

mkdir scrapy-selenium-project

cd scrapy-selenium-project

python3 -m venv env

Activate and enter the environment (on Windows, run env\Scripts\activate instead):

source env/bin/activate

Next, install Scrapy:

pip3 install scrapy

Be patient, as this may take a couple of minutes.

If you want to review the fundamentals, read our guide on Scrapy in Python.

Initialize a Scrapy project called selenium_scraper:

scrapy startproject selenium_scraper

The new selenium_scraper folder inside scrapy-selenium-project will now contain this file structure:

├── scrapy.cfg
└── selenium_scraper
    ├── __init__.py
    ├── items.py
    ├── middlewares.py
    ├── pipelines.py
    ├── settings.py
    └── spiders
        └── __init__.py

That's what a Scrapy project looks like. Great, you're ready to add Selenium!

Install Scrapy Selenium

Enter the below command in the virtual environment terminal to install scrapy-selenium.

pip3 install scrapy-selenium

You'll also need Selenium 4.11.2 or later to integrate it into Scrapy, so run the command below. If Selenium is already installed on your system, this will update it to the latest version. Otherwise, it'll install it from scratch.

pip3 install selenium --upgrade

selenium is going to be added as part of your project dependencies by default.

Implement the Workaround for Selenium 4

As mentioned above, Scrapy Selenium doesn't natively support Selenium 4. But there's a workaround!

Find the scrapy-selenium installation folder:

pip3 show scrapy-selenium

You should see something similar to this:

Name: scrapy-selenium

Version: 0.0.7

Summary: Scrapy with selenium

Home-page: https://github.com/clemfromspace/scrapy-selenium

Author: UNKNOWN

Author-email: UNKNOWN

License: UNKNOWN

Location: C:\Users\<YOUR_USER>\AppData\Local\Programs\Python\Python311\Lib\site-packages

Requires: scrapy, selenium

Required-by:
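If you prefer not to read the Location field by hand, Python can report where an installed package lives. The sketch below is an optional helper and not part of scrapy-selenium: locate_package_file() is a hypothetical name, and it assumes the package is importable as scrapy_selenium, which is the import name used later in this tutorial.

```python
from importlib.util import find_spec
from pathlib import Path


def locate_package_file(package_name, file_name):
    """Return the path of file_name inside an installed package, or None."""
    spec = find_spec(package_name)
    # find_spec() returns None when the package is not installed
    if spec is None or not spec.submodule_search_locations:
        return None
    for location in spec.submodule_search_locations:
        candidate = Path(location) / file_name
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    # prints the path of the file to edit, or None if the package is missing
    print(locate_package_file("scrapy_selenium", "middlewares.py"))
```

Run it with the virtual environment activated so that it inspects the same installation that pip3 show reports.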

Open the folder specified in the Location field and then enter the scrapy_selenium directory (the package's import name). Locate the middlewares.py file and replace its content with the code below. It's an updated version of the middlewares.py from a special fork of scrapy-selenium.

from importlib import import_module

from scrapy import signals
from scrapy.exceptions import NotConfigured
from scrapy.http import HtmlResponse
from selenium.webdriver.support.ui import WebDriverWait

from .http import SeleniumRequest


class SeleniumMiddleware:
    """Scrapy middleware handling the requests using selenium"""

    def __init__(self, driver_name, driver_executable_path,
                 browser_executable_path, command_executor, driver_arguments):
        """Initialize the selenium webdriver

        Parameters
        ----------
        driver_name: str
            The selenium ``WebDriver`` to use
        driver_executable_path: str
            The path of the executable binary of the driver
        driver_arguments: list
            A list of arguments to initialize the driver
        browser_executable_path: str
            The path of the executable binary of the browser
        command_executor: str
            Selenium remote server endpoint
        """

        webdriver_base_path = f'selenium.webdriver.{driver_name}'

        driver_klass_module = import_module(f'{webdriver_base_path}.webdriver')
        driver_klass = getattr(driver_klass_module, 'WebDriver')

        driver_options_module = import_module(f'{webdriver_base_path}.options')
        driver_options_klass = getattr(driver_options_module, 'Options')

        driver_options = driver_options_klass()

        if browser_executable_path:
            driver_options.binary_location = browser_executable_path
        for argument in driver_arguments:
            driver_options.add_argument(argument)

        driver_kwargs = {
            'executable_path': driver_executable_path,
            f'{driver_name}_options': driver_options
        }

        # locally installed driver
        if driver_executable_path is not None:
            driver_kwargs = {
                'executable_path': driver_executable_path,
                f'{driver_name}_options': driver_options
            }
            self.driver = driver_klass(**driver_kwargs)
        # remote driver
        elif command_executor is not None:
            from selenium import webdriver
            capabilities = driver_options.to_capabilities()
            self.driver = webdriver.Remote(command_executor=command_executor,
                                           desired_capabilities=capabilities)
        # webdriver-manager
        else:
            # selenium4+
            from selenium import webdriver
            from selenium.webdriver.chrome.service import Service
            if driver_name and driver_name.lower() == 'chrome':
                service = Service()
                self.driver = webdriver.Chrome(service=service, options=driver_options)

    @classmethod
    def from_crawler(cls, crawler):
        """Initialize the middleware with the crawler settings"""

        driver_name = crawler.settings.get('SELENIUM_DRIVER_NAME')
        driver_executable_path = crawler.settings.get('SELENIUM_DRIVER_EXECUTABLE_PATH')
        browser_executable_path = crawler.settings.get('SELENIUM_BROWSER_EXECUTABLE_PATH')
        command_executor = crawler.settings.get('SELENIUM_COMMAND_EXECUTOR')
        driver_arguments = crawler.settings.get('SELENIUM_DRIVER_ARGUMENTS')

        if driver_name is None:
            raise NotConfigured('SELENIUM_DRIVER_NAME must be set')

        # let's use webdriver-manager when nothing is specified instead | RN just for Chrome
        if (driver_name.lower() != 'chrome') and (driver_executable_path is None and command_executor is None):
            raise NotConfigured('Either SELENIUM_DRIVER_EXECUTABLE_PATH '
                                'or SELENIUM_COMMAND_EXECUTOR must be set')

        middleware = cls(
            driver_name=driver_name,
            driver_executable_path=driver_executable_path,
            browser_executable_path=browser_executable_path,
            command_executor=command_executor,
            driver_arguments=driver_arguments
        )

        crawler.signals.connect(middleware.spider_closed, signals.spider_closed)

        return middleware

    def process_request(self, request, spider):
        """Process a request using the selenium driver if applicable"""

        if not isinstance(request, SeleniumRequest):
            return None

        self.driver.get(request.url)

        for cookie_name, cookie_value in request.cookies.items():
            self.driver.add_cookie(
                {
                    'name': cookie_name,
                    'value': cookie_value
                }
            )

        if request.wait_until:
            WebDriverWait(self.driver, request.wait_time).until(
                request.wait_until
            )

        if request.screenshot:
            request.meta['screenshot'] = self.driver.get_screenshot_as_png()

        if request.script:
            self.driver.execute_script(request.script)

        body = str.encode(self.driver.page_source)

        # Expose the driver via the "meta" attribute
        request.meta.update({'driver': self.driver})

        return HtmlResponse(
            self.driver.current_url,
            body=body,
            encoding='utf-8',
            request=request
        )

    def spider_closed(self):
        """Shutdown the driver when spider is closed"""

        self.driver.quit()

This workaround only works for Chrome.

Integrate Selenium into Scrapy

Open settings.py and add the following lines to configure Selenium in Scrapy as the default page downloader middleware:

DOWNLOADER_MIDDLEWARES = {
    "scrapy_selenium.SeleniumMiddleware": 800
}

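Then, set Chrome as the browser to control and specify its arguments. The middleware raises NotConfigured when SELENIUM_DRIVER_NAME is missing, so both settings are required:

SELENIUM_DRIVER_NAME = "chrome"
SELENIUM_DRIVER_ARGUMENTS = ["--headless=new"]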

--headless=new makes sure that Selenium will launch Chrome in headless mode. If you instead want to see the actions performed by your scraping script in the browser, write:

SELENIUM_DRIVER_ARGUMENTS = []

By default, scrapy-selenium will launch Chrome with the GUI.
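Putting the pieces together, the Selenium part of settings.py could look like the sketch below. The setting names are the ones read by the middleware code above. The SELENIUM_SHOW_BROWSER environment variable is a hypothetical switch added here for illustration and is not part of scrapy-selenium.

```python
import os

# Register the middleware with the priority used in this tutorial
DOWNLOADER_MIDDLEWARES = {
    "scrapy_selenium.SeleniumMiddleware": 800,
}

# Required: the middleware raises NotConfigured when this is missing
SELENIUM_DRIVER_NAME = "chrome"

# Hypothetical switch (an assumption, not a scrapy-selenium feature):
# set SELENIUM_SHOW_BROWSER=1 in your shell to watch the browser window
_show_browser = os.environ.get("SELENIUM_SHOW_BROWSER") == "1"

# Must always be a list, because the middleware iterates over it
SELENIUM_DRIVER_ARGUMENTS = [] if _show_browser else ["--headless=new"]
```

Keeping the headless toggle outside the file means you can switch between debugging and unattended runs without editing settings.py.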

Amazing! Scrapy Selenium is ready to use. It's time to see it in action!

How to Use Selenium in Scrapy Python

To learn how to use Selenium in Scrapy, we'll use an infinite scrolling demo page as a target URL. It loads new products as you scroll down, so it's a perfect example of a dynamic content page that relies on JavaScript for data retrieval.

In the terminal, initialize a Scrapy spider for your target page:

scrapy genspider scraping_club https://scrapingclub.com/exercise/list_infinite_scroll/

The command creates a scraping_club.py file in the spiders folder with the following content:

import scrapy


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"
    allowed_domains = ["scrapingclub.com"]
    start_urls = ["https://scrapingclub.com/exercise/list_infinite_scroll/"]

    def parse(self, response):
        pass

That's just what a basic Scrapy spider looks like.

Implement the start_requests() method as below to open a page in Chrome via Selenium. SeleniumRequest comes from scrapy_selenium and replaces the built-in Scrapy Request class, instructing the spider to open pages in the specified browser via Selenium.

def start_requests(self):
    url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
    yield SeleniumRequest(url=url, callback=self.parse)

Don't forget to import SeleniumRequest by adding this line on top of your scraper:

from scrapy_selenium import SeleniumRequest

start_requests() replaces start_urls, so you can remove that attribute from the class. This is what the current code of your new spider looks like:

import scrapy
from scrapy_selenium import SeleniumRequest


class ScrapingClubSpider(scrapy.Spider):
    name = 'scraping_club'

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SeleniumRequest(url=url, callback=self.parse)

    def parse(self, response):
        # scraping logic...
        pass

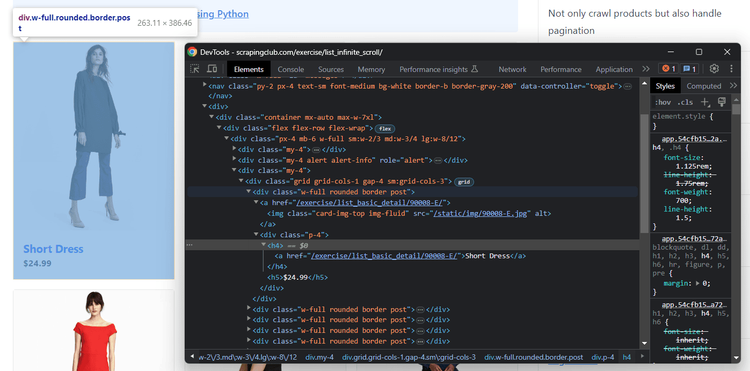

It only remains to define the scraping logic in the parse() method. Open the target site in the browser and inspect a product HTML node with DevTools:

Familiarize yourself with the DOM to devise an effective data parsing strategy to extract the name, price, image and URL of each product.

The following snippet uses a CSS selector in the Scrapy css() method to get all product HTML elements. Then, it iterates over them to extract their data and uses yield to create a new set of scraped items:

def parse(self, response):
    # select all product elements and iterate over them
    for product in response.css(".post"):
        # scrape the desired data from each product
        url = product.css("a").attrib["href"]
        image = product.css(".card-img-top").attrib["src"]
        name = product.css("h4 a::text").get()
        price = product.css("h5::text").get()

        # add the data to the list of scraped items
        yield {
            "url": url,
            "image": image,
            "name": name,
            "price": price
        }

Your entire scraping_club.py spider should now look like this:

import scrapy
from scrapy_selenium import SeleniumRequest


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SeleniumRequest(url=url, callback=self.parse)

    def parse(self, response):
        # select all product elements and iterate over them
        for product in response.css(".post"):
            # scrape the desired data from each product
            url = product.css("a").attrib["href"]
            image = product.css(".card-img-top").attrib["src"]
            name = product.css("h4 a::text").get()
            price = product.css("h5::text").get()

            # add the data to the list of scraped items
            yield {
                "url": url,
                "image": image,
                "name": name,
                "price": price
            }

Run the spider to verify that it works as expected:

scrapy crawl scraping_club

Selenium will log its communication with the browser, with lines like this:

[selenium.webdriver.remote.remote_connection] DEBUG: Remote response: status=200

The output that matters is the list of the scraped objects you can find in the logs:

{'url': '/exercise/list_basic_detail/90008-E/', 'image': '/static/img/90008-E.jpg', 'name': 'Short Dress', 'price': '$24.99'}

{'url': '/exercise/list_basic_detail/96436-A/', 'image': '/static/img/96436-A.jpg', 'name': 'Patterned Slacks', 'price': '$29.99'}

# 6 products omitted for brevity...

{'url': '/exercise/list_basic_detail/96643-A/', 'image': '/static/img/96643-A.jpg', 'name': 'Short Lace Dress', 'price': '$59.99'}

{'url': '/exercise/list_basic_detail/94766-A/', 'image': '/static/img/94766-A.jpg', 'name': 'Fitted Dress', 'price': '$34.99'}
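Note that the url and image values in the output above are relative paths, and price is a string with a currency symbol. If you need absolute URLs and numeric prices, a post-processing step along these lines may help. It is a minimal sketch: normalize_item() is a hypothetical helper, and it assumes every price starts with a dollar sign, as in the sample output.

```python
from decimal import Decimal
from urllib.parse import urljoin

# The page the spider in this tutorial requests
BASE_URL = "https://scrapingclub.com/exercise/list_infinite_scroll/"


def normalize_item(item, base_url=BASE_URL):
    """Return a copy of a scraped item with absolute URLs and a Decimal price."""
    return {
        # urljoin() resolves a relative path against the page URL
        "url": urljoin(base_url, item["url"]),
        "image": urljoin(base_url, item["image"]),
        "name": item["name"].strip(),
        # assumption: prices look like "$24.99"
        "price": Decimal(item["price"].strip().lstrip("$")),
    }
```

Inside a spider, Scrapy's response.urljoin() does the same URL resolution against the response URL, so you could also apply it directly in parse().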

Before the end, you'll see the Scrapy Selenium request getting terminated:

[selenium.webdriver.remote.remote_connection] DEBUG: Finished Request

Fantastic! The basics of Scrapy with Selenium are no longer a secret!

The current result only includes ten items because the page uses infinite scrolling to load data. So, let's see how to scrape all products in the next section of this tutorial.

Interact with Web Pages with scrapy-selenium Middleware

Scrapy Selenium can control Chrome in headless mode, allowing you to programmatically wait for elements, move the mouse, and more. These actions help to fool anti-bots because your spider will interact with pages like a human.

The interactions supported by Selenium include:

- Scroll down or up the page.

- Click page elements and perform other mouse actions.

- Wait for elements on the page to load, be visible, be clickable, etc.

- Fill out and empty input fields.

- Submit forms.

- Take screenshots.

- Drag and drop elements.

You can achieve some of those operations by passing specific arguments to the SeleniumRequest constructor, and the actions will be executed on the page before returning the final response to parse().

def start_requests(self):
    url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
    yield SeleniumRequest(
        url,
        callback=self.parse,
        # action 1...
        # action 2...
    )

Otherwise, you can access the Selenium driver object directly in parse(), and get all Selenium methods exposed by driver. Find out more in our guide on web scraping with Selenium in Python.

def parse(self, response):
    driver = response.request.meta["driver"]
    # ...

For example, the snippet below uses driver to scroll down the infinite scrolling page so that it loads more products:

def parse(self, response):
    driver = response.request.meta["driver"]

    # scroll to the end of the page 10 times
    for x in range(0, 10):
        # scroll down by 10000 pixels
        ActionChains(driver) \
            .scroll_by_amount(0, 10000) \
            .perform()

        # waiting 2 seconds for the products to load
        time.sleep(2)

    # scraping logic...

Add the required imports to make your spider work:

from selenium.webdriver import ActionChains

import time

If you run the script, you'll still get only ten items. Why? Because response doesn't reflect the changes made by the driver and still holds the old HTML!

When you do actions in parse() with Selenium and want to access the new state of the page, you must use the methods exposed by driver. Thus, replace the data extraction Scrapy logic with some Selenium code.

The logic below is equivalent to the scraping code presented earlier. Instead of relying on Scrapy, it uses the methods exposed by Selenium's driver object to select all products and extract the data of interest from them:

# select all product elements and iterate over them
for product in driver.find_elements(By.CSS_SELECTOR, ".post"):
    # scrape the desired data from each product
    url = product.find_element(By.CSS_SELECTOR, "a").get_attribute("href")
    image = product.find_element(By.CSS_SELECTOR, ".card-img-top").get_attribute("src")
    name = product.find_element(By.CSS_SELECTOR, "h4 a").text
    price = product.find_element(By.CSS_SELECTOR, "h5").text

    # add the data to the list of scraped items
    yield {
        "url": url,
        "image": image,
        "name": name,
        "price": price
    }

This should be your new complete spider:

import scrapy
from scrapy_selenium import SeleniumRequest
from selenium.webdriver import ActionChains
import time
from selenium.webdriver.common.by import By


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SeleniumRequest(url=url, callback=self.parse)

    def parse(self, response):
        driver = response.request.meta["driver"]

        # scroll to the end of the page 10 times
        for x in range(0, 10):
            # scroll down by 10000 pixels
            ActionChains(driver) \
                .scroll_by_amount(0, 10000) \
                .perform()

            # waiting 2 seconds for the products to load
            time.sleep(2)

        # select all product elements and iterate over them
        for product in driver.find_elements(By.CSS_SELECTOR, ".post"):
            # scrape the desired data from each product
            url = product.find_element(By.CSS_SELECTOR, "a").get_attribute("href")
            image = product.find_element(By.CSS_SELECTOR, ".card-img-top").get_attribute("src")
            name = product.find_element(By.CSS_SELECTOR, "h4 a").text
            price = product.find_element(By.CSS_SELECTOR, "h5").text

            # add the data to the list of scraped items
            yield {
                "url": url,
                "image": image,
                "name": name,
                "price": price
            }

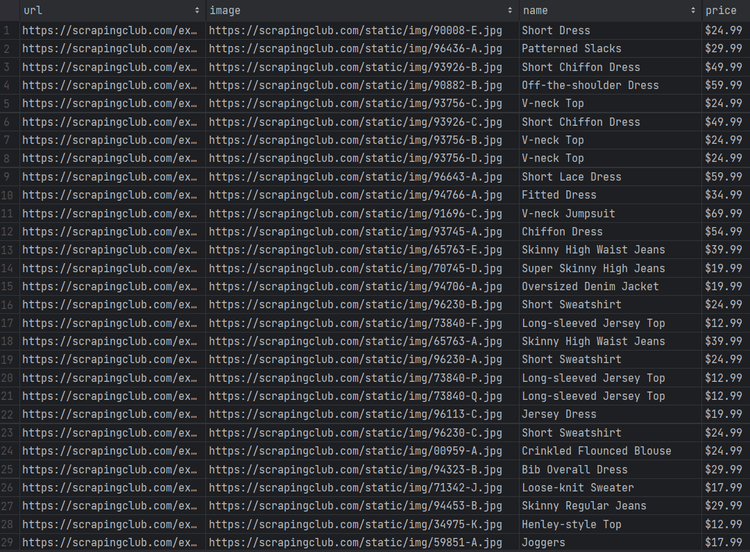

The output will now contain all 60 product elements instead of just the first ten. Run the spider again and export the data to CSV to verify that:

scrapy crawl scraping_club -O products.csv

That will produce the products.csv file below:
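To check the export without opening it by hand, you could count the rows with Python's csv module. This is a small sketch: summarize_export() is a hypothetical helper, and the expected column names are the keys yielded by parse() in this tutorial.

```python
import csv

# Keys yielded by the spider's parse() method
EXPECTED_FIELDS = {"url", "image", "name", "price"}


def summarize_export(csv_path):
    """Return (number of data rows, set of expected columns that are missing)."""
    with open(csv_path, newline="", encoding="utf-8") as csv_file:
        reader = csv.DictReader(csv_file)
        rows = list(reader)
        missing = EXPECTED_FIELDS - set(reader.fieldnames or [])
    return len(rows), missing


# Example usage after running the crawl command:
# row_count, missing = summarize_export("products.csv")
# print(row_count, missing)
```

If the scrolling logic worked, the row count should match the number of products the page loads.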

Well done, mission complete with your Scrapy Selenium scraper! You just scraped all products from the page. Yet, the above script can still be improved by removing the hard waits. Let's see how in the section below!

Wait for Element

The current spider relies on hard waits through time.sleep(). That's not good because it makes the scraping logic unreliable. In case of a browser or network slowdown, your script might fail, and you want to avoid that!

Waiting for HTML elements to be on a page before dealing with them leads to more consistent results. And, considering how commonly pages get data or render nodes via JavaScript, this is key for building effective spiders.

Selenium's WebDriverWait class lets you wait for a condition, such as an element's is_displayed() method returning True. Use it to remove the hard waits and wait until the 60th .post element is on the page. After scrolling down, the spider will wait for the AJAX calls to complete and the last product element to be rendered on the page.

from selenium.webdriver.support.wait import WebDriverWait

# ...

# wait up to 10 seconds for the 60th product to be on the page
wait = WebDriverWait(driver, timeout=10)
wait.until(lambda driver: driver.find_element(By.CSS_SELECTOR, ".post:nth-child(60)").is_displayed())

Now, your spider no longer involves hard waits:

import scrapy
from scrapy_selenium import SeleniumRequest
from selenium.webdriver import ActionChains
from selenium.webdriver.common.by import By
from selenium.webdriver.support.wait import WebDriverWait


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SeleniumRequest(url=url, callback=self.parse)

    def parse(self, response):
        driver = response.request.meta["driver"]

        # scroll to the end of the page 10 times
        for x in range(0, 10):
            # scroll down by 10000 pixels
            ActionChains(driver) \
                .scroll_by_amount(0, 10000) \
                .perform()

        # wait up to 10 seconds for the 60th product to be on the page
        wait = WebDriverWait(driver, timeout=10)
        wait.until(lambda driver: driver.find_element(By.CSS_SELECTOR, ".post:nth-child(60)").is_displayed())

        # select all product elements and iterate over them
        for product in driver.find_elements(By.CSS_SELECTOR, ".post"):
            # scrape the desired data from each product
            url = product.find_element(By.CSS_SELECTOR, "a").get_attribute("href")
            image = product.find_element(By.CSS_SELECTOR, ".card-img-top").get_attribute("src")
            name = product.find_element(By.CSS_SELECTOR, "h4 a").text
            price = product.find_element(By.CSS_SELECTOR, "h5").text

            # add the data to the list of scraped items
            yield {
                "url": url,
                "image": image,
                "name": name,
                "price": price
            }

Run it, and you'll get the same results as before but with better performance, as you're now waiting for the right amount of time only.
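The ten scrolls and the 60th-element selector are tuned to this demo page. When you don't know how many items a page will load, one option is to keep scrolling until a round adds nothing new. The sketch below is framework-agnostic and uses hypothetical names: scroll_until_stable() takes two callables, so it makes no assumptions about the Selenium API beyond what you pass in.

```python
import time


def scroll_until_stable(scroll, count_items, max_rounds=20, timeout=10.0, poll=0.2):
    """Scroll until a round loads no new items and return the final item count."""
    seen = count_items()
    for _ in range(max_rounds):
        scroll()
        deadline = time.monotonic() + timeout
        current = count_items()
        # poll until new items show up or the timeout expires
        while current <= seen and time.monotonic() < deadline:
            time.sleep(poll)
            current = count_items()
        if current <= seen:
            # nothing new appeared in time: assume the list is complete
            break
        seen = current
    return seen


# In parse(), the callables could wrap the Selenium calls used in this tutorial:
# scroll = lambda: ActionChains(driver).scroll_by_amount(0, 10000).perform()
# count_items = lambda: len(driver.find_elements(By.CSS_SELECTOR, ".post"))
# total = scroll_until_stable(scroll, count_items)
```

The trade-off is one extra timeout at the end, since the loop can only tell that the page is exhausted by waiting for items that never arrive.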

The spider above mainly relies on vanilla Selenium's API, but what specific features does scrapy-selenium have to offer?

SeleniumRequest accepts a wait_until argument that takes a Selenium expected condition. For example, you can use it to wait for an element to be visible. Scrapy will call parse() only when the expected condition occurs. Otherwise, the request will fail after a timeout. Set wait_time together with wait_until, because the middleware passes it to WebDriverWait as the timeout in seconds.

yield SeleniumRequest(
    url=url,
    callback=self.parse,
    # wait up to 10 seconds for the condition below
    wait_time=10,
    # wait for the first product to be visible
    wait_until=EC.visibility_of_element_located((By.CSS_SELECTOR, ".post"))
)

Don't forget to import the expected_conditions from Selenium:

from selenium.webdriver.support import expected_conditions as EC
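Because the middleware hands wait_until to WebDriverWait.until(), any callable that takes the driver and returns a truthy value should work, not only the built-in expected conditions. The sketch below shows a custom condition with a hypothetical name, at_least_n_elements(). It takes a locator tuple in the same (By, value) form the built-in conditions use.

```python
def at_least_n_elements(locator, n):
    """Build a wait condition that passes once n matching elements exist.

    locator is a (by, value) tuple such as (By.CSS_SELECTOR, ".post").
    """
    def condition(driver):
        elements = driver.find_elements(*locator)
        # a falsy result tells WebDriverWait to keep polling
        return elements if len(elements) >= n else False

    return condition


# Possible usage with the request from this tutorial:
# yield SeleniumRequest(
#     url=url,
#     callback=self.parse,
#     wait_time=10,
#     wait_until=at_least_n_elements((By.CSS_SELECTOR, ".post"), 10),
# )
```

Counting elements this way avoids depending on :nth-child(), which only matches when the products are consecutive children of the same parent.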

Perfect! Time to see other useful interactions in action.

Wait for Time

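As dynamic operations on a web page take time to complete, you may need to give them a time budget.

SeleniumRequest accepts a wait_time argument, expressed in seconds. In the middleware code shown earlier, it's used as the WebDriverWait timeout for the wait_until condition. So, it sets the maximum wait rather than a fixed pause, and it has no effect when wait_until is missing:

yield SeleniumRequest(
    url=url,
    callback=self.parse,
    # wait up to 10 seconds for the condition below
    wait_time=10,
    wait_until=EC.visibility_of_element_located((By.CSS_SELECTOR, ".post"))
)

If you need a fixed pause for debugging purposes, call time.sleep() in parse(). As mentioned before, fixed timeouts must be avoided in production.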

Click Elements

Clicking an element in scrapy-selenium enables you to trigger AJAX calls or JavaScript actions. That's the most essential user interaction you can simulate.

Selenium elements expose the click() method to click them. Select an HTML element with driver in parse() and call the click function on it:

first_product = driver.find_element(By.CSS_SELECTOR, ".post")

first_product.click()

If the click() triggers a page change (and this is such a case), you'll have to continue the scraping logic with driver as explained earlier:

def parse(self, response):
    driver = response.request.meta["driver"]

    # click the first product element
    first_product = driver.find_element(By.CSS_SELECTOR, ".post")
    first_product.click()

    # you are now on the detail product page...

    # scraping logic...
    # driver.find_element(...)

Adapt the scraping logic to the new page to complete this script.

Take Screenshot

Extracting data from a page isn't the only way to get useful information from it. Taking screenshots of the entire page or specific elements gives you visual feedback. That's important to see how a site looks in different scenarios or track competitors.

Scrapy Selenium comes with built-in screenshot capabilities thanks to the screenshot argument:

yield SeleniumRequest(
    url=url,
    callback=self.parse,
    # take a screenshot of the page
    screenshot=True
)

When set to True, Selenium will take a screenshot of the page and add the PNG binary data to the response meta:

def parse(self, response):
    with open("screenshot.png", "wb") as image_file:
        image_file.write(response.meta["screenshot"])

This snippet exports the image to a screenshot.png file you'll find in the root folder of your project after running the spider.
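When a spider visits several pages, a single fixed file name gets overwritten on every response. One way around that is to derive the file name from the page URL. The sketch below assumes the bytes come from response.meta["screenshot"] as above. save_screenshot() and the screenshots folder are hypothetical names.

```python
import re
from pathlib import Path

# Every PNG file starts with this 8-byte signature
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def save_screenshot(png_bytes, url, folder="screenshots"):
    """Write PNG bytes to a file named after the URL and return its path."""
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("screenshot data is not a PNG image")
    # turn the URL into a file-system-friendly name
    slug = re.sub(r"[^A-Za-z0-9]+", "_", url).strip("_")
    path = Path(folder) / f"{slug}.png"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_bytes(png_bytes)
    return path


# Possible usage in parse():
# save_screenshot(response.meta["screenshot"], response.url)
```

The signature check is a cheap guard against writing an empty or truncated payload to disk.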

Avoid Getting Blocked with a Scrapy Selenium Proxy

The biggest challenge when scraping the web is getting blocked by anti-scraping measures, like IP bans. An effective way to bypass them is to use proxies, making requests come from different IPs and locations.

Before jumping into the code, you can check out our guide on how to deal with proxies in Scrapy and proxies in Selenium. And besides that, you might also need to rotate the User-Agent in Scrapy.

Let's now learn how to set a proxy in Scrapy Selenium.

First, get a free proxy from providers like Free Proxy List. Then, specify it in the --proxy-server Chrome argument in the SELENIUM_DRIVER_ARGUMENTS list in settings.py:

proxy_server_url = "157.245.97.60"

SELENIUM_DRIVER_ARGUMENTS = ["--headless=new", f"--proxy-server={proxy_server_url}"]

Free proxies typically don't have a username and password.

The main problem with this approach is that it works at the browser level, which means you can't set a different proxy for each request. Also, --proxy-server doesn't support proxies with a username and a password.
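Since the proxy is fixed for the whole browser session, the most this setup allows is choosing one proxy per run. The sketch below shows that idea for settings.py. PROXY_POOL, pick_proxy() and build_driver_arguments() are hypothetical names, and the addresses are placeholders from a documentation-only IP range, so replace them with proxies you actually have.

```python
import random

# Hypothetical pool: placeholder addresses, not working proxies
PROXY_POOL = ["203.0.113.10:8080", "203.0.113.11:3128"]


def pick_proxy(pool):
    """Pick one proxy at random, or None when the pool is empty."""
    return random.choice(pool) if pool else None


def build_driver_arguments(proxy=None, headless=True):
    """Build the Chrome argument list used by SELENIUM_DRIVER_ARGUMENTS."""
    arguments = []
    if headless:
        arguments.append("--headless=new")
    if proxy:
        arguments.append(f"--proxy-server={proxy}")
    return arguments


# Evaluated once when Scrapy loads the settings, so one proxy per run
SELENIUM_DRIVER_ARGUMENTS = build_driver_arguments(pick_proxy(PROXY_POOL))
```

This spreads runs across several exit IPs but does not rotate proxies per request, so the limitation described above still applies.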

Considering that premium proxies require authentication and free proxies are unreliable, that's a huge problem. The exit server's IP is likely to get blocked, so you can't rely on this approach in a real-world scenario.

The solution? An alternative to Selenium with Scrapy that can both scrape dynamic content pages and help you avoid getting blocked. ZenRows comes into play! As a powerful web scraping API that you can easily integrate with Scrapy, it offers IP rotation through premium residential proxies, User-Agent rotation, and the most advanced anti-bot bypass toolkit that exists. Get your free 1,000 credits and try it out!

Run Custom JavaScript Code

Scrapy Selenium supports the execution of custom JavaScript code via the script argument. That's helpful for simulating user interactions that aren't directly supported by the Selenium API. An example? Updating the DOM of a page.

To run JavaScript in scrapy-selenium, store your script in a string (isolating the JS code in a variable is better for readability):

js_script = """
    // code here
"""

For example, the below snippet uses querySelectorAll() to get all product price elements and update their content by replacing the currency symbol.

js_script = """
    document.querySelectorAll(".post h5").forEach(e => e.innerHTML = e.innerHTML.replace("$", "€"))
"""

Then, pass js_script to the script parameter in SeleniumRequest. Scrapy will run the JavaScript code on the page before passing the results to the parse() method.

yield SeleniumRequest(
    url=url,
    callback=self.parse,
    script=js_script
)
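If the selector or the symbols come from Python variables, pasting them into the script by hand can break the JavaScript as soon as a value contains a quote. A possible approach is to let json.dumps() produce the string literals, since JSON strings are also valid JavaScript strings. build_replace_script() below is a hypothetical helper that rebuilds the same script as above.

```python
import json


def build_replace_script(selector, old, new):
    """Return JavaScript that replaces old with new in every matching element."""
    # json.dumps() adds the quotes and escapes special characters
    js_selector = json.dumps(selector, ensure_ascii=False)
    js_old = json.dumps(old, ensure_ascii=False)
    js_new = json.dumps(new, ensure_ascii=False)
    return (
        f"document.querySelectorAll({js_selector})"
        f".forEach(e => e.innerHTML = e.innerHTML.replace({js_old}, {js_new}))"
    )


# Same script as in this tutorial, built from Python values
js_script = build_replace_script(".post h5", "$", "€")
```

The resulting js_script string can be passed to the script parameter exactly like the hand-written version.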

Here's the updated code of your spider that also changes the currency of the prices from dollar to euro:

import scrapy
from scrapy_selenium import SeleniumRequest

# USD to EUR
js_script = """
    document.querySelectorAll(".post h5").forEach(e => e.innerHTML = e.innerHTML.replace("$", "€"))
"""


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SeleniumRequest(
            url=url,
            callback=self.parse,
            script=js_script
        )

    def parse(self, response):
        # select all product elements and iterate over them
        for product in response.css(".post"):
            # scrape the desired data from each product
            url = product.css("a").attrib["href"]
            image = product.css(".card-img-top").attrib["src"]
            name = product.css("h4 a::text").get()
            price = product.css("h5::text").get()

            # add the data to the list of scraped items
            yield {
                "url": url,
                "image": image,
                "name": name,
                "price": price
            }

Execute the spider, and the prices in the logs will now use the euro symbol.

Brilliant! The currency went from $ to € as expected!

Conclusion

In this Selenium Scrapy tutorial, you learned the fundamentals of using Scrapy with Selenium. You started from the basics and explored more complex techniques to become a scraping expert.

Now you know:

- How to integrate Selenium 4 into a Scrapy project.

- What user interactions you can mimic with it.

- How to run custom JavaScript logic on a web page in Scrapy.

- The challenges of web scraping with scrapy-selenium.

Regardless of how sophisticated your browser automation is, anti-bots will still be able to detect and block you. Luckily, you can avoid all that with ZenRows, a web scraping API with headless browser capabilities, IP and User-Agent rotation, and a top-notch anti-scraping bypass toolkit. Getting data from dynamic content web pages has never been easier. Try ZenRows for free!

Frequent Questions

Is Scrapy or Selenium Better for Scraping?

Scrapy is ideal for scraping static content sites with many pages, while Selenium is more suitable for JavaScript-heavy pages. You can get the best out of the two approaches and scrape dynamic content pages in Scrapy with Splash.
