Have you gotten poor results while scraping dynamic web page content? It's not just you. Crawling dynamic data is a challenging undertaking (to say the least) for standard scrapers. That's because much of the content is generated by JavaScript that runs in the browser after the initial HTML loads, and a plain HTTP request only returns that initial HTML.

Scraping dynamic websites often requires rendering the entire page in a browser before extracting the target information.

Join us in this step-by-step tutorial to learn what you need to know about dynamic web scraping with Python: the dos and don'ts, the challenges and solutions, and everything in between.

Let's dive right in!

What Is a Dynamic Website?

A dynamic website is one that doesn't have all its content directly in its static HTML. It uses server-side or client-side scripting to display data, sometimes based on the user's actions (e.g., clicking, scrolling, etc.).

Put simply, these websites can display different content or layouts depending on the request and the user's actions. This helps with loading time, as there's no need to reload the whole page each time the user wants to view "new" content.

How can you identify them? One way is to disable JavaScript through the command palette in your browser's DevTools. If the website is dynamic, most of its content will disappear.

Let's use Saleor React Storefront as an example. Here's what its front page looks like:

Take note of the titles, images, and artist's name.

Now, let's disable JavaScript using the steps below:

- Inspect the page: Right-click and select "Inspect" to open the DevTools window.

- Navigate to the command palette: CTRL/CMD + SHIFT + P.

- Search for "JavaScript."

- Click on Disable JavaScript.

- Hit refresh.

What's the result? See below:

You can see it for yourself! Disabling JavaScript removes all the dynamic web content.
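You can also run a rough version of this check from Python. Download the raw HTML without a browser, then see whether a phrase that's visible on the rendered page appears in it. The sketch below uses only the standard library. The helper names `fetch_static_html` and `looks_dynamic` are hypothetical, and the check is only a heuristic: text split by tags or written as HTML entities can produce false positives.

```python
import urllib.request


def fetch_static_html(url: str, timeout: float = 10.0) -> str:
    """Download the page the way a plain HTTP client sees it (no JavaScript runs)."""
    request = urllib.request.Request(url, headers={'User-Agent': 'Mozilla/5.0'})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or 'utf-8'
        return response.read().decode(charset, errors='replace')


def looks_dynamic(static_html: str, visible_text: str) -> bool:
    """Heuristic: if text you can see in the browser is missing from the raw HTML,
    it was most likely rendered by JavaScript."""
    return visible_text.casefold() not in static_html.casefold()
```

For example, if `looks_dynamic(fetch_static_html(url), 'a headline you can see')` returns `True`, the content is probably injected by JavaScript. Here, `url` and the phrase are placeholders you'd replace with your own target.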

Alternatives to Dynamic Web Scraping With Python

So, you want to scrape dynamic websites with Python...

Since libraries such as Beautiful Soup or Requests don't automatically fetch dynamic content, you're left with two options to complete the task:

- Find the data the page loads dynamically (e.g., as JSON) and feed it to a standard library.

- Execute the page's internal JavaScript while scraping.

However, not all dynamic pages are the same. Some load their content from APIs (through XHR or fetch requests) that you can find in the "Network" tab of DevTools. Others store the JS-rendered content as JSON somewhere in the DOM (Document Object Model), for example inside a <script> tag.

The good news is we can parse the JSON string to extract the necessary data in both cases.
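As an illustration of the second case, here's a minimal sketch that pulls JSON out of a `<script>` tag using only Python's standard library. It assumes the data sits in a tag like `<script id="__NEXT_DATA__" type="application/json">`, a pattern Next.js sites use. The tag id and the sample HTML are assumptions, so check your target page's source for the real location. With Beautiful Soup, `soup.find('script', id='__NEXT_DATA__').string` would locate the same text.

```python
import json
from html.parser import HTMLParser


class ScriptJSONExtractor(HTMLParser):
    """Collect the text of the <script> tag whose id matches script_id."""

    def __init__(self, script_id: str) -> None:
        super().__init__()
        self.script_id = script_id
        self._inside_target = False
        self._chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == 'script' and dict(attrs).get('id') == self.script_id:
            self._inside_target = True

    def handle_endtag(self, tag):
        if tag == 'script' and self._inside_target:
            self._inside_target = False

    def handle_data(self, data):
        # html.parser passes <script> contents through unparsed, as raw text
        if self._inside_target:
            self._chunks.append(data)

    @property
    def text(self) -> str:
        return ''.join(self._chunks)


def extract_embedded_json(html: str, script_id: str = '__NEXT_DATA__'):
    """Parse the JSON stored in <script id=script_id>, or return None if absent."""
    parser = ScriptJSONExtractor(script_id)
    parser.feed(html)
    parser.close()
    return json.loads(parser.text) if parser.text.strip() else None


if __name__ == '__main__':
    # made-up sample page: an empty app root plus data embedded as JSON
    sample = (
        '<html><body><div id="root"></div>'
        '<script id="__NEXT_DATA__" type="application/json">'
        '{"props": {"products": [{"name": "Example product"}]}}'
        '</script></body></html>'
    )
    data = extract_embedded_json(sample)
    print(data['props']['products'][0]['name'])  # prints: Example product
```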

Keep in mind that these solutions don't work for every website. In those cases, you can use headless browsers to render the page and extract the data you need.

The alternatives to crawling dynamic web pages with Python are:

- Manually locating the data and parsing the JSON string.

- Using headless browsers to execute the page's internal JavaScript (e.g., Selenium or Pyppeteer, an unofficial Python port of Puppeteer).

What Is the Easiest Way to Scrape a Dynamic Website in Python?

It's true, headless browsers can be slow and performance-intensive. However, because they execute JavaScript like a regular browser, they remove most of the limitations of static scraping. The main exception is anti-bot detection, and we've already told you how to bypass such protections.

Manually locating data and parsing JSON strings assumes that you can access the JSON version of the dynamic data. Unfortunately, that's not always the case, especially with complex single-page applications (SPAs).

Not to mention that mimicking API requests doesn't scale well. These endpoints often require cookies, authentication, and other checks that can easily get you blocked.

The best way to scrape dynamic web pages in Python depends on your goals and resources. If you have access to the website's JSON and are looking to extract a single page's data, you may not need a headless browser.

However, outside of these few cases, using Beautiful Soup and Selenium is usually your best and easiest option.

Time to get our hands dirty! Get ready to write some code and see precisely how to scrape a dynamic website in Python!

Prerequisites

To follow this tutorial, you'll need to meet some requirements. We'll use the following tools:

- Python 3: The latest version of Python will work best. At the time of writing, that is 3.11.5.

- Selenium: install it by running pip install selenium.

Selenium WebDriver is a web automation tool that lets you control web browsers programmatically. In the past, you also had to download a browser-specific driver (such as ChromeDriver) yourself, but that's no longer necessary.

Starting with Selenium 4.6, the bundled Selenium Manager finds or downloads a matching driver automatically. So, if you're using an earlier version of Selenium, consider updating it to get this and other newer features. To check your current version, run pip show selenium, and update with pip install --upgrade selenium.
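If you'd rather check from Python, the sketch below reads the installed version with `importlib.metadata` and compares it against 4.6, the release that introduced Selenium Manager. The helper names are our own, and the version parsing is deliberately simple: it keeps only the leading number of each dotted part.

```python
import re
from importlib.metadata import PackageNotFoundError, version

# first Selenium release that ships Selenium Manager (automatic driver downloads)
MIN_SELENIUM_MANAGER = (4, 6)


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '4.15.2' into (4, 15, 2), stopping at the first non-numeric part."""
    parts = []
    for piece in text.split('.'):
        match = re.match(r'\d+', piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def has_selenium_manager(installed: str) -> bool:
    """True if the given Selenium version manages drivers automatically."""
    return parse_version(installed)[:2] >= MIN_SELENIUM_MANAGER


def check_selenium() -> str:
    """Report whether Selenium is installed and new enough."""
    try:
        installed = version('selenium')
    except PackageNotFoundError:
        return 'Selenium is not installed: run pip install selenium'
    if has_selenium_manager(installed):
        return f'Selenium {installed}: drivers are managed automatically'
    return f'Selenium {installed}: run pip install --upgrade selenium'


if __name__ == '__main__':
    print(check_selenium())
```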

You now have everything you need. Let's go!

Method #1: Dynamic Web Scraping With Python Using Beautiful Soup

Beautiful Soup is arguably the most popular Python library for parsing HTML data.

To extract information with it, we need our target page's HTML string. However, dynamic content is not directly present in a website's static HTML. This means that Beautiful Soup can't access JavaScript-generated data.

Here's a workaround: if the website loads its content through AJAX requests, you can extract the data directly from those XHR requests, which usually return JSON.
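Here's a minimal sketch of that idea using only the standard library. Once you've found the XHR/Fetch request in the Network tab of DevTools, you can call the same endpoint directly and read its JSON. You don't need Beautiful Soup for this step, because there's no HTML to parse. The endpoint URL, the `X-Requested-With` header, and the `items`/`title` field names are hypothetical placeholders. Copy the real URL, headers, and any cookies from the request you see in the Network tab. With Requests installed, `requests.get(url, headers=headers).json()` does the same job.

```python
import json
import urllib.request

# hypothetical endpoint: replace with the XHR URL from the Network tab in DevTools
API_URL = 'https://example.com/api/products?page=1'


def fetch_json(url: str, timeout: float = 10.0):
    """Request an XHR/AJAX endpoint directly and decode its JSON body."""
    headers = {
        'User-Agent': 'Mozilla/5.0',
        'Accept': 'application/json',
        # some servers check this header on AJAX calls (an assumption, not universal)
        'X-Requested-With': 'XMLHttpRequest',
    }
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def extract_titles(payload: dict) -> list[str]:
    """Pull titles from a payload shaped like {"items": [{"title": ...}]} (assumed shape)."""
    return [item['title'] for item in payload.get('items', []) if 'title' in item]


# usage (needs network access and a real endpoint):
# titles = extract_titles(fetch_json(API_URL))
```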

Method #2: Scraping Dynamic Web Pages in Python Using Selenium

To understand how Selenium helps you scrape dynamic websites, first, we need to inspect how regular libraries, such as Requests, interact with them.

We'll use Angular as our target website:

Let's try scraping it with Requests and see the result. First, install the Requests library with pip:

pip install requests

Here's what our code looks like:

```python
import requests

url = 'https://angular.io/'

response = requests.get(url)
html = response.text

print(html)
```

As you can see, the only readable content in the returned HTML is the following <noscript> fallback:

```html
<noscript>
  <div class="background-sky hero"></div>
  <section id="intro" style="text-shadow: 1px 1px #1976d2;">
    <div class="hero-logo"></div>
    <div class="homepage-container">
      <div class="hero-headline">The modern web<br>developer's platform</div>
    </div>
  </section>
  <h2 style="color: red; margin-top: 40px; position: relative; text-align: center; text-shadow: 1px 1px #fafafa; border-top: none;">
    <b><i>This website requires JavaScript.</i></b>
  </h2>
</noscript>
```

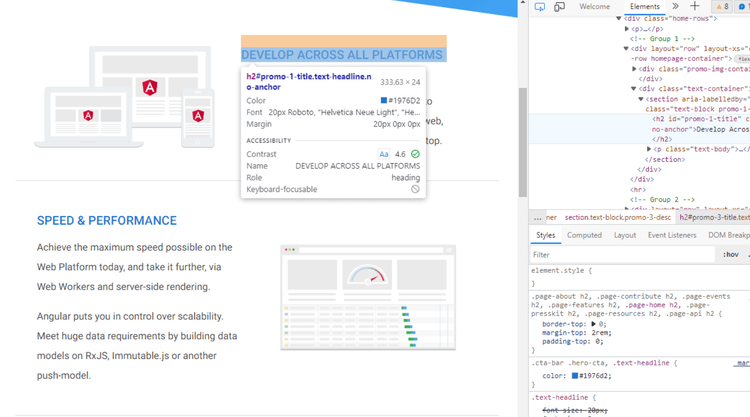

However, inspecting the website in the browser shows more content than what was retrieved.

This is what happened when we disabled JavaScript on the page:

That's precisely what Requests was able to return. The library reports no errors because it simply downloads the static HTML the server sends, which is exactly what it was created to do.

In this case, you can't get the same result the browser displays with Requests alone. Can you guess why? That's right, it's because this is a dynamic web page.

To access the entire content and extract our target data, we must render the JavaScript.

It's time to make it right with Selenium dynamic web scraping.

We'll use the following script to quickly crawl our target website:

```python
from selenium import webdriver

url = 'https://angular.io/'

# Selenium 4.6+ finds or downloads a matching ChromeDriver automatically
driver = webdriver.Chrome()

# load the page in a real browser so its JavaScript runs
driver.get(url)

# print the rendered HTML, including the dynamic content
print(driver.page_source)

# close the browser
driver.quit()
```

There you have it! The page's complete HTML, including the dynamic web content.

Congratulations! You've just scraped your first dynamic website.

Selecting Elements in Selenium

There are different ways to access elements in Selenium. We discuss this matter in depth in our web scraping with Selenium in Python guide.

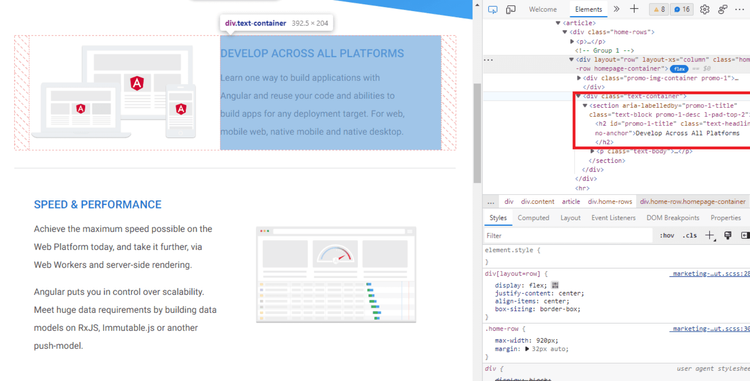

Still, we'll explain this with an example. Let's select only the H2s on our target website:

Before we get to that, we need to inspect the website and identify the location of the elements we want to extract.

We can see that these headlines sit inside elements with class="text-container". We'll select those elements with ChromeDriver and then extract the H2 inside each one.

Paste this code:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By

# instantiate options
options = webdriver.ChromeOptions()

# run browser in headless mode
# (the older options.headless = True was deprecated and removed in Selenium 4)
options.add_argument('--headless=new')

# instantiate driver; Selenium 4.6+ fetches a matching ChromeDriver automatically
driver = webdriver.Chrome(options=options)

# load website
url = 'https://angular.io/'

# get the entire website content
driver.get(url)

# select elements by class name
elements = driver.find_elements(By.CLASS_NAME, 'text-container')

for title in elements:
    # select H2s, within element, by tag name
    heading = title.find_element(By.TAG_NAME, 'h2').text
    # print H2s
    print(heading)

# close the browser
driver.quit()
```

You'll get the following:

```
DEVELOP ACROSS ALL PLATFORMS
SPEED & PERFORMANCE
INCREDIBLE TOOLING
LOVED BY MILLIONS
```

Nice and easy! You can now scrape dynamic sites with Selenium effortlessly.

How to Scrape Infinite Scroll Web Pages With Selenium

Some dynamic pages load more content as users scroll down to the bottom of the page. These are known as "infinite scroll websites." Crawling them is a bit more challenging. To do so, we need to instruct our spider to scroll to the bottom, wait for the new content to load, and only then begin scraping.
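The loop this requires can be sketched independently of Selenium. In the sketch below, `scroll`, `page_height`, and `collect_items` are hypothetical callables. With Selenium, they would wrap `driver.execute_script(...)` and `driver.find_elements(...)`. The loop stops as soon as one of three things happens: it has enough items, the page stops growing, or it reaches `max_rounds` scrolls.

```python
import time
from typing import Callable, List


def scroll_and_collect(
    scroll: Callable[[], None],
    page_height: Callable[[], int],
    collect_items: Callable[[], List[str]],
    target_count: int,
    max_rounds: int = 50,
    pause: float = 1.0,
) -> List[str]:
    """Scroll until target_count items are loaded, the page stops growing,
    or max_rounds scrolls have been made."""
    items = collect_items()
    last_height = page_height()
    for _ in range(max_rounds):
        if len(items) >= target_count:
            break
        scroll()
        time.sleep(pause)  # give the page time to load the next batch
        items = collect_items()  # re-read everything loaded so far (no duplicates)
        new_height = page_height()
        if new_height == last_height:
            break  # nothing new loaded: we've reached the end of the list
        last_height = new_height
    return items[:target_count]
```

Re-reading the full list after each scroll, instead of appending each batch, keeps the result free of duplicates. The `max_rounds` cap ensures the loop ends even if a page keeps growing without producing matching items.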

Let's see how this works with an example, using Scraping Club's sample page.

This script will scroll until the first 20 results are loaded and extract their titles:

```python
import time

from selenium import webdriver
from selenium.webdriver.common.by import By

options = webdriver.ChromeOptions()
# run Chrome in headless mode
options.add_argument('--headless=new')

# Selenium 4.6+ fetches a matching ChromeDriver automatically
driver = webdriver.Chrome(options=options)

# load target website
url = 'https://scrapingclub.com/exercise/list_infinite_scroll/'

# get website content
driver.get(url)

# instantiate items
items = []

# instantiate height of webpage
last_height = driver.execute_script('return document.body.scrollHeight')

# set target count
item_target_count = 20

# scroll to bottom of webpage until enough items are loaded
while len(items) < item_target_count:
    driver.execute_script('window.scrollTo(0, document.body.scrollHeight);')

    # wait for content to load
    time.sleep(1)

    # select elements by XPath; this returns every title loaded so far,
    # so we replace the list instead of extending it (avoids duplicates)
    elements = driver.find_elements(By.XPATH, '//div/h4/a')
    items = [element.text for element in elements]

    # stop if scrolling no longer loads new content
    new_height = driver.execute_script('return document.body.scrollHeight')
    if new_height == last_height:
        break
    last_height = new_height

driver.quit()

# print the first 20 titles
print(items[:item_target_count])
```

_Remark: It's important to set a target count for infinite scroll pages so you can end your script at some point._

In the previous example, we used yet another selector: By.XPATH. It locates elements based on an XPath expression instead of class or tag names, as seen before. To get an element's XPath, inspect the page, right-click the element you want to scrape in the Elements panel, and select Copy > Copy XPath.

Your result should look like this:

['Short Dress', 'Patterned Slacks', 'Short Chiffon Dress', 'Off-the-shoulder Dress', ...]

And there you have it, the H4s of the first 20 products!
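To see what `//div/h4/a` matches without launching a browser, you can use Python's `xml.etree.ElementTree`, which supports a limited subset of XPath. In ElementTree, a leading `.//` means "anywhere below this node." It only parses well-formed XML, not real-world HTML, so treat this as an illustration of the selector rather than a scraping tool. The markup below is a made-up sample.

```python
import xml.etree.ElementTree as ET

# made-up, well-formed sample markup resembling a list of product cards
SAMPLE = """
<div class="products">
  <div class="card"><h4><a href="/item/1">First product</a></h4></div>
  <div class="card"><h4><a href="/item/2">Second product</a></h4></div>
  <div class="card"><h5><a href="/item/3">Not matched: h5, not h4</a></h5></div>
</div>
"""


def titles_by_xpath(markup: str, path: str = './/div/h4/a') -> list[str]:
    """Return the text of every element matched by ElementTree's XPath subset."""
    root = ET.fromstring(markup.strip())
    return [element.text for element in root.findall(path)]


if __name__ == '__main__':
    print(titles_by_xpath(SAMPLE))  # ['First product', 'Second product']
```

Only `<a>` elements that sit directly inside an `<h4>`, which itself sits directly inside a `<div>`, match. That's why the third card, which uses an `<h5>`, is skipped.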

_Remark: Selenium's API changes frequently, and updates can break dynamic scraping scripts, so make sure to check the latest release notes._

Conclusion

Dynamic web pages are everywhere, so there's a good chance you'll encounter them in your data extraction efforts. Remember that getting familiar with their structure will help you identify the best approach for retrieving your target information.

All methods we explored in this article come with their own faults and disadvantages. So, take a look at what ZenRows has to offer. The solution allows you to scrape dynamic websites using a simple API call. Try it for free today and save yourself time and resources.

Did you find the content helpful? Spread the word and share it on Twitter or LinkedIn.