Are you struggling to scrape data from paginated websites using Requests? Don't worry. It's a common challenge.

In this tutorial, you'll learn how to scrape paginated pages with the Requests and BeautifulSoup libraries. We'll start with classic navigation bars and then explore more complex scenarios with JavaScript-rendered pagination, including "load more" buttons and infinite scrolling.

You won't get stuck on the first page anymore. Let's go!

When You Have a Navigation Page Bar

Navigation bars are the most common pagination method. You can scrape a website with a navigation bar by following the next page link or by changing the page number in the URL.

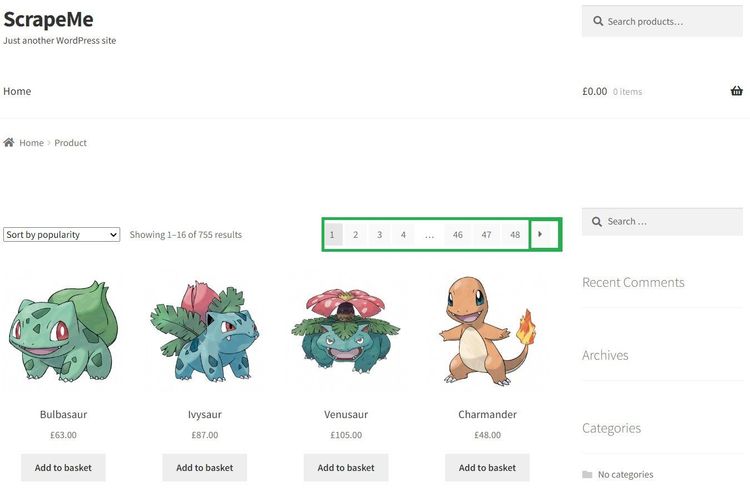

You'll use Python's Requests and BeautifulSoup libraries to extract product names and prices from ScrapeMe to demonstrate both methods.

Before you go any further, see the website layout below.

That website has 48 pages, and you're about to scrape it all. Let's get started!

Use the Next Page Link

The next page link method follows the link to each subsequent page and extracts content from it, repeating until there are no more pages.

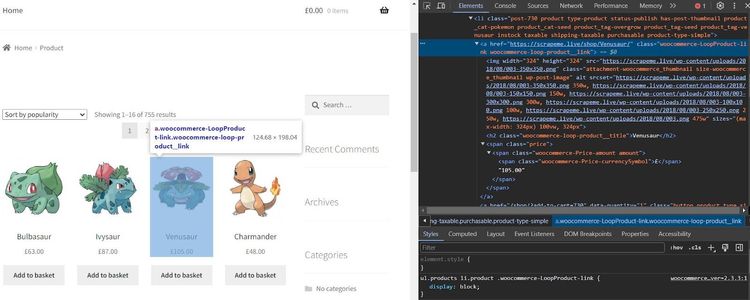

Before you begin, inspect the target page:

Each product is in a dedicated container. Let's start extracting the required data from them.

You'll put the scraping logic in a function that extracts the entire page's HTML. It requests the target URL using Python's Requests and parses its HTML content with BeautifulSoup:

```python
# import the required libraries
import requests
from bs4 import BeautifulSoup

target_url = 'https://scrapeme.live/shop/'

def pagination_scraper(url):
    # make request to the target url
    response = requests.get(url, verify=False)

    # validate the request
    if response.status_code != 200:
        return f'status failed with {response.status_code}'
    else:
        # parse the HTML content
        soup = BeautifulSoup(response.text, 'html.parser')
        print(soup)

pagination_scraper(target_url)
```

The code outputs the first page's HTML, as shown:

```html
<!DOCTYPE html>
<!-- ... -->
<title>Products – ScrapeMe</title>
<!-- ... -->
<li class="post-759 product type-product status-publish has-post-thumbnail product_cat-pokemon product_cat-seed product_tag-bulbasaur product_tag-overgrow product_tag-seed instock sold-individually taxable shipping-taxable purchasable product-type-simple">
<a class="woocommerce-LoopProduct-link woocommerce-loop-product__link" href="https://scrapeme.live/shop/Bulbasaur/">
<img alt="" class="attachment-woocommerce_thumbnail size-woocommerce_thumbnail wp-post-image" height="324" sizes="(max-width: 324px) 100vw, 324px" src="https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png" srcset="https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png 350w, https://scrapeme.live/wp-content/uploads/2018/08/001-150x150.png 150w, https://scrapeme.live/wp-content/uploads/2018/08/001-300x300.png 300w, https://scrapeme.live/wp-content/uploads/2018/08/001-100x100.png 100w, https://scrapeme.live/wp-content/uploads/2018/08/001-250x250.png 250w, https://scrapeme.live/wp-content/uploads/2018/08/001.png 475w" width="324" />
<h2 class="woocommerce-loop-product__title">Bulbasaur</h2>
<span class="price"><span class="woocommerce-Price-amount amount"><span class="woocommerce-Price-currencySymbol">£</span>63.00</span></span>
</a>
<a aria-label="Add “Bulbasaur” to your basket" class="button product_type_simple add_to_cart_button ajax_add_to_cart" data-product_id="759" data-product_sku="4391" data-quantity="1" href="/shop/?add-to-cart=759" rel="nofollow">Add to basket</a>
</li>
<!-- ...other content omitted for brevity-->
```

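Now, expand the function to extract the product names and prices from the first page only. The request uses verify=False, which skips SSL certificate verification to avoid SSL errors on this demo site. As a side effect, Requests emits an InsecureRequestWarning. Here's what your code should look like at this point: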

```python
# import the required libraries
import requests
from bs4 import BeautifulSoup

target_url = 'https://scrapeme.live/shop/'

def pagination_scraper(url):
    # make request to the target url
    response = requests.get(url, verify=False)

    # validate the request
    if response.status_code != 200:
        return f'status failed with {response.status_code}'
    else:
        # parse the HTML content
        soup = BeautifulSoup(response.text, 'html.parser')

        # obtain main product card
        product_card = soup.find_all('a', class_='woocommerce-loop-product__link')

        # iterate through product card to retrieve product names and prices
        for product in product_card:
            name_tag = product.find('h2', class_='woocommerce-loop-product__title')
            price_tag = product.find('span', class_='price')

            # validate the presence of the name and price tags and extract their texts
            name = name_tag.text if name_tag else 'Name tag not found'
            price = price_tag.text if price_tag else 'Price tag not found'
            print(f'name: {name}, price: {price}')

pagination_scraper(target_url)
```

This only scrapes the first page, as shown:

```
name: Bulbasaur, price: £63.00
name: Ivysaur, price: £87.00
#... 14 products omitted for brevity
```

You need to apply navigation logic to scrape from all pages. The concept is to iteratively obtain the next page link from its element and repeat the scraping logic for each link.

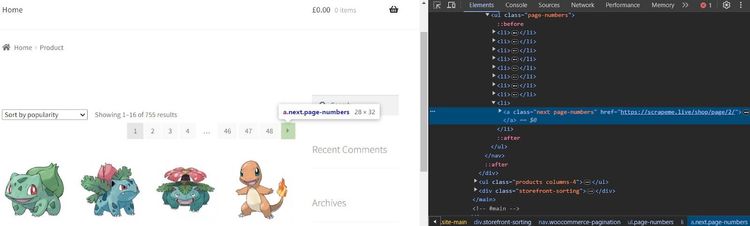

Let's quickly inspect the next link element (the arrow symbol) on the navigation bar:

So, the next page to the current one (page 1) is /page/2/.

The code below checks whether the next page link exists in the DOM. If it does, the function calls itself on that URL to repeat the entire scraping process. Otherwise, the recursion ends and extraction stops.

Expand the previous code like so:

```python
# import the required libraries
import requests
from bs4 import BeautifulSoup

def pagination_scraper(url):
    # ...

        # get the next page link
        link = soup.find('a', class_='next page-numbers')

        # check if next page exists and recurse if so
        if link:
            next_link = link.get('href')
            print(f'Scraping from: {next_link}')

            # recurse the function on the next page link if it exists
            pagination_scraper(next_link)
        else:
            print('No more next page')
```
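The snippet above passes the href straight to the next call. That works because ScrapeMe's pagination links appear to be absolute URLs. Some sites use relative links such as /shop/page/2/, which you would need to resolve first.

The sketch below is a standard-library-only illustration, not the tutorial's method. It uses html.parser instead of BeautifulSoup, and NextLinkParser and find_next_page_url are hypothetical names. It finds the first <a> whose classes include both next and page-numbers, then resolves its href against the current page URL with urllib.parse.urljoin:

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkParser(HTMLParser):
    """Records the href of the first <a> whose classes include next and page-numbers."""

    def __init__(self):
        super().__init__()
        self.href = None

    def handle_starttag(self, tag, attrs):
        if tag != 'a' or self.href is not None:
            return
        attributes = dict(attrs)
        classes = (attributes.get('class') or '').split()
        if 'next' in classes and 'page-numbers' in classes:
            self.href = attributes.get('href')


def find_next_page_url(html, current_url):
    """Return the absolute next-page URL, or None when there is no next link."""
    parser = NextLinkParser()
    parser.feed(html)
    if not parser.href:
        return None
    # resolve relative hrefs against the page they were found on
    return urljoin(current_url, parser.href)


sample = (
    '<nav><a class="page-numbers" href="/shop/page/3/">3</a>'
    '<a class="next page-numbers" href="/shop/page/2/">Next</a></nav>'
)
print(find_next_page_url(sample, 'https://scrapeme.live/shop/'))
# prints https://scrapeme.live/shop/page/2/
```

Because urljoin leaves absolute URLs unchanged, the same helper also works when the href is already absolute.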

Put everything together and execute the function:

```python
# import the required libraries
import requests
from bs4 import BeautifulSoup

target_url = 'https://scrapeme.live/shop/'

def pagination_scraper(url):
    # make request to the target url
    response = requests.get(url, verify=False)

    # validate the request
    if response.status_code != 200:
        return f'status failed with {response.status_code}'
    else:
        # parse the HTML content
        soup = BeautifulSoup(response.text, 'html.parser')

        # obtain main product card
        product_card = soup.find_all('a', class_='woocommerce-loop-product__link')

        # iterate through product card to retrieve product names and prices
        for product in product_card:
            name_tag = product.find('h2', class_='woocommerce-loop-product__title')
            price_tag = product.find('span', class_='price')

            # validate the presence of the name and price tags and extract their texts
            name = name_tag.text if name_tag else 'Name tag not found'
            price = price_tag.text if price_tag else 'Price tag not found'
            print(f'name: {name}, price: {price}')

        # get the next page link
        link = soup.find('a', class_='next page-numbers')

        # check if next page exists and recurse if so
        if link:
            next_link = link.get('href')
            print(f'Scraping from: {next_link}')

            # recurse the function on the next page link if it exists
            pagination_scraper(next_link)
        else:
            print('No more next page')

pagination_scraper(target_url)
```

Running the code scrapes the product names and prices from all 48 pages:

```
name: Bulbasaur, price: £63.00
name: Ivysaur, price: £87.00
#... 751 products omitted for brevity
name: Stakataka, price: £190.00
name: Blacephalon, price: £149.00
```

Bravo! You built a web scraper to extract content from a paginated website with navigation page bars.
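The recursive pagination_scraper() adds one stack frame per page. That's fine for 48 pages, but CPython's default recursion limit is 1000, so a much longer catalog could raise a RecursionError.

Below is a loop-based sketch of the same next-link idea, offered as an alternative rather than the tutorial's code. It makes a few assumptions:

- fetch_html and get_next_url are hypothetical callables you would supply, for instance a requests.get() wrapper and a next-link parser.
- The max_pages cap and the visited check are extra safeguards against runaway or circular pagination that the original code doesn't include.

```python
def crawl_pages(start_url, fetch_html, get_next_url, max_pages=100):
    """Follow next-page links with a loop instead of recursion.

    fetch_html(url) -> str and get_next_url(html, url) -> str or None are
    hypothetical callables supplied by the caller.
    """
    visited = []
    url = start_url
    # stop when there is no next link, a page repeats, or the cap is reached
    while url and url not in visited and len(visited) < max_pages:
        visited.append(url)
        html = fetch_html(url)
        # process the page here, e.g. extract product names and prices
        url = get_next_url(html, url)
    return visited


# offline demo: a fake three-page site where each "page" holds the next URL
fake_site = {
    'https://example.com/shop/': 'https://example.com/shop/page/2/',
    'https://example.com/shop/page/2/': 'https://example.com/shop/page/3/',
    'https://example.com/shop/page/3/': '',
}
pages = crawl_pages(
    'https://example.com/shop/',
    fetch_html=lambda url: fake_site[url],
    get_next_url=lambda html, url: html or None,
)
print(pages)
```

The loop keeps memory use flat regardless of page count. The visited list also stops the crawl if a site ever links back to a page it has already served.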

Now, let's jump into the other method: changing the page number in the URL.

Change the Page Number in the URL

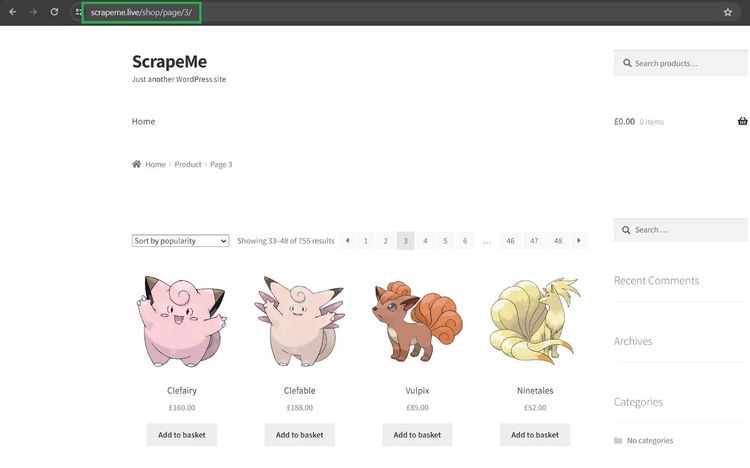

To change the page number in the URL, you'll append each page number to the target URL and scrape the pages one by one. For that, you need to understand how the website shows the current page in its URL.

For instance, see the URL format for the third page below:

The pagination format in the URL is target_url/page/page_number/. You'll need to programmatically increase the page number from 1 to the last page (48).
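Since the pattern is fixed, you can also generate every page URL up front. The hypothetical page_urls() helper below is a small sketch. It assumes the target URL already ends with a slash, as https://scrapeme.live/shop/ does, and it keeps the trailing slash shown in the URL format:

```python
def page_urls(target_url, first_page, last_page):
    """Build target_url/page/N/ for every N from first_page to last_page."""
    # assumes target_url already ends with '/', e.g. 'https://scrapeme.live/shop/'
    return [f'{target_url}page/{n}/' for n in range(first_page, last_page + 1)]


urls = page_urls('https://scrapeme.live/shop/', 1, 48)
print(len(urls))  # 48
print(urls[2])    # https://scrapeme.live/shop/page/3/
```

Note that range() excludes its stop value. That is why covering pages 1 through 48 needs range(1, 49), or last_page + 1 as above.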

First, define a function to extract content from one page using the following code. The function requests the target URL with the Requests library and parses the website's HTML using BeautifulSoup:

```python
# import the required libraries
import requests
from bs4 import BeautifulSoup

target_url = 'https://scrapeme.live/shop/'

def pagination_scraper(url):
    response = requests.get(url, verify=False)

    if response.status_code != 200:
        return f'status failed with {response.status_code}'
    else:
        # parse the HTML content
        soup = BeautifulSoup(response.text, 'html.parser')

        # obtain main product card
        product_card = soup.find_all('a', class_='woocommerce-loop-product__link')

        # iterate through product card to retrieve product names and prices
        for product in product_card:
            name_tag = product.find('h2', class_='woocommerce-loop-product__title')
            price_tag = product.find('span', class_='price')
            name = name_tag.text if name_tag else 'Name tag not found'
            price = price_tag.text if price_tag else 'Price tag not found'
            print(f'name: {name}, price: {price}')
```

Next, the code below sets the initial page number to one and increments it in a loop based on the specified range. It then appends the increased page number to the target URL to get the full URL.

Finally, pass the full page URL to the scraping function to extract content from each page.

```python
# set the initial page number to 1
page_count = 1

# scrape until the last page (1 to 48)
for _ in range(1, 49):
    # build the path for the current page number
    page_url = f'page/{page_count}/'

    # append the page path to the target URL
    full_url = target_url + page_url
    print(f'Scraping from: {full_url}')
    pagination_scraper(full_url)

    # increment the page number
    page_count += 1
```

Put everything together, and your final code should look like this:

```python
# import the required libraries
import requests
from bs4 import BeautifulSoup

target_url = 'https://scrapeme.live/shop/'

def pagination_scraper(url):
    response = requests.get(url, verify=False)

    if response.status_code != 200:
        return f'status failed with {response.status_code}'
    else:
        # parse the HTML content
        soup = BeautifulSoup(response.text, 'html.parser')

        # obtain main product card
        product_card = soup.find_all('a', class_='woocommerce-loop-product__link')

        # iterate through product card to retrieve product names and prices
        for product in product_card:
            name_tag = product.find('h2', class_='woocommerce-loop-product__title')
            name = name_tag.text if name_tag else 'Name tag not found'

            price_tag = product.find('span', class_='price')
            price = price_tag.text if price_tag else 'Price tag not found'

            print(f'name: {name}, price: {price}')

# set the initial page number to 1
page_count = 1

# scrape until the last page (1 to 48)
for _ in range(1, 49):
    # build the path for the current page number
    page_url = f'page/{page_count}/'

    # append the page path to the target URL
    full_url = target_url + page_url
    print(f'Scraping from: {full_url}')
    pagination_scraper(full_url)

    # increment the page number
    page_count += 1
```

This extracts product information from all the pages, as expected:

```
name: Bulbasaur, price: £63.00
name: Ivysaur, price: £87.00
#... 751 products omitted for brevity
name: Stakataka, price: £190.00
name: Blacephalon, price: £149.00
```

Your scraper now extracts content from a paginated website by changing its page number in the URL.
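Hard-coding 48 pages means the loop silently misses new pages if the catalog grows. A hedged alternative is to keep incrementing the page number until a page is missing. This assumes the site answers out-of-range page numbers with an error such as 404. Many WordPress sites do, but verify it for your target.

In the sketch below, scrape_page is a hypothetical callable: for example, a version of pagination_scraper() that returns True after scraping a 200 response and False otherwise.

```python
def scrape_until_missing(target_url, scrape_page, max_pages=1000):
    """Scrape target_url/page/N/ for N = 1, 2, ... until a page is missing.

    scrape_page(url) -> bool is a hypothetical callable: it should fetch and
    scrape one page and return False when the response isn't 200.
    """
    scraped = []
    for page in range(1, max_pages + 1):
        url = f'{target_url}page/{page}/'
        if not scrape_page(url):
            break  # assume the first missing page marks the end
        scraped.append(url)
    return scraped


# offline demo: pretend the shop has exactly 3 pages
existing = {f'https://scrapeme.live/shop/page/{n}/' for n in range(1, 4)}
found = scrape_until_missing(
    'https://scrapeme.live/shop/',
    scrape_page=lambda url: url in existing,
)
print(len(found))  # 3
```

The max_pages cap is a safety net in case a site returns 200 for every page number.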

But what if you encounter a website that uses JavaScript pagination to load content dynamically? You'll see how to handle that in the next section.

XHR Interception For JavaScript-Based Pagination

JavaScript-paginated websites load content dynamically as the user interacts with the page, usually through infinite scrolling or a "load more" button. This pattern is common on social media and e-commerce websites.

Scraping dynamic pagination with Python's Requests library is possible but requires more advanced techniques, such as intercepting the XHR requests the page makes. For more complex cases, a headless browser is often the better choice, especially if you're already familiar with Selenium web scraping.

You can use Python's Requests to intercept XHR requests manually, but this approach doesn't work well when the website's API calls require authentication.

Let's see how to handle infinite scrolling and "load more" buttons in the next sections using Python's Requests.

Infinite Scroll to "load more" Content

You'll use the Requests library to intercept XHR requests in this case. This involves tracking the requests in the network tab as you scroll down the page.

Our demo website is ScrapingClub. As the user scrolls, it loads pages as individual requests using AJAX.

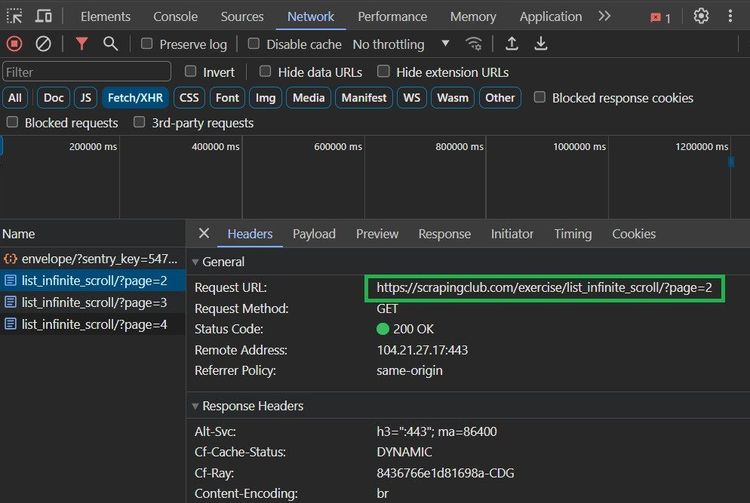

The page number doesn't change in the address bar, but you can see what happens under the hood by inspecting the Fetch/XHR requests in your browser's Network tab.

Open your browser's developer console and click "Network". Then click Fetch/XHR to view the AJAX calls as you scroll.

See the demonstration below. Pay attention to how the URL changes in the Network tab as you scroll:

So, a complete vertical scroll loads six pages, and each request uses the format ?page=page_number.

For instance, let's take a closer look at a request to the second page and see its full URL format:

The second page URL is https://scrapingclub.com/exercise/list_infinite_scroll/?page=2.
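Appending ?page= with an f-string works here because the base URL has no query string. If a target URL already carries parameters, a safer approach is to set or replace the page parameter.

Here's a sketch of that using only urllib.parse from the standard library; with_page is a hypothetical helper name:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_page(url, page):
    """Return url with its 'page' query parameter set to page."""
    parts = urlsplit(url)
    # keep existing parameters (repeated keys collapse to the last value)
    query = dict(parse_qsl(parts.query))
    # overwrite or add the page number
    query['page'] = str(page)
    return urlunsplit(parts._replace(query=urlencode(query)))


base = 'https://scrapingclub.com/exercise/list_infinite_scroll/'
print(with_page(base, 2))
# https://scrapingclub.com/exercise/list_infinite_scroll/?page=2
print(with_page('https://example.com/items/?sort=price&page=1', 3))
# https://example.com/items/?sort=price&page=3
```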

Let's leverage this insight to extract information from that website.

First, define a function to scrape the product names and prices. This function requests the target URL and parses its HTML with BeautifulSoup:

```python
# import the required libraries
import requests
from bs4 import BeautifulSoup

target_url = 'https://scrapingclub.com/exercise/list_infinite_scroll/'

def pagination_scraper(url):
    response = requests.get(url)

    if response.status_code != 200:
        return f'status failed with {response.status_code}'
    else:
        # parse the HTML content
        soup = BeautifulSoup(response.text, 'html.parser')
        print(soup)

pagination_scraper(target_url)
```

This outputs the initial page HTML:

```html
<!DOCTYPE html>
<html class="h-full">
<head>
<title>Scraping Infinite Scrolling Pages (Ajax) | ScrapingClub</title>
<!-- ... -->
</head>
<body>
<!-- ... -->
<div class="my-4">
<div class="grid grid-cols-1 gap-4 sm:grid-cols-3">
<div class="w-full rounded border post">
<a href="/exercise/list_basic_detail/90008-E/">
<img alt="" class="card-img-top img-fluid" src="/static/img/90008-E.jpg"/>
</a>
<div class="p-4">
<h4>
<a href="/exercise/list_basic_detail/90008-E/">Short Dress</a>
</h4>
<h5>$24.99</h5>
</div>
</div>
</div>
</div>
<!-- ...other content omitted for brevity -->
```

Next, extend the function to collect the product containers (each name and price sits inside a div with the p-4 class) and iterate through them:

```python
# import the required libraries
import requests
from bs4 import BeautifulSoup

def pagination_scraper(url):
    # ...

        # get the product containers
        product_containers = soup.find_all('div', class_='p-4')

        # iterate through the product containers and extract the product content
        for product in product_containers:
            name_tag = product.find('h4')
            price_tag = product.find('h5')
            name = name_tag.text if name_tag else ''
            price = price_tag.text if price_tag else ''
            print(f'name: {name}, price: {price}')
```

The next snippet simulates infinite scrolling. In a loop, it requests each page URL you saw in the Network tab (pages 1 to 6) and runs the scraping function on each one:

```python
# set an initial request count
request_count = 1

# scrape infinite scroll by intercepting the page numbers in the Network tab
for page in range(1, 7):
    # simulate the full URL format
    requested_page_url = f'{target_url}?page={request_count}'
    pagination_scraper(requested_page_url)

    # increment the request count
    request_count += 1
```

Here's the full code:

```python
# import the required libraries
import requests
from bs4 import BeautifulSoup

target_url = 'https://scrapingclub.com/exercise/list_infinite_scroll/'

def pagination_scraper(url):
    response = requests.get(url)

    if response.status_code != 200:
        return f'status failed with {response.status_code}'
    else:
        # parse the HTML content
        soup = BeautifulSoup(response.text, 'html.parser')

        # get the product containers
        product_containers = soup.find_all('div', class_='p-4')

        # iterate through the product containers and extract the product content
        for product in product_containers:
            name_tag = product.find('h4')
            price_tag = product.find('h5')
            name = name_tag.text if name_tag else ''
            price = price_tag.text if price_tag else ''
            print(f'name: {name}, price: {price}')

# set an initial request count
request_count = 1

# scrape infinite scroll by intercepting the page numbers in the Network tab
for page in range(1, 7):
    # simulate the full URL format
    requested_page_url = f'{target_url}?page={request_count}'
    pagination_scraper(requested_page_url)

    # increment the request count
    request_count += 1
```

The code extracts the names and prices of products from the whole website by simulating infinite scrolling from XHR interception:

```
name: Short Dress, price: $24.99
name: Patterned Slacks, price: $29.99
#... 56 other products omitted for brevity
name: T-shirt, price: $6.99
name: Blazer, price: $49.99
```

Congratulations! You just scraped a website with infinite scrolling using Python's Requests and BeautifulSoup. Let's see how to handle a scenario where there is a "load more" button.

Click on a Button to "load more" Content

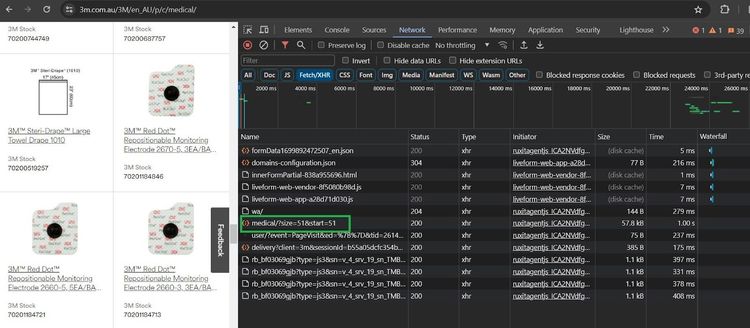

The approach here is to observe the Fetch/XHR requests in the Network tab whenever you click a "load more" button. Using 3M Medical as the target website, you'll scrape product names and image URLs by intercepting its Fetch/XHR with Python's Requests.

See a quick layout of the target website below:

Let's observe what happens behind the scenes when you hit the "load more" button.

Open the developer console on your browser (right-click and select "Inspect") and go to the "Network" tab. Then click "Fetch/XHR" to start monitoring the network traffic.

The Network traffic below shows that the website sends a request to medical/?size=51&start=51 whenever a user clicks the "load more" button.

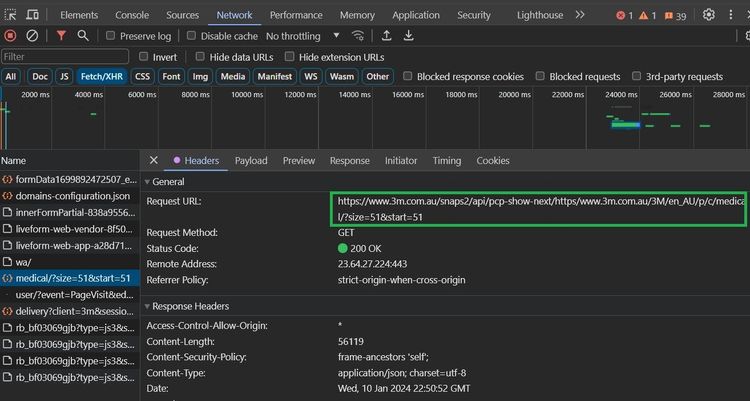

Click this particular request to see its full URL:

Look at the "Request URL" closely, and you'll see that it's an API request that loads 51 items at a time, and the response Content-Type is JSON. The Request URL combines the API endpoint, the website's category URL, and the size and start query parameters.
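To see which parts of the Request URL might change between clicks, you can split it with urllib.parse. The URL below is assembled from the API endpoint, category URL, and query string used in this tutorial; request_params is a hypothetical helper name:

```python
from urllib.parse import parse_qs, urlsplit


def request_params(request_url):
    """Return the size and start query parameters as integers."""
    query = parse_qs(urlsplit(request_url).query)
    return int(query['size'][0]), int(query['start'][0])


request_url = (
    'https://www.3m.com.au/snaps2/api/pcp-show-next/'
    'https://www.3m.com.au/3M/en_AU/p/c/medical?size=51&start=51'
)
size, start = request_params(request_url)
print(size, start)  # 51 51
```

From the parameter names, size looks like the batch size and start like the offset of the first item in the batch. This is an inference, not documented API behavior, so confirm it by clicking "load more" again and comparing the next Request URL.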

That's great! This information is all we need to scrape data from the website. The logic is to make an API call to the Request URL and extract product names and image URLs from the JSON response.

The following code concatenates the API base URL with the target URL to build the full endpoint. The dynamic URL then adds the size and start parameters so it matches the Request URL. The request also sends a User-Agent header to mimic a real browser.

Then define a scraping function that requests the API endpoint and extracts the entire JSON response to view the data structure:

```python
# import the required libraries
import requests

ua = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36'

xhr_api_base_url = 'https://www.3m.com.au/snaps2/api/pcp-show-next/'
target_url = 'https://www.3m.com.au/3M/en_AU/p/c/medical'
full_api_endpoint = xhr_api_base_url + target_url

# specify data size to query per request
data_size = 51

dynamic_url = f'{full_api_endpoint}?size={data_size}&start=51'

def pagination_scraper(api_endpoint):
    headers = {'User-Agent': ua}

    # make request and pass in the user agent headers
    response = requests.get(api_endpoint, headers=headers)

    # validate your request
    if response.status_code != 200:
        print(f'status failed with {response.status_code}')
    else:
        print(response.json())

pagination_scraper(dynamic_url)
```

This prints the raw JSON for one batch of 51 products. The result shows that the data you need is inside the items key:

```
{
    'queryId': '2cf99613-fb64-4130-a73b-f89c2781ec90',
    # ...,
    'items': [{
        'hasMoreOptions': False,
        'imageUrl': 'https://multimedia.3m.com/mws/media/11159J/red-dot-trace-prep-2236.jpg',
        'altText': 'Red-Dot Trace Prep 2236',
        'name': '3M™ Red Dot™ Trace Prep 2236, 36 ROLLS CTN',
        'url': 'https:://www.3m.com.au/3M/en_AU/p/d/v000161746/',
        # ... other products omitted for brevity,
    }]
}
```

Now that you know where the product information lies, replace the print() call in the else block with a more precise filter. The code below reads the items key and iterates through it to extract the required information:

```python
def pagination_scraper(api_endpoint):
    # ...
    else:
        # load the items from the JSON response
        data = response.json()['items']

        # extract data from the JSON response
        for product in data:
            product_name = product['name']
            image_url = product['imageUrl']
            print(f'name: {product_name}, image_url: {image_url}')
```
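The snippet above indexes the JSON directly. A response without an items key, or a product without name or imageUrl, would therefore raise a KeyError.

A more defensive sketch uses dict.get() with fallback values. Here, extract_products is a hypothetical helper that takes the already-decoded JSON (the result of response.json()). The second record in the sample payload is made up to show the fallback:

```python
def extract_products(payload):
    """Return (name, image_url) pairs from a decoded JSON response."""
    products = []
    # fall back to an empty list if the response has no 'items' key
    for product in payload.get('items', []):
        name = product.get('name', 'Name not found')
        image_url = product.get('imageUrl', 'Image URL not found')
        products.append((name, image_url))
    return products


# sample payload mirroring the structure printed earlier;
# the second item is a made-up record with no imageUrl
sample_payload = {
    'items': [
        {
            'name': '3M™ Red Dot™ Trace Prep 2236, 36 ROLLS CTN',
            'imageUrl': 'https://multimedia.3m.com/mws/media/11159J/red-dot-trace-prep-2236.jpg',
        },
        {'name': 'Product without an image'},
    ]
}
for name, image_url in extract_products(sample_payload):
    print(f'name: {name}, image_url: {image_url}')
```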

Your code should look like this at this stage:

```python
# import the required libraries
import requests

ua = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36'

xhr_api_base_url = 'https://www.3m.com.au/snaps2/api/pcp-show-next/'
target_url = 'https://www.3m.com.au/3M/en_AU/p/c/medical'
full_api_endpoint = xhr_api_base_url + target_url

# specify data size to query per request
data_size = 51

dynamic_url = f'{full_api_endpoint}?size={data_size}&start=51'

def pagination_scraper(api_endpoint):
    headers = {'User-Agent': ua}

    # make request and pass in the user agent headers
    response = requests.get(api_endpoint, headers=headers)

    # validate your request
    if response.status_code != 200:
        print(f'status failed with {response.status_code}')
    else:
        # load the items from the JSON response
        data = response.json()['items']

        # extract data from the JSON response
        for product in data:
            product_name = product['name']
            image_url = product['imageUrl']
            print(f'name: {product_name}, image_url: {image_url}')

pagination_scraper(dynamic_url)
```

Running that code outputs only one batch of 51 products:

```
name: 3M™ Red Dot™ Trace Prep 2236, 36 ROLLS CTN, image_url: https://multimedia.3m.com/mws/media/11159J/red-dot-trace-prep-2236.jpg
name: 3M™ Littmann® Master Cardiology™ Stethoscope, image_url: https://multimedia.3m.com/mws/media/10796J/3mtm-littmannr-master-cardiologytm-stethoscope-model-2160.jpg
name: 3M™ Steri-Strip™ Blend Tone Skin Closures (Non-reinforced) - Tan, B1550, 3 mm x 75 mm, 5 strips/envelope, 5 strips/envelope, 50 enlv/box, 4 box/case, image_url: https://multimedia.3m.com/mws/media/987200J/b1550-steri-strip-blend-tone-skin-closures.jpg
#... other products omitted for brevity
```

But you want to get more products. To scale up, the code below keeps the batch size at 51 and moves the start offset forward by 51 after each request, which mimics clicking the "load more" button repeatedly. It stops after the maximum number of requests (4). This assumes start is the index of the first item in each batch, which is what the Request URL suggests.

The dynamic URL is now inside the loop, so each iteration requests the next batch:

```python
# set the maximum number of requests to make per execution
max_requests = 4

# offset of the first item to request (matches start=51 in the Request URL)
start = 51

# stop once the maximum number of requests is reached
for _ in range(max_requests):
    # request data_size items beginning at the current offset
    dynamic_url = f'{full_api_endpoint}?size={data_size}&start={start}'

    # run the scraper function on the dynamic URL
    pagination_scraper(dynamic_url)

    # move the offset forward by one batch
    start += data_size
```

Now, let's put it all together:

```python
# import the required libraries
import requests

ua = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36'

xhr_api_base_url = 'https://www.3m.com.au/snaps2/api/pcp-show-next/'
target_url = 'https://www.3m.com.au/3M/en_AU/p/c/medical'
full_api_endpoint = xhr_api_base_url + target_url

# specify data size to query per request
data_size = 51

def pagination_scraper(api_endpoint):
    headers = {'User-Agent': ua}

    # make request and pass in the user agent headers
    response = requests.get(api_endpoint, headers=headers)

    # validate your request
    if response.status_code != 200:
        print(f'status failed with {response.status_code}')
    else:
        # load the items from the JSON response
        data = response.json()['items']

        # extract data from the JSON response
        for product in data:
            product_name = product['name']
            image_url = product['imageUrl']
            print(f'name: {product_name}, image_url: {image_url}')

# set the maximum number of requests to make per execution
max_requests = 4

# offset of the first item to request (matches start=51 in the Request URL)
start = 51

# stop once the maximum number of requests is reached
for _ in range(max_requests):
    # request data_size items beginning at the current offset
    dynamic_url = f'{full_api_endpoint}?size={data_size}&start={start}'

    # run the scraper function on the dynamic URL
    pagination_scraper(dynamic_url)

    # move the offset forward by one batch
    start += data_size
```

Running the code extracts the product names and image URLs like so:

```
name: 3M™ Red Dot™ Repositionable Monitoring Electrode 2660-3, 3EA/BAG 200BG/CASE,
image_url: https://multimedia.3m.com/mws/media/111602J/3m-red-dot-repositionable-monitoring-electrode-part-2660-3-front.jpg
#... 151 products omitted for brevity
name: 3M™ Coban™ 2 Layer Comfort Foam Layer 20014, 18 Rolls/Box, 2 Box/Case,
image_url: https://multimedia.3m.com/mws/media/986178J/coban-2-layer-for-lymphoedema.jpg
```
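Fixing max_requests at 4 means guessing how many batches exist. A hedged alternative is to advance the start offset by one batch per request and stop when a batch comes back with fewer items than requested, which usually signals the end of the results. This assumes the API behaves that way, so confirm it in the Network tab.

fetch_batch is a hypothetical callable, for example one that calls requests.get() on the dynamic URL and returns response.json().get('items', []). The default start=51 mirrors the captured Request URL.

```python
def collect_all_items(fetch_batch, size=51, start=51, max_requests=50):
    """Request batches at start, start + size, ... until a short batch.

    fetch_batch(size, start) -> list is a hypothetical callable supplied
    by the caller; max_requests is a safety cap on the number of calls.
    """
    items = []
    for _ in range(max_requests):
        batch = fetch_batch(size, start)
        items.extend(batch)
        if len(batch) < size:
            break  # a short (or empty) batch suggests nothing is left
        start += size
    return items


# offline demo: a fake catalog of 200 items served in slices
catalog = [f'product-{n}' for n in range(200)]
all_items = collect_all_items(lambda size, start: catalog[start:start + size])
print(len(all_items))  # 149
```

In the demo, the batches start at offsets 51, 102, and 153. The third batch holds only 47 items, so the loop stops after 149 items.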

You've now built a web scraper that intercepts Fetch/XHR requests from a "load more" button using Python's Requests module. That was easy!

However, the biggest challenge you must handle while scraping is getting blocked.

Getting Blocked when Scraping Multiple Pages with Requests

The risk of getting blocked increases when you scrape multiple pages with Python's Requests, and some websites block you right away, even on a single page.

For instance, you can try to access a product category on Skechers with the Requests library, as shown:

```python
import requests

url = 'https://www.skechers.com/men/shoes/'

# make a plain request without custom headers
response = requests.get(url)

# validate your request
if response.status_code != 200:
    print(f'status failed with error {response.status_code}')
else:
    print(response.status_code)
```

The request gets blocked with a 403 Forbidden error:

```
status failed with error 403
```

Avoiding these blocks is crucial to extracting the information you need.

You can add proxies in Python Requests to avoid IP bans or mimic a real browser by rotating the User Agent. However, neither method guarantees access to protected websites.
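Rotating User Agents and proxies can be as simple as cycling through lists and passing the result to requests.get() through its headers and proxies arguments.

Here's a minimal sketch, assuming you supply your own values. The User-Agent strings and proxy addresses below are placeholders, and next_request_options is a hypothetical helper:

```python
from itertools import cycle

# placeholder values: replace with real User-Agent strings and proxies you control
user_agents = cycle([
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15',
])
proxies = cycle([
    'http://proxy1.example.com:8080',
    'http://proxy2.example.com:8080',
])


def next_request_options():
    """Return keyword arguments for requests.get() with the next UA and proxy."""
    proxy = next(proxies)
    return {
        'headers': {'User-Agent': next(user_agents)},
        # Requests chooses the proxy entry matching the target URL's scheme
        'proxies': {'http': proxy, 'https': proxy},
    }


# usage (not run here): requests.get(url, **next_request_options())
first = next_request_options()
second = next_request_options()
print(first['proxies']['https'], second['proxies']['https'])
```

Each call moves both cycles forward, so consecutive requests go out with a different User-Agent and proxy pair.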

A solution that works regardless of the anti-bot sophistication and integrates with Requests is ZenRows, an all-in-one scraping API that allows you to extract data from any website with premium proxies, anti-CAPTCHA, and more.

For example, you can integrate ZenRows in the previous code like so:

```python
import requests

url = 'https://www.skechers.com/men/shoes/'

params = {
    'url': url,
    'apikey': '<YOUR_ZENROWS_API_KEY>',
    'js_render': 'true',
    'premium_proxy': 'true',
}

response = requests.get('https://api.zenrows.com/v1/', params=params)

if response.status_code != 200:
    print(f'status failed with {response.status_code}')
else:
    print(f'successful with {response.status_code} response')
```

This accesses the protected website successfully:

```
successful with 200 response
```

Try ZenRows for free and bypass any anti-bot easily!

Conclusion

In this Requests pagination tutorial, you've learned how to scrape content from websites with navigation bars and JavaScript-based pagination using Python's Requests library.

Now you know:

- The next page link and URL page number methods of scraping content from multiple website pages with a navigation bar.

- How to use Requests to scrape a website's content with an infinite scroll by intercepting its XHR requests.

- How to use XHR interception with the Requests library to extract content loaded by a "load more" button.

Put what you've learned into regular practice. But remember that regardless of how sophisticated your scraper is, many modern websites will still detect and block it. Bypass anti-bot detection with ZenRows and scrape without limitations. Try ZenRows for free!
