Is it possible to avoid bot detection with Selenium? Yes, it is.

We know how annoying anti-bot protections can get, but there are solutions you can use to get around them. We'll cover the nine most important methods to bypass Selenium detection:

- Do IP rotation / Use proxies.

- Disable the automation indicator WebDriver Flags.

- Rotate HTTP Header information and user agent.

- Avoid patterns.

- Remove JavaScript signature.

- Use cookies.

- Follow the page flow.

- Use a browser extension.

- Use CAPTCHA-solving services.

How Do Anti-Bots Work?

Bot detection is the process of scanning and filtering network traffic to detect and block malicious bots. Anti-bot providers like Cloudflare, PerimeterX and Akamai continually look for new ways to detect bots, drawing on signs of a headless browser, header data, and behavioral patterns.

When a client requests a web page, information about the nature of the request and the requester is sent to the server to be processed. Active and passive detection are the two main methods an anti-bot uses to detect bot activities. Check out our article on bot detection to learn more.

How Do Websites Detect Selenium?

Selenium is among the popular tools in the field of web scraping. As a result, websites with strict anti-bot policies try to identify its unique attributes before blocking access to their resources.

Selenium bot detection mainly works by testing for specific JavaScript variables that appear while Selenium is running. Bot detectors often check for the words "Selenium" or "WebDriver" in any of the variables on the window object, as well as document variables named $cdc_ and $wdc_.

They also check for the values of automation indicator flags in the WebDriver, like useAutomationExtension and navigator.webdriver. These attributes are enabled by default to allow a better testing experience and as a security feature.
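
To make these checks concrete, here's a rough sketch of a probe you could run against your own Selenium session to see what a detector might see. The names DETECTION_PROBE_JS, probe_automation_signals, and looks_automated are illustrative assumptions, and real anti-bot scripts are far more elaborate than this.

```python
# JavaScript executed inside the page. It mirrors the kinds of checks
# described above; it is not taken from any real anti-bot product.
DETECTION_PROBE_JS = """
const markers = ['cdc_', 'wdc_', 'selenium', 'webdriver'];
const hits = (obj) => Object.keys(obj).filter(
  (key) => markers.some((m) => key.toLowerCase().includes(m))
);
return {
  navigatorWebdriver: navigator.webdriver === true,
  documentKeys: hits(document),
  windowKeys: hits(window),
};
"""


def probe_automation_signals(driver):
    """Run the probe in the current page and return its findings as a dict."""
    return driver.execute_script(DETECTION_PROBE_JS)


def looks_automated(signals):
    """Return True if any of the probed signals gives the session away."""
    return bool(
        signals.get("navigatorWebdriver")
        or signals.get("documentKeys")
        or signals.get("windowKeys")
    )
```

After loading a page with driver.get(...), calling looks_automated(probe_automation_signals(driver)) gives a quick indication of whether the most basic signals are still exposed.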

Top Measures to Avoid Bot Detection with Selenium

Bot detection nowadays has become a headache for web scrapers, but there are ways to get around it. Here are some of the techniques you can use to avoid bot detection using Python with Selenium:

1. IP Rotation / Proxy

One of the major ways most bot detectors work is by inspecting IP behaviors. Web servers can draw a pattern from an IP address by maintaining a log for every request.

They use Web Application Firewalls (WAFs) to track IP address activity and blacklist suspicious IPs. Repetitive, programmatic requests to the server can hurt an IP's reputation and may get it blocked permanently.

To avoid bot detection, you can use IP rotation or proxies with Selenium. Proxies act as an intermediary between the requester and the server.

The responding server interprets the request as coming from the proxy server, not the client's computer. As a result, it won't be able to draw a pattern for behavioral analysis.

# Install selenium with pip install selenium

from selenium import webdriver

# Define the proxy server

PROXY = "IpOfTheProxy:PORT"

# Set ChromeOptions()

options = webdriver.ChromeOptions()

# Add the proxy as argument

options.add_argument("--proxy-server=%s" % PROXY)

driver = webdriver.Chrome(options=options)

# Send the request

driver.get("https://www.google.com")

IP rotation takes this a step further: it spreads requests across a number of proxy servers to disguise the requesting client, so no single IP address builds up a recognizable pattern.
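
As a minimal sketch of rotation, the snippet below cycles through a small proxy pool and opens a fresh browser per request. The proxy addresses are placeholders from a documentation-only IP range, and the helper names (proxy_argument, make_driver, fetch_with_rotation) are assumptions made for this example.

```python
from itertools import cycle

# Placeholder proxy endpoints (documentation-only IP range); replace them
# with proxies you actually have access to.
PROXIES = ["203.0.113.10:8080", "203.0.113.11:8080", "203.0.113.12:8080"]


def proxy_argument(proxy):
    """Build the Chrome command-line argument for a given proxy."""
    return f"--proxy-server={proxy}"


def make_driver(proxy):
    """Start Chrome behind the given proxy."""
    # Imported here so the helpers above can be used without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_argument(proxy_argument(proxy))
    return webdriver.Chrome(options=options)


def fetch_with_rotation(urls, proxies=PROXIES, driver_factory=make_driver):
    """Load each URL through the next proxy in the pool.

    Returns a list of (url, proxy, page_source) tuples.
    """
    pool = cycle(proxies)
    results = []
    for url in urls:
        proxy = next(pool)
        driver = driver_factory(proxy)
        try:
            driver.get(url)
            results.append((url, proxy, driver.page_source))
        finally:
            # Always release the browser, even if the page fails to load.
            driver.quit()
    return results
```

Starting a new browser per URL is slow but keeps the example simple. In practice you might reuse one browser per proxy for several requests.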

2. Disabling the Automation Indicator WebDriver Flags

While web scraping with Selenium, the WebDriver-controlled browser exposes information that tells the website the session is automated.

The WebDriver is expected to have properties like window.navigator.webdriver, mandated by the W3C WebDriver Specification to allow better testability and as a security feature. This results in getting detected by the web servers, which leads to being flagged or denied access.

With execute_cdp_cmd(cmd, cmd_args), you can execute Chrome DevTools Protocol (CDP) commands from Selenium. That makes it possible to change the default flags.

from selenium import webdriver

# Create Chromeoptions instance

options = webdriver.ChromeOptions()

# Adding argument to disable the AutomationControlled flag

options.add_argument("--disable-blink-features=AutomationControlled")

# Exclude the collection of enable-automation switches

options.add_experimental_option("excludeSwitches", ["enable-automation"])

# Turn off useAutomationExtension

options.add_experimental_option("useAutomationExtension", False)

# Start Chrome with the options defined above

driver = webdriver.Chrome(options=options)

# Redefine navigator.webdriver as undefined on every new document, so the override survives the navigation below

driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {"source": "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"})

driver.get("https://www.google.com")

3. Rotating HTTP Header Information and User-Agent

The HTTP header contains information about the browser, the operating system, the request type, the user language, the referrer, the device type, and so on.

{

"Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",

"Accept-Encoding": "gzip, deflate",

"Accept-Language": "en-US,en;q=0.9",

"Host": "httpbin.org",

"Upgrade-Insecure-Requests": "1",

"User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",

}

The values of some of these attributes are different for headless browsers by default, and anti-bots identify these discrepancies to distinguish between legitimate visitors and bots. To mitigate detection, rotating user agents in Selenium can be helpful.

Here's how we used rotating HTTP header information to avoid bot detection with Selenium:

from selenium import webdriver

driver = webdriver.Chrome()

# Initializing a list with two Useragents

useragentarray = [

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36",

]

for i in range(len(useragentarray)):

    # Setting user agent iteratively as Chrome 108 and 107

    driver.execute_cdp_cmd("Network.setUserAgentOverride", {"userAgent": useragentarray[i]})

    print(driver.execute_script("return navigator.userAgent;"))

    driver.get("https://www.httpbin.org/headers")

driver.close()
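
The example above only changes the user agent. As a hedged sketch of rotating other headers as well, the snippet below picks a random profile and applies it through the CDP commands Network.setUserAgentOverride and Network.setExtraHTTPHeaders. The profiles and the helper names (HEADER_PROFILES, pick_header_profile, apply_header_profile) are assumptions for illustration, not a vetted set of values.

```python
import random

# Illustrative header profiles; keep the values consistent with each other
# (for example, a Windows user agent with a plausible language setting).
HEADER_PROFILES = [
    {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
        "Accept-Language": "en-US,en;q=0.9",
    },
    {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36",
        "Accept-Language": "en-GB,en;q=0.8",
    },
]


def pick_header_profile(rng=random):
    """Return a copy of a randomly chosen header profile."""
    return dict(rng.choice(HEADER_PROFILES))


def apply_header_profile(driver, profile):
    """Apply a profile to a Chromium-based Selenium driver through CDP."""
    headers = dict(profile)  # work on a copy so the caller's dict is untouched
    user_agent = headers.pop("User-Agent", None)
    if user_agent:
        driver.execute_cdp_cmd("Network.setUserAgentOverride", {"userAgent": user_agent})
    if headers:
        driver.execute_cdp_cmd("Network.enable", {})
        driver.execute_cdp_cmd("Network.setExtraHTTPHeaders", {"headers": headers})
```

Call apply_header_profile(driver, pick_header_profile()) before driver.get(...) so that the page request itself carries the chosen headers.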

4. Avoid Patterns

One of the major mistakes that automation testers make is to create a bot that acts on a fixed schedule. Humans aren't as consistent as bots, so it's fairly easy for anti-bots to spot a bot's regular patterns.

It's also a common mistake to rapidly navigate from page to page, which humans don't do.

Randomizing time frames, using waits, scrolling slower, and generally trying to mimic human behavior improve your chances of getting past bot detectors. Here's how to bypass Selenium detection by avoiding patterns:

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.wait import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

import time

driver = webdriver.Chrome()

driver.get('https://scrapingcourse.com/ecommerce/')

# Wait 3.5 seconds on the webpage before trying anything

time.sleep(3.5)

# Wait for 3 seconds until finding the element

wait = WebDriverWait(driver, 3)

element = wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, '.woocommerce-loop-product__title')))

print('Product name: ' + element.text)

# Wait 4.5 seconds before scrolling down 700px

time.sleep(4.5)

driver.execute_script('window.scrollTo(0, 700)')

# Wait 2 seconds before clicking a link

time.sleep(2)

wait = WebDriverWait(driver, 3)

wait.until(EC.element_to_be_clickable((By.CSS_SELECTOR, 'a.woocommerce-loop-product__link'))).click()

# wait for 5 seconds until finding the element

wait = WebDriverWait(driver, 5)

element = wait.until(EC.presence_of_element_located((By.CLASS_NAME, 'woocommerce-product-details__short-description')))

print('Description: ' + element.text)

# Close the driver after 3 seconds

time.sleep(3)

driver.close()
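
The script above still uses fixed delays, which is itself a pattern. One possible way to randomize them is sketched below. The helper names (human_pause, scroll_steps, scroll_like_a_human) and the default ranges are assumptions chosen for illustration.

```python
import random
import time


def human_pause(min_seconds=1.5, max_seconds=4.5, sleep=time.sleep, rng=random):
    """Sleep for a random duration within the given range and return it."""
    delay = rng.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay


def scroll_steps(total_px, min_step=80, max_step=220, rng=random):
    """Split one long scroll into uneven steps that add up to total_px."""
    steps = []
    remaining = total_px
    while remaining > 0:
        step = min(remaining, rng.randint(min_step, max_step))
        steps.append(step)
        remaining -= step
    return steps


def scroll_like_a_human(driver, total_px=700, sleep=time.sleep, rng=random):
    """Scroll down in small, irregular steps with short random pauses."""
    for step in scroll_steps(total_px, rng=rng):
        driver.execute_script("window.scrollBy(0, arguments[0]);", step)
        human_pause(0.1, 0.6, sleep=sleep, rng=rng)
```

In the script above, each time.sleep(...) call could be swapped for human_pause(), and the single window.scrollTo call for scroll_like_a_human(driver).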

5. Remove JavaScript Signature

One of the ways bot detectors like FingerprintJS and Imperva work is by inspecting the JavaScript signature inside WebDrivers, like ChromeDriver and GeckoDriver.

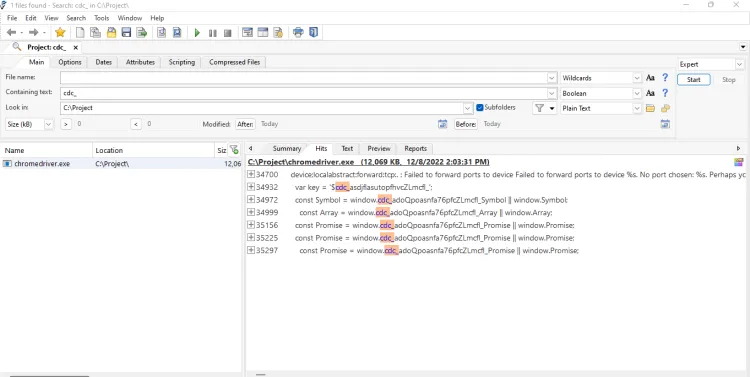

This signature is stored in the cdc_ variable. Websites look for the cdc_ variable in the document before denying access.

We'll use a tool called Agent Ransack to search for this signature in the chromedriver.exe binary file. It works the same way for WebDrivers like GeckoDriver and EdgeDriver.

As you can see, the signature is $cdc_asdjflasutopfhvcZLmcfl_. In order to evade detection, we can change "cdc" to another string of the same length, such as "abc". First, we need to open the binary of ChromeDriver. We'll use the Vim editor to open and edit the binary file.

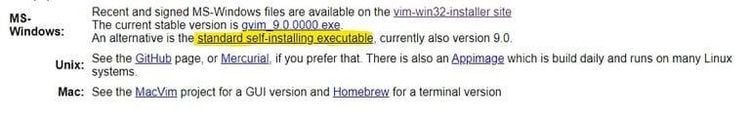

You can download Vim here. Click on "standard self-installing executable" for Windows. The program comes pre-installed by default for Mac and Linux.

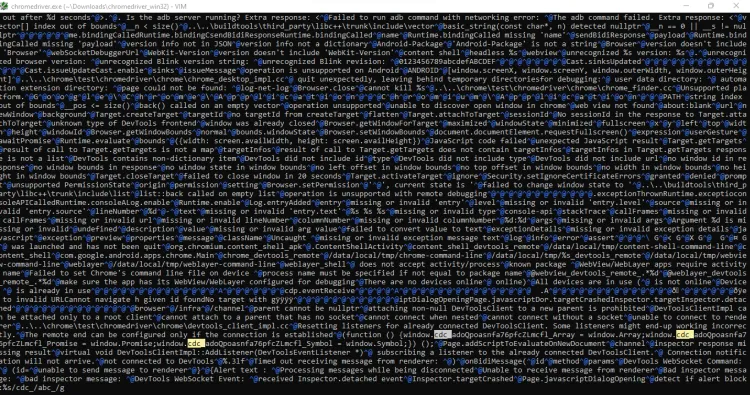

After installation, open CMD, navigate to the Vim installation folder, and run the following command:

vim.exe <pathTo>\chromedriver.exe

Then type :%s/cdc_/abc_/g and hit Enter to search and replace cdc_ with abc_.

Next, type :wq and hit Enter to save the changes and exit Vim.

Some files might be generated with ~ at the end of the file names. Delete these files.

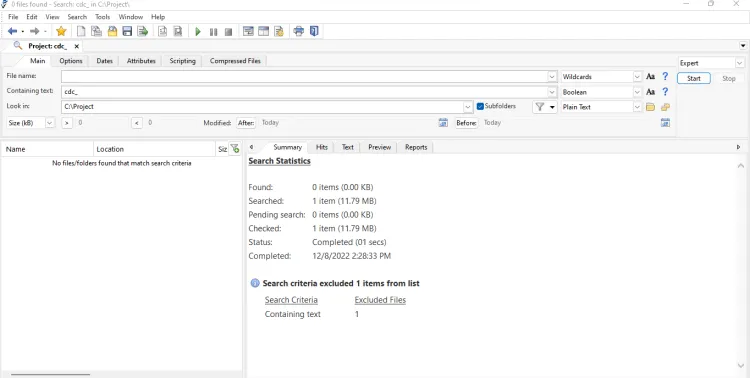

Now, let's try the same search using Agent Ransack.

As you can see now, the cdc_ signature variable isn't found in the file.

6. Using Cookies

When trying to scrape data from social media platforms or other sites that require some form of authentication, it's very common to log in repeatedly.

This iterative authentication request raises the alarm, and the account might be blocked or face a CAPTCHA or JavaScript challenge for verification.

In order to avoid this, we can use cookies. After logging in once, we can collect the login session cookies to reuse them in the future.
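
A minimal sketch of this idea stores the cookies as JSON after a manual or scripted login and loads them in later sessions. The function names and the cookie file path are assumptions. Selenium's get_cookies() and add_cookie() do the actual work.

```python
import json
from pathlib import Path


def save_cookies(driver, path):
    """Write the current session's cookies to a JSON file."""
    Path(path).write_text(json.dumps(driver.get_cookies()))


def load_cookies(driver, path):
    """Add previously saved cookies to the current session.

    The driver must already be on a page of the cookies' domain,
    otherwise Selenium rejects them. Returns the number of cookies added.
    """
    cookies = json.loads(Path(path).read_text())
    for cookie in cookies:
        driver.add_cookie(cookie)
    return len(cookies)
```

A typical flow is to log in once and call save_cookies(driver, "cookies.json"). In later runs, open the site's home page, call load_cookies(driver, "cookies.json"), and refresh the page to pick up the logged-in session.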

7. Follow The Page Flow

When interacting with a website, it's important to follow the same flow that a human user would.

That means clicking on links, filling out forms, and navigating the website naturally.

Following the page flow can make it less obvious that you're performing automation.

8. Using a Browser Extension

Another way to bypass Selenium detection is by using a browser extension, like uBlock Origin, to block JavaScript challenges and CAPTCHAs from being loaded on the page. That can help reduce the chances of your bot being detected by these challenges.

uBlock Origin is a free, open-source browser extension designed to block unwanted content (such as ads, tracking scripts and malware) from being loaded on web pages.

It can also be configured to block JavaScript challenges and CAPTCHAs.

To use uBlock Origin to avoid Selenium bot detection, you'll need to install the extension in your browser and configure it to block JavaScript challenges and CAPTCHAs.

You can then use Selenium to interact with the browser as you normally would, and uBlock Origin will automatically block any unwanted content from being loaded on the page.

It's important to note that uBlock Origin may not work with all websites, and it may not be able to block all types of JavaScript challenges and CAPTCHAs.

However, it can be a useful tool for reducing the chances of your bot being detected by these challenges, especially when combined with other methods.
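
As a rough sketch, a packaged extension (a .crx file) can be attached to Chrome through ChromeOptions.add_extension. The file path and the helper name build_options_with_extension are assumptions for this example, and you'd need to obtain the extension package yourself.

```python
from pathlib import Path


def build_options_with_extension(extension_path):
    """Return ChromeOptions that load the given packaged extension (.crx)."""
    path = Path(extension_path)
    if not path.is_file():
        raise FileNotFoundError(f"Extension package not found: {path}")

    # Imported here so the path check above works without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_extension(str(path))
    return options
```

You would then start the browser with webdriver.Chrome(options=build_options_with_extension("ublock_origin.crx")), where the file name is a placeholder.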

9. Using a CAPTCHA Solving Service With Selenium

If you need to solve CAPTCHAs as part of your bot's workflow, you can use a CAPTCHA-solving service like 2Captcha or Anti-Captcha. These services use real people to solve CAPTCHAs, reducing the chances of your bot being detected as a bot.

When using a CAPTCHA-solving service with Selenium, it's important to use it sparingly in order to avoid bot detection. That means that you should only use the service when necessary and avoid using it for every CAPTCHA you encounter.

There are several reasons for this. First, using the service too frequently can raise red flags and increase the chances of your bot being detected. Websites may be more likely to suspect that you're using a bot if you use a CAPTCHA-solving service for every single CAPTCHA you encounter.

Second, using the service too frequently can be costly. Many CAPTCHA-solving services charge a fee for each CAPTCHA that they solve, so using the service too frequently can significantly increase the cost of running your bot.

To avoid these issues, it's a good idea to use the CAPTCHA-solving service only when necessary. It's best to use other methods, such as natural delays or browser extensions, to avoid CAPTCHAs when possible.

That can help to reduce the chances of your bot being detected and can also help to reduce the overall cost of running your bot.
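
One way to enforce "only when necessary" in code is to cap the number of paid solves per run. The sketch below is an assumption-laden illustration: CaptchaBudget is a hypothetical helper, and solver stands for whatever callable wraps your provider's client, since no specific service API is shown here.

```python
class CaptchaBudget:
    """Caps how many CAPTCHAs are sent to a paid solving service in one run."""

    def __init__(self, max_solves, cost_per_solve):
        self.max_solves = max_solves
        self.cost_per_solve = cost_per_solve
        self.used = 0

    def can_solve(self):
        """Return True while the run is still within its solve budget."""
        return self.used < self.max_solves

    def solve(self, solver, challenge):
        """Forward the challenge to `solver` if budget remains, else return None.

        `solver` is a hypothetical callable that wraps your provider's client.
        """
        if not self.can_solve():
            return None
        self.used += 1
        return solver(challenge)

    @property
    def spent(self):
        """Total cost of the solves used so far."""
        return self.used * self.cost_per_solve
```

When solve() returns None, the bot can back off, switch proxies, or skip the page instead of paying for another solve.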

Alternatives to Base Selenium

Using Selenium to avoid bot detection is quite straightforward, but other tools can be just as effective, such as scraping APIs, undetected_ChromeDriver, and Cloudscraper.

ZenRows API

ZenRows' API-based anti-bot bypassing solution is one of the highest-rated solutions for web crawlers and web scrapers.

You make a single API call, and it handles a range of anti-bot measures for you with features like rotating proxies, custom headers, WAF bypass, and CAPTCHA bypass tools.

# pip install requests

import requests

# Unique API key and URL of page you want to scrape

url = 'https://nowsecure.nl/'

apikey = 'YOUR_API_KEY'  # Replace with your own API key

params = {

'url': url,

'apikey': apikey,

'js_render': 'true',

'antibot': 'true',

'premium_proxy': 'true',

}

response = requests.get('https://api.zenrows.com/v1/', params=params)

print(response.text)

Undetected_ChromeDriver

Undetected_ChromeDriver is an open-source project that makes Selenium ChromeDriver "look" human. It's an optimized Selenium ChromeDriver patch that lets you bypass anti-bot services like DataDome, Distill Network, Imperva, and Botprotect.io.

It bypasses bot detection measures like JavaScript challenges more effectively than ordinary Selenium. JavaScript challenges usually serve as a means to mitigate cyberattacks, like DDOS attacks, and also prohibit scraping.

The challenge consists of JavaScript code that the server sends to the browser to run. Legitimate browsers have a JavaScript stack and will understand and pass the challenge.

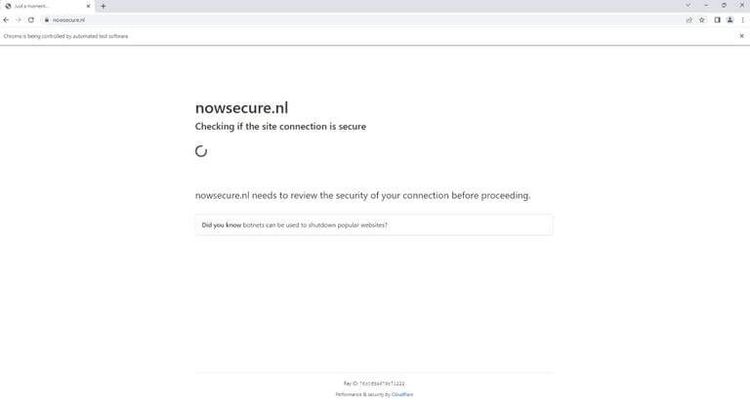

from selenium import webdriver

driver = webdriver.Chrome()

driver.get("https://nowsecure.nl")

As you can see, Selenium fails to bypass the security in the above website, so let's give undetected_ChromeDriver a try.

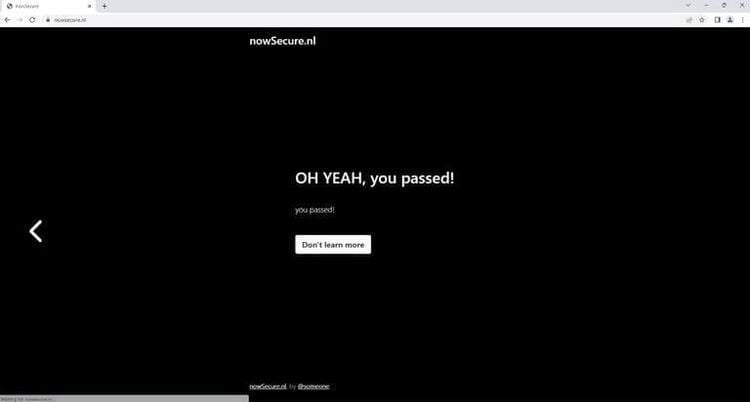

# pip install undetected-chromedriver

import undetected_chromedriver as uc

# Initializing driver

driver = uc.Chrome()

# Try accessing a website with antibot service

driver.get("https://nowsecure.nl")

Awesome! We were able to avoid the bot detectors with undetected_ChromeDriver.

Undetected_ChromeDriver also works on Brave Browser and many other Chromium-based browsers. For more, you can check out this project on GitHub.

Undetected_ChromeDriver is able to bypass some bot detection measures better than base Selenium. However, it still falls short when it comes to bypassing strong anti-bot protections like Cloudflare and Akamai.

Cloudscraper

Cloudflare is one of the most popular anti-bot solution providers in the market. Cloudscraper is a Python module, implemented with requests, that bypasses Cloudflare's anti-bot page (also known as "I'm Under Attack Mode" or IUAM).

Cloudflare employs active and passive bot detection techniques. For passively detecting bots, Cloudflare maintains a catalog of devices, IP addresses, and behavioral patterns associated with malicious bot networks.

It then checks the IP address reputation, HTTP request headers, TLS fingerprinting, and so on to filter out the bots. It also employs active detection methods, including CAPTCHAs, canvas fingerprinting, and event tracking.

Cloudflare's main bot protection method, known as "The Waiting Room", checks whether the client supports JavaScript and if it's able to solve the sophisticated JavaScript challenges.

Cloudscraper relies on a JavaScript Engine/Interpreter to solve the JavaScript challenges of the protected websites.

Any script using Cloudscraper will sleep for about five seconds for the first visit to any site with Cloudflare anti-bots enabled.

# Install cloudscraper with pip install cloudscraper

import cloudscraper

# Create cloudscraper instance

scraper = cloudscraper.create_scraper()

# Or: scraper = cloudscraper.CloudScraper() # CloudScraper inherits from requests.Session

print(scraper.get("https://nowsecure.nl").text)

Note that Cloudscraper doesn't work against the latest version of Cloudflare's anti-bot protection. In that case, it fails with an error like this:

Detected a Cloudflare version 2 challenge, This feature is not available in the opensource (free) version.

Conclusion

In this article, we've discussed why websites use anti-bots, how they work and the best ways to avoid bot detection with Selenium.

We've also seen alternative tools, like undetected_ChromeDriver, Cloudscraper, and ZenRows' API solution, to avoid detection while web scraping with Python.

ZenRows' API solution is currently one of the best options to avoid bot detection, and you can get your API key for free.
