Selenium makes it possible to scrape dynamic websites, but your script may get detected as a bot and your IP banned. On the bright side, a Selenium proxy can help you avoid much of this.

Let's dive in!

What Is a Selenium Proxy?

A proxy acts as an intermediary between a client and a server. Through it, the client can make requests to other servers anonymously and securely, and get around geographical restrictions.

Like HTTP clients, headless browsers support proxy servers. A Selenium proxy helps protect your IP address and avoid blocks when visiting websites.

Keep reading to learn how to set up a proxy in Selenium for web scraping!

Prerequisites

First, you need Python 3. Many platforms come with it preinstalled, so check whether you already have it with the following command (on some systems, the command is python3):

```shell
python --version
```

It should return something like this:

```text
Python 3.11.2
```

If it results in an error or the printed version is 2.x, you should download Python 3.x and follow the installation instructions to set it up.

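Next, create a new Python project and install the Selenium Python bindings, along with the webdriver-manager package that the snippets in this tutorial use to download ChromeDriver:

```shell
pip install selenium webdriver-manager
```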

Selenium can control many browsers, but we'll use Google Chrome because it's the most used one. Make sure you have the latest Chrome version installed.

In the past, you had to download ChromeDriver separately, but that's no longer necessary: since version 4.6, Selenium includes Selenium Manager, which downloads a matching driver automatically. If you're using an older version of Selenium, consider updating it to access the latest features and functionality. You can check your current version with pip show selenium and upgrade to the newest one with pip install --upgrade selenium.
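If you'd rather run that version check from Python, here's a minimal sketch. It assumes Selenium Manager is available from Selenium 4.6 onward and reads the installed version with the standard library's importlib.metadata; the helper name has_selenium_manager is hypothetical and only compares the major and minor version numbers.

```python
from importlib.metadata import PackageNotFoundError, version


def has_selenium_manager(version_string: str) -> bool:
    """Hypothetical helper: True if a Selenium version is 4.6 or newer."""
    # keep only the numeric major/minor parts, e.g. "4.15.2" -> (4, 15)
    major, minor = (int(part) for part in version_string.split(".")[:2])
    return (major, minor) >= (4, 6)


if __name__ == "__main__":
    try:
        installed = version("selenium")
        print(installed, "includes Selenium Manager:", has_selenium_manager(installed))
    except PackageNotFoundError:
        print("Selenium is not installed; run: pip install selenium")
```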

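It's time to put what you installed to work and control Chrome with Selenium from a Python script. The snippet below imports the tools, initializes a Chrome WebDriver instance, and uses it to visit an example target page. It relies on webdriver-manager to fetch ChromeDriver; with Selenium 4.6 or later, a plain webdriver.Chrome() call would also work.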

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service as ChromeService
from webdriver_manager.chrome import ChromeDriverManager

# download a matching ChromeDriver and start a Chrome instance
driver = webdriver.Chrome(service=ChromeService(ChromeDriverManager().install()))

# visit the target page
driver.get('https://example.com/')

# close the browser
driver.quit()
```

Perfect! You just learned how to get started with Selenium in Python. Let's see how to use it with a proxy!

How to Set a Proxy in Selenium

To set a proxy in Selenium, you need to:

- Retrieve a valid proxy server.

- Specify it in the --proxy-server Chrome option.

- Visit your target page.

Let's go over the whole process step by step.

First, get a proxy server URL from Free Proxy List. Then, configure Selenium with Options to launch Chrome with the --proxy-server flag:

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service as ChromeService
from webdriver_manager.chrome import ChromeDriverManager
from selenium.webdriver.chrome.options import Options

# define custom options for the Selenium driver
options = Options()

# free proxy server URL
proxy_server_url = "157.245.97.60"
options.add_argument(f'--proxy-server={proxy_server_url}')

# create the ChromeDriver instance with custom options
driver = webdriver.Chrome(
    service=ChromeService(ChromeDriverManager().install()),
    options=options
)
```

The controlled instance of Chrome will now perform all requests through the specified proxy.

Next, navigate to http://httpbin.org/ip as a target site:

```python
driver.get('http://httpbin.org/ip')
```

Note: This site will return the IP the request comes from and is handy for this example.

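You can print the JSON value contained in the target web page like this:

```python
from selenium.webdriver.common.by import By

# print the text of the page body, i.e., the JSON response
print(driver.find_element(By.TAG_NAME, "body").text)
```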

Put it all together:

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service as ChromeService
from webdriver_manager.chrome import ChromeDriverManager
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By

# define custom options for the Selenium driver
options = Options()

# free proxy server URL
proxy_server_url = "157.245.97.60"
options.add_argument(f'--proxy-server={proxy_server_url}')

# create the ChromeDriver instance with custom options
driver = webdriver.Chrome(
    service=ChromeService(ChromeDriverManager().install()),
    options=options
)

# visit the page that echoes the caller's IP
driver.get('http://httpbin.org/ip')

# print the IP the request comes from
print(driver.find_element(By.TAG_NAME, "body").text)

# close the browser
driver.quit()
```

Here's what it'll return:

```json
{ "origin": "157.245.97.60" }
```

The site response matches the proxy server IP. That means Selenium is visiting pages through the proxy server as desired. 🥳
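Rather than comparing IPs by eye, you could automate this check. A minimal sketch, assuming the text you extracted from httpbin.org/ip is JSON with an "origin" field as shown above; the helper name is_proxied is hypothetical. Since "origin" may list several comma-separated addresses when the request passes through more than one hop, the helper looks for the proxy IP among them instead of requiring an exact match.

```python
import json


def is_proxied(body_text: str, proxy_ip: str) -> bool:
    """Hypothetical helper: True if httpbin.org/ip reports the proxy's IP."""
    origin = json.loads(body_text)["origin"]
    # "origin" may hold several comma-separated IPs, so compare each one
    reported_ips = [ip.strip() for ip in origin.split(",")]
    return proxy_ip in reported_ips


# usage with the response printed above
body = '{ "origin": "157.245.97.60" }'
print(is_proxied(body, "157.245.97.60"))  # True
```

In a Selenium script, you would pass it the body text you extracted from the page along with the IP of the proxy you configured.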

Note: Free proxies are short-lived and unreliable, so chances are that the one used in the snippet above won't work. We'll see a better alternative later in the tutorial.

Great! You now know the basics of using a Python Selenium proxy. Let's dig into more advanced concepts!

Proxy Authentication with Python Selenium: Username & Password

Some proxy servers rely on authentication to restrict access to users with valid credentials. That's usually the case with commercial solutions or premium proxies.

An authenticated proxy URL specifies the username and password with this syntax:

```text
<PROXY_PROTOCOL>://<USERNAME>:<PASSWORD>@<PROXY_IP_ADDRESS>:<PROXY_PORT>
```

Note that passing such a URL to --proxy-server won't work, because Chrome ignores the username and password embedded in it. That's where a third-party package such as Selenium Wire comes to the rescue.

It extends Selenium to give you access to the requests made by the browser and change them as desired. Launch the command below to install it:

```shell
pip install selenium-wire
```

Next, use Selenium Wire to deal with proxy authentication, as in the example below:

```python
from seleniumwire import webdriver
from selenium.webdriver.chrome.service import Service as ChromeService
from webdriver_manager.chrome import ChromeDriverManager
from selenium.webdriver.common.by import By

# proxy credentials
proxy_username = 'fgrlkbxt'
proxy_password = 'cs01nzezlfen'
proxy_url = f'http://{proxy_username}:{proxy_password}@185.199.229.156:7492'

# Selenium Wire configuration to use an authenticated proxy
seleniumwire_options = {
    'proxy': {
        'http': proxy_url,
        'https': proxy_url,
    },
    # skip SSL certificate verification for upstream connections
    'verify_ssl': False,
}

driver = webdriver.Chrome(
    service=ChromeService(ChromeDriverManager().install()),
    seleniumwire_options=seleniumwire_options
)

driver.get('http://httpbin.org/ip')
print(driver.find_element(By.TAG_NAME, 'body').text)  # { "origin": "185.199.229.156" }

driver.quit()
```

Note: This code may result in a 407 Proxy Authentication Required error. A proxy server responds with that HTTP status when the credentials are missing or wrong, so make sure the proxy URL contains a valid username and password.
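A malformed URL may also cause authentication failures: if the username or password contains characters such as @ or :, they need to be percent-encoded so the URL is parsed correctly. Below is a minimal sketch using urllib.parse.quote from the standard library. build_proxy_url is a hypothetical helper, and it assumes your proxy client decodes percent-encoded credentials, as standard URL parsers do.

```python
from urllib.parse import quote


def build_proxy_url(protocol: str, username: str, password: str, host: str, port: int) -> str:
    """Hypothetical helper: <PROTOCOL>://<USERNAME>:<PASSWORD>@<HOST>:<PORT>."""
    # percent-encode credentials so characters like "@" or ":" don't break the URL
    user = quote(username, safe="")
    pwd = quote(password, safe="")
    return f"{protocol}://{user}:{pwd}@{host}:{port}"


print(build_proxy_url("http", "fgrlkbxt", "cs01nzezlfen", "185.199.229.156", 7492))
# http://fgrlkbxt:cs01nzezlfen@185.199.229.156:7492
print(build_proxy_url("http", "user", "p@ss:word", "185.199.229.156", 7492))
# http://user:p%40ss%3Aword@185.199.229.156:7492
```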

Learn more in our guide to Selenium Wire.

Best Protocols for a Selenium Proxy: HTTP, HTTPS, SOCKS5

When it comes to choosing a protocol for a Selenium proxy, the most common options are HTTP, HTTPS, and SOCKS5.

HTTP proxies forward data in plain text, while HTTPS proxies encrypt it to provide an extra layer of security. That's why the latter are more popular.

Another useful protocol for Selenium proxies is SOCKS5, the latest version of the SOCKS protocol. It isn't limited to web traffic and can also carry email and FTP traffic, which makes it more versatile.

Overall, HTTP and HTTPS proxies are good for web scraping and crawling, and SOCKS finds applications in tasks that involve non-HTTP traffic.
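In Chrome, the protocol is set as a scheme prefix in the --proxy-server value. Here's a minimal sketch of a hypothetical helper, proxy_server_argument, that builds that flag for the three protocols covered above; the 127.0.0.1:1080 address is a placeholder for a local SOCKS5 proxy, not a real endpoint.

```python
# Chrome's --proxy-server flag accepts a scheme prefix; these are the ones covered above
SUPPORTED_SCHEMES = ("http", "https", "socks5")


def proxy_server_argument(scheme: str, host: str, port: int) -> str:
    """Hypothetical helper: build Chrome's --proxy-server flag for a given protocol."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    return f"--proxy-server={scheme}://{host}:{port}"


# a placeholder local SOCKS5 proxy listening on port 1080
print(proxy_server_argument("socks5", "127.0.0.1", 1080))
# --proxy-server=socks5://127.0.0.1:1080
```

You would pass the result to options.add_argument() just like the plain IP used earlier.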

"Error 403: Forbidden for Proxy" in Selenium Grid

Selenium Grid allows you to control remote browsers and run cross-platform scripts in parallel. One of the most common errors you can encounter when using it for web scraping is Error 403: Forbidden for Proxy. It usually happens for one of two reasons:

- Another process is already running on port 4444.

- You aren't sending RemoteWebDriver requests to the correct URL.

By default, the Selenium Grid hub listens on http://localhost:4444. So, if another process is already using port 4444, stop it or start Selenium Grid on a different port.
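To find out whether something is already listening on port 4444 before starting the hub, you could run a quick socket probe. Below is a minimal sketch using only Python's standard library. The helper name is_port_in_use is hypothetical, and it treats a successful TCP connection as a sign that the port is taken.

```python
import socket


def is_port_in_use(port: int, host: str = "localhost") -> bool:
    """Hypothetical helper: True if something is already listening on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(1)
        # connect_ex returns 0 when the connection succeeds, i.e., the port is taken
        return sock.connect_ex((host, port)) == 0


if is_port_in_use(4444):
    print("Port 4444 is busy: stop that process or start Selenium Grid on another port")
else:
    print("Port 4444 is free")
```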

If that doesn't solve the issue, make sure you're connecting the remote driver to the right hub URL, as shown below:

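```python
from selenium import webdriver

# ...

# connect to the Selenium Grid hub (listening on http://localhost:4444 by default)
driver = webdriver.Remote(
    command_executor='http://localhost:4444/wd/hub',
    options=webdriver.ChromeOptions(),
)
```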

Perfect! The error should be gone now!

Use a Rotating Proxy in Selenium with Python

If your script makes several requests in a short interval, the server may consider it suspicious and block your IP. That's much less likely with a rotating proxy approach, a technique that involves switching proxies after a particular period or number of requests.

Your exit IP keeps changing, so you appear as a different user each time, which makes it much harder for the server to ban you. That's the power of proxy rotation!

It's time to learn how to build a proxy rotator in Selenium with selenium-wire.

First, you have to find a pool of proxies. In this example, we'll use some free proxies.

Store them in a list as follows:

```python
PROXIES = [
    'http://19.151.94.248:88',
    'http://149.169.197.151:80',
    # ...
    'http://212.76.118.242:97'
]
```

Then, extract a random proxy with random.choice() and use it to initialize a new driver instance:

```python
from seleniumwire import webdriver
from selenium.webdriver.chrome.service import Service as ChromeService
from webdriver_manager.chrome import ChromeDriverManager
import random

# the list of proxies to rotate on
PROXIES = [
    'http://19.151.94.248:88',
    'http://149.169.197.151:80',
    # ...
    'http://212.76.118.242:97'
]

# randomly extract a proxy
random_proxy = random.choice(PROXIES)

# set the proxy in Selenium Wire
seleniumwire_options = {
    'proxy': {
        'http': random_proxy,
        'https': random_proxy,
    },
    # skip SSL certificate verification for upstream connections
    'verify_ssl': False,
}

# create a ChromeDriver instance
driver = webdriver.Chrome(
    service=ChromeService(ChromeDriverManager().install()),
    seleniumwire_options=seleniumwire_options
)

driver.get('https://example.com/')

# scraping logic...

driver.quit()

# visit other pages...
```

Repeat this logic every time you want to visit a new page.
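The snippet above picks a random proxy for every new driver. If you'd rather switch after a set number of requests, as described at the start of this section, here's a minimal sketch of a count-based, round-robin rotator. The CountingProxyRotator class and its requests_per_proxy parameter are hypothetical names, not part of Selenium Wire; only the returned dictionary follows the seleniumwire_options format used above.

```python
import itertools


class CountingProxyRotator:
    """Hypothetical helper: hand out the same proxy for N requests, then move to the next."""

    def __init__(self, proxies, requests_per_proxy=5):
        self._pool = itertools.cycle(proxies)  # round-robin over the pool
        self._requests_per_proxy = requests_per_proxy
        self._current = next(self._pool)
        self._used = 0

    def get(self):
        # switch to the next proxy once the current one has served its quota
        if self._used == self._requests_per_proxy:
            self._current = next(self._pool)
            self._used = 0
        self._used += 1
        return self._current

    def seleniumwire_options(self):
        # build a Selenium Wire config for the next request
        proxy = self.get()
        return {"proxy": {"http": proxy, "https": proxy}}


rotator = CountingProxyRotator(
    ["http://19.151.94.248:88", "http://149.169.197.151:80", "http://212.76.118.242:97"],
    requests_per_proxy=2,
)
print([rotator.get() for _ in range(5)])
```

With requests_per_proxy=2, each proxy serves two consecutive requests before the next one takes over. You could then create each driver with webdriver.Chrome(seleniumwire_options=rotator.seleniumwire_options()) and quit it after scraping the page.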

Well done! You built a Selenium rotating proxy. Learn more in our definitive guide on how to rotate proxies in Python.

However, most requests will fail since free proxies are error-prone. That's why you should add retry logic with random timeouts.
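Here's a minimal sketch of that retry logic, reading "random timeouts" as randomized pauses between attempts. The fetch_with_retries function is hypothetical: it accepts any callable that takes a proxy URL and either returns the scraped data or raises an exception, so it isn't tied to a specific Selenium Wire API.

```python
import random
import time


def fetch_with_retries(fetch, proxies, max_attempts=3, min_wait=1.0, max_wait=3.0):
    """Hypothetical helper: call fetch(proxy) with random proxies, retrying on failure."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        proxy = random.choice(proxies)
        try:
            return fetch(proxy)
        except Exception as error:  # free proxies fail in many ways: timeouts, refused connections...
            last_error = error
            if attempt < max_attempts:
                # wait a random interval so retries don't follow a fixed, bot-like rhythm
                time.sleep(random.uniform(min_wait, max_wait))
    raise RuntimeError(f"all {max_attempts} attempts failed") from last_error


# Example fetch callable (assumes Selenium Wire and the PROXIES list from above):
# def scrape(proxy):
#     driver = webdriver.Chrome(seleniumwire_options={"proxy": {"http": proxy, "https": proxy}})
#     try:
#         driver.get("https://example.com/")
#         return driver.page_source
#     finally:
#         driver.quit()
#
# html = fetch_with_retries(scrape, PROXIES)
```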

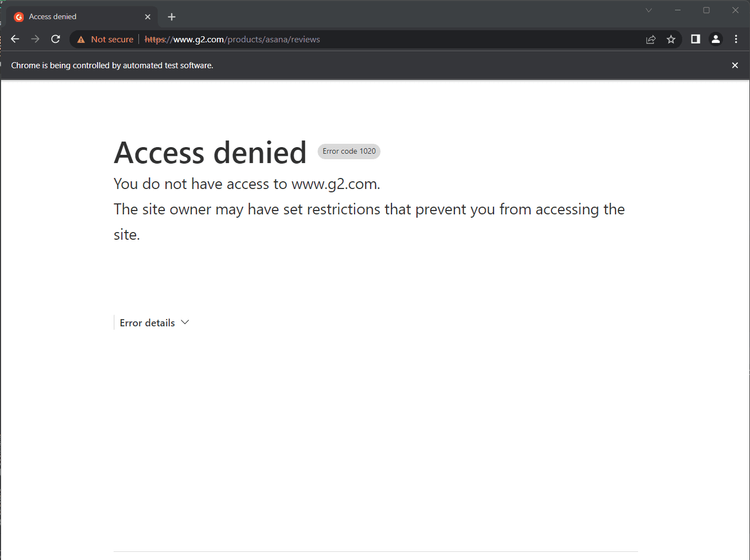

But that isn't the only issue. Try to test the IP rotator logic against a target that uses anti-bot technologies:

```python
driver.get('https://www.g2.com/products/asana/reviews')
```

The target server detects the rotating-proxy Selenium request as a bot and responds with a 403 Forbidden error.

In fact, free proxies will get you blocked most of the time. We used them to show the basics, but you should never rely on them in a real-world script.

The solution? A premium proxy!

Which Proxy Is Best for Selenium?

As seen above, free proxies are unreliable, and you should prefer premium proxies for web scraping. If you need ideas on where to get them, check our list of the best proxy providers for scraping.

At the same time, premium proxies aren't the definitive solution. Due to the automated nature of Selenium, anti-scraping technologies can detect and block it, even when using it with a premium proxy.

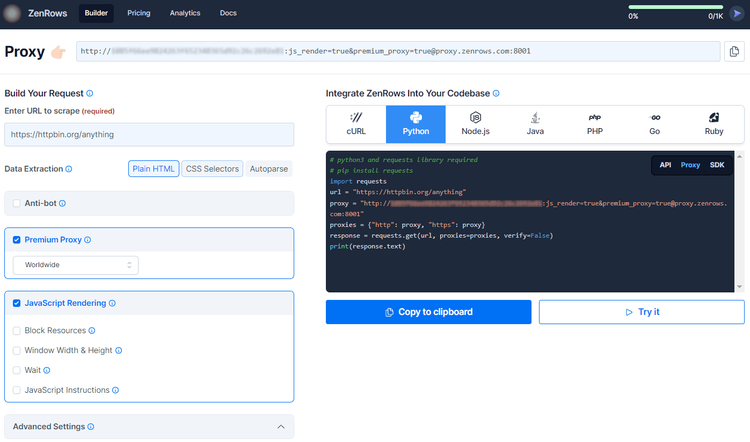

A better alternative to avoid getting blocked is ZenRows. This scraping API offers similar features to Selenium but with a much higher success rate. And unlike proxy companies, ZenRows charges only for successful requests. That makes it a cheaper and more flexible solution. Also, it eliminates the infrastructure headaches of setting up and maintaining a proxy.

Sign up to get started with ZenRows and receive 1,000 free API credits. Once you register, you'll reach the Request Builder page, where you paste your target URL and check the features you need.

To scrape dynamic websites as you would with Selenium, check the "JavaScript Rendering" option. Also, select the Premium Proxy option to maximize anonymity and avoid IP blocks. Next, choose Python as the language and Proxy mode on the right, then click the "Copy to clipboard" button.

Note: It's often recommended to activate the Anti-bot feature, too.

Now, install the requests library:

```shell
pip install requests
```

Then, paste the Python code into your script:

```python
import requests

# ZenRows proxy URL: the API key is the username, the enabled features are the password
proxy = "http://<YOUR_ZENROWS_API_KEY>:js_render=true&premium_proxy=true@proxy.zenrows.com:8001"
proxies = {"http": proxy, "https": proxy}

response = requests.get("https://www.g2.com/products/asana/reviews", proxies=proxies, verify=False)
print(response.status_code)
```

This time, the snippet returns 200 instead of 403.

Fantastic! Now you have a proxy scraping solution with Selenium's capabilities, but much more effective!

Conclusion

This step-by-step tutorial showed how to set up a proxy in Selenium with Python. You started from the basics and have become a Selenium Python proxy master!

Now you know:

- What a Selenium proxy is.

- The basics of setting a proxy with Selenium in Python.

- How to deal with authenticated proxies in Selenium.

- How to implement a rotating proxy and why this approach doesn't work with free proxies.

- What a premium proxy is and how to use it.

Keep in mind that using proxies in Selenium helps you avoid IP bans. Yet, some anti-scraping technologies will still be able to block you. Fool them all with ZenRows, which offers the best proxies on the market and provides rotating IP functionality, as well as advanced anti-block bypass capabilities with a single API call.
