Are you struggling to change your IP address and bypass blocks while scraping with Selenium? You need to understand how to rotate proxies.

In this article, you'll learn step-by-step how to rotate proxies in Selenium using Selenium Wire, including managing proxy rotation at scale.

Why You Need to Rotate Proxies for Web Scraping

Rotating your proxies allows you to use a different IP address for each request during scraping. Although you can use a single static proxy, it ties every request to one IP address, which will eventually get you blocked.

Proxy rotation is critical when web scraping with Selenium since it routes your requests through different IPs, improving your chances of bypassing anti-bot systems and IP bans.

You'll see how to rotate proxies in the next section. Before that, you should visit our tutorial on setting up a single proxy in Selenium.

How to Rotate Proxies in Selenium Python

Proxy rotation in Selenium isn't a straightforward process. But there's an extension called Selenium Wire that simplifies it.

So, before moving on to the steps involved, install Selenium Wire using pip. This also installs vanilla Selenium:

pip install selenium-wire

Now, let's get started with proxy rotation with Selenium Wire.

Step 1: Build Your Script With Selenium Wire

Selenium Wire has the same syntax as the vanilla version. Let's see the code to set it up.

First, import webdriver from Selenium Wire and the By locator class from Selenium, then set up a driver instance:

# import the required libraries

from seleniumwire import webdriver

from selenium.webdriver.common.by import By

# set up a driver instance

driver = webdriver.Chrome()

Next, send a request to https://httpbin.io/ip and obtain the body element text to view your default IP address:

# ...

# send a request to view your current IP address

driver.get('https://httpbin.io/ip')

ip_address = driver.find_element(By.TAG_NAME, 'body').text

# print the IP address

print(ip_address)

Merge the snippets, and your final code should look like this:

# import the required libraries

from seleniumwire import webdriver

from selenium.webdriver.common.by import By

# set up a driver instance

driver = webdriver.Chrome()

# send a request to view your current IP address

driver.get('https://httpbin.io/ip')

ip_address = driver.find_element(By.TAG_NAME, 'body').text

# print the IP address

print(ip_address)

# close the browser

driver.quit()

The code outputs the current IP address, as shown:

{

"origin": "101.118.0.XXX:YYY"

}
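
If you'd rather work with the address itself than with the raw body text, you can parse the JSON that httpbin returns. The following is a minimal sketch: extract_origin and split_origin are hypothetical helper names, not part of Selenium Wire, and they assume the body text is exactly the JSON object shown above with an IPv4-style address.

```python
import json


def extract_origin(body_text):
    """Return the "origin" value from an httpbin-style JSON body.

    Hypothetical helper: assumes body_text is JSON shaped like
    {"origin": "<ip>:<port>"}, as in the output above.
    """
    data = json.loads(body_text)
    return data["origin"]


def split_origin(origin):
    """Split "<ip>:<port>" into (ip, port); port is None when absent."""
    ip, separator, port = origin.rpartition(":")
    if not separator:
        # no colon found, so the whole string is the address
        return origin, None
    return ip, port
```

With the script above, split_origin(extract_origin(ip_address)) would give you the address and port as separate strings.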

Nicely done! You just learned how to set up Selenium Wire. Next, let's get a list of proxies to rotate.

Step 2: Get a Proxy List

The next step is to create a proxy list. Grab some free ones from the Free Proxy List and add them as an array in your scraper file as shown below:

# create a proxy array

proxy_list = [

{'http': '103.160.150.251:8080', 'https': '103.160.150.251:8080'},

{'http': '38.65.174.129:80', 'https': '38.65.174.129:80'},

{'http': '46.105.50.251:3128', 'https': '46.105.50.251:3128'},

{'http': '103.23.199.24:8080', 'https': '103.23.199.24:8080'},

{'http': '223.205.32.121:8080', 'https': '223.205.32.121:8080'}

]

You'll rotate these proxies in the next section.

These proxies will likely not work at the time of reading because they're free and unreliable.
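
Typing the same address twice per entry makes it easy for the http and https values to drift apart. One way around that, sketched below, is to generate each entry from a single host:port string. build_proxy is a hypothetical helper, not a Selenium Wire function, and the scheme prefixes follow the format used in the Step 3 listings of this article.

```python
def build_proxy(address):
    """Build a proxy entry from a "host:port" string.

    Hypothetical helper: both keys are derived from the same address,
    so the http and https values cannot get out of sync.
    """
    return {
        "http": f"http://{address}",
        "https": f"https://{address}",
    }


# the same free proxies as above, each written only once
addresses = [
    "103.160.150.251:8080",
    "38.65.174.129:80",
    "46.105.50.251:3128",
]

proxy_list = [build_proxy(address) for address in addresses]
```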

Step 3: Implement and Test Proxy Rotation

Rotating your proxies is easy once you have your proxy list. Selenium Wire is handy here because it lets you reload the target web page with a different IP address while the browser instance runs.

Let's rotate the previous proxies to see how that works.

Import the required libraries and add your proxy list to your scraper file:

# import the required libraries

from seleniumwire import webdriver

from selenium.webdriver.common.by import By

# create a proxy array

proxy_list = [

{"http": "http://103.160.150.251:8080", "https": "https://103.160.150.251:8080"},

{"http": "http://38.65.174.129:80", "https": "https://38.65.174.129:80"},

{"http": "http://46.105.50.251:3128", "https": "https://46.105.50.251:3128"},

]

Next, start a browser instance with the first proxy on your list (index 0) by passing it through the seleniumwire_options parameter. Send your first request with that proxy and print your current IP address from the body element:

# ...

# initiate the driver instance with the first proxy

driver = webdriver.Chrome(seleniumwire_options= {

'proxy': proxy_list[0],

})

# visit a website to trigger a request

driver.get('https://httpbin.io/ip')

# get proxy value element

ip = driver.find_element(By.TAG_NAME, 'body').text

# print the current IP address

print(ip)

Switch to the second proxy by assigning proxy_list[1] to driver.proxy (list indices start at 0, so index 1 is the second entry). Then repeat your request to use the new proxy:

# ...

# switch to the second proxy:

driver.proxy = proxy_list[1]

# reload the page with the same instance

driver.get('https://httpbin.io/ip')

# get proxy value element

ip2 = driver.find_element(By.TAG_NAME, 'body').text

# print the second IP address

print(ip2)

Now, call the third proxy from its index, and reload the website to view the IP address and quit the browser:

# ...

# switch to the third proxy:

driver.proxy = proxy_list[2]

# reload the page with the same instance

driver.get('https://httpbin.io/ip')

# get proxy value element

ip3 = driver.find_element(By.TAG_NAME, 'body').text

print(ip3)

driver.quit()

Put it all together, and your final code should look like this:

from seleniumwire import webdriver

from selenium.webdriver.common.by import By

# create a proxy array

proxy_list = [

{"http": "http://103.160.150.251:8080", "https": "https://103.160.150.251:8080"},

{"http": "http://38.65.174.129:80", "https": "https://38.65.174.129:80"},

{"http": "http://46.105.50.251:3128", "https": "https://46.105.50.251:3128"},

]

driver = webdriver.Chrome(seleniumwire_options= {

'proxy': proxy_list[0],

})

# visit a website to trigger a request

driver.get('https://httpbin.io/ip')

# get proxy value element

ip = driver.find_element(By.TAG_NAME, 'body').text

# print the current IP address

print(ip)

# switch to the second proxy:

driver.proxy = proxy_list[1]

# reload the page with the same instance

driver.get('https://httpbin.io/ip')

# get proxy value element

ip2 = driver.find_element(By.TAG_NAME, 'body').text

# print the second IP address

print(ip2)

# switch to the third proxy:

driver.proxy = proxy_list[2]

# reload the page with the same instance

driver.get('https://httpbin.io/ip')

# get proxy value element

ip3 = driver.find_element(By.TAG_NAME, 'body').text

# print the third IP address

print(ip3)

driver.quit()

The code rotates the proxies and outputs a different IP address per page reload based on the list index:

{

"origin": "103.160.150.251:8080"

}

{

"origin": "38.65.174.129:3128"

}

{

"origin": "46.105.50.251:8888"

}
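
Switching proxies by hand works for three entries but gets repetitive quickly. The sketch below wraps the same driver.proxy assignment in a loop that cycles through the list with itertools.cycle. rotate_and_fetch is a hypothetical helper, not a Selenium Wire API. It assumes you pass in a Selenium Wire driver, and it uses the locator string "tag name", which is the value of By.TAG_NAME.

```python
from itertools import cycle


def rotate_and_fetch(driver, proxies, url, visits):
    """Load url `visits` times, switching to the next proxy before each load.

    Hypothetical helper: `driver` is assumed to be a Selenium Wire driver,
    whose `proxy` attribute can be reassigned while the browser is running.
    Returns the body text collected on each visit.
    """
    results = []
    proxy_pool = cycle(proxies)  # wraps back to the first proxy at the end
    for _ in range(visits):
        driver.proxy = next(proxy_pool)
        driver.get(url)
        # "tag name" is the string value of By.TAG_NAME
        results.append(driver.find_element("tag name", "body").text)
    return results
```

With the driver and proxy_list from the script above, rotate_and_fetch(driver, proxy_list, 'https://httpbin.io/ip', visits=6) would go through the three proxies twice.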

Good job! Your scraper now rotates proxies manually. Let's see a case where your chosen proxy requires authentication.

Step 4 (optional): Adding Proxy Authentication

Free proxies won't work long-term for your scraper. The best option is to use premium web scraping proxies for a higher success rate.

Premium proxies require authentication to boost security and reliability. Let's modify the previous script to see how proxy authentication works.

First, collect the proxy addresses from your premium proxy services in a list. Each service usually requires a username and a password, depending on its authentication requirements.

Ensure you replace the username and password with your credentials:

# import the required libraries

from seleniumwire import webdriver

from selenium.webdriver.common.by import By

# create a proxy list and add your authentication credentials

proxy_list = [

{

'http': 'http://username:password@<PROXY_HOST_1>:8001',

'https': 'https://username:password@<PROXY_HOST_1>:8001'

},

{

'http': 'http://username:password@<PROXY_HOST_2>:8888',

'https': 'https://username:password@<PROXY_HOST_2>:8888',

},

# ... more proxies

]
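
Hard-coding credentials in the proxy list makes them easy to leak into version control. A common alternative is to read them from environment variables. The sketch below assumes two hypothetical variable names, PROXY_USERNAME and PROXY_PASSWORD, and a hypothetical helper called build_auth_proxy. The host and port are whatever your proxy provider gives you.

```python
import os


def build_auth_proxy(host, port, env=os.environ):
    """Build an authenticated proxy entry from environment variables.

    Hypothetical helper: PROXY_USERNAME and PROXY_PASSWORD are assumed
    variable names, not something Selenium Wire defines.
    """
    username = env["PROXY_USERNAME"]  # raises KeyError if the variable is unset
    password = env["PROXY_PASSWORD"]
    credentials = f"{username}:{password}"
    return {
        "http": f"http://{credentials}@{host}:{port}",
        "https": f"https://{credentials}@{host}:{port}",
    }
```

The env parameter defaults to the real environment, but accepting any mapping lets you check the helper with a plain dictionary before wiring it into your scraper.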

Now, rotate the proxies in your code:

# ...

driver = webdriver.Chrome(seleniumwire_options= {

'proxy': proxy_list[0],

})

# visit a website to trigger a request

driver.get('https://httpbin.io/ip')

# get proxy value element

ip = driver.find_element(By.TAG_NAME, 'body').text

# print the current IP address

print(ip)

# switch to the second proxy:

driver.proxy = proxy_list[1]

# reload the page with the same instance

driver.get('https://httpbin.io/ip')

# get proxy value element

ip2 = driver.find_element(By.TAG_NAME, 'body').text

# print the second IP address

print(ip2)

driver.quit()

Here's the final code:

# import the required libraries

from seleniumwire import webdriver

from selenium.webdriver.common.by import By

# create a proxy list and add your authentication credentials

proxy_list = [

{

'http': 'http://username:password@<PROXY_HOST_1>:8001',

'https': 'https://username:password@<PROXY_HOST_1>:8001'

},

{

'http': 'http://username:password@<PROXY_HOST_2>:8888',

'https': 'https://username:password@<PROXY_HOST_2>:8888',

},

# ... more proxies

]

driver = webdriver.Chrome(seleniumwire_options= {

'proxy': proxy_list[0],

})

# visit a website to trigger a request

driver.get('https://httpbin.io/ip')

# get proxy value element

ip = driver.find_element(By.TAG_NAME, 'body').text

# print the current IP address

print(ip)

# switch to the second proxy:

driver.proxy = proxy_list[1]

# reload the page with the same instance

driver.get('https://httpbin.io/ip')

# get proxy value element

ip2 = driver.find_element(By.TAG_NAME, 'body').text

# print the second IP address

print(ip2)

driver.quit()

The code above will authenticate and route your requests through your proxy services. However, proxy management is often challenging while scaling up. How can you deal with that?

Easy Premium Proxy Management With a Rotator

Free proxies aren't reliable and will eventually get you blocked while scraping with Selenium. For instance, the previous free proxies will fail when scraping a heavily protected website like G2.

You can try it out with the following code:

# import the required library

from seleniumwire import webdriver

# create a proxy array

proxy_list = [

{'http': '103.160.150.251:8080', 'https': '103.160.150.251:8080'},

{'http': '38.65.174.129:80', 'https': '38.65.174.129:80'},

{'http': '46.105.50.251:3128', 'https': '46.105.50.251:3128'},

]

# start a driver instance with the first proxy

driver = webdriver.Chrome(seleniumwire_options= {

'proxy': proxy_list[0],

})

# visit a website to trigger a request

driver.get('https://www.g2.com/products/asana/reviews')

# grab a screenshot to confirm access

driver.save_screenshot('g2_page_with_free_proxies.png')

# switch to the second proxy

driver.proxy = proxy_list[1]

# visit a website to trigger a request

driver.get('https://www.g2.com/products/asana/reviews')

# grab a screenshot to confirm access

driver.save_screenshot('g2_page_with_free_proxies_2.png')

driver.quit()

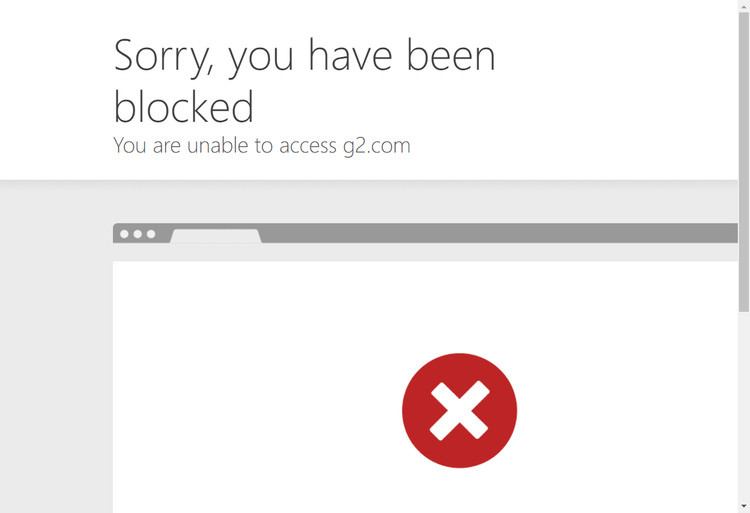

The code outputs a screenshot showing that the scraper got blocked despite using proxies:

How can you bypass that?

Premium proxies are more reliable and can help you to avoid getting blocked. However, you need more than premium proxies to beat advanced anti-bot systems like Cloudflare Turnstile.

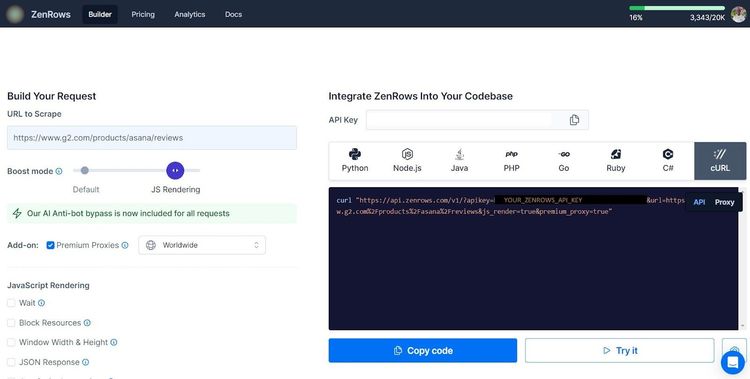

The best option is to use a web scraping API like ZenRows. It automatically rotates premium proxies, fixes your request headers, and helps you avoid any anti-bot system, allowing you to focus on scraping the content you want without worrying about blocks.

Sign up to load the Request Builder. Paste the target URL in the link box, set the Boost mode to JS Rendering, and click Premium proxies. Then select the cURL request option.

Next, import vanilla Selenium and urllib. Then format the target URL like so:

# import the required libraries

import urllib.parse

from selenium import webdriver

# start a Chrome instance

driver = webdriver.Chrome()

# format the target URL

target_url = urllib.parse.quote('https://www.g2.com/products/asana/reviews', safe='')

Rebuild the generated cURL request as a URL in your script. Then, open that URL with the driver and print the page source:

# ...

# reformat the generated cURL

url = (

'https://api.zenrows.com/v1/'

'?apikey=<YOUR_ZENROWS_API_KEY>'

f'&url={target_url}'

'&js_render=true&premium_proxy=true'

)

# visit the target page

driver.get(url)

print(driver.page_source)

Your final code should look like this:

# import the required libraries

import urllib.parse

from selenium import webdriver

# start a Chrome instance

driver = webdriver.Chrome()

# format the target URL

target_url = urllib.parse.quote('https://www.g2.com/products/asana/reviews', safe='')

# specify the generated cURL

url = (

'https://api.zenrows.com/v1/'

'?apikey=<YOUR_ZENROWS_API_KEY>'

f'&url={target_url}'

'&js_render=true&premium_proxy=true'

)

# visit the target page

driver.get(url)

print(driver.page_source)

driver.quit()

The code outputs the page source without getting blocked:

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" />

<link href="https://www.g2.com/images/favicon.ico" rel="shortcut icon" type="image/x-icon" />

<title>Asana Reviews 2024</title>

</head>

<body>

<!-- other content omitted for brevity -->

</body>

</html>

Congratulations! You just scraped a protected website by integrating ZenRows with Selenium.
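
If you call the API for more than one page, assembling the query string by hand becomes error-prone. As a rough sketch, the same URL can be built with urllib.parse.urlencode, which percent-encodes each value for you. build_api_url is a hypothetical helper name, and the parameters simply mirror the ones in the script above.

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://api.zenrows.com/v1/"


def build_api_url(api_key, target_url):
    """Return the API request URL for target_url.

    Hypothetical helper: the query parameters mirror the ones used in
    the script above; urlencode percent-encodes each value.
    """
    params = {
        "apikey": api_key,
        "url": target_url,
        "js_render": "true",
        "premium_proxy": "true",
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"
```

You would then pass the result to driver.get(), for example driver.get(build_api_url('<YOUR_ZENROWS_API_KEY>', 'https://www.g2.com/products/asana/reviews')).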

ZenRows also features straightforward JavaScript instructions that allow you to add headless browser functionality to your scraper. Hence, you can use ZenRows with the Requests library and avoid Selenium's complexity.

Conclusion

In this article, you've learned how to rotate proxies in Selenium. Here's a summary of what you now know:

- Step-by-step guide on how to rotate free proxies from a custom list.

- How to handle proxy services that require authentication.

- Managing premium proxy rotation with web scraping solutions.

Feel free to keep honing your web scraping skills with more examples. Remember that many websites employ different anti-bot strategies. Bypass them all with ZenRows, an all-in-one web scraping solution. Try ZenRows for free!
