Selenium is an open-source framework for automating web browsers. However, it has some notable limitations, such as being unable to intercept and modify network traffic on its own. Selenium Wire, a Python library that extends Selenium, solves that.

Let's see how to use it!

What Is Selenium Wire?

Selenium Wire is a Python library that enhances Selenium's Python bindings so that you can access the actual requests made by the browser. It provides an easy-to-use interface for intercepting and modifying network traffic.

Furthermore, web scrapers can use Selenium Wire to analyze a website's network traffic, such as requests, responses, and WebSocket messages.

Install Selenium Wire in Python and Get Started

Let's set up Selenium Wire and see how to get started with it. But first, ensure you have Python v3.7 or a later version installed. You can check the Python version on your machine like this:

python --version

Then, install Selenium Wire using pip:

pip install selenium-wire

That also installs Selenium, which Selenium Wire depends on and extends.

Although Selenium supports all major browsers, we'll use Chrome in this tutorial.

Note: Installing a browser driver manually was once required, but not anymore. Selenium 4.6 and later include Selenium Manager, which downloads a matching driver automatically. To make sure you have that feature, update Selenium if you're using an older version. You can check your current version with pip show selenium and upgrade with pip install --upgrade selenium if needed.
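If you'd rather check both packages from Python than from the shell, a minimal sketch using the standard library could look like this. The installed_version helper is a hypothetical name introduced for illustration, and it assumes the packages were installed under the distribution names selenium and selenium-wire. Note that importlib.metadata needs Python 3.8 or later.

```python
from importlib import metadata
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if it is missing."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Distribution names as used in the pip commands above
    for name in ("selenium", "selenium-wire"):
        version = installed_version(name)
        print(f"{name}: {version or 'not installed'}")
```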

Here's a script showing the basic usage of Selenium. It initializes an instance of the ChromeDriver and opens ScrapeMe (our target site) in a new browser window. It then prints the text content of the site and closes the browser.

from selenium import webdriver
from selenium.webdriver.common.by import By

# Create an instance of the Chrome driver (browser)
driver = webdriver.Chrome()

# Open the target site
driver.get("https://scrapeme.live/shop/")

# Get the body element of the target site
body = driver.find_element(By.TAG_NAME, 'body')

# Print the body's text content
print(body.text)

# Close the browser instance
driver.quit()

Check out our Selenium web scraping tutorial if you want to review concepts.

Now, to use selenium-wire, update the import of webdriver from selenium to seleniumwire:

from seleniumwire import webdriver
from selenium.webdriver.common.by import By

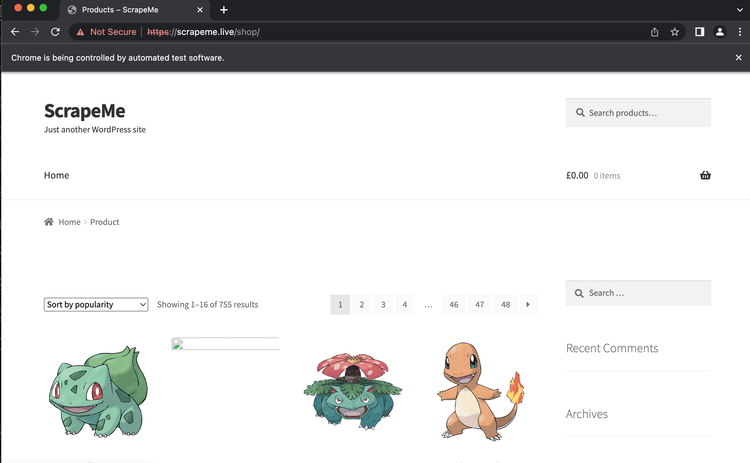

Run the script powered by Selenium Wire, and you'll see the ScrapeMe site in a new browser instance:

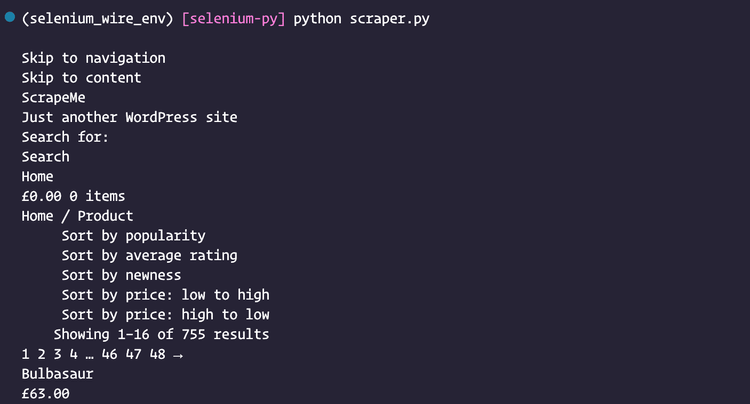

After it closes, you'll see the page text in your terminal.

Parse Response to JSON

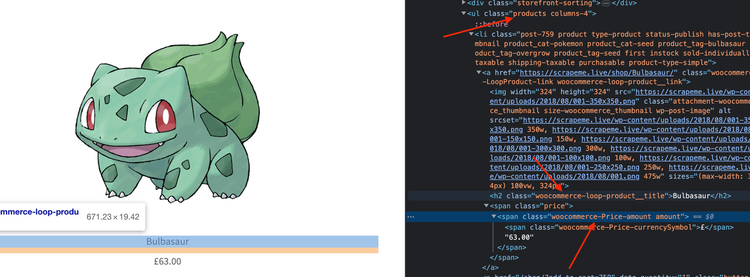

ScrapeMe contains a list of Pokémon with their respective price, and we'll scrape them into a JSON object.

Use the woocommerce-loop-product__title class to get the name of each creature and the woocommerce-Price-amount one to get their individual price.

To do that, loop through all the Pokémon and store each name and price as a key-value pair in the data dictionary.

import json

# ...

driver.get('https://scrapeme.live/shop/')

products = driver.find_elements(By.CSS_SELECTOR, '.products > li')

data = {}
for product in products:
    pokemon_name = product.find_element(By.CLASS_NAME, 'woocommerce-loop-product__title').text
    pokemon_price = product.find_element(By.CLASS_NAME, 'woocommerce-Price-amount').text
    data[pokemon_name] = pokemon_price

print(json.dumps(data, ensure_ascii=False))

# Kill browser instance
driver.quit()

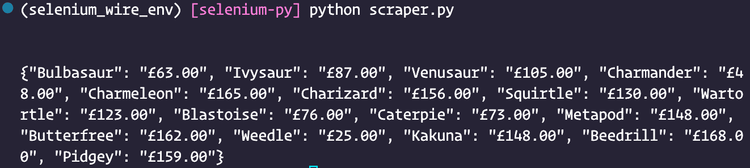

Run the script, and you should see an output on your terminal like this:
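The prices come back as display strings, which are awkward to sort or sum. As a rough sketch, the helpers below turn the name-to-price dictionary into a list of records with numeric prices. The function names and the sample values are illustrative assumptions, and the parsing assumes prices formatted like £63.00 (a currency symbol followed by a decimal number).

```python
import json
import re
from typing import Dict, List


def parse_price(text: str) -> float:
    """Extract the first number from a price string such as '£63.00'."""
    match = re.search(r"\d+(?:[.,]\d+)*", text)
    if match is None:
        raise ValueError(f"no numeric price found in {text!r}")
    # Assumption: ',' groups thousands and '.' marks the decimals
    return float(match.group().replace(",", ""))


def to_records(data: Dict[str, str]) -> List[dict]:
    """Convert {name: price string} into [{'name': ..., 'price': float}, ...]."""
    return [{"name": name, "price": parse_price(price)} for name, price in data.items()]


if __name__ == "__main__":
    sample = {"Bulbasaur": "£63.00", "Ivysaur": "£87.00"}  # illustrative sample data
    print(json.dumps(to_records(sample), ensure_ascii=False, indent=2))
```

In the scraping script, you would call to_records(data) just before json.dumps.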

Chrome Options of Selenium Wire

Besides the regular Chrome options, Selenium Wire accepts its own settings to customize the WebDriver further. You pass them as a dictionary to the seleniumwire_options parameter when creating the driver.

driver = webdriver.Chrome(
    # regular Chrome options
    options=webdriver.ChromeOptions(),
    # seleniumwire options
    seleniumwire_options={},
)

These are the main options made available by Selenium Wire:

| Option | Description | Example | Default Value |
|---|---|---|---|
| addr | The current machine's IP or hostname | { 'addr': '159.89.173.104' } | 127.0.0.1 |
| auto_config | Auto-configure the browser for request capture | { 'auto_config': False } | True |
| ca_cert | The path to a root (CA) certificate | { 'ca_cert': '/path/to/ca.crt' } | Default path |
| ca_key | The path to the private key | { 'ca_key': '/path/to/ca.key' } | Default key |
| disable_capture | Disable request capture | { 'disable_capture': True } | False |
| disable_encoding | Request uncompressed server data | { 'disable_encoding': True } | False |
| enable_har | Store a HAR archive of HTTP transactions that can be retrieved with driver.har | { 'enable_har': True } | False |
| exclude_hosts | The addresses Selenium Wire should ignore | { 'exclude_hosts': ['zenrows.com'] } | [] |
| ignore_http_methods | Selenium Wire will ignore these uppercase HTTP methods | { 'ignore_http_methods': ['TRACE'] } | ['OPTIONS'] |
| port | Selenium Wire's backend port | { 'port': 8080 } | Random port |
| proxy | The proxy server's upstream configuration | { 'proxy': { 'http': 'http://190.43.92.130:999', 'https': 'http://5.78.76.237:8080', } } | {} |
| request_storage | Storage type | { 'request_storage': 'memory' } | disk |
| request_storage_base_dir | Selenium Wire's default disk-based storage location for requests and responses | { 'request_storage_base_dir': '/path/to/storage_folder' } | System temp folder |
| request_storage_max_size | The maximum number of requests to store in memory | { 'request_storage_max_size': 100 } | Unlimited |
| suppress_connection_errors | Suppress connection-related tracebacks | { 'suppress_connection_errors': False } | True |
| verify_ssl | Verify SSL certificates | { 'verify_ssl': True } | False |
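As an illustration of how several of these options combine, the sketch below assembles a seleniumwire_options dictionary in plain Python. build_wire_options is a hypothetical helper, not part of Selenium Wire; only the dictionary keys come from the options listed above.

```python
from typing import List, Optional


def build_wire_options(
    proxy_url: Optional[str] = None,
    in_memory: bool = False,
    max_requests: Optional[int] = None,
    exclude_hosts: Optional[List[str]] = None,
) -> dict:
    """Assemble a seleniumwire_options dict from a few common settings."""
    options: dict = {}
    if proxy_url:
        # Route both HTTP and HTTPS traffic through the same upstream proxy
        options["proxy"] = {"http": proxy_url, "https": proxy_url}
    if in_memory:
        options["request_storage"] = "memory"
        if max_requests is not None:
            # The size cap is only set together with in-memory storage
            options["request_storage_max_size"] = max_requests
    if exclude_hosts:
        options["exclude_hosts"] = list(exclude_hosts)
    return options


if __name__ == "__main__":
    print(build_wire_options(in_memory=True, max_requests=100, exclude_hosts=["zenrows.com"]))
```

You would then pass the result to the driver, for example webdriver.Chrome(seleniumwire_options=build_wire_options(in_memory=True)).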

Understand the Request and Response Objects

Selenium Wire exposes the captured traffic through driver.requests. Each entry is a request object, and its response attribute holds the matching response object.

You can see the attributes of the request object below:

| Attribute | Description |
|---|---|
| body | Returns the request body as a byte string |
| cert | Dictionary-format SSL certificate information |
| date | Time when the request was made |
| headers | Dictionary of request headers |
| host | Request host |
| method | HTTP method |
| params | Dictionary of parameters |
| path | Request path |
| querystring | Query string |
| response | Response object associated with the request |
| url | Request URL |
| ws_messages | For WebSocket handshake requests, this is a list of any messages sent and received |

The properties of the response object are as follows:

| Attribute | Description |
|---|---|
| body | Returns the response body as a byte string |
| date | Time the response was received |
| headers | Dictionary of response headers |
| reason | Reason phrase |
| status_code | Status code of the response |
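To see these attributes in use, the sketch below walks a list of captured requests and reports the method, URL, and status code of each. With a live Selenium Wire driver, you would pass driver.requests. Here, summarize_requests is a hypothetical helper, and the stand-in objects built with SimpleNamespace are assumptions that only mimic the attributes listed above, so the example runs without a browser.

```python
from types import SimpleNamespace
from typing import Iterable, List, Tuple


def summarize_requests(requests: Iterable) -> List[Tuple[str, str, int]]:
    """Return (method, url, status_code) for every request that received a response."""
    summary = []
    for request in requests:
        # request.response is None when no response was captured
        if request.response is not None:
            summary.append((request.method, request.url, request.response.status_code))
    return summary


if __name__ == "__main__":
    # Stand-ins for the entries of driver.requests (illustrative values)
    fake_requests = [
        SimpleNamespace(
            method="GET",
            url="https://scrapeme.live/shop/",
            response=SimpleNamespace(status_code=200),
        ),
        SimpleNamespace(method="GET", url="https://scrapeme.live/pending", response=None),
    ]
    for method, url, status in summarize_requests(fake_requests):
        print(method, url, status)
```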

Avoid Being Blocked while Using Selenium Wire Proxy in Python

Unfortunately, using Selenium Wire in Python to scrape websites can also get your scraper blocked. However, there are ways to avoid that, so let's explore them!

Use a Proxy with Selenium Wire

A proxy acts as a middleman between your machine and the website you're trying to scrape. You can hide your IP address and avoid detection by routing traffic through it.

Use a proxy with Selenium Wire by grabbing a free proxy for web scraping and specifying the proxy option in the seleniumwire_options dictionary. Update your script like this:

# ...

PROXY = 'http://61.28.233.217:3128'

seleniumwire_options = {
    'proxy': {
        'http': PROXY,
        'https': PROXY,
    },
}

# Creates an instance of the chrome driver (browser)
driver = webdriver.Chrome(
    # seleniumwire options
    seleniumwire_options=seleniumwire_options,
)

# Hit target site
driver.get('https://httpbin.org/anything')

body = driver.find_element(By.TAG_NAME, 'body')
print(body.text)

# ...

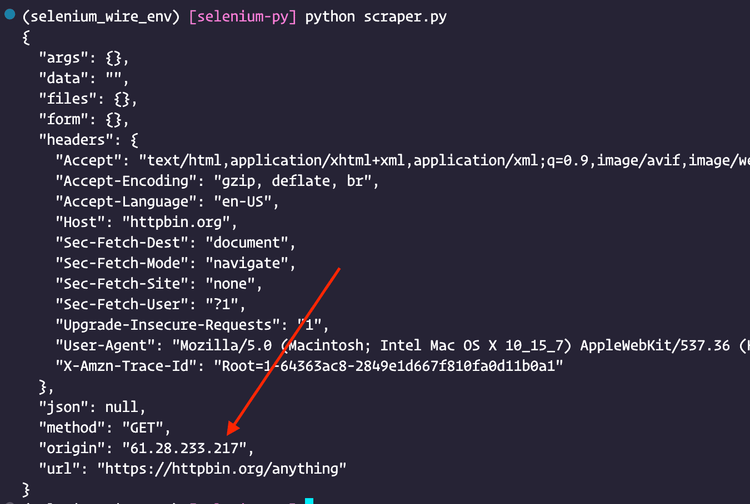

We've replaced the target site with HTTPBin, which returns some information about the clients that make requests to it, including their IP.

The proxy specified in PROXY is free and may not work for you. If so, get a fresh one from a provider like Free Proxy List using this format:

<PROXY_PROTOCOL>://<PROXY_IP_ADDRESS>:<PROXY_PORT>

Run the script, and you'll get an IP address different from your machine's.

Proxy Authentication in Selenium Wire

Some proxies require credentials before granting access. You can perform proxy authentication with Selenium Wire by adding the username and password using the format below:

<PROXY_PROTOCOL>://<USERNAME>:<PASSWORD>@<PROXY_IP_ADDRESS>:<PROXY_PORT>

The updated script with the credentials will look like this:

# ...

PROXY = 'http://<USERNAME>:<PASSWORD>@<PROXY_IP_ADDRESS>:3128'

# ...
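Credentials that contain characters such as @ or : can make a hand-written proxy URL ambiguous. The sketch below builds the URL from its parts and percent-encodes the username and password. build_proxy_url is a hypothetical helper and the address in the example is a documentation placeholder. Percent-encoding is the standard way to embed reserved characters in a URL, but it's an assumption that your proxy and Selenium Wire version accept encoded credentials, so verify that before relying on it.

```python
from typing import Optional
from urllib.parse import quote


def build_proxy_url(
    protocol: str,
    host: str,
    port: int,
    username: Optional[str] = None,
    password: Optional[str] = None,
) -> str:
    """Build <protocol>://[<username>[:<password>]@]<host>:<port>."""
    credentials = ""
    if username is not None:
        # safe="" forces reserved characters such as '@' and ':' to be encoded
        credentials = quote(username, safe="")
        if password is not None:
            credentials += ":" + quote(password, safe="")
        credentials += "@"
    return f"{protocol}://{credentials}{host}:{port}"


if __name__ == "__main__":
    # 203.0.113.10 is a placeholder address reserved for documentation
    print(build_proxy_url("http", "203.0.113.10", 3128, "user", "p@ss:word"))
```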

Error: Selenium Wire with Proxy Is Not Working

In certain scenarios, your proxy may not work with Selenium Wire, and you'll see the common error ERR_TUNNEL_CONNECTION_FAILED. In that case, either your proxy isn't running or isn't reachable from your network, or your proxy configuration is incorrect.

Here's what you can do to fix it:

- Start by checking that the proxy is active, for example by configuring it in a browser and loading a page. If it isn't, check that the proxy's IP and port are correct and that no firewall is blocking your connection to the proxy server.

- If you're still experiencing errors, you'll need to use a different proxy server or network.
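The first check above can be approximated from Python before launching a browser. The following is a minimal sketch that only tests whether a TCP connection to the proxy's host and port succeeds. proxy_is_reachable is a hypothetical helper, and a successful connection doesn't prove that the proxy actually forwards traffic or accepts your credentials.

```python
import socket
from urllib.parse import urlsplit


def proxy_is_reachable(proxy_url: str, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to the proxy's host and port succeeds."""
    parts = urlsplit(proxy_url)
    if parts.hostname is None or parts.port is None:
        raise ValueError(f"proxy URL needs a host and a port: {proxy_url!r}")
    try:
        with socket.create_connection((parts.hostname, parts.port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures
        return False


if __name__ == "__main__":
    PROXY = "http://61.28.233.217:3128"  # the free proxy used earlier; it may be offline
    print("reachable" if proxy_is_reachable(PROXY) else "not reachable")
```

If this returns False, there's no point in starting the browser with that proxy; switch to another one first.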

Why You Need Premium Proxies

Using free proxies has some important downsides: they're unreliable, insecure, and sometimes malicious. They're also often overloaded, resulting in poor performance. That's where premium proxies for web scraping come in.

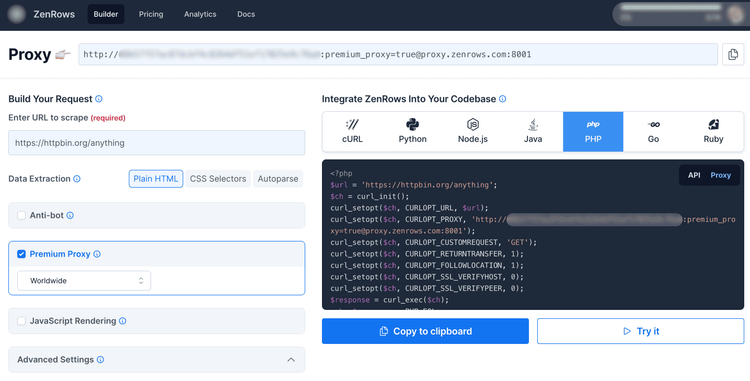

Popular solutions like ZenRows provide rotating proxies at an amazing rate. You can try it with 1,000 free credits now. Your overall experience will be notably better, and you won't get blocked easily. Also, it comes with a complete toolkit to bypass anti-scraping measures.

Head over to ZenRows and sign up for a free trial. Once on the app home, activate the premium proxy option as shown in the screenshot below and copy the proxy URL you see on the bar on the top.

Update the PROXY variable with the URL you copied to get a different IP whenever you run the script:

# ...

PROXY = 'http://<YOUR_ZENROWS_API_KEY>:<PASSWORD>@<ZENROWS_PROXY_HOST>:8001'

# ...

ZenRows is more effective than free proxies, scales easily, and is cheaper than traditional proxy providers, so you'll have a higher success rate when scraping.

Customize Headers in Selenium Wire

Using default headers while web scraping is likely to get you blocked, mostly because of the User-Agent string. But as Selenium Wire enables us to intercept requests, we can set a new custom UA for each request.

To do that, update your script with the code below:

# ...

# Creates an instance of the chrome driver (browser)
driver = webdriver.Chrome(
    # seleniumwire options
    seleniumwire_options={},
)

CUSTOM_UA = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36'
SEC_CH_UA = '"Google Chrome";v="112", " Not;A Brand";v="99", "Chromium";v="112"'
REFERER = 'https://google.com'

def request_interceptor(request):
    # Delete previous UA
    del request.headers["user-agent"]
    # Set new custom UA
    request.headers["user-agent"] = CUSTOM_UA
    # Delete previous Sec-CH-UA
    del request.headers["sec-ch-ua"]
    # Set Sec-CH-UA
    request.headers["sec-ch-ua"] = SEC_CH_UA
    # Set referer
    request.headers["referer"] = REFERER

driver.request_interceptor = request_interceptor

# Hit target site
driver.get('https://httpbin.org/anything')

# ...

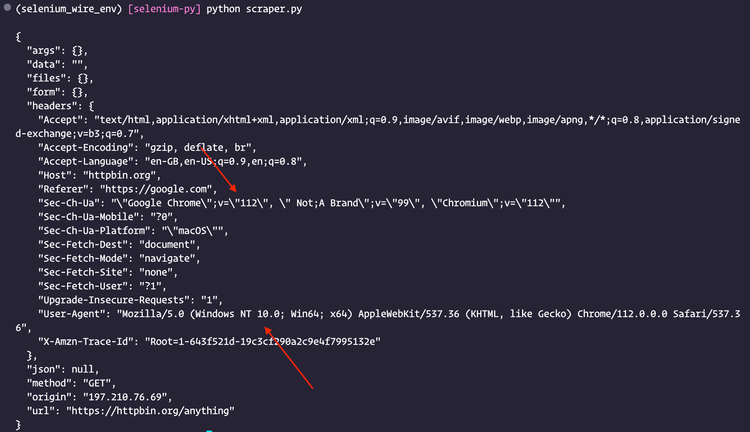

We also set sec-ch-ua so that the client hints match the new UA, and referer so that requests appear to arrive from Google. You can learn more about User-Agents for web scraping in our in-depth tutorial.

Run the script, and you'll see that your UA will change to what you set it to.
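The delete-then-set pattern matters because assigning to a header that already exists adds a duplicate rather than replacing it. The sketch below generalizes the interceptor to any set of headers and demonstrates it offline. make_header_interceptor is a hypothetical helper, and the stand-in request uses email.message.Message for its headers on the assumption that it behaves like Selenium Wire's header object in this respect.

```python
from email.message import Message
from types import SimpleNamespace
from typing import Callable, Dict


def make_header_interceptor(overrides: Dict[str, str]) -> Callable:
    """Return an interceptor that replaces each header named in overrides."""

    def interceptor(request) -> None:
        for name, value in overrides.items():
            # Delete first: assigning to an existing header would add a duplicate
            del request.headers[name]
            request.headers[name] = value

    return interceptor


if __name__ == "__main__":
    # Offline stand-in for an intercepted request (illustrative values)
    headers = Message()
    headers["user-agent"] = "HeadlessChrome"
    fake_request = SimpleNamespace(headers=headers)

    interceptor = make_header_interceptor(
        {"user-agent": "Custom UA", "referer": "https://google.com"}
    )
    interceptor(fake_request)
    print(fake_request.headers.items())
```

With a real driver, you would assign the result to driver.request_interceptor, as in the script above.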

Add Undetected ChromeDriver to Selenium Wire

Undetected ChromeDriver is a patched ChromeDriver that avoids popular bot detection mechanisms websites use to identify and block automated traffic. Luckily, Selenium Wire integrates with Undetected ChromeDriver when it's installed in the same environment.

Let's install the patched driver:

pip install undetected-chromedriver

Now, update your current driver with the patched one:

# ...

# Import Selenium Wire's wrapper around Undetected ChromeDriver
import seleniumwire.undetected_chromedriver as uc

# Creates an instance of the chrome driver (browser)
driver = uc.Chrome(
    # seleniumwire options
    seleniumwire_options={},
)

# Hit target site
driver.get('https://scrapeme.live/shop/')

body = driver.find_element(By.TAG_NAME, 'body')
print(body.text)

driver.quit()

Your script will work as before but with a set of bot detection bypass features.

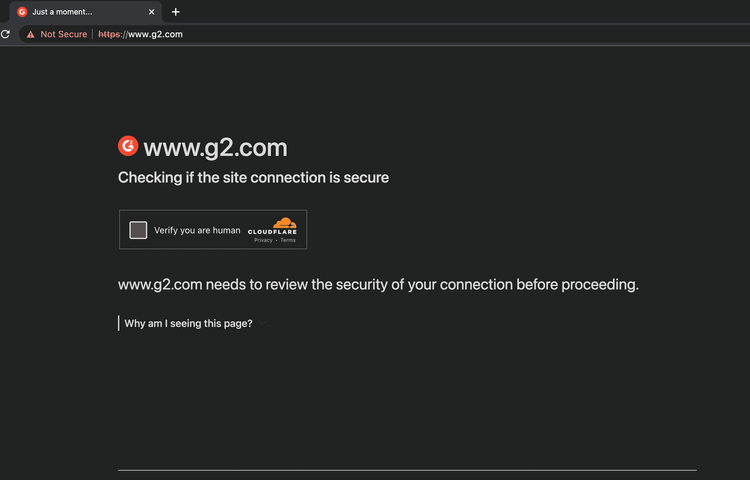

However, it'll fail on websites with advanced bot detection, like G2.

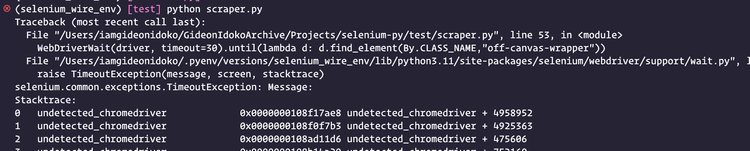

Let's see what happens when we scrape G2:

from selenium.webdriver.common.by import By
from selenium.webdriver.support.wait import WebDriverWait
# Import Selenium Wire's wrapper around Undetected ChromeDriver
import seleniumwire.undetected_chromedriver as uc

# Creates an instance of the chrome driver (browser)
driver = uc.Chrome(
    # seleniumwire options
    seleniumwire_options={},
)

# Hit target site
driver.get('https://g2.com')

# Wait for element with a class of "off-canvas-wrapper"
WebDriverWait(driver, timeout=30).until(lambda d: d.find_element(By.CLASS_NAME, "off-canvas-wrapper"))

body = driver.find_element(By.TAG_NAME, 'body')
print(body.text)

driver.quit()

G2 detected us as a bot, so the script never got past the human verification page, and the scraper failed once the timeout was reached.

So, a better way to get around this is to use ZenRows.

Here's what you need to do.

Start by installing requests:

pip install requests

Then, implement the following script (the code is auto-generated by ZenRows on its app home when activating the premium proxy, anti-bot and JS rendering features):

import requests

apikey = '<YOUR_ZENROWS_API_KEY>'
zenrows_base_url = 'https://api.zenrows.com/v1/'
target_url = 'https://g2.com'

params = {
    'apikey': apikey,
    'url': target_url,
    'antibot': True,
    'premium_proxy': True,
    'js_render': True,
    # Wait for an element with the class "off-canvas-wrapper", which is on the homepage
    'wait_for': '.off-canvas-wrapper'
}

response = requests.get(zenrows_base_url, params=params)
print(response.text)  # G2 home page HTML

Update <YOUR_ZENROWS_API_KEY> with your ZenRows API key and run the script.

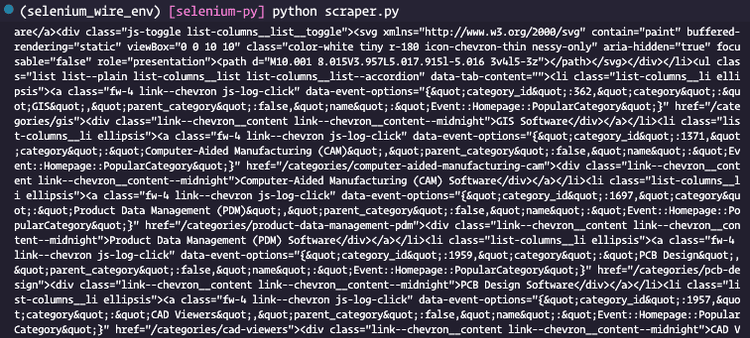

Congrats! You're able to scrape the page now:

Optimize Selenium Wire

Intercepting traffic lets you inspect the requests and responses that your browser sends and receives. That's useful for optimizing Selenium Wire, which comes down to balancing what you intercept against how the browser is configured.

Earlier, we intercepted requests to customize headers. Since we're only scraping text, we can use the same mechanism to block assets such as images.

Update your script as shown below to speed up scraping by aborting image requests:

# ...

# Creates an instance of the chrome driver (browser)
driver = webdriver.Chrome(
    # seleniumwire options
    seleniumwire_options={},
)

def request_interceptor(request):
    # Block image assets
    if request.path.endswith(('.png', '.jpg', '.gif')):
        request.abort()

driver.request_interceptor = request_interceptor

# Hit target site
driver.get('https://scrapeme.live/shop/')

body = driver.find_element(By.TAG_NAME, 'body')
print(body.text)

# ...
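The interceptor above misses uppercase extensions and formats such as .jpeg or .webp. As a sketch of one way to widen it, the factory below takes the list of extensions as a parameter and compares paths case-insensitively. make_asset_blocker, IMAGE_EXTENSIONS, and the FakeRequest stand-in are hypothetical names introduced for illustration; only request.path and request.abort() come from Selenium Wire.

```python
from typing import Callable, Iterable

# Assumed list of image extensions; adjust it to the assets your target serves
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg", ".gif", ".webp", ".svg")


def make_asset_blocker(extensions: Iterable[str] = IMAGE_EXTENSIONS) -> Callable:
    """Return an interceptor that aborts requests whose path ends with a listed extension."""
    suffixes = tuple(ext.lower() for ext in extensions)

    def interceptor(request) -> None:
        if request.path.lower().endswith(suffixes):
            request.abort()

    return interceptor


class FakeRequest:
    """Offline stand-in that mimics the two members the interceptor uses."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.aborted = False

    def abort(self) -> None:
        self.aborted = True


if __name__ == "__main__":
    blocker = make_asset_blocker()
    for path in ("/shop/", "/images/Logo.PNG"):
        request = FakeRequest(path)
        blocker(request)
        print(path, "blocked" if request.aborted else "allowed")
```

With a real driver, you would set driver.request_interceptor = make_asset_blocker() before calling driver.get().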

Conclusion

In this tutorial, we covered the fundamentals of working with Selenium Wire. Now, you know:

- How to perform requests with Selenium Wire.

- How to use premium proxies and why they help.

- How to customize headers in Selenium Wire.

- How to integrate Undetected ChromeDriver and a better alternative.

- How to optimize Selenium Wire.

You can visit the official documentation to learn more about it.

Although the library helps intercept and modify network requests and responses, as well as simulate different scenarios, it doesn't work well on sites with advanced bot detection. A more trustworthy Selenium Wire alternative is ZenRows. Sign up for a free trial today!

Frequent Questions

What Is The Difference between Selenium and Selenium Wire?

The difference between Selenium and Selenium Wire is that base Selenium is a tool for automating web browsers, while Selenium Wire is a Python library that extends Selenium's Python bindings. It adds the ability to intercept and manipulate the browser's network traffic.
