Were you blocked again while web scraping? Chances are HTTP headers have something to do with that. In this guide, you'll learn how to optimize your requests to prevent your bot from being stopped. And for those who aren't very familiar with the topic, we'll cover everything from the ground up.

Let's dive in!

What Are HTTP Headers in Web Scraping

Headers are key-value pairs of information sent between clients and servers using the HTTP protocol. They contain data about the request and response, like the encoding, content language, and request status.

Here's a list of the most common HTTP headers for web scraping:

| Header | Sample value |
|---|---|
| User-Agent | Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0 |
| Accept-Language | en-US,en;q=0.5 |
| Accept-Encoding | gzip, deflate, br |
| Accept | text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8 |
| Referer | http://www.google.com/ |

Types of HTTP Headers

Requests and responses contain different header data. Let's learn about that!

Request Headers

In an HTTP transaction, the client (typically a web browser) sends request headers along with each request. They identify the request's source and include several details that help the server understand the client's capabilities and decide how to respond.

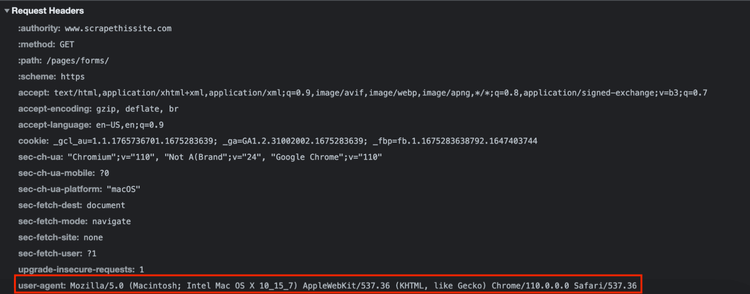

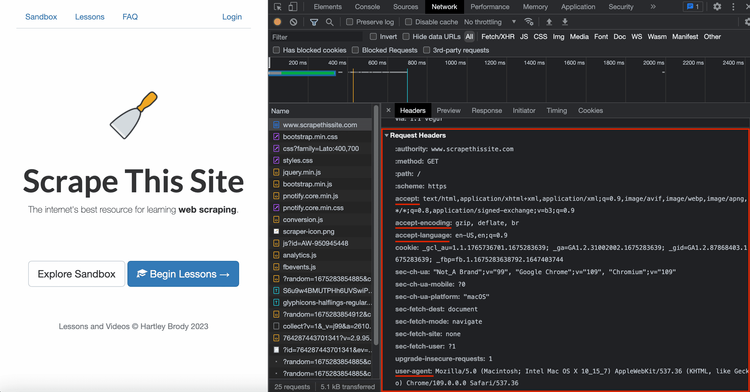

For instance, by checking the User-Agent in the request headers, the server knows if the user is accessing from a phone and serves the website's mobile version. Take a look at the example request headers below.

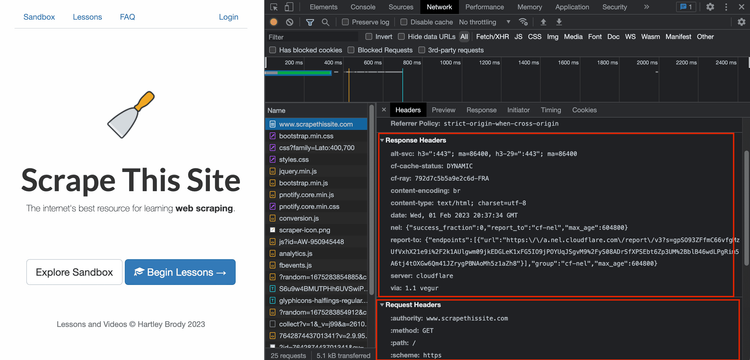
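As a rough illustration of that server-side check, the sketch below guesses whether a request comes from a phone by looking for the `Mobile` token in the User-Agent string. The function name `is_mobile` and the single-token heuristic are assumptions made for this example; real servers typically rely on much more thorough detection.

```python
def is_mobile(user_agent: str) -> bool:
    """Hypothetical helper: treat any UA containing the 'Mobile' token as a phone."""
    return "mobile" in user_agent.lower()


desktop_ua = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0"
phone_ua = (
    "Mozilla/5.0 (Linux; Android 11; SAMSUNG SM-G973U) AppleWebKit/537.36 "
    "(KHTML, like Gecko) SamsungBrowser/14.2 Chrome/87.0.4280.141 Mobile Safari/537.36"
)

print(is_mobile(desktop_ua))  # False
print(is_mobile(phone_ua))    # True
```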

Response Headers

The web server sends response headers as part of its reply to the client's request. They describe the response and contain data such as the connection type, the content type, and the encoding format. The response also carries a status code, which signals an error if the request is unsuccessful.

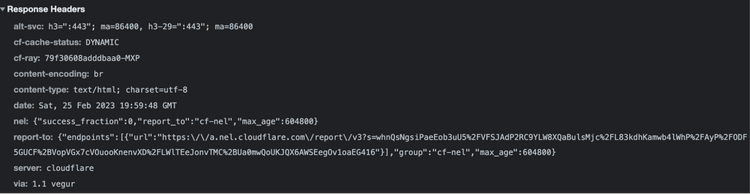

For instance, a response header such as Server: cloudflare shows that the response was served through Cloudflare, a popular service that includes anti-bot protection.

Note: You should be aware that websites also use response headers to set unique authentication cookies.

Since request headers are the only ones we can modify, we'll focus on them in this article.

Common HTTP Headers in Web Scraping

Some common HTTP headers are more important than others for web scraping. Next, we'll see the ones that are critical for our requests.

1. User-Agent

The User-Agent request header provides essential information about your software, including the operating system, the browser you use and its version, and more.

Mozilla/5.0 (Linux; Android 11; SAMSUNG SM-G973U) AppleWebKit/537.36 (KHTML, like Gecko) SamsungBrowser/14.2 Chrome/87.0.4280.141 Mobile Safari/537.36

In the example above, the user is on Android 11 and browses with Samsung Browser.

Web servers use the User-Agent header to identify the client behind each request. Sending many requests with an identical User-Agent could make the server suspect you're a bot.

To avoid being blocked, rotating your User-Agent is important. But a common mistake is to create new ones by editing parts of an existing UA without checking that the result could belong to a real browser, which will flag you as a bot. You might find it helpful to check out our list of best user agents for web scraping.
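A minimal sketch of User-Agent rotation could look like the following. It assumes a small pool made of the two real UA strings that appear in this article; the names `USER_AGENTS` and `pick_user_agent` are hypothetical, and a production pool would need many more genuine, current entries.

```python
import random

# Real User-Agent strings taken from this article; extend the pool with other
# genuine, up-to-date browser UAs rather than hand-edited ones.
USER_AGENTS = [
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",
]


def pick_user_agent(rng=random):
    """Return one User-Agent from the pool, chosen at random."""
    return rng.choice(USER_AGENTS)


headers = {"User-Agent": pick_user_agent()}
print(headers["User-Agent"])
```

Passing a seeded `random.Random` instance as `rng` makes the choice reproducible, which can help when debugging a scraper.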

2. Accept-Language

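The Accept-Language request header tells the web server which languages the client understands and in which order of preference, so the server can prepare the response accordingly. Languages are given as language tags built on ISO 639-1 codes, each optionally followed by a q weight between 0 and 1 (a missing weight counts as 1).

Accept-Language: da, en-gb;q=0.8, en;q=0.7

In this case, the order is: Danish, British English, English.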
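To make the preference order concrete, here is a small sketch of how a server might rank the languages in an Accept-Language value. The function `parse_accept_language` is a hypothetical helper written for this example, not part of any library, and it only handles the simple `tag;q=weight` form.

```python
def parse_accept_language(value: str) -> list:
    """Split an Accept-Language value into (tag, weight) pairs, best first."""
    parsed = []
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue
        tag, _, params = item.partition(";")
        weight = 1.0  # a missing q parameter means full weight
        params = params.strip()
        if params.startswith("q="):
            try:
                weight = float(params[2:])
            except ValueError:
                weight = 0.0  # assumption: treat a malformed weight as lowest priority
        parsed.append((tag.strip(), weight))
    # sorted() is stable, so equal weights keep the order they were written in
    return sorted(parsed, key=lambda pair: pair[1], reverse=True)


print(parse_accept_language("en-US,en;q=0.5"))
# [('en-US', 1.0), ('en', 0.5)]
print(parse_accept_language("en;q=0.7, da, en-gb;q=0.8"))
# [('da', 1.0), ('en-gb', 0.8), ('en', 0.7)]
```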

Make sure the selected language set matches the target data you want to extract from a domain. Additionally, matching the IP location and the language used might help you stay below the radar.

3. Accept-Encoding

The Accept-Encoding request header tells the web server which compression algorithms the client supports, so the server can pick one of them to compress the response.

Accept-Encoding: gzip, deflate, br

That's a mutual win if the server supports it: you still receive the information you want, just in compressed form, and the website's infrastructure uses fewer resources. Sending this header the way a browser does also helps you stand out less.
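The saving is easy to see with Python's standard `gzip` module. This is only a sketch: the payload is made up, and gzip stands in for the other algorithms because Brotli (`br`) isn't part of the standard library.

```python
import gzip

# A repetitive HTML-like payload, standing in for a real response body.
body = b"<li class='item'>Sample product row</li>\n" * 200

compressed = gzip.compress(body)        # roughly what a server sends with Content-Encoding: gzip
restored = gzip.decompress(compressed)  # what the client does before parsing

print(len(body), len(compressed))
print(restored == body)  # True
```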

4. Accept

The Accept request header tells the web server which data formats (media types) it can return to the client.

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8

It's a frequent mistake to forget to configure this header, or to configure it incorrectly, while web scraping, which increases your chances of getting blocked.

Note: Write each media type as type/subtype (for example, text/html) and separate multiple media types with commas.

5. Referer

The Referer request header tells the server which web page the client visited previously. That may seem unimportant, but a missing or implausible Referer may signal non-human behavior and get you blocked.

Referer: https://www.bbc.com/news/entertainment-arts-64759120
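One way to keep the Referer plausible is to carry the previously visited URL into each new request. The sketch below assumes a hypothetical helper, `with_referers`, and an assumed search-engine entry point for the first request; the crawl path is only illustrative.

```python
def with_referers(urls, first_referer="https://www.google.com/"):
    """Pair each URL with headers whose Referer is the page visited just before it.

    `first_referer` is an assumed entry point (a search engine) for the first request.
    """
    previous = first_referer
    plan = []
    for url in urls:
        plan.append((url, {"Referer": previous}))
        previous = url  # the page we just "visited" becomes the next Referer
    return plan


# Hypothetical crawl path, used only for illustration.
pages = [
    "https://www.bbc.com/news",
    "https://www.bbc.com/news/entertainment-arts-64759120",
]
for url, headers in with_referers(pages):
    print(url, "<-", headers["Referer"])
```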

Other Relevant HTTP Headers

Some other web headers will be helpful for web scraping in certain circumstances, even if they are less popular than the ones introduced above. Let's see a few of them.

Upgrade-Insecure-Requests

The Upgrade-Insecure-Requests header tells the server that the client prefers an encrypted and authenticated response. If your scraper encounters any difficulty with SSL, dropping this header may help.

Sec-Fetch

The Sec-Fetch headers are a family of headers that describe the context in which a request was made. The website may analyze these headers to identify web scrapers, so you may get blocked if your configuration is wrong.

Here are some helpful examples with their respective uses in web scraping:

- Sec-Fetch-Mode: It specifies the mode of the request. It's best to use navigate for direct requests and same-origin or cors for dynamic data requests.

- Sec-Fetch-Site: It indicates the relationship between the origin that initiated the request and the origin of the requested resource. You should use none for direct requests and same-site for dynamic data requests, such as XHR-type ones.

- Sec-Fetch-Dest: It indicates the requested document type. It's usually document for direct HTML requests and empty for dynamic data requests.

Note that these are the default values when working with HTTPS websites.
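The recommended values can be kept as presets and merged into a request's headers. This is a sketch under assumptions: the preset names (`navigation`, `xhr`) and the helper `add_sec_fetch` are invented for the example, and `cors` is picked for the dynamic case although `same-origin` may fit better for some sites.

```python
# Values taken from the list above; "empty" is the literal header value
# browsers send for Sec-Fetch-Dest on fetch/XHR calls.
SEC_FETCH_PRESETS = {
    "navigation": {
        "Sec-Fetch-Mode": "navigate",
        "Sec-Fetch-Site": "none",
        "Sec-Fetch-Dest": "document",
    },
    "xhr": {
        "Sec-Fetch-Mode": "cors",
        "Sec-Fetch-Site": "same-site",
        "Sec-Fetch-Dest": "empty",
    },
}


def add_sec_fetch(headers: dict, kind: str) -> dict:
    """Return a copy of `headers` extended with the preset for `kind`."""
    if kind not in SEC_FETCH_PRESETS:
        raise ValueError(f"unknown request kind: {kind!r}")
    return {**headers, **SEC_FETCH_PRESETS[kind]}


print(add_sec_fetch({"Accept": "text/html"}, "navigation"))
```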

How to Check Your HTTP Headers?

"What are my HTTP headers?", you may be wondering.

There are several methods you can use to find them out. We'll use the browser's Developer Tools, with Scrape This Site as the example:

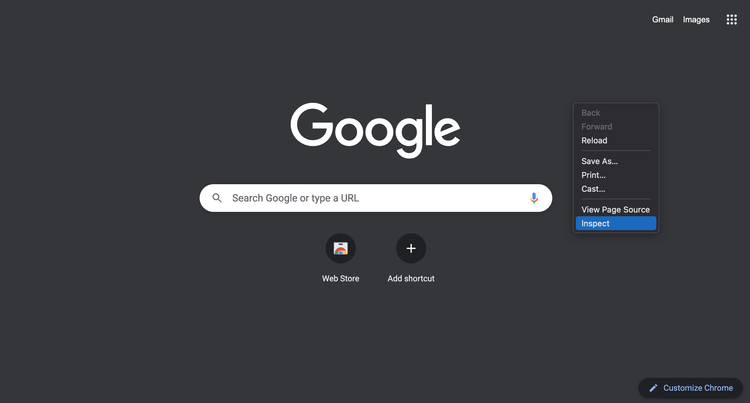

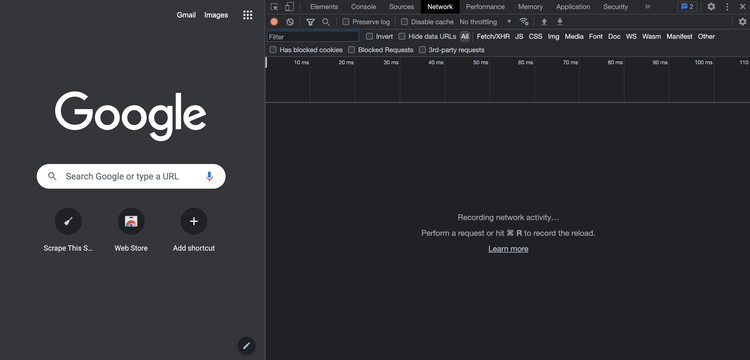

- Open a new tab, right-click, and select "Inspect" from the drop-down menu.

To simulate the browsing behavior of a typical user, specify a random website as the Referer before starting a session, and remember to set it up for your next requests. Doing so increases the organic appearance of your scraper's traffic.

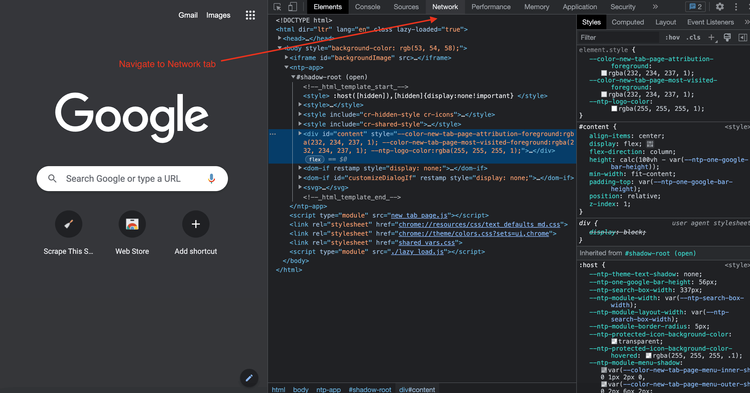

- Navigate to the Network tab.

You should see something similar to the image below.

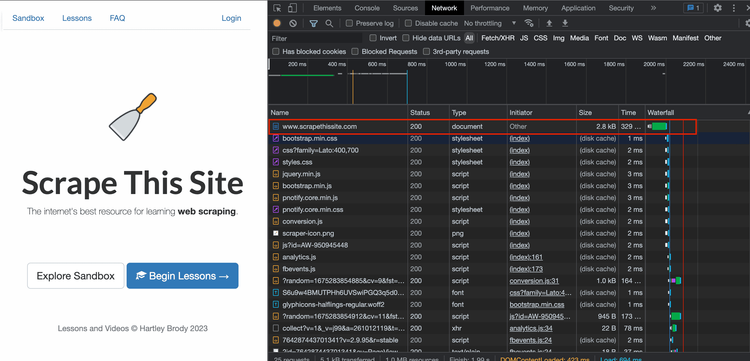

- Navigate to the website by pasting the URL to the address bar at the top. You'll see the network traffic between your browser and web server in the Network tab.

- Click on the first request in the HTML document.

- Review the browser's headers. You'll see the HTTP response and request headers list on the right.

The mentioned headers will be in the Request Headers section.

Now you can copy these request headers and send them with your own requests to imitate a real user as closely as possible. But be careful: headers like Cookie contain session and authentication cookies that identify unique users, so reusing them may get your scraper blocked.
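A simple precaution is to strip session-bound entries from a copied header set before reusing it. In this sketch, the helper name `drop_session_headers` and the list of names in `SESSION_BOUND` are assumptions made for illustration, and the cookie value is a made-up placeholder.

```python
# Header names that tie a request to one browser session (assumed list for this sketch).
SESSION_BOUND = {"cookie", "authorization"}


def drop_session_headers(headers: dict) -> dict:
    """Return a copy of `headers` without session-specific entries (case-insensitive)."""
    return {name: value for name, value in headers.items() if name.lower() not in SESSION_BOUND}


copied = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0",
    "Accept-Language": "en-US,en;q=0.5",
    "Cookie": "sessionid=placeholder",  # made-up value, not a real cookie
}
print(drop_session_headers(copied))
```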

You can use the Requests library in Python and pass your custom headers in the GET request.

    import requests

    # store the headers as a dict
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
        'Accept-Language': 'en-US,en;q=0.9',
        # note: decoding 'br' responses may require a Brotli package to be installed
        'Accept-Encoding': 'gzip, deflate, br',
    }

    url = 'https://www.scrapethissite.com/'

    # use the `headers` argument to pass the custom headers
    resp = requests.get(url, headers=headers)

    # output the response's headers
    print(resp.headers)

You can see the output below.

    {'Content-Type': 'text/html; charset=utf-8',
     'Transfer-Encoding': 'chunked',
     'Connection': 'keep-alive',
     'Via': '1.1 vegur',
     'CF-Cache-Status': 'DYNAMIC',
     'Report-To': '{"endpoints":[{"url":"https:\\/\\/a.nel.cloudflare.com\\/report\\/v3?s=CFawwmM%2Belnz%2BG1FZcDw%2Fvj0O75hv4fhnjTYUN6hhXv%2Fx7sEmxmv18YPs7bug%2FboxFmhi73ODJKDPtqWtIMXLin2S51TowV4Add1hcbn53kEf8kWhRdeRJRwnP9OrQScI1XVOkoIHdLj"}],"group":"cf-nel","max_age":604800}',
     'NEL': '{"success_fraction":0,"report_to":"cf-nel","max_age":604800}',
     'Server': 'cloudflare',
     'CF-RAY': '791c3dabe8b19a1b-FRA',
     'Content-Encoding': 'br',
     'alt-svc': 'h3=":443"; ma=86400, h3-29=":443"; ma=86400'}
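Response headers like these can also hint at what sits in front of the site. The sketch below checks for two Cloudflare markers visible in the output above (`Server: cloudflare` and `CF-RAY`). The function name and the heuristic itself are assumptions; other setups may expose different headers or none at all.

```python
def served_by_cloudflare(response_headers: dict) -> bool:
    """Heuristic (assumed for this sketch): look for Cloudflare's Server value or a CF-RAY header."""
    lowered = {name.lower(): value for name, value in response_headers.items()}
    return lowered.get("server", "").lower() == "cloudflare" or "cf-ray" in lowered


sample = {
    "Content-Type": "text/html; charset=utf-8",
    "Server": "cloudflare",
    "CF-RAY": "791c3dabe8b19a1b-FRA",
}
print(served_by_cloudflare(sample))                          # True
print(served_by_cloudflare({"Content-Type": "text/html"}))  # False
```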

Extra tip: You should rotate your headers when you're done with a session, because making many requests with the same headers is likely to get you blocked.

Why Are HTTP Headers Important?

When it comes to web scraping, HTTP request headers are crucial because websites use them to determine if the visitor is a web scraper or not.

Many websites track and analyze HTTP headers, so a wrong configuration may get your web scraper blocked. Thus, it's important to use unique and legitimate headers to be seen as a human user.

But of course, websites can still block you if too many requests come from the same IP address. To avoid that, you can use proxy servers, which act as a middleman between your client and the target website. A great combination is rotating headers along with rotating proxies. Check out our tutorial on rotating proxies in Python to implement this technique!
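As a rough sketch of that combination, the snippet below pairs a randomly chosen header profile with the next proxy in a round-robin cycle. The header values come from this article; the proxy addresses are placeholders on example.com, and `next_request_settings` is a hypothetical helper.

```python
import itertools
import random

# Header profiles built from values shown in this article.
HEADER_PROFILES = [
    {
        "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0",
        "Accept-Language": "en-US,en;q=0.5",
    },
    {
        "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
                      "(KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",
        "Accept-Language": "en-US,en;q=0.9",
    },
]

# Placeholder proxy addresses; replace them with proxies you actually control or rent.
PROXIES = ["http://proxy1.example.com:8080", "http://proxy2.example.com:8080"]

proxy_cycle = itertools.cycle(PROXIES)


def next_request_settings() -> dict:
    """Combine a random header profile with the next proxy in round-robin order."""
    proxy = next(proxy_cycle)
    return {
        "headers": dict(random.choice(HEADER_PROFILES)),
        "proxies": {"http": proxy, "https": proxy},
    }


settings = next_request_settings()
print(settings["proxies"])
```

With the Requests library, these settings could be passed as `requests.get(url, **settings)`, since `headers` and `proxies` are both keyword arguments of `requests.get`.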

Conclusion

In web scraping, HTTP request headers contain information identifying the client. To avoid being detected by the server, it's key to rotate headers and use a proxy to hide your IP address.

But that can be rather challenging to set up. Fortunately, you can save yourself the trouble and use ZenRows' fully-fledged web scraping API. It'll implement these techniques for you, and you can try it for free.

Frequent Questions

What Should HTTP Headers Include?

HTTP headers play a crucial role in controlling the request and response behavior. Some important headers to include when web scraping are:

- User-Agent.

- Accept.

- Referer.

- Cookie.

- Cache-Control.

- Connection.

How to Differentiate Good Sets of Headers From Bad Ones?

Good headers make the scraper look like a real user. They provide the necessary authentication, HTTP cookies, and communication options, which ensures effective communication with the target server.

How to Use HTTP Headers Effectively?

When web scraping, your HTTP headers should be identical to a regular user's to minimize the suspicion of the web server.

Additionally, web servers may apply rate limiting based on your IP address and HTTP headers. Therefore, you may get temporarily blocked if you send too many requests with the same HTTP headers. That's why you should use proxy servers and frequently change your HTTP headers.

Is the Order of Headers Important?

Yes, the order of headers is an important factor in identifying web scrapers because many HTTP client libraries have their own default header ordering. For instance, the Python library Requests doesn't always preserve the header order you specify, so web scrapers that use it can be detected more easily.

The HTTPX library does maintain header order, making it a safer choice for web scraping.
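Whatever client you use, it helps to build the header dict in a browser-like order first. The sketch below relies only on the fact that Python dicts keep insertion order. `BROWSER_ORDER` is an assumed order for illustration, so copy the real one from your browser's Developer Tools. Whether that order survives on the wire still depends on the HTTP client library.

```python
# Assumed target order for this sketch; copy the real order from your browser's
# Developer Tools instead of relying on this list.
BROWSER_ORDER = ["User-Agent", "Accept", "Accept-Language", "Accept-Encoding", "Referer"]


def order_like_browser(headers: dict, order=BROWSER_ORDER) -> dict:
    """Return a new dict with known headers in `order`, followed by any others."""
    by_lower = {name.lower(): name for name in headers}
    ordered = {}
    for wanted in order:
        original_name = by_lower.get(wanted.lower())
        if original_name is not None:
            ordered[original_name] = headers[original_name]
    for name, value in headers.items():
        ordered.setdefault(name, value)  # keep leftovers in their original order
    return ordered


unordered = {
    "Accept-Encoding": "gzip, deflate, br",
    "Referer": "http://www.google.com/",
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0",
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8",
}
print(list(order_like_browser(unordered)))
# ['User-Agent', 'Accept', 'Accept-Encoding', 'Referer']
```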
