While the base version of Playwright is a powerful tool for automating a web browser for web scraping, its unique properties make it easily identifiable to anti-bot systems, which gets you blocked. Fortunately, the Playwright Stealth plugin comes to the rescue by masking those unique properties.

In this tutorial, you'll learn how to scrape using Playwright Stealth in Python. Also, we'll discuss its limitations and how to overcome them.

Let's get started!

What Is Playwright Stealth?

Playwright Stealth is an add-on for the Playwright framework, ported from puppeteer-extra-plugin-stealth. It works through Playwright Extra, an open-source library that adds plugin support to Playwright, and extends your scraper so that it is less likely to get blocked.

The stealth plugin is available in both Node.js and Python versions of Playwright. So, regardless of your language preference, you can leverage the stealth functionality in your Playwright scraper.

How Playwright Stealth Works

While web scraping with Playwright gives you browser-like functionality, such as JavaScript rendering, it's still easy for websites to flag your requests as automated. That's due to specific properties that are unique to headless browsers, the most fundamental ones being the headless: true launch option and the --headless flag.

Come on, you're openly stating you aren't a human user!
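To make the leak concrete, here is a minimal sketch of the kind of check a website could run on its side. It's an illustration only, not any vendor's actual detection logic: the function name looks_automated and its inputs are hypothetical. It relies on two well-known signals: headless Chromium's default User-Agent contains the token HeadlessChrome, and automated browsers report navigator.webdriver as true.

```python
def looks_automated(user_agent: str, webdriver_flag: bool) -> bool:
    """Return True when either basic automation signal is present."""
    # Signal 1: headless Chromium advertises itself in its default User-Agent.
    headless_ua = "HeadlessChrome" in user_agent
    # Signal 2: navigator.webdriver, as reported by a script running in the page.
    return headless_ua or webdriver_flag


headless = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) HeadlessChrome/120.0.0.0 Safari/537.36"
)
regular = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)
print(looks_automated(headless, webdriver_flag=True))
print(looks_automated(regular, webdriver_flag=False))
```

Real anti-bot systems combine many more signals than these two, which is why masking a single property is rarely enough.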

Playwright Stealth works by applying modifications that suppress Playwright's default automation properties, plugging the leaks that flag you as a bot. These modifications are implemented as built-in evasion modules, which together make the browser mimic natural browsing behavior and reduce the risk of being blocked. For example:

- The iframe.contentWindow evasion fixes the HEADCHR_iframe detection by modifying window.top and window.frameElement.

- Media.codecs modifies codecs to support what Chrome uses.

- Navigator.hardwareConcurrency sets the number of logical processors to four.

- Navigator.languages modifies the languages property to allow custom languages.

- Navigator.plugin emulates navigator.mimeTypes and navigator.plugins with functional mocks to match standard Chrome used by humans.
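Conceptually, each evasion in the list above is a small piece of JavaScript injected into the page before the site's own scripts run. The sketch below shows that idea for two of the properties. It is not the plugin's real code: the helper names build_evasion_script and apply_evasions are hypothetical, and the only Playwright call it uses is page.add_init_script.

```python
import json


def build_evasion_script(hardware_concurrency: int = 4,
                         languages=("en-US", "en")) -> str:
    """Return JavaScript that overrides two navigator properties (illustrative only)."""
    overrides = {
        "hardwareConcurrency": hardware_concurrency,
        "languages": list(languages),
    }
    lines = []
    for name, value in overrides.items():
        # Redefine the getter on Navigator.prototype so page scripts read our value.
        lines.append(
            f"Object.defineProperty(Object.getPrototypeOf(navigator), {json.dumps(name)}, "
            f"{{ get: () => {json.dumps(value)} }});"
        )
    return "\n".join(lines)


async def apply_evasions(page) -> None:
    # `page` is assumed to be a playwright.async_api.Page. add_init_script runs
    # the snippet in every new document before the site's own scripts execute.
    await page.add_init_script(build_evasion_script())


print(build_evasion_script())
```

The real plugin ships many more evasions and handles edge cases this sketch ignores, so treat it as a mental model rather than a replacement.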

You're naked with Playwright's base version, so let's get some clothes now.

How to Web Scrape with Playwright Stealth in Python

Follow the next steps to web scrape using Playwright Stealth in Python, and bear in mind that the process is very similar for Node.js.

Step 1: Prerequisites

First of all, make sure you have Python installed.

Then, install Playwright using Python's package manager pip.

pip install playwright

Now, install the necessary browsers using the install command.

playwright install

Before we go into stealth mode, let's test base Playwright against a protected website: NowSecure.

Note that Playwright supports two execution modes: synchronous and asynchronous. The synchronous API is usually associated with small-scale scraping, where concurrency is not an issue. The asynchronous API is recommended when concurrency, scalability, and performance are critical factors, so that's the one we'll use in this tutorial.
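To illustrate why the asynchronous style helps at scale, here is a minimal sketch that runs several scraping jobs concurrently while capping how many run at once. The names run_limited and fake_scrape are hypothetical, and fake_scrape is only a stand-in for real Playwright work so the example runs without a browser.

```python
import asyncio


async def run_limited(jobs, limit: int = 3):
    """Run zero-argument coroutine functions concurrently, at most `limit` at once."""
    semaphore = asyncio.Semaphore(limit)

    async def guarded(job):
        async with semaphore:
            return await job()

    # gather() returns results in the same order as `jobs`
    return await asyncio.gather(*(guarded(job) for job in jobs))


async def fake_scrape(url: str) -> str:
    # Stand-in for real Playwright work (page.goto, parsing, ...)
    await asyncio.sleep(0.01)
    return f"scraped {url}"


urls = ["https://example.com/a", "https://example.com/b", "https://example.com/c"]
jobs = [lambda u=u: fake_scrape(u) for u in urls]
results = asyncio.run(run_limited(jobs, limit=2))
print(results)
```

With the synchronous API, the same three jobs would run one after another; here, up to two of them wait on the network at the same time.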

To follow along, import asyncio and async_playwright. Then, define an async function where you'll write your code, and call it with asyncio.run().

    import asyncio

    from playwright.async_api import async_playwright


    async def main():
        # Your scraping code goes here
        ...


    asyncio.run(main())

Next, launch a browser and create a new context. A context in Playwright is an isolated environment for loading and executing web pages, and you can create multiple contexts that are independent of each other. The snippet below iterates over the three browser engines Playwright supports.
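As a small illustration of that isolation, the sketch below opens several contexts on one already-launched browser, each with its own page. The helper name open_isolated_pages is hypothetical; it only relies on browser.new_context() and context.new_page().

```python
async def open_isolated_pages(browser, count: int = 2):
    """Create `count` contexts on an already-launched browser, one page each."""
    pages = []
    for _ in range(count):
        # Each context keeps its own cookies and storage
        context = await browser.new_context()
        pages.append(await context.new_page())
    return pages
```

Inside your async function, you could call it as pages = await open_isolated_pages(browser, 3) once the browser is launched; cookies set in one page's context would not be visible to the others.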

    async def main():
        # Launch the Playwright instance
        async with async_playwright() as p:
            # Iterate over the available browser types (Chromium, Firefox, WebKit)
            for browser_type in [p.chromium, p.firefox, p.webkit]:
                # Launch the browser of the current browser_type
                browser = await browser_type.launch()

                # Create new context
                context = await browser.new_context()

                # Create new page within the context
                page = await context.new_page()

Navigate to your target URL (https://nowsecure.nl/), wait for the page to load, take a screenshot to see your result, and close the browser.

                # Navigate to the desired URL
                await page.goto("https://nowsecure.nl/")

                # Wait for any dynamic content to load
                await page.wait_for_load_state("networkidle")

                # Take a screenshot of the page and save it with the browser_type name
                await page.screenshot(path=f"screenshot-{browser_type.name}.png")
                print("Screenshot captured successfully.")

                # Close the browser
                await browser.close()


    asyncio.run(main())

Putting everything together, you'll have the following script:

    import asyncio

    from playwright.async_api import async_playwright


    async def main():
        # Launch the Playwright instance
        async with async_playwright() as p:
            # Iterate over the available browser types (Chromium, Firefox, WebKit)
            for browser_type in [p.chromium, p.firefox, p.webkit]:
                # Launch the browser of the current browser_type
                browser = await browser_type.launch()

                # Create new context
                context = await browser.new_context()

                # Create new page within the context
                page = await context.new_page()

                # Navigate to the desired URL
                await page.goto("https://nowsecure.nl/")

                # Wait for any dynamic content to load
                await page.wait_for_load_state("networkidle")

                # Take a screenshot of the page and save it with the browser_type name
                # (a shared file name would be overwritten on every iteration)
                await page.screenshot(path=f"screenshot-{browser_type.name}.png")
                print("Screenshot captured successfully.")

                # Close the browser
                await browser.close()


    asyncio.run(main())

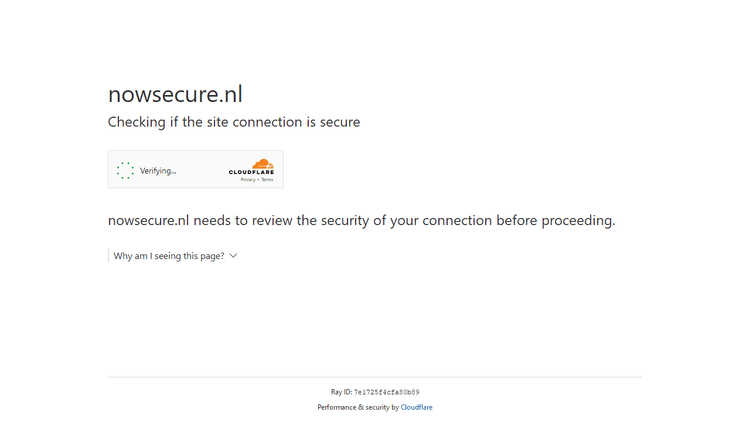

This will be your result:

The screenshot above proves your target website detected and blocked base Playwright, confirming what we've been discussing.

Now, let's try scraping the same website using Playwright Stealth.

Step 2: Install playwright-stealth

Install playwright-stealth using the following command.

pip install playwright-stealth

To configure Playwright Stealth in Python, import the plugin alongside Playwright.

from playwright_stealth import stealth_async

Your import lines should look like this:

import asyncio

from playwright.async_api import async_playwright

from playwright_stealth import stealth_async

Step 3: Scrape with the Plugin

For this step, you'll code and run a Playwright Stealth scraper.

Define an async function that launches a new Playwright instance. In this case, we'll use a Chromium browser specifically.

    async def main():
        # Launch the Playwright instance
        async with async_playwright() as playwright:
            # Launch the browser
            browser = await playwright.chromium.launch()

            # ...


    # Run the main function
    asyncio.run(main())

Now, create a new context, and within it, create a new page. Then, apply the stealth plugin to the page.

    async def main():
        async with async_playwright() as playwright:
            # ... (browser launched as in the previous snippet)

            # Create new context
            context = await browser.new_context()

            # Create new page within the context
            page = await context.new_page()

            # Apply stealth to the page
            await stealth_async(page)

            # ...


    # Run the main function
    asyncio.run(main())
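If you want to see what the plugin actually changes, one option is to read a few navigator properties from inside the page and compare the values with and without stealth applied. The sketch below is a hypothetical helper (fingerprint_report is not part of Playwright or the plugin); it only uses page.evaluate, and it should be called after page.goto so the page's scripts have been initialized.

```python
# JavaScript function evaluated inside the page; returns a plain object
FINGERPRINT_JS = """() => ({
    webdriver: navigator.webdriver,
    userAgent: navigator.userAgent,
    languages: navigator.languages,
    hardwareConcurrency: navigator.hardwareConcurrency,
    pluginCount: navigator.plugins.length,
})"""


async def fingerprint_report(page) -> dict:
    """Return a few navigator properties as seen by scripts running in the page."""
    return await page.evaluate(FINGERPRINT_JS)
```

Printing the result of await fingerprint_report(page) once in the base script and once in the stealth script gives you a quick side-by-side view of the masked properties.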

Navigate to your target URL and take a screenshot, like in the base Playwright script.

    async def main():
        async with async_playwright() as playwright:
            # ... (browser, context, and page created as in the previous snippets)

            # Navigate to the desired URL
            await page.goto("https://nowsecure.nl/")

            # Wait for any dynamic content to load
            await page.wait_for_load_state("networkidle")

            # Take a screenshot of the page
            await page.screenshot(path="nowsecure_success.png")
            print("Screenshot captured successfully.")

            # Close the browser
            await browser.close()


    # Run the main function
    asyncio.run(main())

Here's what your full code should look like as of now:

    import asyncio

    from playwright.async_api import async_playwright
    from playwright_stealth import stealth_async


    async def main():
        # Launch the Playwright instance
        async with async_playwright() as playwright:
            # Launch the browser
            browser = await playwright.chromium.launch()

            # Create new context
            context = await browser.new_context()

            # Create new page within the context
            page = await context.new_page()

            # Apply stealth to the page
            await stealth_async(page)

            # Navigate to the desired URL
            await page.goto("https://nowsecure.nl/")

            # Wait for any dynamic content to load
            await page.wait_for_load_state("networkidle")

            # Take a screenshot of the page
            await page.screenshot(path="nowsecure_success.png")
            print("Screenshot captured successfully.")

            # Close the browser
            await browser.close()


    # Run the main function
    asyncio.run(main())

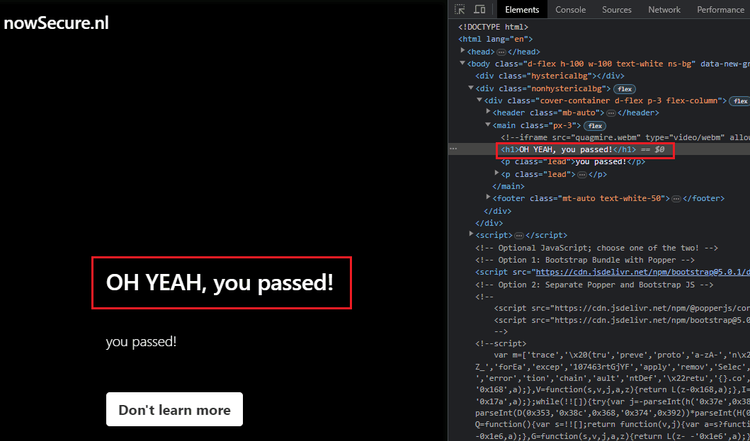

Run it, and your new screenshot should display this:

Congratulations, it works! This result shows Playwright Stealth successfully masked Playwright's automation properties. You might also want to boost your scraper's speed by blocking specific resources in Playwright.
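As a rough sketch of that resource-blocking idea, you can register a route handler that aborts requests for heavy resource types and lets everything else through. The set of blocked types and the helper names below are assumptions chosen for illustration; the Playwright calls used are page.route, route.abort, route.continue_, and request.resource_type.

```python
# Resource types to skip; adjust to what your target page really needs (assumption)
BLOCKED_TYPES = {"image", "media", "font"}


async def block_heavy_resources(route):
    """Abort requests for blocked resource types and continue all others."""
    if route.request.resource_type in BLOCKED_TYPES:
        await route.abort()
    else:
        await route.continue_()


async def enable_blocking(page) -> None:
    # Intercept every request made by the page
    await page.route("**/*", block_heavy_resources)
```

Call await enable_blocking(page) before page.goto so the handler is in place for the first request. Keep in mind that screenshots will look incomplete once images are blocked.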

Step 4: Parse with Playwright Extra Stealth

As an additional part of this tutorial, you'll learn to parse the data you're interested in with Playwright Extra Stealth.

To do that, visit your target page in a normal browser, right-click on the element you're interested in (e.g., the main heading), and select "Inspect". That'll open Chrome DevTools, which allows you to see where the content is in the HTML structure.

For this example, let's just print the h1, which will display "OH YEAH, you passed!". To achieve that, use the query_selector method to retrieve the h1 and print its text content.

    async def main():
        # Launch the Playwright instance
        async with async_playwright() as playwright:
            # ...

            # Retrieve the first h1 element on the page
            element = await page.query_selector("h1")
            if element:
                text_content = await element.inner_text()
                print(text_content)

            # ...


    # Run the main function
    asyncio.run(main())

Putting everything together, here's your complete code:

    import asyncio

    from playwright.async_api import async_playwright
    from playwright_stealth import stealth_async


    async def main():
        # Launch the Playwright instance
        async with async_playwright() as playwright:
            # Launch the browser
            browser = await playwright.chromium.launch()

            # Create new context
            context = await browser.new_context()

            # Create new page within the context
            page = await context.new_page()

            # Apply stealth to the page
            await stealth_async(page)

            # Navigate to the desired URL
            await page.goto("https://nowsecure.nl/")

            # Wait for any dynamic content to load
            await page.wait_for_load_state("networkidle")

            # Take a screenshot of the page
            await page.screenshot(path="nowsecure_success.png")

            # Retrieve the desired element (the first h1 on the page)
            element = await page.query_selector("h1")
            if element:
                text_content = await element.inner_text()
                print(text_content)

            # Close the browser
            await browser.close()


    # Run the main function
    asyncio.run(main())

And this will be your output:

OH YEAH, you passed!

Bingo! You've successfully avoided Playwright bot detection using playwright-stealth.
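If you need every matching element rather than just the first one, a small variation does the job. The sketch below is a hypothetical helper (all_texts is not a Playwright function) built on page.query_selector_all and element.inner_text.

```python
async def all_texts(page, selector: str) -> list:
    """Return the stripped text of every element matching `selector`."""
    elements = await page.query_selector_all(selector)
    return [(await element.inner_text()).strip() for element in elements]
```

For instance, await all_texts(page, "h1") would return a list with one string per h1 on the page, or an empty list when nothing matches.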

Limitations of Playwright Stealth and an Easy Solution

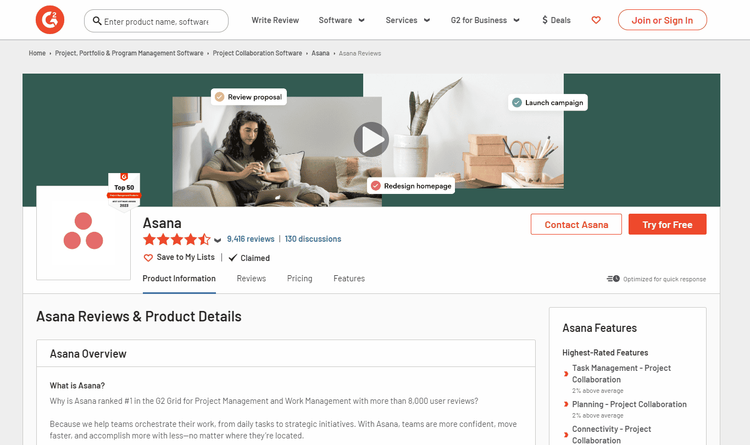

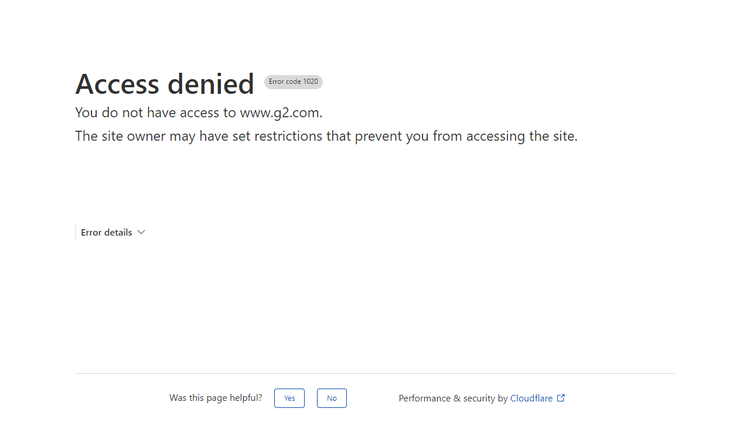

While Playwright Stealth is a powerful web scraping plugin, it has limitations: it doesn't work against more heavily protected web pages. To prove it, let's try to scrape a G2 reviews page, which is protected by advanced Cloudflare measures.

Use your Playwright Extra Stealth scraper by replacing https://nowsecure.nl/ with https://www.g2.com/products/asana/reviews as a target URL.

    import asyncio

    from playwright.async_api import async_playwright
    from playwright_stealth import stealth_async


    async def main():
        # Launch the Playwright instance
        async with async_playwright() as playwright:
            # Launch the browser
            browser = await playwright.chromium.launch()

            # Create new context
            context = await browser.new_context()

            # Create new page within the context
            page = await context.new_page()

            # Apply stealth to the page
            await stealth_async(page)

            # Navigate to the desired URL
            await page.goto("https://www.g2.com/products/asana/reviews")

            # Wait for any dynamic content to load
            await page.wait_for_load_state("networkidle")

            # Take a screenshot of the page
            await page.screenshot(path="g2_failed.png")
            print("Screenshot captured successfully.")

            # Close the browser
            await browser.close()


    # Run the main function
    asyncio.run(main())

Here's the result:

We got blocked right away…
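Rather than inspecting screenshots by hand, you can let the script flag a likely block. The following is a rough heuristic sketch, not a reliable detector: the status codes and the marker phrases are assumptions based on commonly seen challenge pages, and looks_blocked is a hypothetical helper.

```python
# Status codes often returned with block or challenge pages (assumption)
BLOCK_STATUSES = {403, 429, 503}
# Phrases commonly seen on challenge pages (assumption, not exhaustive)
CHALLENGE_MARKERS = ("just a moment", "attention required", "access denied")


def looks_blocked(status: int, html: str) -> bool:
    """Guess whether a response is a block page from its status code and HTML."""
    if status in BLOCK_STATUSES:
        return True
    lowered = html.lower()
    return any(marker in lowered for marker in CHALLENGE_MARKERS)


print(looks_blocked(403, "<title>Just a moment...</title>"))
print(looks_blocked(200, "<h1>Asana Reviews</h1>"))
```

In the Playwright script, the inputs could come from response = await page.goto(url), using response.status together with await page.content().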

But no worries! There's an ultimate solution: with ZenRows, you can fly under the radar of any anti-bot system regardless of its difficulty level.

ZenRows has rotating premium proxies that can be integrated with Playwright. However, we recommend using it as a full alternative to Playwright and its Stealth plugin because it provides the same functionality as the headless browser with less code and also gives you access to its complete toolkit to avoid getting blocked while web scraping.

Let's try ZenRows against the page where Playwright Stealth failed.

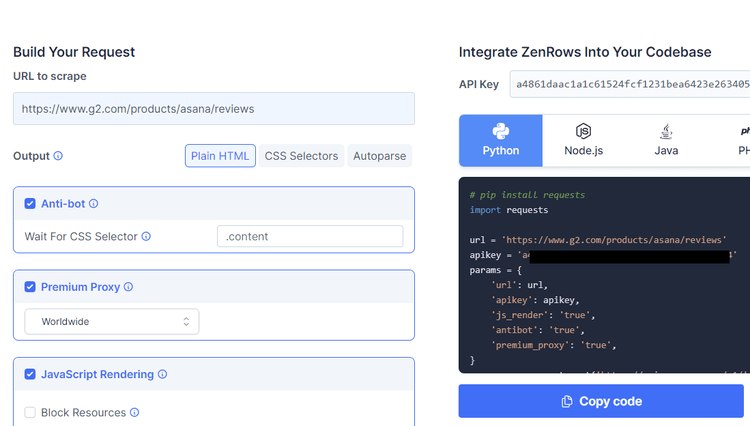

First, sign up for your free API key, and you'll get to the Request Builder page.

Enter your target URL (https://www.g2.com/products/asana/reviews), and check the boxes for JS rendering, premium proxies, and anti-bot for higher effectiveness. Then, select your programming language on the right.

That'll generate your scraper's code. Copy it to your IDE.

    import requests

    url = 'https://www.g2.com/products/asana/reviews'
    apikey = 'Your API Key'
    params = {
        'url': url,
        'apikey': apikey,
        'js_render': 'true',
        'antibot': 'true',
        'premium_proxy': 'true',
    }

    response = requests.get('https://api.zenrows.com/v1/', params=params)
    print(response.text)

You'll need to install Python Requests (or any other HTTP client) to send your scraping requests to ZenRows' API. Do that with the following command:

pip install requests
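If you'd rather not hard-code the key, a variation like the one below reads it from an environment variable and builds the request URL with the standard library only. It reuses the endpoint and parameters from the generated code above; the variable name ZENROWS_API_KEY and the helper build_zenrows_url are assumptions made for this sketch.

```python
import os
from urllib.parse import urlencode

ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/"


def build_zenrows_url(target_url: str, apikey: str) -> str:
    """Build the full API request URL with the same parameters as the generated code."""
    params = {
        "url": target_url,
        "apikey": apikey,
        "js_render": "true",
        "antibot": "true",
        "premium_proxy": "true",
    }
    # urlencode percent-encodes the target URL so it survives as a query value
    return f"{ZENROWS_ENDPOINT}?{urlencode(params)}"


# ZENROWS_API_KEY is a hypothetical variable name; fall back to the placeholder
apikey = os.environ.get("ZENROWS_API_KEY", "Your API Key")
request_url = build_zenrows_url("https://www.g2.com/products/asana/reviews", apikey)
```

You can pass request_url to requests.get (ideally with a timeout) or to any other HTTP client.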

Run your scraper, and here's your result:

    <!DOCTYPE html>
    //..
    <title>Asana Reviews 2024: Details, Pricing, & Features | G2</title>
    //..
    <h1 class="l2 pb-half inline-block">Asana Reviews & Product Details</h1>

Congratulations! How does it feel knowing you can scrape any website? Awesome, right? ZenRows makes it easy to retrieve your desired data.

Conclusion

Playwright is a popular headless browsing tool. However, its default properties make it easily detectable by target websites, so you need to use Playwright Stealth to mask those properties if you want to avoid getting blocked.

Yet, Playwright Stealth falls short against advanced anti-bot systems, so you might need to consider a functional and easy solution like ZenRows. Sign up now to get your 1,000 free API credits.
