Playwright is a powerful browser automation library that needs only a few lines of code to drive a headless browser quickly. Its simplicity and extensive capabilities make it popular among web scrapers, but it can easily get blocked while scraping.

In this tutorial, we'll discuss what makes a Playwright scraper detectable and how you can avoid Playwright bot detection.

Let's dive in!

Can Playwright Be Detected?

Yes, websites with anti-bot measures can easily detect Playwright because it exhibits behaviors unique to automated browsers.

It also exposes command line flags and built-in properties, like navigator.webdriver, that scream "I am a bot". They're enabled by default to improve the automation experience, but they work against web scrapers.
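To make this concrete, here is a minimal sketch of the kind of check a page script could run. It is an illustrative heuristic only, not the logic of any real anti-bot product; the function name `automationSignals` and the sample objects are assumptions, and the "HeadlessChrome" token is something headless Chromium has traditionally put in its default User Agent.

```javascript
// Hypothetical detection heuristic. `nav` is any object shaped like the
// browser's `navigator` (only `webdriver` and `userAgent` are read).
function automationSignals(nav) {
  const signals = [];
  // Automated browsers report navigator.webdriver === true by default.
  if (nav.webdriver === true) signals.push('webdriver');
  // Headless Chromium has traditionally advertised itself in the User Agent.
  if (/HeadlessChrome/.test(nav.userAgent || '')) signals.push('headless-ua');
  return signals;
}

// Sample inputs (made up for illustration).
const botLike = {
  webdriver: true,
  userAgent: 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/110.0.0.0 Safari/537.36',
};
const humanLike = {
  webdriver: false,
  userAgent: 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
};

console.log(automationSignals(botLike));   // [ 'webdriver', 'headless-ua' ]
console.log(automationSignals(humanLike)); // []
```

Real anti-bot systems combine many more signals, but both of these are cheap to read, which is why they are worth masking first.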

However, the good news is that you can avoid Playwright detection by masking your requests and emulating human behavior. Let's see how.

Best Measures to Avoid Bot Detection with Playwright

Before we discuss the best measures to ensure your Playwright scraper runs smoothly, you'll need to tick all the following boxes:

Prerequisites

Here's what you need to follow along with this tutorial:

- Ensure you have Node.js installed.

- Install Playwright using the following command.

npm install playwright

- To install browsers, use the following command:

npx playwright install

- Add

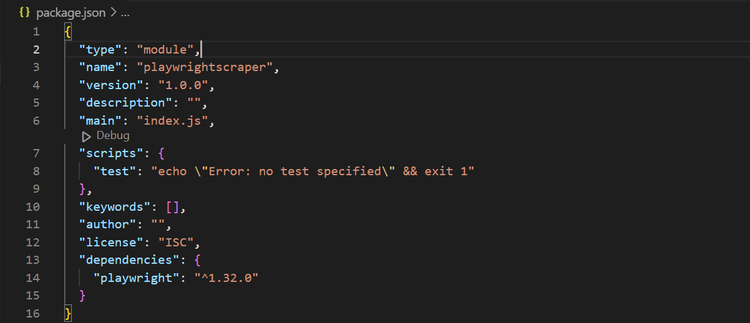

"type" : "module"in yourpackage.jsonfile to enable modern JavaScript syntax (default is TypeScript). Your file should look like this:

Playwright's installation comes with browser binaries, so it might take some time.

2. Randomize the User-Agent

Every HTTP request includes headers that provide additional context and metadata about the request and the client making it. The User Agent (UA) header is especially important in web scraping: websites can easily detect and block or limit your access if your requests carry Playwright's default UA.

This is what a real UA looks like: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36

While simply changing your User Agent string to that of an actual browser may work in some cases, it's seldom enough. You'll ultimately get found out by sophisticated anti-bot measures, such as request pattern or frequency analysis.

Therefore, randomizing your UAs and other headers will make your requests more natural, making it harder for websites to detect your scraper. Here's how to randomize User Agents using Playwright:

First, import chromium from Playwright:

```javascript
import { chromium } from 'playwright';
```

Then, define your list of User Agent strings in an array.

```javascript
const userAgentStrings = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.2227.0 Safari/537.36',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.3497.92 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
];
```

Ensure your UAs are compatible with the other headers and the browser you're emulating. For example, if your User Agent is that of Google Chrome v83 on Windows, and the other headers are that of a Mac, your target websites may detect this discrepancy and block your requests. Check out our guide on how to set up a new User Agent to learn more.

Next, launch a Chromium browser instance and create a new context with a randomly selected User Agent.

```javascript
(async () => {
  // Launch Chromium browser instance
  const browser = await chromium.launch();

  // Create a new browser context with a randomly selected user agent string
  const context = await browser.newContext({
    userAgent: userAgentStrings[Math.floor(Math.random() * userAgentStrings.length)],
  });
```

In the code above, the Math.random() method randomly generates a number between zero and one. We then multiply it with our array's length and apply the Math.floor() method to select a random array index.

Lastly, create a new page in the browser context and navigate to your target URL. For this example, we'll use <http://httpbin.org/headers> to see our request headers.

```javascript
  // Create a new page in the browser context and navigate to target URL
  const page = await context.newPage();
  await page.goto('http://httpbin.org/headers');
```

Finally, print the page's text to see the headers httpbin received, then close the browser:

```javascript
  console.log(await page.textContent('*'));

  // Close the browser when done
  await browser.close();
})();
```

Putting it all together, we have the following code:

```javascript
import { chromium } from 'playwright';

const userAgentStrings = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.2227.0 Safari/537.36',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.3497.92 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
];

(async () => {
  // Launch Chromium browser instance
  const browser = await chromium.launch();

  // Create a new browser context with a randomly selected user agent string
  const context = await browser.newContext({
    userAgent: userAgentStrings[Math.floor(Math.random() * userAgentStrings.length)],
  });

  // Create a new page in the browser context and navigate to target URL
  const page = await context.newPage();
  await page.goto('http://httpbin.org/headers');
  console.log(await page.textContent('*'));

  // Close the browser when done
  await browser.close();
})();
```

You'll get the following result:

```json
{
  "headers": {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    "Accept-Encoding": "gzip, deflate, br",
    "Host": "httpbin.org",
    "Sec-Fetch-Dest": "document",
    "Sec-Fetch-Mode": "navigate",
    "Sec-Fetch-Site": "none",
    "Sec-Fetch-User": "?1",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36",
    "X-Amzn-Trace-Id": "Root=1-64238f2a-0f818f50596439c7688bfba6"
  }
}
```

You've succeeded if you get a different User Agent string each time you run this code.

Awesome, right?

You can also use a for loop to make multiple requests, and your complete code should look like this:

```javascript
import { chromium } from 'playwright';

const userAgentStrings = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.2227.0 Safari/537.36',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.2228.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.3497.92 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
];

(async () => {
  const browser = await chromium.launch();

  // Make one request per User Agent, each in its own browser context
  for (const userAgent of userAgentStrings) {
    const context = await browser.newContext({ userAgent });
    const page = await context.newPage();
    await page.goto('https://httpbin.org/headers');
    console.log(await page.textContent('*'));
    await context.close();
  }

  await browser.close();
})();
```
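The earlier advice about keeping the User Agent consistent with the other headers can be sketched by rotating whole profiles instead of bare UA strings. This is only an illustration under assumptions: the `profiles` array, `pickProfile`, and `openWithProfile` are hypothetical names, the header values are examples rather than a complete fingerprint, and whether you need to set a platform hint yourself may depend on your Playwright and browser versions. `userAgent` and `extraHTTPHeaders` are existing `browser.newContext()` options.

```javascript
// Each profile keeps the User Agent and the platform hint in agreement.
// Header values are illustrative assumptions, not a complete fingerprint.
const profiles = [
  {
    userAgent: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
    extraHTTPHeaders: { 'Sec-CH-UA-Platform': '"Windows"', 'Accept-Language': 'en-US,en;q=0.9' },
  },
  {
    userAgent: 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
    extraHTTPHeaders: { 'Sec-CH-UA-Platform': '"macOS"', 'Accept-Language': 'en-US,en;q=0.9' },
  },
];

// Pick one profile at random; `random` is injectable so the choice can be tested.
function pickProfile(list = profiles, random = Math.random) {
  return list[Math.floor(random() * list.length)];
}

// `browser` is a Playwright Browser, e.g. the result of chromium.launch().
async function openWithProfile(browser, url) {
  // The profile's keys map directly onto newContext() options.
  const context = await browser.newContext(pickProfile());
  const page = await context.newPage();
  await page.goto(url);
  return page;
}
```

Inside the async function of the script above, you could call `await openWithProfile(browser, 'https://httpbin.org/headers')` in place of the manual `newContext()` and `goto()` steps.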

3. Bypass CAPTCHAs

CAPTCHAs are security measures designed to prevent bots from gaining access or interacting with website data. These challenges are mostly prompted by bot-like behaviors, such as unusual spikes in traffic, suspicious request patterns, etc.

There are two main approaches to bypassing CAPTCHAs: avoiding them altogether or solving them when they appear. The first is the better path because it saves time and is more reliable, more scalable, and cheaper.

The key to avoiding CAPTCHAs is imitating human behavior and circumventing its triggers. With its ability to automate human browser actions, Playwright provides ways to achieve this. Check out our guide on the best CAPTCHA proxies to learn more.
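As a rough sketch of what imitating human behavior can mean in practice, the helper below adds randomized pauses, a gradual mouse movement, and a scroll after loading a page. The function names, pause lengths, and coordinates are arbitrary assumptions, and this alone won't guarantee you never see a CAPTCHA. It relies on Playwright's existing `page.waitForTimeout()`, `page.mouse.move()`, and `page.mouse.wheel()` methods.

```javascript
// Random integer in [min, max]; `random` is injectable to keep the helper testable.
function randomBetween(min, max, random = Math.random) {
  return Math.floor(random() * (max - min + 1)) + min;
}

// `page` is a Playwright Page. All timings and coordinates are illustrative.
async function browseLikeHuman(page, url) {
  await page.goto(url);
  // Pause as a reader would before interacting.
  await page.waitForTimeout(randomBetween(1000, 3000));
  // Move the pointer in several small steps instead of one instant jump.
  await page.mouse.move(randomBetween(100, 600), randomBetween(100, 400), { steps: 20 });
  // Scroll down by a random amount.
  await page.mouse.wheel(0, randomBetween(300, 900));
  await page.waitForTimeout(randomBetween(500, 1500));
}
```

Varying the pauses on every run also avoids the perfectly regular request timing that frequency analysis looks for.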

4. Disable the Automation Indicator WebDriver Flags

As mentioned earlier, Playwright's properties flag it as an automated browser. Anti-bot measures can easily read these properties and tell the web server that a bot controls the browser. One of those properties is navigator.webdriver.

The W3C WebDriver specification defines navigator.webdriver so that pages can tell when a browser is under automated control, and automated browsers set it to true. That's useful for site owners but problematic for web scrapers aiming to avoid bot detection.

Fortunately, Playwright offers a way to disable webdriver flags and increase your chances of avoiding detection. With its built-in addInitScript() function, you can set the navigator.webdriver property to undefined.

Use the following code as the init script to do that:

```javascript
Object.defineProperty(navigator, 'webdriver', {
  get: () => undefined,
});
```

Then, add it to our original Playwright web scraper.

```javascript
// Import Playwright
import { chromium } from 'playwright';

(async () => {
  // Launch Chromium browser instance
  const browser = await chromium.launch();

  // Create a new context
  const context = await browser.newContext();

  // Add the init script
  await context.addInitScript("Object.defineProperty(navigator, 'webdriver', {get: () => undefined})");

  // Open a new page using the context
  const page = await context.newPage();

  // Navigate to the target website
  await page.goto('https://www.example.com');

  // Wait 10 seconds for the page to load
  await page.waitForTimeout(10000);

  // Take a screenshot and save it to a file
  await page.screenshot({ path: 'example.png' });

  // Close the browser
  await browser.close();
})();
```
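To check that the patch took effect, you can read the property back from inside the page. The following is a minimal sketch, assuming `browser` is the result of `chromium.launch()`; the function name `checkWebdriverFlag` is hypothetical. It passes the init script as a function, which `addInitScript()` accepts as well as a string.

```javascript
// `browser` is a Playwright Browser, e.g. the result of chromium.launch().
async function checkWebdriverFlag(browser, url) {
  const context = await browser.newContext();
  // Runs in every page of this context before the page's own scripts.
  await context.addInitScript(() => {
    Object.defineProperty(navigator, 'webdriver', { get: () => undefined });
  });
  const page = await context.newPage();
  await page.goto(url);
  // Evaluated inside the page, so it reads the patched property.
  const flag = await page.evaluate(() => navigator.webdriver);
  await context.close();
  return flag;
}
```

If the init script was applied, the returned value should be `undefined` rather than `true`.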

5. Use Cookies

Cookies are small pieces of data that websites send to clients to keep track of user activity. The client stores them and sends them back to the web server with each request. That way, websites can maintain session state across requests.

Since avoiding detection is about emulating real user behavior, you can include cookies in your requests to look like a returning user. Web servers expect that, so if done correctly, you can fly under the radar to retrieve the necessary data.

You can use the context.addCookies() method to set cookies in a Playwright browser context, which makes them available to every page opened in that context. Playwright has no page-level cookie setter, so to limit a cookie to specific pages, scope it with its url field or its domain and path fields.
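Here is a small sketch of setting cookies before the first navigation. The helper names `cookieFor` and `openAsReturningVisitor` are hypothetical, as are any cookie names and values you pass in; real ones would come from an earlier session on your target site. `context.addCookies()` and `context.cookies()` are existing Playwright methods.

```javascript
// Builds a cookie object in the shape context.addCookies() expects.
// Playwright requires either `url` or both `domain` and `path` on each cookie.
function cookieFor(url, name, value) {
  return { name, value, url };
}

// `browser` is a Playwright Browser, e.g. the result of chromium.launch().
async function openAsReturningVisitor(browser, url, cookies) {
  const context = await browser.newContext();
  // Cookies added here are sent with matching requests from every page in the context.
  await context.addCookies(cookies);
  const page = await context.newPage();
  await page.goto(url);
  // Read back the cookies that apply to this URL, to confirm they were stored.
  const stored = await context.cookies(url);
  return { page, stored };
}
```

For example, `openAsReturningVisitor(browser, 'https://www.example.com', [cookieFor('https://www.example.com', 'session_id', 'abc123')])` would load the page with a made-up `session_id` cookie attached.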

6. Get a Fortified Playwright

Another method for avoiding detection is to fortify Playwright with playwright-extra, a popular library that enables additional plugins. With it, you can extend your web scraper's functionality for tasks requiring more flexibility in customizing requests.

There are multiple add-ons, but the stealth plugin is the most important one. It aims to prevent websites from detecting that the browser is automated by modifying the information the browser exposes.

To use it with Playwright, install playwright-extra and puppeteer-extra-plugin-stealth, load the stealth plugin and use Playwright as usual.
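Those steps might look roughly like the sketch below. It assumes both packages were installed with `npm install playwright-extra puppeteer-extra-plugin-stealth`; the function name `launchWithStealth` is hypothetical, and the two dependencies are passed in as arguments (with the assumed imports shown as comments) so the function stays independent of how you load them.

```javascript
// Assumed imports in your script:
//   import { chromium } from 'playwright-extra';
//   import stealth from 'puppeteer-extra-plugin-stealth';

// `chromium` is playwright-extra's chromium object; `stealth` is the plugin factory.
async function launchWithStealth(chromium, stealth, url) {
  // Register the stealth plugin before launching the browser.
  chromium.use(stealth());
  // From here on, use Playwright as usual.
  const browser = await chromium.launch();
  const page = await browser.newPage();
  await page.goto(url);
  return { browser, page };
}
```

After calling it, remember to `await browser.close()` once you have the data you need.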

Yet, while that can help you avoid detection, you'll need to take a different approach against more advanced anti-bot measures.

That raises the question: what is the ultimate solution? Let's see!

Mask Requests with ZenRows

Anti-bot solutions like Akamai are continuously and quickly evolving, so you might still get blocked regardless of the techniques used to avoid Playwright bot detection.

Fortunately, ZenRows was specifically designed to avoid even the most complex anti-bot measures and has everything you might need for that purpose: premium rotating proxies, rotating User-Agents, JavaScript rendering, and more. You can easily retrieve the needed website data with a single API call.

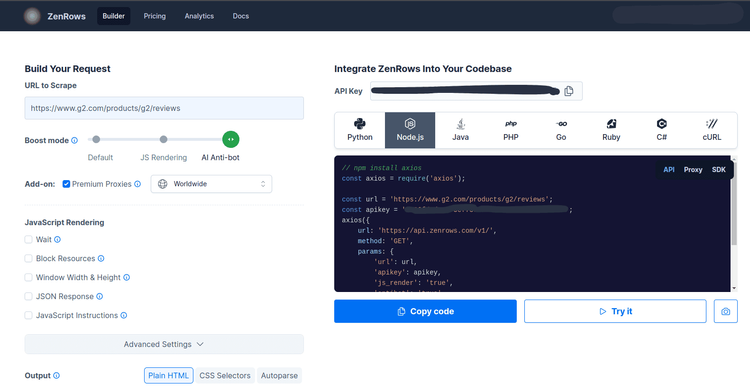

Below, see ZenRows in action against a G2 review page, a website using advanced anti-bot protection.

To follow along in this tutorial, sign up to get your free API key. You'll find it at the top of the page right after signing up.

Then, install Axios.

```bash
npm install axios
```

You only need to import Axios and call the ZenRows API using the necessary parameters to access your target URL. To avoid detection, set antibot=true and premium_proxy=true (you might also want to set js_render=true). So, your complete code should look like this:

```javascript
// Import Axios (ES module syntax, matching "type": "module" in package.json)
import axios from "axios";

// Call the ZenRows API with the target URL and parameters
axios({
  url: "https://api.zenrows.com/v1/?apikey=YOUR_API_KEY&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fg2%2Freviews&antibot=true&premium_proxy=true",
  method: "GET",
})
  .then((response) => console.log(response.data))
  .catch((error) => console.log(error));
```

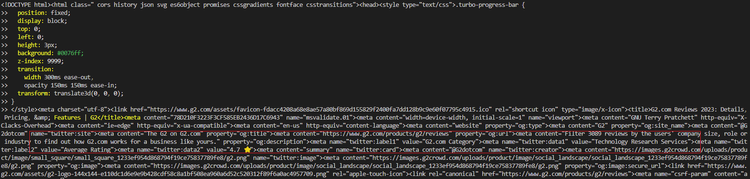

You should have the following result:

Congrats, you've bypassed advanced anti-bot detection!
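The request URL in the example above has its target hand-encoded. If you'd rather not percent-encode it yourself, a small helper can build the same URL with the built-in `URLSearchParams`. This is a sketch only: `buildZenRowsUrl` is a hypothetical helper, not part of any ZenRows SDK, and it uses just the endpoint and parameters shown in this tutorial.

```javascript
// Builds the ZenRows request URL, percent-encoding the target URL automatically.
function buildZenRowsUrl(apiKey, targetUrl, options = {}) {
  const params = new URLSearchParams({ apikey: apiKey, url: targetUrl });
  // Extra flags such as antibot, premium_proxy, or js_render.
  for (const [key, value] of Object.entries(options)) {
    params.set(key, String(value));
  }
  return `https://api.zenrows.com/v1/?${params}`;
}

console.log(
  buildZenRowsUrl('YOUR_API_KEY', 'https://www.g2.com/products/g2/reviews', {
    antibot: true,
    premium_proxy: true,
  })
);
// https://api.zenrows.com/v1/?apikey=YOUR_API_KEY&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fg2%2Freviews&antibot=true&premium_proxy=true
```

You can pass the returned string as the `url` option of the Axios call.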

Conclusion

Avoiding Playwright bot detection can be challenging because its properties and command line flags make it easy for websites to detect and block it. Moreover, anti-bot measures are evolving fast, so any technique to mask automation properties might still fall short.

However, ZenRows, a constantly evolving web scraping solution, lets you bypass even the most advanced anti-bot measures. You can sign up to get your free API key and try it.

You might also be interested in checking out our guide on how to avoid detection in Python.
