As web scraping has grown in popularity, many websites have begun adopting anti-bot measures. These involve sophisticated technologies that prevent automated software programs from accessing their data.

When a website detects your web scraper, it can limit the number of requests the scraper can make or block it entirely. This article explores the most popular ways an anti-bot system can detect you and shows how to bypass each of them.

Let's dive in!

What Is an Anti-Bot

An anti-bot is a technology that detects and prevents bots from accessing a website. A bot is a program designed to perform tasks on the web automatically. Even though the term bot has a negative connotation, not all bots are bad. For example, Google's crawlers are bots, too!

At the same time, at least 27.7% of global web traffic is from bad bots. They perform malicious actions like stealing personal data, spamming, and launching DDoS attacks. That's why websites try to keep them out to protect their data and improve the user experience, and why they may end up blocking your web scraper as well.

An anti-bot filter relies on several methods to distinguish between human users and bots, including CAPTCHAs, fingerprinting, and HTTP header validation.

Let's learn more about them!

Practices to Get Around Anti-Bots

Bypassing an anti-bot system may not be easy, but some practices can help you. Here's the list of techniques to consider:

- Respect robots.txt: The robots.txt file is a standard that sites use to communicate which pages or files bots can or can't access. By respecting the defined guidelines, web scrapers will avoid triggering anti-bot measures. Learn more on how to read robots.txt files for web scraping.

- Limit the requests from the same IP: Web scrapers often send multiple requests to a site in a short time. This behavior can trigger anti-bot systems, so try limiting the number of requests sent from the same IP address. Dig into how to bypass the rate limit when performing web scraping.

- Customize your User-Agent: The User-Agent HTTP header is a string that identifies the browser and OS the request comes from. By customizing this header, the requests appear to be from a regular user. Take a look at the top list of User Agents for web scraping.

- Use a headless browser: A headless browser is a controllable web browser without a GUI. Using such a tool can help you avoid getting detected as a bot by making your scraper behave like a human user, e.g., by scrolling. Find out more about what a headless browser is and the best ones for web scraping.

- Make the process easier with a web scraping API: A web scraping API allows users to scrape a website through simple API calls while avoiding anti-bot systems. That makes web scraping easy, efficient, and fast. To explore what the most powerful web scraping API on the market has to offer, try ZenRows for free now.
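As a rough illustration of the first two practices, here's a minimal Python sketch that checks robots.txt rules and spaces out requests. It relies only on the standard library. The `Throttle` class, the `my-scraper` agent name, the sample robots.txt content, and the `example.com` URLs are all hypothetical and only there for the demo.

```python
import time
import urllib.robotparser


def build_robot_rules(robots_txt: str) -> urllib.robotparser.RobotFileParser:
    """Parse the text of a robots.txt file into a rule checker."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class Throttle:
    """Enforce a minimum delay between consecutive requests (hypothetical helper)."""

    def __init__(self, min_delay_seconds: float) -> None:
        self.min_delay_seconds = min_delay_seconds
        self._last_request = None  # monotonic timestamp of the previous request

    def wait(self) -> float:
        """Sleep if the previous request was too recent; return the time slept."""
        slept = 0.0
        if self._last_request is not None:
            elapsed = time.monotonic() - self._last_request
            remaining = self.min_delay_seconds - elapsed
            if remaining > 0:
                time.sleep(remaining)
                slept = remaining
        self._last_request = time.monotonic()
        return slept


# Hypothetical robots.txt content, used here instead of a live download.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

rules = build_robot_rules(SAMPLE_ROBOTS_TXT)
throttle = Throttle(min_delay_seconds=0.2)

for url in ["https://example.com/products", "https://example.com/private/data"]:
    if not rules.can_fetch("my-scraper", url):
        print("skipping (disallowed by robots.txt):", url)
        continue
    throttle.wait()  # keep requests from the same IP spaced out
    print("allowed to fetch:", url)
```

In a real scraper, you'd download the site's actual robots.txt and pick a delay that suits the target. The 0.2 seconds above is just a placeholder.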

Do you want to build a reliable web scraper that never gets banned? Check out our guides on web scraping best practices and how to avoid getting blocked!

Anti-Bot Mechanisms

The best way to win a war is to know your enemy! Here, we'll see some popular anti-bot mechanisms used against web scraping and how to bypass them.

Time to become an anti-bot ninja!

Header Validation

Header validation is a common anti-bot protection technique. It analyzes the headers of incoming HTTP requests to look for anomalies and suspicious patterns. If the system detects anything irregular, it marks the requests as coming from a bot and blocks them.

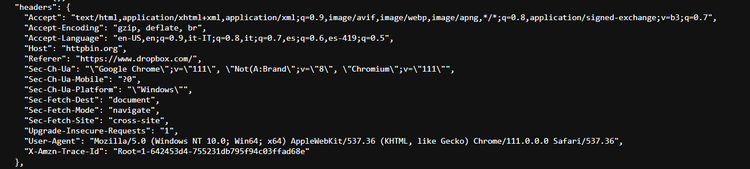

These are what the default HTTP headers sent by Google Chrome on Windows might look like:

As shown, all browser requests are sent with a lot of data in the headers. If some of these fields are missing, don't have the right values or have an incorrect order, the anti-bot system will block the request.

To bypass header validation:

- Customize the headers sent by the web scraper with actual values.

- Sniff the requests made by your browser to learn how to populate HTTP headers.

- Rotate their values to make each request run by the spider appear as coming from a different user.
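The tips above can be sketched in Python as follows. This is only an illustration: the `USER_AGENTS` values are sample strings in the usual Chrome format, and the `browser_like_headers` and `build_request` helpers are hypothetical. In practice, you'd copy the full header set from the requests your own browser makes.

```python
import itertools
import urllib.request

# Sample User-Agent strings (assumed values; copy real ones from your browser).
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
]
_user_agent_cycle = itertools.cycle(USER_AGENTS)


def browser_like_headers() -> dict:
    """Return a header set resembling a browser's, rotating the User-Agent."""
    return {
        "User-Agent": next(_user_agent_cycle),
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
    }


def build_request(url: str) -> urllib.request.Request:
    """Build a request object carrying the customized headers."""
    return urllib.request.Request(url, headers=browser_like_headers())


request = build_request("https://example.com/")
print(request.header_items())
```

Keep in mind that `urllib.request` normalizes the capitalization of header names, so a client that gives you more control over names and ordering may be a better fit when the target checks those details.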

Learn more about HTTP headers in web scraping in our guide.

Location-Based Blocking

Location-based blocking involves banning requests from certain geographic locations and is used by many websites to make their content available only in some countries. In a similar way, governments use this approach to ban specific sites in their country.

The geographical block is usually implemented at the DNS or ISP level.

This is what you usually see when you need an IP in a specific country to web scrape a page:

These systems analyze the IP address to detect the user's geographic location and decide whether to block them. Thus, you need an IP from one of the allowed countries to scrape location-blocked targets.

To circumvent location-based blocking measures, you need a proxy server, and premium proxies generally allow you to select the country the server is located in. This way, requests made by the web scraper will appear from the right location.
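Here's a minimal sketch of that idea in Python, assuming you already have proxy endpoints for the countries you need. The `PROXIES_BY_COUNTRY` URLs and credentials are placeholders, and the helper names are hypothetical.

```python
import urllib.request

# Hypothetical proxy endpoints keyed by country code (placeholders, not real servers).
PROXIES_BY_COUNTRY = {
    "us": "http://user:password@us.proxy.example.com:8080",
    "de": "http://user:password@de.proxy.example.com:8080",
}


def proxy_settings(country: str) -> dict:
    """Return the proxy mapping for a country, or fail loudly if none is configured."""
    try:
        proxy_url = PROXIES_BY_COUNTRY[country.lower()]
    except KeyError:
        raise ValueError(f"no proxy configured for country {country!r}") from None
    # Route both plain and TLS traffic through the same proxy.
    return {"http": proxy_url, "https": proxy_url}


def opener_for_country(country: str) -> urllib.request.OpenerDirector:
    """Build an opener whose requests go through the proxy for that country."""
    handler = urllib.request.ProxyHandler(proxy_settings(country))
    return urllib.request.build_opener(handler)


opener = opener_for_country("de")
# opener.open("https://example.com/") would now be sent through the German proxy.
```

No request is actually sent here, since the endpoints above don't exist. With a real provider, you'd replace them with the URLs from your proxy dashboard.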

CAPTCHA Challenges

A CAPTCHA is a challenge-response test that websites use to detect whether the user is human. Humans easily solve this challenge, but it's hard for bots, so anti-bot solutions use them to prevent scrapers from accessing a site or performing specific actions.

To solve a CAPTCHA, the user must perform a specific interaction on a page, including typing the number shown in a distorted image or selecting the set of images.

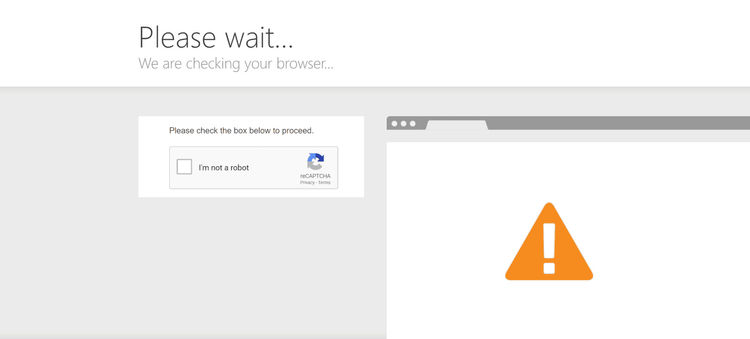

This is what a CAPTCHA wall used by a website to block bots looks like:

A professional data scraper relies on a CAPTCHA proxy solver to bypass this block. Take a look at our list to find out the best CAPTCHA proxies for you.

WAFs: The Great Threat

A WAF (Web Application Firewall) is an application firewall that monitors and filters unwanted HTTP traffic by comparing it against a set of rules. If a request matches a rule, it gets blocked.

This technology can easily detect bots, representing a major threat to web scraping. The most popular WAFs are Cloudflare, DataDome, and Akamai.
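To get a feel for how rule matching works, here's a toy Python sketch. It isn't how Cloudflare, DataDome, or Akamai are implemented. The `Rule` class and the two example rules are invented for illustration, and real WAFs combine far more signals.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A toy blocking rule: block when the header value matches the pattern."""
    name: str
    header: str
    pattern: str


# Invented example rules; real rule sets are much larger and more subtle.
RULES = [
    Rule("missing-user-agent", "User-Agent", r"^$"),
    Rule("known-http-library", "User-Agent", r"(?i)python-urllib|python-requests|curl"),
]


def first_matching_rule(headers: dict, rules=RULES):
    """Return the first rule the request headers match, or None if it passes."""
    normalized = {name.lower(): value for name, value in headers.items()}
    for rule in rules:
        value = normalized.get(rule.header.lower(), "")
        if re.search(rule.pattern, value):
            return rule
    return None


for headers in [{}, {"User-Agent": "python-requests/2.31"}, {"User-Agent": "Mozilla/5.0"}]:
    rule = first_matching_rule(headers)
    verdict = f"blocked by {rule.name}" if rule else "allowed"
    print(headers, "->", verdict)
```

Even this tiny rule set shows why default HTTP client settings get scrapers blocked so quickly.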

Even though getting around them isn't easy, we have a solution for you. Check out our guides:

- How to Bypass Cloudflare

- How to Bypass DataDome

- How to Bypass Akamai

- How to Bypass PerimeterX

- How to Bypass Imperva

Browser Fingerprinting

Browser fingerprinting involves identifying web clients by collecting data from the user's device. Based on browser plugins, installed fonts, screen resolution, and other information, it can tell whether the request comes from a real user or a scraper.

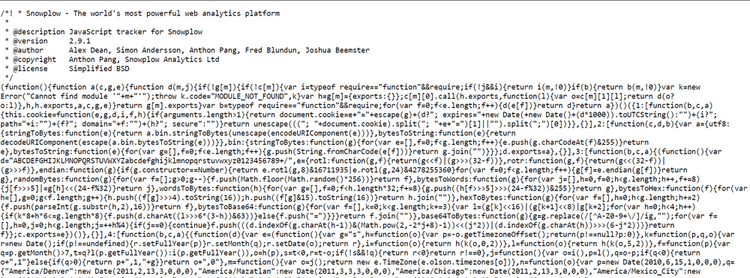

Most approaches to implementing browser fingerprinting rely on client-side technologies to collect user data. This is an example of how to perform it in JavaScript:

The script above collects data points about the user to fingerprint it.

In general, this anti-bot technology expects requests to come from a browser. To get around it when web scraping, you need a headless browser. Otherwise, you'll get identified as a bot.
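Once the data points are collected, they're typically reduced to a single identifier. Here's a simplified Python sketch of that step, assuming the attributes arrive as a dictionary. The attribute names and values are made up, and real systems use many more signals and fuzzier matching.

```python
import hashlib
import json


def fingerprint(attributes: dict) -> str:
    """Hash a set of client attributes into a stable identifier."""
    # Sorting the keys makes the result independent of collection order.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Made-up attribute sets for illustration.
regular_browser = {
    "plugins": ["PDF Viewer"],
    "fonts": ["Arial", "Calibri", "Verdana"],
    "screen": "1920x1080",
}
bare_http_client = {"plugins": [], "fonts": [], "screen": ""}

print(fingerprint(regular_browser))
print(fingerprint(bare_http_client))
```

A client that reports no plugins, fonts, or screen size stands out immediately, which is why a plain HTTP library struggles against this technique.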

Follow our step-by-step guide to learn how to bypass browser fingerprinting.

TLS Fingerprinting

TLS fingerprinting involves analyzing the parameters exchanged during a TLS handshake. If these don't match the expected ones, the anti-bot system marks the request as coming from a bot and blocks it.

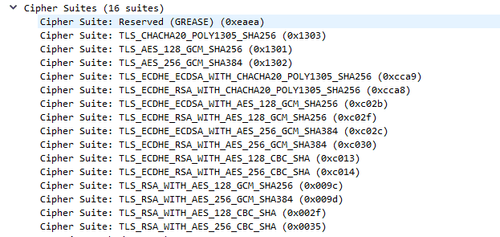

For example, here are some of the parameters exchanged by Chrome when establishing a TLS connection:

TLS fingerprinting allows a website to detect automated requests that don't originate from a legitimate browser. That represents a challenge for web scraping, but there's a solution!
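One publicly documented way to turn handshake parameters into a fingerprint is JA3, which joins five fields from the client's hello message and hashes the result with MD5. The Python sketch below follows that format. This article doesn't say which method a given anti-bot uses, so treat JA3 as an example, and note that the numeric values are placeholders rather than Chrome's real parameters.

```python
import hashlib


def ja3_string(version, ciphers, extensions, curves, point_formats) -> str:
    """Build a JA3-style string: fields joined by commas, values by dashes."""
    fields = [[version], ciphers, extensions, curves, point_formats]
    return ",".join("-".join(str(value) for value in field) for field in fields)


def ja3_hash(version, ciphers, extensions, curves, point_formats) -> str:
    """Return the MD5 hex digest of the JA3-style string."""
    text = ja3_string(version, ciphers, extensions, curves, point_formats)
    return hashlib.md5(text.encode("ascii")).hexdigest()


# Placeholder handshake values, not taken from a real browser.
browser_like = dict(version=771, ciphers=[4865, 4866], extensions=[0, 10, 11],
                    curves=[29, 23], point_formats=[0])
script_like = dict(version=771, ciphers=[4866, 4865], extensions=[0, 11, 10],
                   curves=[23], point_formats=[0])

print(ja3_string(**browser_like))
print(ja3_hash(**browser_like))
print(ja3_hash(**script_like))
```

Because the order of ciphers and extensions is part of the string, two clients offering the same options in a different order end up with different fingerprints.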

To dig deeper into the anti-bot world, check out our guide on bypassing bot detection.

Conclusion

In this complete guide, you learned a lot about anti-bot detection. You started from the basics and became an anti-bot master!

Now you know:

- What an anti-bot is.

- Some best practices to get around anti-bot technologies.

- Some of the most popular mechanisms anti-bots rely on.

- How to bypass all these mechanisms.

You can discover more anti-scraping techniques but, no matter how sophisticated your scraper is, some technologies will still be able to block it.

Avoid them all with ZenRows, a web scraping API with premium proxies, built-in IP rotation, headless browser capacity, and advanced anti-bot bypass. It's an easier way to web scrape.

Frequent Questions

What Does Anti-bot Measure Mean?

An anti-bot measure includes detecting, blocking, and preventing bots. Each action relies on different techniques and approaches to identify and ban only automated traffic. As bots become more and more sophisticated, anti-bot measures evolve accordingly.

What Is an Anti-bot Used For?

An anti-bot is used to prevent automated programs known as "bots" from doing malicious actions on a site. That helps ensure only human users can interact with a platform. As a result, it protects against fraud, spam, and other types of unwanted activity. It's essential the anti-bot is effective and doesn't block or bother real users or helpful bots such as Googlebot.

What Is an Anti-bot Checkpoint?

An anti-bot checkpoint is a security measure to verify if the user interacting with a website is a human. That prevents fraudulent or malicious activity and usually involves a challenge only humans can solve. It could be solving a simple math problem, typing in a sequence of letters, or identifying objects in an image.

What Are Anti-bot Methods?

Anti-bot methods are techniques to identify and block automated web programs. The most popular ones include these:

- CAPTCHAs.

- IP blocking.

- Request rate limiting.

- Behavior analysis.

- Fingerprinting.

- WAFs.

How Are Bots Detected?

Bots can be detected by monitoring various aspects, including HTTP traffic and user behavior. Detection systems collect data on both and look for patterns typical of bots, since automated software tends to send requests that are inconsistent with human activity.
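As a simplified example of pattern-based detection, this Python sketch flags clients that send too many requests within a sliding time window. The `RateMonitor` class and its thresholds are hypothetical. Real systems weigh this signal together with many others.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flag clients that exceed a request budget within a sliding window."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client IP -> recent request timestamps

    def record(self, client_ip: str, timestamp: float) -> bool:
        """Record a request and return True if the client looks automated."""
        hits = self._hits[client_ip]
        hits.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while hits and timestamp - hits[0] > self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests


monitor = RateMonitor(max_requests=3, window_seconds=1.0)
for moment in [0.0, 0.1, 0.2, 0.3]:
    print(moment, monitor.record("203.0.113.7", moment))
```

A human rarely loads four pages in 0.3 seconds, so the last request in the loop gets flagged. This is also why spacing out your requests helps.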
