Incapsula is among the most popular anti-scraping protections on the internet, meaning that the ability to bypass it has become necessary for successful data extraction projects.

In this guide, you'll learn how to bypass Incapsula (now known as Imperva) with three different methods: using a web scraping API, using fortified headless browsers, and scraping Google's cache.

But first, let's learn more about the system itself.

What Is Incapsula (Imperva Now)?

Incapsula is a Web Application Firewall (WAF) that uses advanced security measures to protect websites against attacks such as DDoS by blocking traffic that doesn't look human. Unfortunately, that includes all sorts of bots, regardless of the legitimacy of their intentions.

To bypass Incapsula for web scraping, we first need to understand how it works.

How Incapsula Works

The firewall acts as an intermediary between the client and the server: when a user tries to access an Incapsula-protected website, its WAF receives the request, analyzes it, and then makes another one to the source server to retrieve content.

However, scrapers seldom make it beyond the analysis stage due to three kinds of detection approaches: signature, behavior and client fingerprinting.

Keep reading to learn more.

Signature-Based Detection Techniques

Signature-based detection methods rely on predefined patterns, or signatures, to distinguish bots from humans. Here are some examples:

- HTTP request headers: Every request's HTTP headers contain information that helps determine whether its sender is a human. For instance, if you use a command-line tool (e.g., cURL) or a headless browser with its default headers, you'll be easily identified as a bot.

- IP reputation: Incapsula collects IP data from website visits and compares it to a database of known malicious IPs. If your address has a history of hostile attacks or is associated with botnets, it'll have a poor reputation, and your requests will be denied.
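To make the header check more concrete, here's a minimal sketch of a signature-style test. The `looks_automated` function and its token list are hypothetical and only illustrate the idea; Incapsula's real rules aren't public and are certainly more extensive. The example uses only Python's standard library and inspects the headers `urllib` sends by default.

```python
import urllib.request

# Hypothetical signature list; real WAF rules are not public.
AUTOMATION_TOKENS = ("python", "curl", "wget", "headlesschrome")


def looks_automated(headers: dict) -> bool:
    """Return True if the headers match a naive bot signature."""
    # Header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value for name, value in headers.items()}
    user_agent = normalized.get("user-agent", "").lower()
    if not user_agent or any(token in user_agent for token in AUTOMATION_TOKENS):
        return True
    # Browsers send Accept-Language by default; many HTTP clients don't.
    return "accept-language" not in normalized


# Headers urllib adds when you set none, e.g. {'User-agent': 'Python-urllib/3.12'}.
default_headers = dict(urllib.request.build_opener().addheaders)

# Illustrative browser-like headers (the exact values are assumptions).
browser_headers = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept-Language": "en-US,en;q=0.9",
}

print(looks_automated(default_headers))   # the default client identifies itself
print(looks_automated(browser_headers))   # browser-like headers pass this naive check
```

Passing this kind of check is only the first hurdle, since headers are just one of several signals evaluated together.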

Behavior-Based Detection Techniques

Behavior-based techniques consist of behavioral analysis checks performed on the server side. Those include:

- Request analysis: The company analyzes traffic data, such as source, rate, and frequency of requests, to identify unnatural user behavior. For instance, your bot will look suspicious and be stopped if you send too many requests at an unusual rate.

- Page navigation analysis: This involves monitoring the sequence of pages visited and the timing and frequency of interactions. This way, Incapsula can identify unusual navigation patterns and block the corresponding request.

- Client interaction analysis: User interactions, like mouse clicks and keyboard inputs, say a lot about the client, so Incapsula obtains this type of data using obfuscated scripts and blocks suspicious requests.
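As an illustration of request analysis, the sketch below flags a client that sends too many requests within a sliding time window. The `RateMonitor` class and its thresholds are hypothetical; they aren't Incapsula's actual logic, only a simple instance of rate-based detection.

```python
from collections import deque


class RateMonitor:
    """Flag a client that exceeds max_requests within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps = deque()

    def is_suspicious(self, timestamp: float) -> bool:
        """Record a request time and report whether the limit is exceeded."""
        self._timestamps.append(timestamp)
        # Drop requests that have fallen out of the sliding window.
        while timestamp - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_requests


# Hypothetical threshold: more than 5 requests in any 10-second window.
burst = RateMonitor(max_requests=5, window_seconds=10)
burst_flags = [burst.is_suspicious(i * 0.5) for i in range(8)]  # every 0.5 s

paced = RateMonitor(max_requests=5, window_seconds=10)
paced_flags = [paced.is_suspicious(i * 3.0) for i in range(8)]  # every 3 s

print(any(burst_flags), any(paced_flags))  # prints: True False
```

The burst client is flagged from its sixth request onward, while the paced client never exceeds the limit, which is why slowing down and spacing out requests matters.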

Client Fingerprinting Techniques

Client fingerprinting uses the client's characteristics to identify bots. Let's elaborate with some examples:

- Device fingerprinting: This technique is used to identify the user's device. The company gathers information about the client's attributes (OS, browser type and version, screen resolution, installed fonts, etc.) and pulls it together to generate a unique user fingerprint.

- JavaScript challenges: Since a client's inability to execute JavaScript is a clear sign of bot traffic, Incapsula uses various challenges to test JS execution.

- CAPTCHAs: These challenges are designed to let humans through and keep bots out. In the past, they led the way in detecting automated software, yet they harmed the user's experience and have been relegated to second place.
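To show how device attributes can be pulled together into one identifier, here's a minimal sketch that hashes a set of attributes into a fingerprint. The `device_fingerprint` function and the attribute values are assumptions for illustration; real fingerprinting scripts collect many more signals in the browser.

```python
import hashlib
import json


def device_fingerprint(attributes: dict) -> str:
    """Hash client attributes into one stable identifier."""
    # Sort keys so the same attributes always serialize the same way.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Illustrative attribute values (assumptions, not real collected data).
visitor = {
    "os": "Windows 10",
    "browser": "Chrome 120",
    "screen": "1920x1080",
    "fonts": ["Arial", "Calibri", "Segoe UI"],
}
# A client with an unusual screen size and no fonts gets a different fingerprint.
headless_visitor = {**visitor, "screen": "800x600", "fonts": []}

print(device_fingerprint(visitor) == device_fingerprint(dict(visitor)))
print(device_fingerprint(visitor) == device_fingerprint(headless_visitor))
```

Because the same attributes always produce the same hash, a scraper that keeps one unusual configuration across many requests is easy to track.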

How to Bypass Incapsula (aka Imperva)

The protection techniques mentioned above work together, and there are even more in place, so what would a potential solution look like? Our best bet in bypassing Incapsula is to make our spider look as much like a human user as possible.
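One small part of looking human is avoiding a fixed request rhythm. The sketch below spaces requests with random pauses; the function names and the 2 to 6 second range are assumptions chosen for illustration, not values known to satisfy Incapsula.

```python
import random
import time


def human_like_delays(count: int, low: float = 2.0, high: float = 6.0, seed=None) -> list:
    """Return `count` random pauses (in seconds) drawn uniformly from [low, high]."""
    rng = random.Random(seed)
    return [rng.uniform(low, high) for _ in range(count)]


def visit_all(urls, fetch, sleep=time.sleep):
    """Call `fetch` on each URL, pausing a random interval after each call."""
    results = []
    for url, delay in zip(urls, human_like_delays(len(urls))):
        results.append(fetch(url))
        sleep(delay)  # irregular gaps instead of a fixed request rhythm
    return results
```

You'd pass your own `fetch` function, for example one that wraps `requests.get` or a browser's `driver.get`. Random delays alone won't defeat fingerprinting or JavaScript challenges, so treat them as one ingredient among several.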

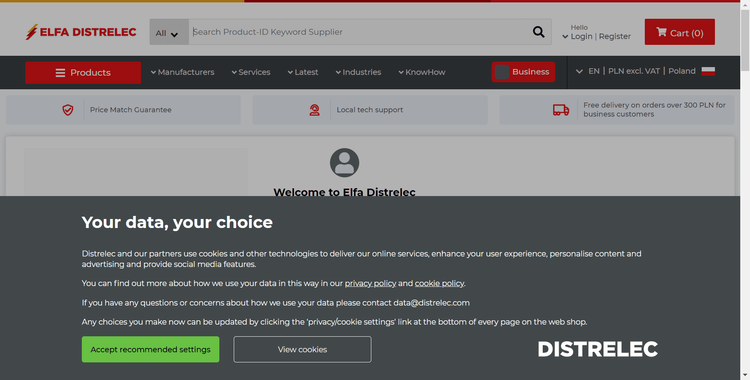

We'll use two websites with different levels of Incapsula anti-bot protection as our test playgrounds: Elfa Distrelec, an e-commerce store, and Vagaro, a software platform that implements higher Incapsula security measures.

Ready to get started? You'll now learn how to get around Incapsula protections.

Method #1: Use a Web Scraping API

Using a web scraping API is an easy approach as it handles the entire technical aspect of emulating natural user behavior for you, including features such as rotating proxies and JavaScript rendering.

We'll use ZenRows, a web scraping API designed to scrape any website, regardless of the anti-bot security level or your project's scale. It also integrates with Python, Node.js, Java, PHP, Golang, Ruby, C#, and any other language that can make HTTP requests.

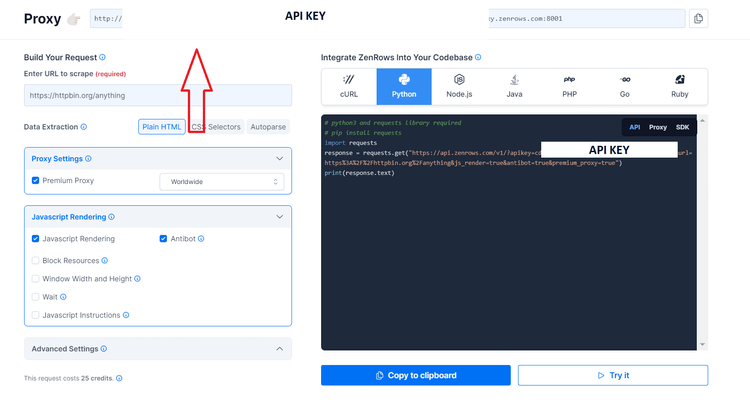

To see it in action, sign up for free to get your API key. Then, you'll get to the Request Builder, where you must input https://www.elfadistrelec.pl as a target URL, as well as activate Premium Proxy, JavaScript Rendering and Antibot.

Now, install Python's Requests library to make HTTP requests.

pip install requests

Your Python web scraping script to bypass Incapsula should look like this:

import requests

url = '<YOUR_TARGET_URL>'

apikey = '<YOUR_ZENROWS_API_KEY>'

params = {

'url': url,

'apikey': apikey,

'js_render': 'true',

'antibot': 'true',

'premium_proxy': 'true',

}

response = requests.get('https://api.zenrows.com/v1/', params=params)

print(response.text)
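If you want to call the API from a tool other than Requests, it helps to see what the `params` dictionary turns into. The sketch below builds roughly the same request URL with the standard library; `build_request_url` is a hypothetical helper, and the parameter names are the ones used in the script above.

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://api.zenrows.com/v1/"  # endpoint used in the script above


def build_request_url(target_url: str, apikey: str) -> str:
    """Return the full API URL that the params dictionary produces."""
    params = {
        "url": target_url,
        "apikey": apikey,
        "js_render": "true",
        "antibot": "true",
        "premium_proxy": "true",
    }
    # urlencode percent-encodes the target URL so its ':' and '/' stay intact.
    return f"{API_ENDPOINT}?{urlencode(params)}"


print(build_request_url("https://www.elfadistrelec.pl", "<YOUR_ZENROWS_API_KEY>"))
```

The key detail is that the target URL travels as a percent-encoded query parameter, so any query string of its own doesn't get mixed up with the API's parameters.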

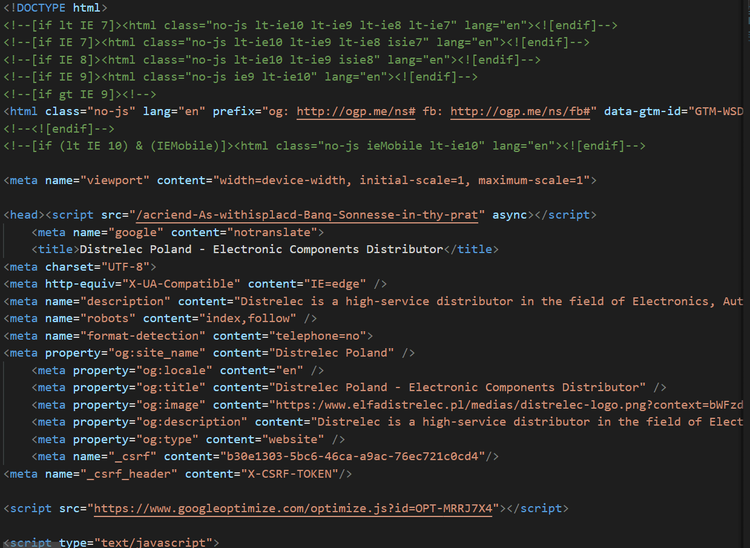

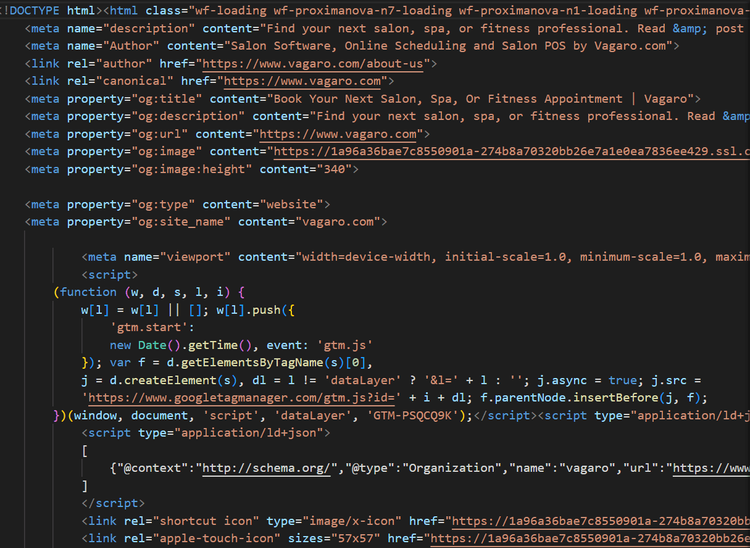

Let's see how it measures up against our first target:

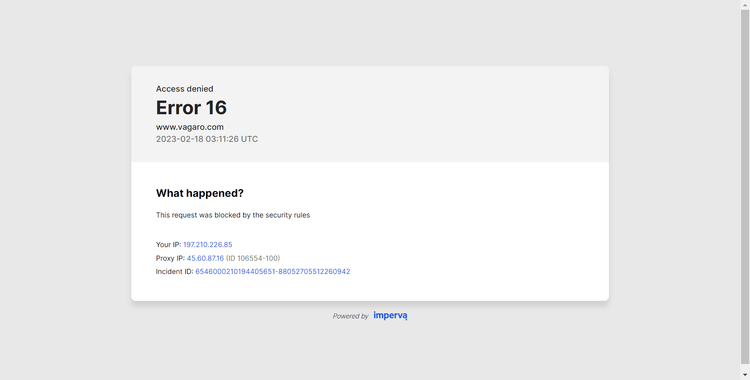

Awesome! And here's the result for Vagaro:

Fantastic, right? It was that easy to bypass Incapsula with ZenRows' help.

Anyway, even though other options can't compare, it's important to see the whole picture and explore different methods.

Method #2: Use Fortified Headless Browsers

While base headless browsers can render JavaScript and emulate user behavior, web security solutions can easily detect them on their own. However, libraries like undetected_chromedriver, a patched ChromeDriver for Selenium, hide detectable characteristics and add more functionality, making bots harder to recognize. This is known as using a fortified version of a headless browser.

Let's see an example:

First, install Selenium and undetected-chromedriver.

pip install selenium undetected-chromedriver

In the past, you had to manually download a ChromeDriver binary matching your browser version, but not anymore: undetected-chromedriver downloads and patches the driver for you, and Selenium 4.6 and above can do the same through Selenium Manager. If you're using an older version of Selenium, update it to access the latest features and capabilities. To check your current version, use the command pip show selenium, and install the most recent one with pip install --upgrade selenium.

Then, import undetected-chromedriver and time libraries.

import undetected_chromedriver as uc

import time

Set arguments for headless mode and maximize the window.

options = uc.ChromeOptions()

options.add_argument("--headless")

options.add_argument("--start-maximized")

The next steps involve creating a Chrome driver instance, navigating to the target URL, waiting for the page to load, and taking a screenshot.

# Create a new Chrome driver instance with the specified options

driver = uc.Chrome(options=options)

# Navigate to the website URL

driver.get("TARGET_URL")

# Wait for the page to load for 10 seconds

time.sleep(10)

# Take a screenshot of the page and save it as "screenshot.png"

driver.save_screenshot("screenshot.png")

# Quit the browser instance

driver.quit()

Here's the complete code:

# Import the undetected_chromedriver and time libraries

import undetected_chromedriver as uc

import time

# Set ChromeOptions for the headless browser and maximize the window

options = uc.ChromeOptions()

options.add_argument("--headless")

options.add_argument("--start-maximized")

# Create a new Chrome driver instance with the specified options

driver = uc.Chrome(options=options)

# Navigate to the website URL

driver.get("TARGET_URL")

# Wait for the page to load for 10 seconds

time.sleep(10)

# Take a screenshot of the page and save it as "screenshot.png"

driver.save_screenshot("screenshot.png")

# Quit the browser instance

driver.quit()

What about the result? See below.

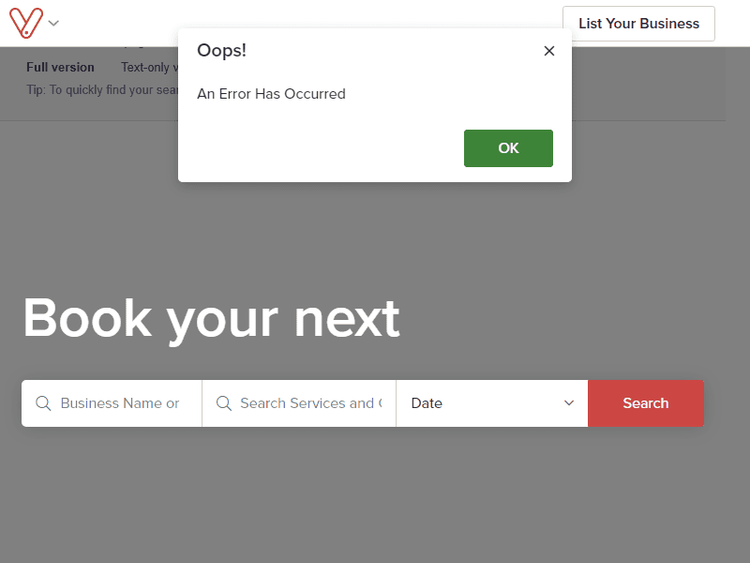

Great! But how about our second target URL?

Sadly, Vagaro's advanced bot detection system was able to identify and block undetected_chromedriver.
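Rather than checking a screenshot by eye, you can test the returned HTML for signs of a block page. The sketch below is a rough heuristic: the marker strings are assumptions based on text commonly reported on Incapsula block and challenge pages, and they may not cover every case.

```python
# Assumed markers: strings commonly reported on Incapsula block/challenge pages.
BLOCK_MARKERS = ("incapsula incident id", "_incapsula_resource")


def looks_blocked(html: str) -> bool:
    """Return True if the HTML contains a known block-page marker."""
    lowered = html.lower()
    return any(marker in lowered for marker in BLOCK_MARKERS)


# Hypothetical sample pages for illustration.
blocked_page = "<html><body>Request unsuccessful. Incapsula incident ID: 0-0</body></html>"
normal_page = "<html><body><h1>Product catalog</h1></body></html>"

print(looks_blocked(blocked_page), looks_blocked(normal_page))  # prints: True False
```

With Selenium, you could call `looks_blocked(driver.page_source)` before `driver.quit()` and retry or switch methods when it returns `True`.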

Method #3: Scrape Google's Cache

When Google bots crawl websites for indexing, they cache their pages. This is an opportunity for us because most bot detection protections have an allowlist where search engines are included, so we can scrape Google's cache as a workaround.

This approach is easier than trying to imitate user behavior, but it's only viable if your target website doesn't change its data often, and it also depends on the type of data you want to extract. Not to mention that some websites don't allow caching, which means this won't work for every project.

But let's see an example using Selenium and adding the prefix https://webcache.googleusercontent.com/search?q=cache: to our target page.

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

import time

options = Options()

options.add_argument("--headless")

driver = webdriver.Chrome(options=options)

# replace the website URL with the one you want to scrape

website_url = "TARGET_URL"

# create the Google cache URL by adding the prefix

google_cache_url = f"https://webcache.googleusercontent.com/search?q=cache:{website_url}"

# navigate to the Google cache URL

driver.get(google_cache_url)

time.sleep(20)

driver.save_screenshot("datacache.png")

driver.quit()
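As a side note, the f-string in the script above works for simple URLs, but a target URL with its own query string can get mangled. Here's a minimal sketch that percent-encodes the target first; `google_cache_url` is a hypothetical helper, and example.com is a placeholder target.

```python
from urllib.parse import urlencode

CACHE_ENDPOINT = "https://webcache.googleusercontent.com/search"


def google_cache_url(page_url: str) -> str:
    """Build the cache lookup URL, percent-encoding the target page URL."""
    return f"{CACHE_ENDPOINT}?{urlencode({'q': f'cache:{page_url}'})}"


# example.com is a placeholder; note the target's own '?' and '=' get encoded.
print(google_cache_url("https://www.example.com/products?page=2"))
# prints: https://webcache.googleusercontent.com/search?q=cache%3Ahttps%3A%2F%2Fwww.example.com%2Fproducts%3Fpage%3D2
```

You can then pass the returned string to `driver.get()` exactly as in the script.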

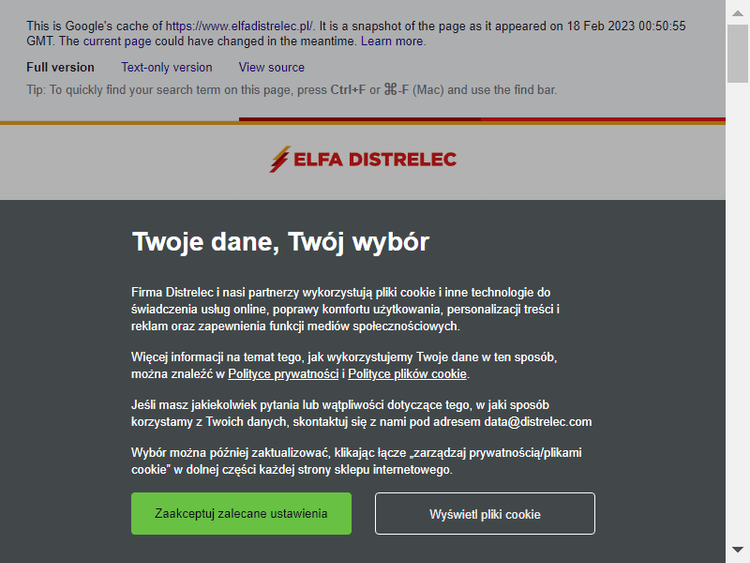

Here's the output for the e-commerce store:

Nice, we managed to scrape the cache! However, the data is different from the undetected_chromedriver result. This means that while you gain access, the received content may not match your needs.

Let's try now with Vagaro:

Ugh! Same result as before. All we get is outdated content.

Conclusion

As security solutions like Incapsula (Imperva) gain more and more popularity, having the means to get around them has become essential for any scraper.

Today, you learned how to bypass Incapsula in this step-by-step guide using three different approaches:

- Using undetected_chromedriver with Selenium, a popular library for emulating user behavior, which failed to bypass advanced bot protection.

- Scraping Google's cache, which led to poor results with outdated data.

- ZenRows, a reputable web scraping API, successfully managed to retrieve the desired data at all levels of difficulty.

Feel free to try ZenRows yourself by signing up now and getting 1,000 API credits.

Frequent Questions

Is Incapsula the Same As Imperva?

Yes, Incapsula and Imperva are the same. Incapsula was founded in 2009 and originated within Imperva, which owned 85% of its shares. Imperva then acquired the remaining stake in 2014.

How Do I Get Rid of Imperva?

The way to get past Imperva is to emulate human behavior as best you can, since the firewall uses advanced anti-bot techniques. So, the closer you are to a natural user, the higher your chances of bypassing Imperva.

How Do You Get Around Incapsula?

Bypassing Incapsula is no mean feat, as its bot management systems continuously evolve. However, by employing advanced approaches that make your scraper harder to detect, e.g., using a reputable scraping API like ZenRows, you can successfully get around the firewall.

Is Incapsula a WAF?

Yes. Incapsula is a Web Application Firewall (WAF) that uses a combination of client fingerprinting, signature-based, and behavior-based analysis to identify and block bots in real time. The company also offers services such as load balancing and CDN.
