Many websites use anti-bot technologies. These make extracting data from them through web scraping more difficult. In this article, you'll learn the most commonly adopted bot protection techniques and how you can bypass bot detection.

Bots generate almost half of the world's Internet traffic, and many of them are malicious. This is why so many sites implement bot detection systems. Such technologies block requests that they don't recognize as executed by humans. As a result, bot detection is a problem for your scraping process.

Let's learn everything you need to know about bot mitigation and the most popular bot protection approaches. Of course, you'll also see how to defeat them.

What Is Bot Detection?

Bot detection (or mitigation) is the use of technology to determine whether a user is a real human being or a bot. Specifically, these technologies collect data and/or apply statistical models to identify patterns, actions, and behaviors that mark traffic as coming from an automated source.

A bot is an automated software application programmed to perform specific tasks. Bots often imitate human behavior and interact with web pages and real users. Note that not all of them are bad: even Google uses bots to crawl the Internet.

According to the 2022 Imperva Report, bot traffic made up 42.3% of all Internet activity in 2021. This makes detection techniques a critical aspect of security. That's especially true, considering 27.7% of traffic comes from bad bots.

As you can see, malicious bots are widespread. Plus, they target small and large businesses indiscriminately. So, bot mitigation has become vitally important. That's why more and more sites are adopting such protection techniques.

As a consequence, they form a large part of anti-scraping technologies, meaning they can block your spiders. After all, web scrapers are software applications that automatically crawl several pages. This makes them bots.

If you want your scraper to be effective, you must know how to bypass bot detection. More generally, you have to get around anti-scraping measures.

To learn more, dig into our article on the seven anti-scraping techniques you need to know or try our guide on web scraping without getting blocked.

How Do You Get Past Bot Detection?

We'll start with the general tips that everyone should be aware of. You should always apply them because they allow your scraper to overcome most obstacles.

Since bot detection is about collecting data, you should protect your scraper behind a web proxy. A web scraping proxy server acts as an intermediary between you and your target website server. While doing this, it prevents your IP address and some HTTP headers from being exposed.

This allows you to protect your identity and makes fingerprinting more difficult. A website creates a digital fingerprint when it manages to profile you. This process works by looking at your computer specs, browser version, browser extensions, and preferences.

In other words, the idea is to uniquely identify you based on your settings and hardware. Then, a bot detection system can step in and verify whether your identity is real.
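
The idea of deriving one identifier from many settings can be sketched in a few lines of Python. This is a simplified illustration, not how any specific anti-bot vendor works: the `fingerprint` function and every attribute value below are hypothetical, and real systems combine far more signals.

```python
import hashlib
import json


def fingerprint(attributes: dict) -> str:
    """Return a stable identifier derived from a client's reported attributes."""
    # Sort the keys so the same attributes always serialize to the same string
    canonical = json.dumps(attributes, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical attribute values, for illustration only
visitor_a = {
    "user_agent": "Mozilla/5.0 (Windows NT 10.0) Chrome/107.0.0.0",
    "screen": "1920x1080",
    "timezone": "Europe/Rome",
    "extensions": ["adblock"],
}
# Same visitor profile, except for the screen resolution
visitor_b = dict(visitor_a, screen="1366x768")

same_settings = fingerprint(visitor_a) == fingerprint(dict(visitor_a))
different_settings = fingerprint(visitor_a) == fingerprint(visitor_b)
print(same_settings, different_settings)
```

Identical settings always produce the same identifier, while changing a single attribute produces a different one. That's why varying what your scraper exposes makes it harder to track.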

As a general solution to bot detection, you should introduce randomness into your scraper. For example, you could add random pauses to the crawling process. After all, no human being works 24/7 nonstop. Also, you need to change your IP and HTTP headers as much as possible. This makes your requests more difficult to track.

As you can see, these solutions are fairly general. Bypassing bot detection is usually more complicated than this, so you may need more targeted approaches. Learning the most widely used detection techniques and the ways around them will come in handy.
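
Random pauses could look like the minimal sketch below. The helper names `random_pause` and `crawl`, as well as the default delay range, are assumptions made for illustration. The `fetch` argument is any function that downloads a URL.

```python
import random
import time


def random_pause(min_seconds: float, max_seconds: float) -> float:
    """Sleep for a random duration between the two bounds and return it."""
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay


def crawl(urls, fetch, min_seconds=2.0, max_seconds=6.0):
    """Call fetch(url) for each URL, pausing a random amount of time between calls."""
    results = []
    for index, url in enumerate(urls):
        # No need to wait before the very first request
        if index > 0:
            random_pause(min_seconds, max_seconds)
        results.append(fetch(url))
    return results
```

For example, you could call `crawl(urls, requests.get)` to fetch a list of pages with an irregular rhythm instead of a fixed one.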

Top Five Bot Detection Solutions and How To Bypass Them

If you want your scraping process to work reliably, you need to overcome several obstacles. Bot detection is one of them.

So, let's dig into the five most adopted and effective anti-bot solutions and how to bypass them.

1. IP Address Reputation

One of the most widely adopted approaches is IP tracking.

The system tracks all the requests a website receives. If too many come from the same IP in a limited time, the system blocks the IP. This happens because only software can make that many requests in such a short time.

It could also block an IP because all its requests come at regular intervals. Once again, this is something that only a bot can do. No human being can act so programmatically.
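
To make the two rules above concrete, here's a rough sketch of how such a tracker might work on the server side. It's an illustration under assumed thresholds, not the logic of any real product: `IpTracker`, the 60-second window, the request budget, and the jitter threshold are all hypothetical.

```python
from collections import defaultdict, deque
from statistics import pstdev

WINDOW_SECONDS = 60        # assumed observation window
MAX_REQUESTS = 100         # assumed request budget per window
MIN_JITTER_SECONDS = 0.05  # assumed minimum spread between request intervals


class IpTracker:
    def __init__(self):
        # Maps each IP to the timestamps of its recent requests
        self.timestamps = defaultdict(deque)

    def is_suspicious(self, ip: str, now: float) -> bool:
        history = self.timestamps[ip]
        history.append(now)
        # Drop requests that fell out of the observation window
        while history and now - history[0] > WINDOW_SECONDS:
            history.popleft()
        # Rule 1: too many requests in a limited time
        if len(history) > MAX_REQUESTS:
            return True
        # Rule 2: requests arrive at suspiciously regular intervals
        if len(history) >= 10:
            times = list(history)
            gaps = [later - earlier for earlier, later in zip(times, times[1:])]
            if pstdev(gaps) < MIN_JITTER_SECONDS:
                return True
        return False
```

A client sending one request exactly every second gets flagged by the second rule as soon as ten requests have been seen, even though it stays well under the request budget.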

What's important to notice here is that these anti-bot systems can damage your IP reputation permanently. Reputation measures the behavioral quality of an IP. In other words, it reflects the number of unwanted requests sent from the same address.

If your reputation deteriorates, this could represent a serious problem for your scraper, especially if you aren't using any IP protection system. Verify with Project Honey Pot if your IP has been compromised.

The most reliable way to protect it is to use a rotation system. Keep in mind that premium proxy servers offer IP rotation. You can use a proxy with Python's Requests library to bypass bot detection as follows:

import requests

# defining the proxy servers
proxies = {
    "http": "http://yourhttpproxyserver.com:8080",
    "https": "http://yourhttpsproxyserver.com:8090",
}

# your web scraping target URL
url = "https://targetwebsite.com/example"

# performing an HTTP request through the proxy
response = requests.get(url, proxies=proxies)

All you have to do is define a proxies dictionary specifying the HTTP and HTTPS connections. This variable maps each protocol to the proxy URL the premium service provides you with. Then, pass it to requests.get() via the proxies parameter. Learn more about proxies in requests.
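
If your provider gives you several proxy endpoints rather than a single rotating one, you could cycle through them yourself. The sketch below is one possible approach: the proxy URLs are placeholders, and `proxy_rotation` and `fetch_with_rotation` are hypothetical helper names.

```python
import itertools

# Hypothetical proxy endpoints; replace them with the ones from your provider
PROXY_URLS = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def proxy_rotation(proxy_urls):
    """Yield a requests-style proxies dictionary for each proxy, in an endless loop."""
    for proxy_url in itertools.cycle(proxy_urls):
        yield {"http": proxy_url, "https": proxy_url}


def fetch_with_rotation(url, rotation, timeout=10):
    """Send a GET request through the next proxy in the rotation."""
    import requests  # imported here so the rotation helper works on its own

    return requests.get(url, proxies=next(rotation), timeout=timeout)


rotation = proxy_rotation(PROXY_URLS)
```

Each call to `fetch_with_rotation(url, rotation)` then goes out through a different proxy, so no single IP accumulates all the requests.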

ZenRows offers an excellent premium proxy service. Try it and see for yourself.

2. HTTP Headers and User-Agent Tracking

Bot detection technologies typically analyze HTTP headers to identify malicious requests. If a request doesn't contain an expected set of values in some key headers, the system blocks it.

Most commonly, the system singles out the User-Agent header as the most important one. This contains information that identifies the browser, OS, and/or vendor version from which the request came. If the request doesn't appear to originate from a browser, the bot detection system is likely to identify it as coming from a script. In other words, your web crawlers should always set a valid User-Agent header.

The anti-bot system may look at the Referer header. This string contains an absolute or partial address of the web page the request comes from. If this is missing, the system may mark the request as malicious.

You can set your headers with requests to bypass bot detection as below:

import requests

# defining the custom headers
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36",
    "Referer": "https://targetwebsite.com/page1",
}

# your web scraping target URL
url = "https://targetwebsite.com/example"

# performing an HTTP request with the custom headers
response = requests.get(url, headers=headers)

Define a dictionary that stores your custom HTTP headers. Then, pass it to requests.get() through the headers parameter. Learn more about custom headers in requests.
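
Sending the same User-Agent on every request is itself a trackable pattern, so you may want to vary it. Here's a small sketch, assuming you maintain your own list of realistic browser strings. The entries in `USER_AGENTS` are example values and `build_headers` is a hypothetical helper.

```python
import random

# Example browser User-Agent strings; keep your own list up to date
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36",
]


def build_headers(referer: str) -> dict:
    """Return request headers with a randomly chosen User-Agent."""
    return {
        "User-Agent": random.choice(USER_AGENTS),
        "Referer": referer,
    }
```

You can then pass `headers=build_headers("https://targetwebsite.com/page1")` to requests.get() on each call.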

3. JavaScript Challenges

A JavaScript challenge is a technique bot protection systems use to prevent bots from visiting a given web page. A single page can contain hundreds of JS challenges. All users, even legitimate ones, will have to pass them to access the content.

You can think of it as any kind of challenge executed by the browser via JS. A browser that can execute JavaScript will automatically face one. This means that they run transparently. The user might not even be aware of it.

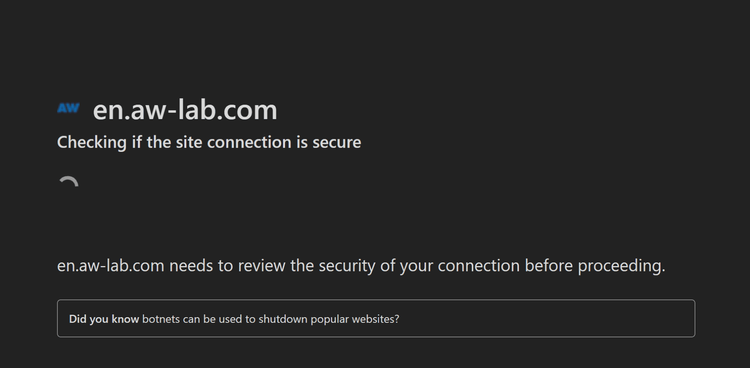

Some of them may take time to run, though. This results in a delay of several seconds in page loading. In this case, the bot detection system may notify as below:

If you see this on your target website, you now know that it uses a bot detection system. This means that if your scraper doesn't have a JavaScript stack, it won't be able to execute and pass the challenge.

Since web crawlers usually execute server-to-server requests, no browsers are involved. This means no JavaScript and no way to bypass bot detection. In other words, if you want to pass a JavaScript challenge, you have to use a browser.

So, your spider should adopt headless browser technology, such as Selenium or Puppeteer. For example, Selenium launches a real browser with no UI to execute requests. So, when using the software, your scraper opens the target page in a browser, and this helps it bypass bot detection.
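
As a starting point, a headless Selenium session might look like the sketch below. It assumes Selenium 4 and a recent Chrome are installed (older Chrome versions use `--headless` instead of `--headless=new`). The helper names and the fixed wait time are hypothetical choices, and a headless browser alone doesn't guarantee that every challenge is passed.

```python
def headless_chrome_arguments():
    """Command-line switches passed to Chrome; adjust them to your needs."""
    return ["--headless=new", "--window-size=1920,1080"]


def fetch_rendered_html(url: str, wait_seconds: float = 10.0) -> str:
    """Open url in headless Chrome, let its JavaScript run, and return the final HTML."""
    # Imported here so this module loads even where Selenium isn't installed
    import time

    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    for argument in headless_chrome_arguments():
        options.add_argument(argument)

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Crude fixed wait to give a challenge time to complete (an assumption;
        # an explicit wait on a page element is usually more robust)
        time.sleep(wait_seconds)
        return driver.page_source
    finally:
        # Always close the browser, even if the page fails to load
        driver.quit()
```

Calling `fetch_rendered_html("https://targetwebsite.com/example")` returns the HTML after the browser has executed the page's scripts, which a plain HTTP client can't do.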

Approaching a JS challenge and solving it isn't easy, but it is possible.

4. Activity Analysis

Activity analysis is about collecting and analyzing data to understand whether the current user is a human or a bot. In detail, such a system continuously tracks and processes user data.

Doing so, it looks for well-known patterns of human behavior. If it doesn't find enough of them, the system recognizes the user as a bot. Then, it can block it or challenge it with a JS challenge or CAPTCHA.

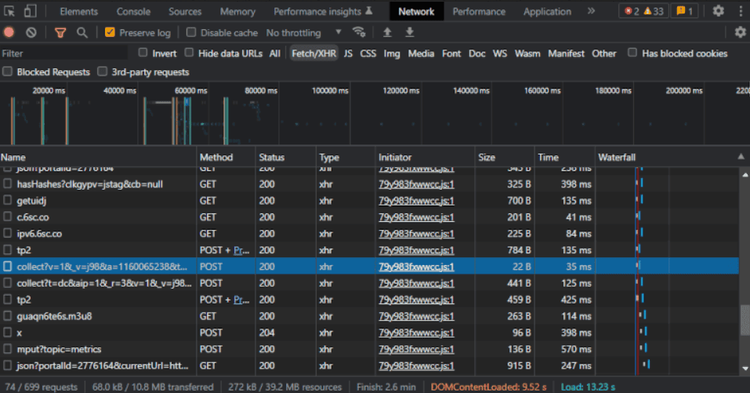

You can try to avoid being flagged by stopping the data collection. First, verify if your target website collects user information. To do this, you can examine the XHR section in the DevTools' Network tab.

Look for suspicious POST or PATCH requests that trigger when you perform an action on the page. As in the example above, these requests generally send encoded data. Keep in mind that activity analysis collects user info via JavaScript, so check which JS file performs these requests. You can see it in the "Initiator" column.

Now, block its execution. Note that this approach might not work, or might even make the situation worse. Anyway, here's how you can do it with Pyppeteer (the Python port of Puppeteer):

import asyncio

from pyppeteer import launch
from pyppeteer.network_manager import Request

# defining the request event handler function
async def intercept_request(request: Request):
    # if the request targets the user data collection JS file, block it
    if request.url.endswith("79y983fxwwcc.js"):
        await request.abort()
    else:
        await request.continue_()

async def main():
    browser = await launch()
    page = await browser.newPage()
    # activating the request interception on Pyppeteer to block specific requests on this page
    await page.setRequestInterception(True)
    # registering the request event handler
    page.on("request", lambda request: asyncio.ensure_future(intercept_request(request)))
    # visit the target page
    await page.goto("https://yourtargetwebsite.com")
    # close the browser once you're done with the page
    await browser.close()

asyncio.run(main())

This uses the Pyppeteer request interception feature to block unwanted data collection requests. It's only one of the things Python has to offer when it comes to web scraping, so consider also taking a look at our complete guide on web scraping in Python.

This is just an example. Keep in mind that finding ways to bypass bot detection in this case is very difficult. That's because these systems use AI and machine learning to learn and evolve. Thus, a workaround might not work for long. At the same time, advanced anti-scraping services such as ZenRows offer solutions to bypass them.

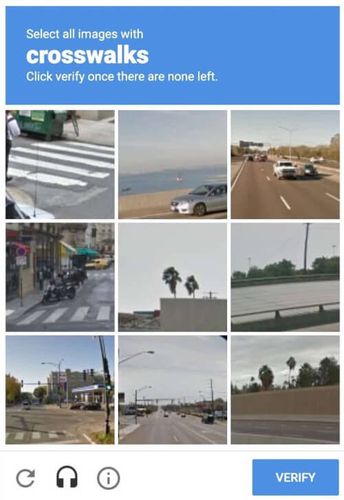

5. CAPTCHAs

A CAPTCHA is a special kind of challenge-response authentication adopted to determine whether a user is human. It presents visitors with tests that are hard for computers to perform but easy for human beings to solve.

Google's reCAPTCHA is one of the market's most advanced and effective bot detection systems. Over five million sites use it. This makes CAPTCHAs one of the most popular anti-bot protection systems. Also, users have gotten used to them and aren't bothered by having to deal with them.

One of the best ways to pass the challenge is to use a CAPTCHA farm. These companies offer services that scrapers can query programmatically, backed by a pool of human workers who solve the tests for you. However, the fastest and cheapest option is to use a web scraping API that is smart enough to avoid blocking screens. Find out more on how to automate CAPTCHA solving.

Conclusion

You've got an overview of what you need to know about bot detection, including some standard to advanced ways to bypass it. As shown here, there are many ways your scraper can be identified as a bot and blocked. At the same time, there are some precautions and additional techniques you can employ to ensure that doesn't happen.

What matters is to know how these bot detection technologies work, so you'll be better prepared to deal with them.

Since bypassing them is pretty challenging, you can opt for a professional service. ZenRows API provides advanced scraping capabilities that allow you to forget about bot detection problems. Save yourself headaches and many coding hours now. Sign up and try ZenRows for free.
