Several tools and libraries are available online to build a web scraping application in minutes. Yet, if you want your crawling and scraping process to be reliable, follow the best web scraping practices.

What Is Scraping? And Why Is It Useful?

Web scraping is about extracting data from the web. Specifically, it refers to retrieving data from web pages through an automated script or application.

Scraping a web page means downloading its content and extracting the desired data from it. Learn more about what web scraping is in our in-depth guide.

Now, why do you need web scraping?

First, scraping the web can save you a lot of time because it allows you to retrieve public data automatically. Compare this operation with manual copying, and you'll see the difference.

Also, web scraping is helpful for the following:

- Competitor analysis: By scraping data from your competitors' websites, you can track their services, pricing, and marketing strategies.

- Market research: You can use web scraping to collect data about a particular market, industry, or niche. This is especially useful in real estate, for example.

- Machine learning: Scraped data can easily become the primary data source for your machine learning and AI processes.

Let's now see how to build a reliable web scraper with the most important web scraping best practices.

Top 10 Web Scraping Best Practices

Let's go through ten tips for successful web scraping.

1. Don't Overload the Server

Your crawler should make only a few requests to the same server in a short time. That's because your target website may be unable to handle a high load. So, be sure to add a pause after each request.

This delay between requests allows your web crawler to visit pages without affecting the experience of other users. After all, performing too many requests could overload the server. That'd make the target website of your scraping process very slow for all visitors.
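To illustrate the pause between requests, here is a minimal sketch of a throttle that enforces a minimum delay. The `Throttle` class, the 0.2-second value, and the example URLs are assumptions made for illustration, and the actual fetch call is left out so that the sketch runs offline.

```python
import time


class Throttle:
    """Enforce a minimum pause between consecutive requests to one server."""

    def __init__(self, min_delay_seconds):
        self.min_delay_seconds = min_delay_seconds
        self._last_request_at = None

    def wait(self):
        """Sleep just long enough to respect the delay and return the time slept."""
        slept = 0.0
        if self._last_request_at is not None:
            elapsed = time.monotonic() - self._last_request_at
            remaining = self.min_delay_seconds - elapsed
            if remaining > 0:
                time.sleep(remaining)
                slept = remaining
        self._last_request_at = time.monotonic()
        return slept


# Assumed delay; tune it to what the target site can comfortably handle.
throttle = Throttle(min_delay_seconds=0.2)
for url in ["https://example.com/page/1", "https://example.com/page/2"]:
    throttle.wait()
    # Your own HTTP request for `url` would go here.
```

Calling `wait()` before every request keeps the crawler polite even when parsing a page takes almost no time.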

Plus, executing many requests may activate anti-scraping systems. These can block your scraper from accessing the site, and you shouldn't overlook them. In other words, the goal of your web crawler is to visit all pages of interest of a website, not to perform a DoS attack.

Also, consider running the crawler at off-peak times. For example, traffic to the target website will likely drop significantly at night. That's one of the most popular web scraping best practices.

2. Look for Public APIs

Many websites rely on APIs to retrieve data. If you aren't familiar with this concept, an API (Application Programming Interface) is a way for two or more applications to communicate and exchange data.

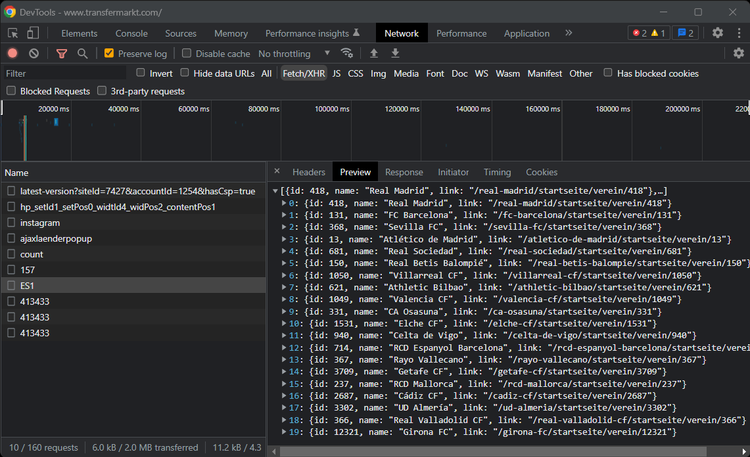

Most websites retrieve the data needed to render web pages from APIs called by the front end. Now, let's assume that's what your target site is doing. In this scenario, you can sniff these API calls in the XHR tab of the Network section of your browser's developer tools. Learn how to intercept XHR requests in Python.

As shown above, you can see everything you need to replicate those API requests in your scraper. This way, you'll get the data of interest without scraping the website. Also, keep in mind that most APIs are programmable via body or query parameters.

So, you can use these parameters to effortlessly get the desired data in human-readable formats through the API response. Also, these APIs may return URLs and valuable information for web crawling.
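As a rough sketch of replicating such an API call, the snippet below builds a request with query parameters and parses a JSON response. The endpoint `https://example.com/api/products`, the `page` and `per_page` parameters, and the `items` key are all hypothetical; replace them with what you actually see in the Network tab.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_api_request(base_url, params):
    """Build a GET request whose query string carries the API parameters."""
    query = urlencode(params)
    return Request(f"{base_url}?{query}", headers={"Accept": "application/json"})


def extract_items(response_body):
    """Parse a JSON body and return its 'items' list (hypothetical key)."""
    payload = json.loads(response_body)
    return payload.get("items", [])


request = build_api_request(
    "https://example.com/api/products",  # hypothetical endpoint
    {"page": 2, "per_page": 50},         # hypothetical parameters
)
print(request.full_url)
# urllib.request.urlopen(request) would send it; omitted to keep the sketch offline.
```

Changing the parameter values is often all it takes to page through the whole dataset.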

3. Respect the robots.txt File and Follow the Sitemap

robots.txt is a text file that search engine crawler bots read to learn how they're supposed to crawl and index the pages of a website. In other words, the robots.txt file generally contains instructions for crawlers.

Thus, your web crawler should take robots.txt into account as well. Typically, you can find the robots.txt file in the root directory of the target website. In other words, you can generally access it at https://yourtargetwebsite.com/robots.txt.

This file stores all the rules for web crawlers' interaction with a website. So, you should always check the robots.txt file before crawling your target site. Also, this file can include the path to the sitemap.
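Python's standard library can read these rules for you. The sketch below parses an inline sample with `urllib.robotparser`; the rules, the `my-crawler` user agent, and the URLs are made up for the example. `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content, invented for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 5
Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Check whether a given user agent may fetch a URL.
print(parser.can_fetch("my-crawler", "https://example.com/products/1"))   # True
print(parser.can_fetch("my-crawler", "https://example.com/admin/users"))  # False

# Read the requested delay and the sitemap locations, when present.
print(parser.crawl_delay("my-crawler"))
print(parser.site_maps())
```

Against a live site, you would call `parser.set_url(...)` followed by `parser.read()` instead of `parse()`.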

The sitemap is a file that contains information about the pages, videos, and other files on a website. It generally stores the set of all canonical URLs that the search engines should index. As a result, following the sitemap can make web crawling very easy. So, thanks to these web scraping best practices, you can save a lot of time.
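Here is a minimal sketch of extracting URLs from an XML sitemap with the standard library. It assumes the sitemap uses the standard sitemaps.org namespace; the sample document is invented for the example.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Sample sitemap, invented for this example.
SAMPLE_SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/products</loc></url>
</urlset>
"""


def extract_sitemap_urls(xml_text):
    """Return the text of every <loc> element found in the sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall(".//sm:loc", SITEMAP_NS) if loc.text]


for url in extract_sitemap_urls(SAMPLE_SITEMAP):
    print(url)
```

The same function also works on a sitemap index file, where each `<loc>` points to another sitemap rather than to a page.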

4. Use Common HTTP Headers and Rotate User-Agent

Anti-scraping technologies examine the HTTP headers to identify malicious users. In detail, if a request doesn't have a set of expected values in some key HTTP headers, the system blocks it. These HTTP headers generally include Referer, User-Agent, Accept-Language, and Cookie.

Specifically, the User-Agent header contains information that identifies the browser, operating system, and vendor version the HTTP request comes from. It's one of the essential headers that anti-bot technologies look at. If your scraper doesn't set the User-Agent of a popular browser, its requests will likely be blocked.

To make your scraper harder to track, you should also keep changing the value of these headers. That is especially true for the User-Agent header. For example, you can randomly pick it from a set of valid User-Agent strings.
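The random pick can be sketched as follows. The User-Agent strings are only examples of the format and will go stale, so treat the list, the `build_headers` helper, and the other header values as assumptions to adapt.

```python
import random

# Example User-Agent strings; keep your own list up to date.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def build_headers(rng=random):
    """Return browser-like headers with a randomly chosen User-Agent."""
    return {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": "en-US,en;q=0.9",
        "Referer": "https://www.google.com/",
    }


headers = build_headers()
print(headers["User-Agent"])
```

Calling `build_headers()` before each request gives every request its own User-Agent while keeping the other headers consistent.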

5. Hide Your IP with Proxy Services

You should never expose your real IP when performing scraping. That is one of the most basic web scraping best practices. The reason is that you don't want anti-scraping systems to block your real IP.

So, you should make requests through a proxy service. In detail, it acts as an intermediary between your scraper and your target website. That means the website server sees the proxy server's IP, not yours.

Keep in mind that premium proxy services also offer IP rotation. That allows your scraper to make requests with ever-changing IPs, making IP banning more difficult. Note that ZenRows offers an excellent premium proxy service.
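If you manage your own list of proxies, a simple round-robin rotation could look like the sketch below. The proxy addresses are hypothetical placeholders, and `rotating_openers` is a helper name chosen for this example. No request is sent here.

```python
from itertools import cycle
from urllib.request import ProxyHandler, build_opener

# Hypothetical proxy addresses; replace them with the ones from your provider.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def rotating_openers(proxies):
    """Yield (proxy, opener) pairs forever, cycling through the proxy list."""
    for proxy in cycle(proxies):
        handler = ProxyHandler({"http": proxy, "https": proxy})
        yield proxy, build_opener(handler)


rotation = rotating_openers(PROXIES)
proxy, opener = next(rotation)
print(proxy)
# opener.open("https://example.com", timeout=10) would route the request through `proxy`.
```

Taking the next pair before each request spreads the traffic evenly across the pool.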

Learn more on how to rotate proxies in Python.

6. Add Randomness to Your Crawling Logic

Some websites rely on advanced anti-scraping techniques based on user behavior analytics. These technologies look for patterns in user behavior to understand whether the user is human. The idea behind them is that humans don't follow patterns when navigating a website.

You may need to make your web scraper appear human in the eyes of these anti-scraping technologies. You can achieve this by adding random offsets and mouse movements to your web scraping logic and by clicking on random links.
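Mouse movements require a browser automation tool, but two simpler forms of randomness can be sketched with the standard library alone: a pause with random jitter and a shuffled visit order. The function names and default values below are assumptions for illustration.

```python
import random


def random_delay(base_seconds=2.0, jitter_seconds=1.5, rng=random):
    """Return a pause length between base and base + jitter seconds."""
    return base_seconds + rng.uniform(0, jitter_seconds)


def randomized_visit_order(urls, rng=random):
    """Return a shuffled copy of the URLs so pages aren't visited in a fixed order."""
    shuffled = list(urls)
    rng.shuffle(shuffled)
    return shuffled


links = ["/products", "/about", "/blog", "/contact"]
for link in randomized_visit_order(links):
    pause = random_delay()
    print(f"would wait {pause:.2f}s, then visit {link}")
    # time.sleep(pause) and the request itself are omitted to keep the sketch quick.
```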

7. Watch Out for Honeypots

Honeypot websites are decoy sites containing false data. Similarly, honeypot traps are hidden links that legitimate users can't see in the browser. Honeypot links usually have the CSS display property set to "none," so users can't see them.

When the web scraper visits a honeypot website, an anti-scraping system can track and study its behavior. Then, the protection system can use the collected data to recognize and block your scraper. You can avoid honeypot websites by ensuring that the website the scraper is targeting is real.

Similarly, the anti-bot system blocks requests from IPs that click on honeypot links. In this case, one of the best practices for web scraping is to avoid following hidden links when crawling a website.
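A rough way to skip the most obvious hidden links is to check each anchor's inline attributes. The sketch below only looks at the inline `style` attribute and the `hidden` attribute of the `<a>` tag itself, so links hidden through external stylesheets or hidden parent elements will slip through. The class name and sample HTML are invented for the example.

```python
import re
from html.parser import HTMLParser

# Inline styles that hide an element from human visitors.
HIDDEN_STYLE = re.compile(r"display\s*:\s*none|visibility\s*:\s*hidden", re.IGNORECASE)


class VisibleLinkParser(HTMLParser):
    """Collect hrefs of <a> tags that are not hidden via inline attributes."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        style = attributes.get("style") or ""
        if not href:
            return
        if "hidden" in attributes or HIDDEN_STYLE.search(style):
            return  # likely a honeypot link, so don't follow it
        self.links.append(href)


SAMPLE_HTML = (
    '<a href="/products">Products</a>'
    '<a href="/trap" style="display: none">Secret</a>'
    '<a href="/trap2" hidden>Secret</a>'
)

link_parser = VisibleLinkParser()
link_parser.feed(SAMPLE_HTML)
print(link_parser.links)  # ['/products']
```

For stricter checks, a headless browser can tell you whether an element is actually rendered on the page.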

8. Cache Raw Data and Write Logs

One of the most effective web scraping best practices is to cache all HTTP requests and responses performed by your scraper. For example, you can store this information in a database or log file.

You can perform new scraping iterations offline if you download all the HTML pages visited during the crawling process. That is great for extracting data you weren't interested in during the first iteration.
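One simple way to keep those pages is a file-based cache keyed by a hash of the URL. The `PageCache` class below is a minimal sketch with an assumed layout of one `.html` file per URL, and it uses a temporary directory only so the example cleans up after itself.

```python
import hashlib
import tempfile
from pathlib import Path


class PageCache:
    """Store raw HTML on disk, one file per URL, named by the URL's SHA-256 hash."""

    def __init__(self, directory):
        self.directory = Path(directory)
        self.directory.mkdir(parents=True, exist_ok=True)

    def _path_for(self, url):
        digest = hashlib.sha256(url.encode("utf-8")).hexdigest()
        return self.directory / f"{digest}.html"

    def get(self, url):
        """Return the cached HTML for the URL, or None if it was never stored."""
        path = self._path_for(url)
        return path.read_text(encoding="utf-8") if path.exists() else None

    def put(self, url, html):
        """Save the raw HTML so later iterations can run offline."""
        self._path_for(url).write_text(html, encoding="utf-8")


with tempfile.TemporaryDirectory() as tmp:
    cache = PageCache(tmp)
    cache.put("https://example.com/", "<html><body>Hello</body></html>")
    print(cache.get("https://example.com/"))
    print(cache.get("https://example.com/missing"))  # None
```

In a real project, you would point `PageCache` at a persistent directory and check `get()` before making any request.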

If saving the entire HTML document represents a problem in terms of disk space, consider storing only the most important HTML elements in string format in a database.

Also, you need to know when your scraper has already visited a page. In general, you should always keep track of the scraping process. You can achieve this by logging the pages visited, the time required to scrape a page, the outcome of the data extraction operation, and more.
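The tracking described above can be sketched with the standard `logging` module and a set of visited URLs. The `scrape_once` helper and the log message format are assumptions, and `scrape` stands for whatever function does your actual extraction.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
logger = logging.getLogger("scraper")

visited = set()  # URLs the scraper has already attempted


def scrape_once(url, scrape):
    """Run scrape(url) unless the URL was already visited, logging outcome and duration."""
    if url in visited:
        logger.info("skipped url=%s reason=already_visited", url)
        return None
    visited.add(url)
    started = time.perf_counter()
    try:
        result = scrape(url)
        outcome = "ok"
    except Exception:
        logger.exception("failed url=%s", url)
        result, outcome = None, "error"
    elapsed = time.perf_counter() - started
    logger.info("visited url=%s outcome=%s elapsed=%.3fs", url, outcome, elapsed)
    return result


# Stand-in extraction function used only for this example.
title = scrape_once("https://example.com/", lambda url: "Example Domain")
print(title)
```

To survive restarts, you could load `visited` from the log file or a database when the scraper starts.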

9. Adopt a CAPTCHA Solving Service

CAPTCHAs are one of the most widely used tools by anti-bot protection systems. In detail, these challenges are easy to solve for a human being but not for a machine. If a user can't find a solution, the anti-bot system labels it as a bot.

Popular CDN (Content Delivery Network) services have built-in anti-bot systems involving CAPTCHAs. That's why adopting a CAPTCHA-solving service is one of the best web scraping practices.

These services, also known as CAPTCHA farms, rely on a pool of human workers to solve the tests for you. Yet, the fastest and cheapest way to avoid CAPTCHAs is to use advanced web scraping APIs.

Learn more on how you can automate CAPTCHA solving.

10. Avoid Legal Issues

Before scraping data from a website, you need to be sure that what you want to do is legal. In other words, you must take responsibility for the scraped data. That is why you should take a look at the Terms of Service of the target website. Here, you can learn what you can do with the scraped data.

In most cases, you don't have the right to republish the scraped data elsewhere for copyright reasons. Violating copyright can lead to legal problems for your company, which you want to avoid.

In general, you must perform web scraping responsibly. For example, you should avoid scraping sensitive data. As you can imagine, this is one of the most important best practices when scraping data from the web.

What Are the Best Scraping Tools?

The web scraping practices covered above are helpful, but to make the process easier, you need the right tool. Let's take a look at some top options:

1. ZenRows

ZenRows is a next-generation web scraping API and data extraction tool that allows you to scrape any website efficiently. With ZenRows, you no longer have to worry about anti-scraping or anti-bot systems. And, for the most popular websites, the HTML is converted into structured data. That makes it the best web scraping tool on the market.

2. Oxylabs

Oxylabs is one of the most popular proxy services available. Proxy servers are the basis of anonymity and allow you to protect your IP. Oxylabs is a market-leading proxy and web scraping solution service. It offers both premium and enterprise-level proxies.

3. Apify

Apify is a no-code tool that extracts structured data from any website. Specifically, Apify offers ready-to-use scraping tools that allow you to perform data retrieval processes that you'd typically perform manually in a web browser. Apify is a one-stop shop for web scraping, automation, and data extraction.

4. Import.io

Import.io is a cloud-based platform that allows you to extract unstructured and semi-structured data and convert it into structured data. You can then integrate that data into web apps with APIs and webhooks. Import.io offers a point-and-click UI and specializes in analyzing eCommerce data.

5. Scrapy

Scrapy is an open-source and collaborative framework to extract data from the web. Specifically, Scrapy is a Python framework that provides a web scraping API to extract data from online pages via XPath selectors. Scrapy is also a general-purpose web crawler.

Conclusion

Web scraping is complex, and you need to follow some rules to build a reliable application. There are several best practices, and here you looked at the most important ones.

In detail, you learned:

- What web scraping is and its benefits.

- What tools you should adopt to perform web scraping.

- What the ten best web scraping practices are.

- What the five most important web scraping tools are.

If you want to save some time and effort, sign up for free on ZenRows and get access to several features based on the web scraping best practices covered here.
