Scrapy Splash is a library that gives you JavaScript rendering capabilities in the popular library Scrapy. It allows you to control a headless browser to scrape dynamic web pages and mimic human behavior so that you reduce your chances of getting blocked while web scraping.

In this tutorial on Splash in Scrapy using Python, you'll first jump into the basics and then explore more advanced interactions through examples.

Let's dive in!

Prerequisites

The prerequisites include:

- Installing Python.

- Getting Scrapy.

- Starting a new Scrapy Python project.

You'll need Python 3 to follow this Splash Scrapy tutorial. Many systems come with it preinstalled, so verify that with the command below:

python --version

If yours does, you'll obtain an output like this:

Python 3.11.3

If you get an error or version 2.x, you must install Python. Download the Python 3.x installer, execute it, and follow the instructions. On Windows, don't forget to check the "Add python.exe to PATH" option in the wizard.

You now have everything required to initialize a Python project. Use the following commands to create a scrapy-splash folder with a Python virtual environment:

mkdir scrapy-splash

cd scrapy-splash

python -m venv env

Activate and enter the environment. On Linux or macOS, launch:

source env/bin/activate

On Windows, run:

env\Scripts\activate.ps1

Next, install Scrapy. Be patient, as this may take a couple of minutes.

pip install scrapy

Great! The scraping library is set up.

Initialize a Scrapy project called splash_scraper:

scrapy startproject splash_scraper

The splash_scraper directory will now contain these folders and files:

├── scrapy.cfg
└── splash_scraper
    ├── __init__.py
    ├── items.py
    ├── middlewares.py
    ├── pipelines.py
    ├── settings.py
    └── spiders
        └── __init__.py

Open the project in your favorite Python IDE, and you're ready to write some code!

Steps to Integrate Scrapy Splash for Python

Time to see how to get started with Scrapy Splash in Python.

Install Docker

Splash is a JavaScript rendering library that relies on many tools to work. The recommended way to have them all configured and ready to use is the official Docker image.

The easiest way to get Docker is through its Desktop app. Download the right installer for your platform from the official Docker website.

Launch the executable file and follow the wizard. You'll need to restart your system at the end of the installation process.

Start Docker Desktop as an administrator, accept the terms, and wait for the engine to load.

Fantastic! You can now retrieve, run, and deal with containers on your local machine.

Install Scrapy Splash for Python

Follow the instructions below to install and launch Splash.

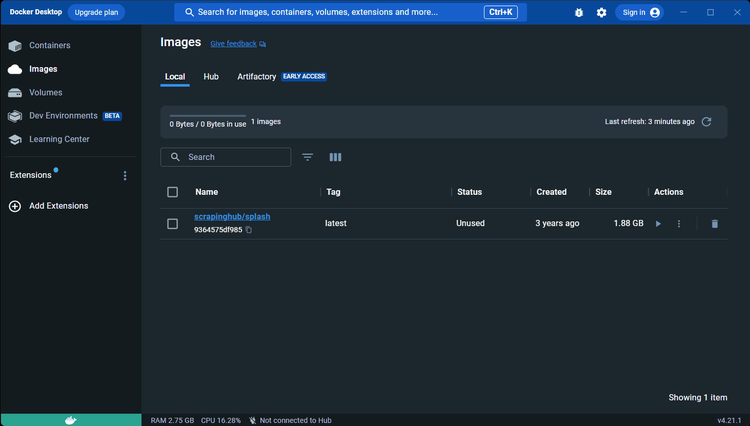

Make sure the Docker engine is running, open a terminal, and download the latest Splash image:

docker pull scrapinghub/splash

On Linux, add sudo before the Docker commands:

sudo docker pull scrapinghub/splash

Visit the "Images" section of Docker Desktop, and you'll now see the scrapinghub/splash image:

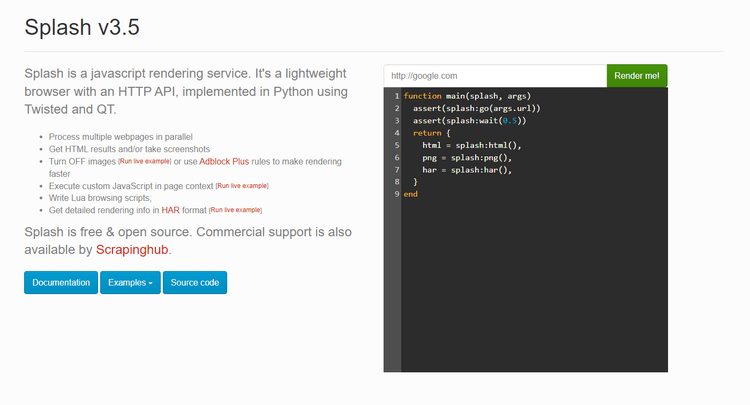

On macOS and Windows, start the container at port 8050:

docker run -it -p 8050:8050 --rm scrapinghub/splash

On Linux, add sudo again:

sudo docker run -it -p 8050:8050 --rm scrapinghub/splash

Wait until the Splash service logs the message below:

Server listening on http://0.0.0.0:8050

Splash is now available on port 8050. Open http://localhost:8050 in the browser, and you should see the Splash welcome page.

Perfect! Splash is working.
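If you prefer to verify the service from a script instead of the browser, the sketch below may help. It assumes Splash exposes a _ping endpoint that answers with a JSON body containing "status": "ok"; the is_splash_up() helper is a hypothetical name, not part of Splash or Scrapy.

```python
import http.client
import json
import urllib.request


def is_splash_up(base_url="http://localhost:8050", timeout=2.0):
    """Return True if the service at base_url answers the _ping endpoint."""
    try:
        with urllib.request.urlopen(f"{base_url}/_ping", timeout=timeout) as resp:
            payload = json.loads(resp.read().decode("utf-8"))
    except (OSError, ValueError, http.client.HTTPException):
        # connection refused, timeout, HTTP error, or a non-JSON body
        return False
    # assumption: a healthy Splash instance reports {"status": "ok", ...}
    return isinstance(payload, dict) and payload.get("status") == "ok"


print("Splash reachable:", is_splash_up())
```

The helper returns False instead of raising, so you can call it at the start of a scraping job and stop early when the container isn't running.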

Back in your Python project, enter the scrapy-splash folder and install the scrapy-splash library with the command below. It makes it easier to integrate Splash with Scrapy:

pip install scrapy-splash

To configure Scrapy to be able to connect to the Splash service, add the following lines to settings.py:

# set the Splash local server endpoint
SPLASH_URL = "http://localhost:8050"

# enable the Splash downloader middlewares and
# give them a higher priority than HttpCompressionMiddleware
DOWNLOADER_MIDDLEWARES = {
    "scrapy_splash.SplashCookiesMiddleware": 723,
    "scrapy_splash.SplashMiddleware": 725,
    "scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware": 810,
}

# enable the Splash deduplication argument filter to
# make Scrapy Splash save disk space on cached requests
SPIDER_MIDDLEWARES = {
    "scrapy_splash.SplashDeduplicateArgsMiddleware": 100,
}

# set the Splash deduplication class
DUPEFILTER_CLASS = "scrapy_splash.SplashAwareDupeFilter"
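The settings above rely on the Splash middlewares having lower numbers than HttpCompressionMiddleware (810). As a minimal sketch, the plain-Python check below flags a configuration where that ordering is broken. The splash_order_problems() helper is a hypothetical name for illustration and isn't part of Scrapy or scrapy-splash.

```python
# Same priorities as in the settings.py snippet above
DOWNLOADER_MIDDLEWARES = {
    "scrapy_splash.SplashCookiesMiddleware": 723,
    "scrapy_splash.SplashMiddleware": 725,
    "scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware": 810,
}

COMPRESSION = "scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware"


def splash_order_problems(middlewares):
    """List the Splash middlewares that don't come before HttpCompressionMiddleware."""
    compression_priority = middlewares.get(COMPRESSION)
    if compression_priority is None:
        return [f"{COMPRESSION} is not configured"]
    return [
        name
        for name, priority in middlewares.items()
        if name.startswith("scrapy_splash.") and priority >= compression_priority
    ]


# an empty list means the ordering matches the tutorial's configuration
print(splash_order_problems(DOWNLOADER_MIDDLEWARES))
```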

Well done! You're now fully equipped!

How to Scrape with Scrapy Splash

You can start scraping with Splash now. As a target URL, we'll use an infinite scrolling demo page that loads more products dynamically via JavaScript as the user scrolls down.

That's a good example of a dynamic-content page that requires JavaScript rendering for data extraction.

Initialize a Scrapy spider for the target page:

scrapy genspider scraping_club https://scrapingclub.com/exercise/list_infinite_scroll/

The /spiders folder will contain the scraping_club.py file below. That's what a basic Scrapy spider looks like.

import scrapy


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"
    allowed_domains = ["scrapingclub.com"]
    start_urls = ["https://scrapingclub.com/exercise/list_infinite_scroll/"]

    def parse(self, response):
        pass

Now, you need to import Scrapy Splash in that file by adding the following line on top:

from scrapy_splash import SplashRequest

Note: SplashRequest helps you instruct the script to perform HTTP requests through Splash.

Override the start_requests() method with a new function that uses SplashRequest and is responsible for returning the first page that the spider should visit. Scrapy will call it at the beginning of the scraper's execution.

def start_requests(self):
    url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
    yield SplashRequest(url, callback=self.parse)

ScrapingClubSpider will now visit pages in the browser instead of performing web requests through the built-in scrapy.Request object. As start_requests() replaces start_urls, you can remove the attribute from the class.

This is what the complete code of your new spider looks like:

import scrapy
from scrapy_splash import SplashRequest


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"
    allowed_domains = ["scrapingclub.com"]

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SplashRequest(url, callback=self.parse)

    def parse(self, response):
        # scraping logic...
        pass

All that remains is to implement the scraping logic in parse(). The snippet below selects all product card HTML elements with the css() function. Then, it iterates over them to extract the useful information and yields each product as a scraped item.

def parse(self, response):
    # iterate over the product elements
    for product in response.css(".post"):
        url = product.css("a").attrib["href"]
        image = product.css(".card-img-top").attrib["src"]
        name = product.css("h4 a::text").get()
        price = product.css("h5::text").get()

        # add scraped product data to the list
        # of scraped items
        yield {
            "url": url,
            "image": image,
            "name": name,
            "price": price
        }

The current script is close to a regular Scrapy script. The main difference is that SplashRequest can accept Lua scripts. Thanks to them, you can interact with pages that use JavaScript for rendering or getting data.

Use the lua_source argument to pass Lua code to SplashRequest. Also, instruct the library to execute it before accessing the HTML response. This should be the code of your full scraper:

import scrapy
from scrapy_splash import SplashRequest

lua_script = """
function main(splash, args)
  splash:go(args.url)

  -- custom rendering script logic...

  return splash:html()
end
"""


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"
    allowed_domains = ["scrapingclub.com"]

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SplashRequest(url, callback=self.parse, endpoint="execute", args={"lua_source": lua_script})

    def parse(self, response):
        # iterate over the product elements
        for product in response.css(".post"):
            url = product.css("a").attrib["href"]
            image = product.css(".card-img-top").attrib["src"]
            name = product.css("h4 a::text").get()
            price = product.css("h5::text").get()

            # add scraped product data to the list
            # of scraped items
            yield {
                "url": url,
                "image": image,
                "name": name,
                "price": price
            }

endpoint="execute" instructs Scrapy to make the request via the Splash execute endpoint, which runs the Lua script passed in lua_source. Here, the script just opens the page in the browser and then returns its rendered content.

Scrapy Splash takes care of passing the current HTML of the page to parse() through the response argument. That reflects the actions performed by the Lua script. In this method, you can extract data from the page, as explained in our tutorial on web scraping with Scrapy in Python.

Run the spider:

scrapy crawl scraping_club

And you'll see in the logs:

{'url': '/exercise/list_detail_infinite_scroll/90008-E/', 'image': '/static/img/90008-E.jpg', 'name': 'Short Dress', 'price': '$24.99'}

{'url': '/exercise/list_detail_infinite_scroll/96436-A/', 'image': '/static/img/96436-A.jpg', 'name': 'Patterned Slacks', 'price': '$29.99'}

# 6 other products omitted for brevity...

{'url': '/exercise/list_detail_infinite_scroll/93756-D/', 'image': '/static/img/93756-D.jpg', 'name': 'V-neck Top', 'price': '$24.99'}

{'url': '/exercise/list_detail_infinite_scroll/96643-A/', 'image': '/static/img/96643-A.jpg', 'name': 'Short Lace Dress', 'price': '$59.99'}

Your output will include only ten items because the page uses infinite scrolling to load data. However, you'll learn how to scrape all product cards in the next section of this Scrapy tutorial.
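Note that the url and image values in the logs are relative paths. As a minimal sketch, you could turn them into absolute URLs with the standard library, using the page URL as the base. The absolutize() helper is a hypothetical name for illustration; inside a spider, Scrapy's response.urljoin() does the same job.

```python
from urllib.parse import urljoin

PAGE_URL = "https://scrapingclub.com/exercise/list_infinite_scroll/"


def absolutize(item, base_url=PAGE_URL):
    """Return a copy of a scraped item with absolute "url" and "image" values."""
    fixed = dict(item)
    for key in ("url", "image"):
        fixed[key] = urljoin(base_url, item[key])
    return fixed


# first item from the logs above
item = {
    "url": "/exercise/list_detail_infinite_scroll/90008-E/",
    "image": "/static/img/90008-E.jpg",
    "name": "Short Dress",
    "price": "$24.99",
}
print(absolutize(item))
```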

Well done! The basics of Splash in Scrapy are no longer a secret!

Scrapy Splash Tutorial to Interact with Web Pages

Scrapy Splash allows you to control a headless browser, supporting many web page interactions, such as waiting for elements or moving your mouse. These help your script look like a human user to fool anti-bot technologies.

The interactions supported by Splash include:

- Scroll down or up the page.

- Click page elements or other mouse actions.

- Wait for page elements to load.

- Wait for the page to render.

- Fill out input fields and submit a form.

- Take screenshots.

- Stop image rendering or abort specific asynchronous requests.

You can achieve those operations using Splash parameters and Lua scripts (check out the Splash documentation for details if you need them at some point). Let's see how this feature will help you scrape all the products from the infinite scroll demo page and then explore other popular Splash Scrapy interactions!

Scrolling

The target page didn't return all products because it relies on infinite scrolling to load new data, and your spider hasn't implemented scrolling yet. That's a popular interaction, especially on mobile. It requires JavaScript, and base Scrapy doesn't allow you to deal with that, but Splash does!

Instruct the browser to perform infinite scrolling with Lua as below. Note that the script runs JavaScript code on the page through the jsfunc() function from the Splash API.

function main(splash, args)
  splash:go(args.url)

  local num_scrolls = 8
  local wait_after_scroll = 1.0

  local scroll_to = splash:jsfunc("window.scrollTo")
  local get_body_height = splash:jsfunc(
    "function() { return document.body.scrollHeight; }"
  )

  -- scroll to the end "num_scrolls" times
  for _ = 1, num_scrolls do
    scroll_to(0, get_body_height())
    splash:wait(wait_after_scroll)
  end

  return splash:html()
end

Use it in scraping_club.py to get all products. This should be your new complete code:

import scrapy
from scrapy_splash import SplashRequest

lua_script = """
function main(splash, args)
  splash:go(args.url)

  local num_scrolls = 8
  local wait_after_scroll = 1.0

  local scroll_to = splash:jsfunc("window.scrollTo")
  local get_body_height = splash:jsfunc(
    "function() { return document.body.scrollHeight; }"
  )

  -- scroll to the end "num_scrolls" times
  for _ = 1, num_scrolls do
    scroll_to(0, get_body_height())
    splash:wait(wait_after_scroll)
  end

  return splash:html()
end
"""


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"
    allowed_domains = ["scrapingclub.com"]

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SplashRequest(url, callback=self.parse, endpoint="execute", args={"lua_source": lua_script})

    def parse(self, response):
        # iterate over the product elements
        for product in response.css(".post"):
            url = product.css("a").attrib["href"]
            image = product.css(".card-img-top").attrib["src"]
            name = product.css("h4 a::text").get()
            price = product.css("h5::text").get()

            # add scraped product data to the list
            # of scraped items
            yield {
                "url": url,
                "image": image,
                "name": name,
                "price": price
            }

The response passed to parse() will now contain all 60 product elements in the HTML code instead of just the first ten as before.

Check that by running the spider again:

scrapy crawl scraping_club

This time, it'll log all 60 products:

{'url': '/exercise/list_detail_infinite_scroll/90008-E/', 'image': '/static/img/90008-E.jpg', 'name': 'Short Dress', 'price': '$24.99'}

{'url': '/exercise/list_detail_infinite_scroll/96436-A/', 'image': '/static/img/96436-A.jpg', 'name': 'Patterned Slacks', 'price': '$29.99'}

# 56 other products omitted for brevity...

{'url': '/exercise/list_detail_infinite_scroll/96771-A/', 'image': '/static/img/96771-A.jpg', 'name': 'T-shirt', 'price': '$6.99'}

{'url': '/exercise/list_detail_infinite_scroll/93086-B/', 'image': '/static/img/93086-B.jpg', 'name': 'Blazer', 'price': '$49.99'}


Awesome, mission complete! You just scraped all products from the page.
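The scroll count and the pause are hardcoded in the Lua script. As a sketch of one alternative, you could pass them as request arguments, relying on Splash exposing the values in args to the Lua script the same way it exposes args.url. The scroll_args() helper and the num_scrolls and wait_after_scroll argument names are assumptions made for illustration.

```python
# Lua script that reads its tuning values from the request arguments
SCROLL_SCRIPT = """
function main(splash, args)
  splash:go(args.url)

  local scroll_to = splash:jsfunc("window.scrollTo")
  local get_body_height = splash:jsfunc(
    "function() { return document.body.scrollHeight; }"
  )

  for _ = 1, args.num_scrolls do
    scroll_to(0, get_body_height())
    splash:wait(args.wait_after_scroll)
  end

  return splash:html()
end
"""


def scroll_args(num_scrolls=8, wait_after_scroll=1.0):
    """Build the args dictionary for a SplashRequest that scrolls the page."""
    if num_scrolls < 1:
        raise ValueError("num_scrolls must be at least 1")
    if wait_after_scroll <= 0:
        raise ValueError("wait_after_scroll must be positive")
    return {
        "lua_source": SCROLL_SCRIPT,
        "num_scrolls": num_scrolls,
        "wait_after_scroll": wait_after_scroll,
    }


print(sorted(scroll_args(num_scrolls=10, wait_after_scroll=0.5)))
```

In the spider, you would then pass args=scroll_args(10, 0.5) to SplashRequest together with endpoint="execute", which lets you tune the scrolling per page without editing the Lua code.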

Time to see other useful interactions in action.

Click Elements

Clicking an element on a page is useful to trigger AJAX calls or perform JavaScript functions. You can click on a .card-title a element in Lua the following way. The snippet gets an element with the select() function and then clicks it with mouse_click().

function main(splash, args)
  splash:go(args.url)

  -- click the element
  local a_element = splash:select(".card-title a")
  a_element:mouse_click()

  -- wait for a fixed amount of time
  splash:wait(splash.args.wait)

  return splash:html()
end

See it in action in the ScrapingClubSpider:

import scrapy
from scrapy_splash import SplashRequest

lua_script = """
function main(splash, args)
  splash:go(args.url)

  -- click the first product element
  local a_element = splash:select(".card-title a")
  a_element:mouse_click()

  -- give the new page some time to load
  splash:wait(1.0)

  return splash:html()
end
"""


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"
    allowed_domains = ["scrapingclub.com"]

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SplashRequest(url, callback=self.parse, endpoint="execute", args={"lua_source": lua_script})

    def parse(self, response):
        # new scraping logic...
        pass

The click will trigger a page change (you'll be accessing a new URL), so you'll have to change the scraping logic accordingly.

Wait for Element

Pages can retrieve data dynamically or render nodes in the browser, so the definitive DOM takes time to get built. So to avoid errors, wait for an element to be present on the page before interacting with it.

In our case, wait for the .post element to be on the page with Lua:

function main(splash, args)
  splash:go(args.url)

  while not splash:select(".post") do
    splash:wait(0.2)
    print("waiting...")
  end

  return { html=splash:html() }
end

Update the Scrapy Splash example spider as below to use this capability:

import scrapy
from scrapy_splash import SplashRequest

lua_script = """
function main(splash, args)
  splash:go(args.url)

  while not splash:select(".post") do
    splash:wait(0.2)
    print("waiting...")
  end

  return splash:html()
end
"""


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"
    allowed_domains = ["scrapingclub.com"]

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SplashRequest(url, callback=self.parse, endpoint="execute", args={"lua_source": lua_script})

    def parse(self, response):
        # iterate over the product elements
        for product in response.css(".post"):
            url = product.css("a").attrib["href"]
            image = product.css(".card-img-top").attrib["src"]
            name = product.css("h4 a::text").get()
            price = product.css("h5::text").get()

            # add scraped product data to the list
            # of scraped items
            yield {
                "url": url,
                "image": image,
                "name": name,
                "price": price
            }
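The while loop above keeps polling until the element appears, so a selector that never matches would only stop when Splash's own timeout kicks in. As a sketch of a bounded variant, the script below gives up after a maximum waiting time. The wait_for_args() helper and the selector, max_wait, and poll_interval argument names are assumptions made for illustration.

```python
# Lua script that polls for an element but stops after a time budget
WAIT_SCRIPT = """
function main(splash, args)
  splash:go(args.url)

  local waited = 0
  -- stop polling once the element shows up or the budget is spent
  while not splash:select(args.selector) and waited < args.max_wait do
    splash:wait(args.poll_interval)
    waited = waited + args.poll_interval
  end

  return splash:html()
end
"""


def wait_for_args(selector, max_wait=10.0, poll_interval=0.2):
    """Build the args dictionary for a SplashRequest with a bounded element wait."""
    if not selector:
        raise ValueError("selector must not be empty")
    if max_wait <= 0 or poll_interval <= 0:
        raise ValueError("max_wait and poll_interval must be positive")
    return {
        "lua_source": WAIT_SCRIPT,
        "selector": selector,
        "max_wait": max_wait,
        "poll_interval": poll_interval,
    }


print(wait_for_args(".post")["max_wait"])
```

Pass args=wait_for_args(".post") to SplashRequest with endpoint="execute". If the element never shows up, parse() simply receives a page without product cards instead of the request hanging.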

Wait for Time

Since dynamic-content web pages take time to load and render, you should wait for a few seconds before accessing their HTML content.

SplashRequest offers the wait argument for that:

yield SplashRequest(url, callback=self.parse, args={"wait": 2})

If you want to use it in combination with a Lua script, you need to manually call wait(). Note the use of args.wait.

function main(splash, args)
  splash:go(args.url)

  -- wait for the page to render
  splash:wait(args.wait)

  -- custom rendering script logic...

  return splash:html()
end

Run Your Own JavaScript Snippets

Splash also allows you to run custom JavaScript scripts on web pages. That's helpful for simulating user interactions such as clicking, filling out forms, or scrolling with an easier syntax than Lua.

scrapy-splash will execute the JS code after the server responds (including the wait time) but before the page gets rendered. That means you can use JavaScript code to update the content of the page before passing it to parse(). Also, this feature allows you to perform tasks that the limited Splash HTTP API can't do.

To use it, define the JavaScript logic in a string. The below snippet uses querySelectorAll() to get all product card price elements and update their content.

document.querySelectorAll(".post h5").forEach(e => e.innerHTML = e.innerHTML.replace("$", "€"))

Then, pass it to the js_source argument in place of lua_source. The library will now execute JS instead of Lua logic.

js_script = """
document.querySelectorAll(".post h5").forEach(e => e.innerHTML = e.innerHTML.replace("$", "€"))
"""

Here's the complete working code that changes the currency of the product prices from dollar to euro:

import scrapy
from scrapy_splash import SplashRequest

# replace usd with euro
js_script = """
document.querySelectorAll(".post h5").forEach(e => e.innerHTML = e.innerHTML.replace("$", "€"))
"""


class ScrapingClubSpider(scrapy.Spider):
    name = "scraping_club"
    allowed_domains = ["scrapingclub.com"]

    def start_requests(self):
        url = "https://scrapingclub.com/exercise/list_infinite_scroll/"
        yield SplashRequest(url, callback=self.parse, args={"js_source": js_script})

    def parse(self, response):
        # iterate over the product elements
        for product in response.css(".post"):
            url = product.css("a").attrib["href"]
            image = product.css(".card-img-top").attrib["src"]
            name = product.css("h4 a::text").get()
            price = product.css("h5::text").get()

            # add scraped product data to the list
            # of scraped items
            yield {
                "url": url,
                "image": image,
                "name": name,
                "price": price
            }

Verify this works as expected by launching the spider again, and the logs will include:

{'url': '/exercise/list_detail_infinite_scroll/90008-E/', 'image': '/static/img/90008-E.jpg', 'name': 'Short Dress', 'price': '€24.99'}

{'url': '/exercise/list_detail_infinite_scroll/96436-A/', 'image': '/static/img/96436-A.jpg', 'name': 'Patterned Slacks', 'price': '€29.99'}

# 6 other products omitted for brevity...

{'url': '/exercise/list_detail_infinite_scroll/93756-D/', 'image': '/static/img/93756-D.jpg', 'name': 'V-neck Top', 'price': '€24.99'}

{'url': '/exercise/list_detail_infinite_scroll/96643-A/', 'image': '/static/img/96643-A.jpg', 'name': 'Short Lace Dress', 'price': '€59.99'}

The currency of the products went from $ to €. Well done! You now know how to run both Lua and JavaScript scripts in Splash.

Avoid Getting Blocked with a Scrapy Splash Proxy

The biggest challenge when it comes to web scraping is getting blocked, because many sites protect access to their web pages with anti-bot techniques such as IP rate limiting and IP bans. One of the most effective ways to avoid them is to simulate different users with a web scraping proxy. Let's learn how to implement one for Scrapy Splash.

You can get a free proxy from providers like Free Proxy List and integrate it into SplashRequest through the proxy argument:

yield SplashRequest(url, callback=self.parse, args={"proxy": "http://107.1.93.217:80" })

Note that proxy URLs must be in the following format:

[<proxy_protocol>://][<proxy_user>:<proxy_password>@]<proxy_host>[:<proxy_port>]

Note: Free proxies typically don't have a username and password.
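To avoid formatting mistakes, you could assemble the proxy URL from its parts, as in the minimal sketch below. The build_proxy_url() helper is a hypothetical name for illustration. Percent-encoding the credentials is a common URL convention, and whether your proxy provider expects it is an assumption you should verify.

```python
from urllib.parse import quote


def build_proxy_url(host, port=None, protocol="http", user=None, password=None):
    """Assemble a proxy URL in the [protocol://][user:password@]host[:port] format."""
    credentials = ""
    if user is not None:
        # percent-encode special characters such as "@" and ":" in the credentials
        credentials = quote(user, safe="")
        if password is not None:
            credentials += ":" + quote(password, safe="")
        credentials += "@"
    port_part = f":{port}" if port is not None else ""
    return f"{protocol}://{credentials}{host}{port_part}"


# the free proxy used above has no credentials
print(build_proxy_url("107.1.93.217", 80))
```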

Keep in mind that free proxies are unreliable, short-lived, and error-prone. Let's be honest: you can't rely on them in a real-world scenario. Instead, you need a proxy rotator based on a list of residential IPs. Learn how to build it in our guide on Scrapy proxies.
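To give an idea of what rotation means, here's a minimal round-robin sketch. It's a simplification, not a production rotator: the addresses are placeholders from a documentation-only IP range, and the proxy_rotation() helper is a hypothetical name.

```python
from itertools import cycle

# placeholder addresses from the documentation range 203.0.113.0/24
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def proxy_rotation(proxies):
    """Return an endless iterator that goes through the proxies in round-robin order."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    return cycle(proxies)


rotation = proxy_rotation(PROXIES)
for _ in range(4):
    # the fourth pick wraps around to the first proxy
    print(next(rotation))
```

In a spider, you would call next(rotation) for each request and pass the result as the proxy value in the SplashRequest args.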

You might also be interested in checking out our guide on Scrapy User Agent.

Conclusion

In this Scrapy Splash tutorial for Python, you learned the fundamentals of using Splash in Scrapy. You started from the basics and dove into more advanced techniques to become an expert.

Now you know:

- How to set up a Scrapy Splash Python project.

- What interactions you can simulate with it.

- How to run custom JavaScript and Lua scripts in Scrapy.

- The challenges of web scraping with scrapy-splash.

Keep in mind that no matter how sophisticated your browser automation is, anti-bots can still detect you. But you can easily avoid that with ZenRows, a web scraping API with IP rotation, headless browser capabilities, and an advanced built-in bypass for anti-scraping measures. Scraping dynamic-content sites has never been easier. Try ZenRows for free!

Frequent Questions

What Is Scrapy Splash?

In the Scrapy world, Splash is a lightweight headless browser used to render sites. When integrated into Scrapy, spiders can render and interact with JavaScript-based websites. That allows you to get data from dynamic-content pages that can't be scraped with traditional parsing methods.

What Is Splash Used For?

Splash is usually used for web scraping tasks that need JavaScript execution. It can handle pages that use JavaScript for rendering or data retrieval purposes. Spiders can click buttons, follow links, and interact with elements through its API.

What Is the Maximum Timeout in Scrapy Splash?

By default, the timeout is 30 seconds, and the maximum timeout supported by Scrapy Splash is 90 seconds. When it gets set to more than 90 seconds, Splash returns a 400 error status code.

However, you can override that behavior by starting Splash with the --max-timeout command line option:

docker run -it -p 8050:8050 scrapinghub/splash --max-timeout 3600

That way, you can set a timeout up to 3600 seconds now. For instance, 1800 seconds:

yield scrapy_splash.SplashRequest(url, self.parse, args={"timeout": 1800})
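Since a timeout above the server limit ends in a 400 error, you might check the value before sending the request. The sketch below assumes the 90-second default limit mentioned above; validate_timeout() is a hypothetical helper, not part of scrapy-splash.

```python
# default limit in seconds, unless Splash was started with --max-timeout
DEFAULT_MAX_TIMEOUT = 90


def validate_timeout(timeout, max_timeout=DEFAULT_MAX_TIMEOUT):
    """Return the timeout if Splash should accept it, raise ValueError otherwise."""
    if timeout <= 0:
        raise ValueError("timeout must be positive")
    if timeout > max_timeout:
        raise ValueError(
            f"timeout {timeout}s exceeds the maximum of {max_timeout}s; "
            "start Splash with a larger --max-timeout value"
        )
    return timeout


# 1800 seconds is only valid when Splash runs with --max-timeout 3600
print(validate_timeout(1800, max_timeout=3600))
```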

How Do I Start Scrapy Splash?

After installing Docker and setting up Splash, start Scrapy Splash following these steps:

- Launch the Docker engine.

- Pull the Splash Docker image with the command line docker pull scrapinghub/splash.

- Start the Docker Splash image on port 8050 with docker run -it -p 8050:8050 --rm scrapinghub/splash (add sudo at the beginning on GNU/Linux).

- Integrate Scrapy Splash in your Scrapy project.

- Run the Scrapy spider performing requests through Splash with scrapy crawl <spider_name>.

How Do You Install Splash in Scrapy?

To install Splash in Scrapy, follow the steps below:

- Start the Splash local server on Docker.

- Add the scrapy-splash pip dependency to your Scrapy project with the command line pip install scrapy-splash.

- Configure the project to use the Scrapy Splash middleware and configurations by adding the below code to settings.py:

SPLASH_URL = "http://localhost:8050"

DOWNLOADER_MIDDLEWARES = {
    "scrapy_splash.SplashCookiesMiddleware": 723,
    "scrapy_splash.SplashMiddleware": 725,
    "scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware": 810,
}

SPIDER_MIDDLEWARES = {
    "scrapy_splash.SplashDeduplicateArgsMiddleware": 100,
}

DUPEFILTER_CLASS = "scrapy_splash.SplashAwareDupeFilter"
