Web crawling is a technique that refers to visiting pages and discovering URLs on a site. When used in a Python web scraping app, it enables the collection of large amounts of data from many pages. In this tutorial, you'll learn how to build a Python web crawler through step-by-step examples.

What Is a Web Crawler in Python?

A Python web crawler is an automated program that browses a website or the internet in search of web pages. It's a Python script that explores pages, discovers links, and follows them to increase the data you can extract from relevant websites.

Search engines rely on crawling bots to build and maintain their index of pages, while web scrapers use crawling to find and visit all the pages they need to extract data from.

To better understand how a web crawling Python script works, let's consider an example:

Assume that you want to get all products related to a search query on Amazon, the world's most popular e-commerce site. It'll return the results in a paginated list, so your script will have to visit several pages to retrieve all the information of interest. Here's where crawling comes in!

First, the crawler sends a request to the search results page with the input query. Then, it parses the HTML document returned by the server and runs the scraping task. For each product, it gets the name, price, and rating.

Next, it focuses on the pagination element, which contains the links to the following pages. It extracts the URLs from the links and adds those related to pages not yet visited to a queue.

Then, it repeats the process. It gets a new URL from the queue, adds it to the list of visited pages, sends the request, parses the HTML, and extracts data. This continues until there are no more pages or the script hits a predefined limit.
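The loop described above can be sketched without any network access. This is a minimal illustration, not the crawler built later in this tutorial: `FAKE_SITE`, `crawl()`, and the paths in it are hypothetical stand-ins for real pages and real link extraction.

```python
from collections import deque

# Hypothetical link graph standing in for real pages: URL -> links found on it.
FAKE_SITE = {
    "/search?page=1": ["/search?page=2", "/product/a"],
    "/search?page=2": ["/search?page=1", "/search?page=3", "/product/b"],
    "/search?page=3": ["/search?page=2"],
    "/product/a": [],
    "/product/b": [],
}

def crawl(start_url, get_links, max_pages=10):
    """Visit pages reachable from start_url, each at most once."""
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()        # get a new URL from the queue
        visited.append(url)          # add it to the list of visited pages
        for link in get_links(url):  # stands in for "request, parse, extract links"
            if link not in visited and link not in queue:
                queue.append(link)   # only queue pages not yet seen
    return visited

order = crawl("/search?page=1", lambda url: FAKE_SITE.get(url, []))
```

The loop stops either when the queue is empty or when `max_pages` is reached, which mirrors the two exit conditions mentioned above.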

As shown in this example, scraping and crawling are connected, and one is part of the other. To learn more, read our detailed comparison of web crawling vs. web scraping.

Build Your First Python Web Crawler

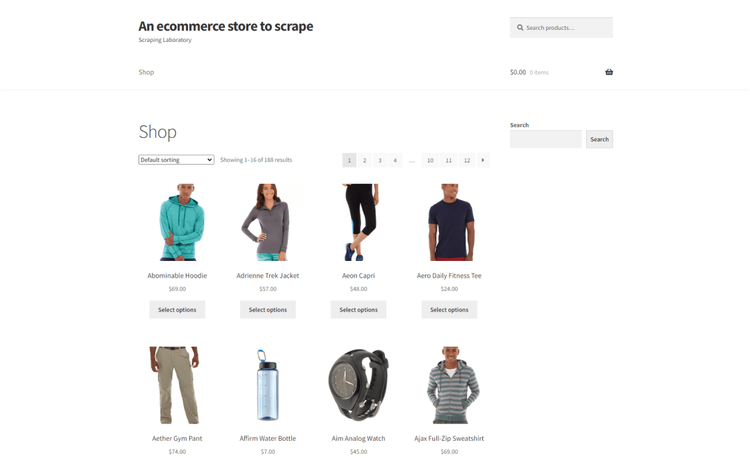

We'll use ScrapingCourse, an e-commerce site with a paginated list of products, as the target website.

We'll build a Python crawler to visit every page and retrieve the information from each product.

Let's dive in!

Prerequisites

Before getting started, you'll need:

- Python 3+: Download the installer, double-click it, and follow the installation wizard.

- A Python IDE: Visual Studio Code with the Python extension or PyCharm Community Edition will do.

Run the commands below to initialize a Python project called web-crawler:

mkdir web-crawler

cd web-crawler

python -m venv env

To do web crawling, you'll need a library to perform HTTP requests and an HTML parser. The two most popular Python packages for that are:

- Requests: A powerful HTTP client library that facilitates the execution of HTTP requests and handles their responses.

- Beautiful Soup: A full-featured HTML and XML parser that exposes a complete API to explore the DOM, select HTML elements, and retrieve data from them.

Install them both:

pip install beautifulsoup4 requests

In the project folder, create a crawler.py file and import the project dependencies:

import requests

from bs4 import BeautifulSoup

# crawling logic...

The script is now ready for the crawling logic.

Launch the command below to run it:

python crawler.py

Great, you're fully set up! Time to learn how to implement the crawling logic!

Initial Crawling Script

In this section, you'll see how to build a basic Python web crawler script step by step.

To get started, use requests to download the first page. Behind the scenes, the get() method performs an HTTP GET request to the specified URL.

response = requests.get("https://www.scrapingcourse.com/ecommerce/")

response.content will now contain the HTML document produced by the server. Feed it to BeautifulSoup. The "html.parser" option specifies the parser the library will use.

soup = BeautifulSoup(response.content, "html.parser")

Select all HTML link elements on the page. select() applies a CSS selector strategy, returning all <a> elements with an href attribute. That's how you can identify links in an HTML document.

link_elements = soup.select("a[href]")

Populate a list with the shop URLs extracted from the link elements. Use an if condition to avoid empty and external URLs.

urls = []

for link_element in link_elements:
    url = link_element['href']
    if "https://www.scrapingcourse.com/ecommerce/" in url:
        urls.append(url)
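The check above only keeps href values that already contain the full shop URL. Pages may also use relative links such as `page/2/`, so a slightly more defensive filter resolves every href against the current page first. The sketch below uses only the standard library. `resolve_shop_links()` is a hypothetical helper, and the sample hrefs in the usage note are made up for illustration.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.scrapingcourse.com/ecommerce/"

def resolve_shop_links(page_url, hrefs):
    """Turn raw href values into absolute shop URLs, dropping everything else."""
    shop_urls = []
    for href in hrefs:
        # skip empty values and in-page anchors
        if not href or href.startswith("#"):
            continue
        # resolve relative links against the page they were found on
        absolute = urljoin(page_url, href)
        # keep only URLs that live under the shop
        if absolute.startswith(BASE_URL):
            shop_urls.append(absolute)
    return shop_urls
```

For example, `resolve_shop_links(BASE_URL, ["page/2/", "#top", "https://example.com/"])` keeps only the first entry, resolved to an absolute URL.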

Extend the logic by repeating the same procedure for each new page. The script below keeps crawling the site as long as there are shop pages left to visit. URLs discovered here include pagination and product pages.

import requests
from bs4 import BeautifulSoup

# initialize the list of discovered urls
# with the first page to visit
urls = ["https://www.scrapingcourse.com/ecommerce/"]

# until all pages have been visited
while len(urls) != 0:
    # get the page to visit from the list
    current_url = urls.pop()

    # crawling logic
    response = requests.get(current_url)
    soup = BeautifulSoup(response.content, "html.parser")

    link_elements = soup.select("a[href]")
    for link_element in link_elements:
        url = link_element['href']
        if "https://www.scrapingcourse.com/ecommerce/" in url:
            urls.append(url)

Given a product URL, you can extend the logic to perform web scraping. This way, you're able to extract product data and store it in a dictionary while crawling. We've used CSS Selectors.

product = {}
product["url"] = current_url
product["image"] = soup.select_one(".wp-post-image")["src"]
# .text is a property in Beautiful Soup, not a method
product["title"] = soup.select_one(".product_title").text
# product["price"] = ...

Then, you can initialize a list and store each scraped product in it:

products.append(product)

Fantastic! The crawling logic is now in place! Before looking at the full code, let's export the data to an external format.

Extract Data into CSV

After crawling the site and retrieving data from it, you can export the scraped information to CSV.

To do that, import csv at the top of your file:

import csv

Then, add these instructions to export to CSV:

# newline="" prevents blank rows between records on Windows
with open('products.csv', 'w', newline='') as csv_file:
    writer = csv.writer(csv_file)

    # populating the CSV
    for product in products:
        writer.writerow(product.values())
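The snippet above writes rows without a header line. As an alternative sketch, `csv.DictWriter` can add a header row and map dictionary keys to columns explicitly. The `write_products()` helper and the sample record are assumptions for illustration. The in-memory buffer stands in for the `products.csv` file.

```python
import csv
import io

def write_products(products, csv_file):
    """Write product dictionaries to csv_file with a header row."""
    fieldnames = ["url", "image", "name", "price"]
    writer = csv.DictWriter(csv_file, fieldnames=fieldnames)
    writer.writeheader()
    for product in products:
        writer.writerow(product)

# made-up record, written to an in-memory buffer instead of a real file
sample_products = [
    {
        "url": "https://example.com/p/1",
        "image": "https://example.com/1.jpg",
        "name": "Sample",
        "price": "$10.00",
    }
]
buffer = io.StringIO()
write_products(sample_products, buffer)
```

To write to disk instead, pass a file opened with `open("products.csv", "w", newline="")`.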

Here's what the complete web spider Python script looks like:

import requests
from bs4 import BeautifulSoup
import csv

# initialize the data structure where to
# store the scraped data
products = []

# initialize the list of discovered urls
# with the first page to visit
urls = ["https://www.scrapingcourse.com/ecommerce/"]

# until all pages have been visited
while len(urls) != 0:
    # get the page to visit from the list
    current_url = urls.pop()

    # crawling logic
    response = requests.get(current_url)
    soup = BeautifulSoup(response.content, "html.parser")

    link_elements = soup.select("a[href]")
    for link_element in link_elements:
        url = link_element["href"]
        if "https://www.scrapingcourse.com/ecommerce/" in url:
            urls.append(url)

    # if current_url is a product page
    # (assumed here to be any page with a .product_title element)
    title_element = soup.select_one(".product_title")
    if title_element is not None:
        product = {}
        product["url"] = current_url
        product["image"] = soup.select_one(".wp-post-image")["src"]
        product["name"] = title_element.text
        product["price"] = soup.select_one(".price").text
        products.append(product)

# initialize the CSV output file
with open('products.csv', 'w', newline='') as csv_file:
    writer = csv.writer(csv_file)

    # populating the CSV
    for product in products:
        writer.writerow(product.values())

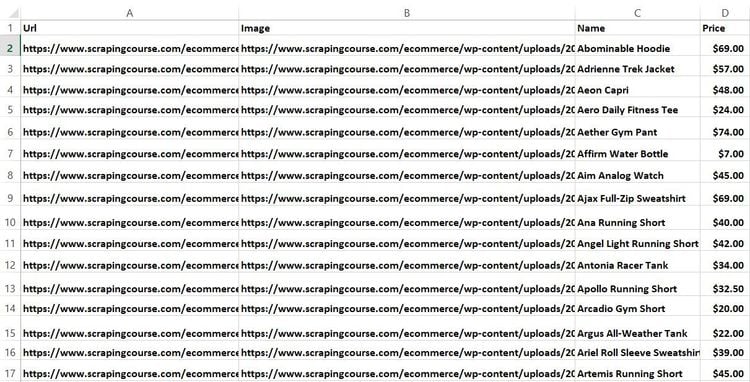

Run the script, and the products.csv file will appear in your project's folder:

Congratulations, you just learned how to build a basic web crawler!

Yet, there is still work to do to prepare your script for production. Let's see that next!

Transitioning to a Real-World Web Crawler in Python

The Python web crawler built above does its job, but comes with several limitations:

- Duplicate URLs: As there are no controls, it can visit the same page multiple times. This leads to duplicated data, as well as unnecessary network requests and processing.

- Limited to a single domain: It can't crawl pages across other domains or subdomains.

- No priority: It follows a basic depth-first search approach and doesn't prioritize the most important URLs in any particular way.

- Sequential crawling: It crawls just one page at a time, taking a lot of time to complete the task.

- Lack of frequency control: It doesn't respect the crawl-delay instruction from robots.txt or wait some time before revisiting pages. That can result in excessive requests to the target server and IP blocks.

You need to address those drawbacks to make the current crawling procedure scalable and production-ready. There are many strategies you can implement into your script to improve it, but here we'll focus only on the most important ones. Additionally, you'll find a complete list of best practices later in this article.

To prevent crawling the same page twice, you need a list to keep track of the URLs already visited. So before adding a URL to urls, make sure it isn't in that list. And since a page can contain the same link several times, you also need to check it isn't already in urls.

The Python crawler snippet below now goes through each page only once. Because pop() takes the last item of the list, it still visits the most recently discovered URLs first.

urls = ["https://www.scrapingcourse.com/ecommerce/"]

# to store the pages already visited
visited_urls = []

while len(urls) != 0:
    current_url = urls.pop()

    response = requests.get(current_url)
    soup = BeautifulSoup(response.content, "html.parser")

    # mark the current URL as visited
    visited_urls.append(current_url)

    link_elements = soup.select("a[href]")
    for link_element in link_elements:
        url = link_element['href']
        if "https://www.scrapingcourse.com/ecommerce/" in url:
            # if the URL discovered is new
            if url not in visited_urls and url not in urls:
                urls.append(url)
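Checking membership in a list gets slower as the list grows, because Python scans it item by item. A common variation, sketched below, keeps a single set of every URL ever queued or visited, so the duplicate check stays fast. `enqueue_new_urls()` and the sample URLs are hypothetical names and values used only for this illustration.

```python
def enqueue_new_urls(found_urls, pending, seen):
    """Append unseen URLs to pending; seen holds every URL queued or visited so far."""
    for url in found_urls:
        if url not in seen:
            seen.add(url)
            pending.append(url)

pending = ["https://www.scrapingcourse.com/ecommerce/"]
seen = set(pending)

# the same link appears twice on the page, and the start URL links to itself
enqueue_new_urls(
    [
        "https://www.scrapingcourse.com/ecommerce/page/2/",
        "https://www.scrapingcourse.com/ecommerce/page/2/",
        "https://www.scrapingcourse.com/ecommerce/",
    ],
    pending,
    seen,
)
```

After the call, `pending` holds the start URL and the pagination URL exactly once each.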

However, this may not be the best approach, especially on a paginated website. The script should first prioritize the pagination pages, discover all product URLs, and tackle them. To do so, use a PriorityQueue instead of a simple list to keep track of the URLs to visit.

Assign a lower priority value to pagination pages to instruct the script to crawl them first. A low value means a high priority, thus the get() method will return the lowest-valued entries first.
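To see that ordering in isolation, here is a small sketch that only fills and drains a `PriorityQueue`, with no requests involved. The product URLs are made-up examples. Entries with the same priority come out in alphabetical order because Python compares the tuples element by element.

```python
import queue

urls = queue.PriorityQueue()

# product pages get the default (lower-priority) score of 1
urls.put((1, "https://www.scrapingcourse.com/ecommerce/product/example-a/"))
# pagination pages get 0.5, so they are returned first
urls.put((0.5, "https://www.scrapingcourse.com/ecommerce/page/3/"))
urls.put((1, "https://www.scrapingcourse.com/ecommerce/product/example-b/"))
urls.put((0.5, "https://www.scrapingcourse.com/ecommerce/page/2/"))

crawl_order = []
while not urls.empty():
    # ignore the priority value, keep the URL
    _, url = urls.get()
    crawl_order.append(url)
```

Here `crawl_order` starts with the two pagination pages, followed by the two product pages.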

If you crawl the entire site, you'll have all the page URLs in visited_urls. Consider turning that list into a priority queue by assigning a score to every visited page to make it easier to choose which ones to revisit first and how often.

import requests
from bs4 import BeautifulSoup
import queue
import re

urls = queue.PriorityQueue()
urls.put((0.5, "https://www.scrapingcourse.com/ecommerce/"))

visited_urls = []

while not urls.empty():
    # ignore the priority value
    _, current_url = urls.get()

    # crawling logic
    response = requests.get(current_url)
    soup = BeautifulSoup(response.content, "html.parser")

    visited_urls.append(current_url)

    link_elements = soup.select("a[href]")
    for link_element in link_elements:
        url = link_element['href']

        if "https://www.scrapingcourse.com/ecommerce/" in url:
            if url not in visited_urls and url not in [item[1] for item in urls.queue]:
                # default priority score
                priority_score = 1
                # if the discovered URL refers to a pagination page
                # (the pattern includes "www." to match the URLs used on the site)
                if re.match(r"^https://www\.scrapingcourse\.com/ecommerce/page/\d+/?$", url):
                    priority_score = 0.5
                urls.put((priority_score, url))

Prevent the script from running forever with some limits:

while not urls.empty() and len(visited_urls) < 50:
    # ...

Note that the spider is still firing requests to the target site nonstop. Add a delay to avoid overloading the server or getting your IP banned. Use time.sleep() at the end of the while loop to pause execution for a specified time interval. Randomize the pause interval to make the automated program harder to detect as a bot.

import time

# ...

while not urls.empty():
    # link discovery logic...

    # delay for 1 second before making the next request
    time.sleep(1)

The Python script for the web crawler is now smarter and much more effective. Yet, it's still limited to a single domain. Use separate queues to extend the crawling logic to a set of related domains or subdomains of interest.

If you want to allow subdomains, use a regex instead of the in check in the if condition. Here's what an improved version of the original crawler looks like.

import requests
from bs4 import BeautifulSoup
import queue
import re
import time
import random

# to store the URLs discovered to visit
# in a specific order
urls = queue.PriorityQueue()
# high priority
urls.put((0.5, "https://www.scrapingcourse.com/ecommerce/"))

# to store the pages already visited
visited_urls = []

# until all pages have been visited
while not urls.empty():
    # get the page to visit from the list
    _, current_url = urls.get()

    # crawling logic
    response = requests.get(current_url)
    soup = BeautifulSoup(response.content, "html.parser")

    visited_urls.append(current_url)

    link_elements = soup.select("a[href]")
    for link_element in link_elements:
        url = link_element['href']

        # if the URL belongs to scrapingcourse.com or
        # any of its subdomains
        if re.match(r"https://(?:.*\.)?scrapingcourse\.com", url):
            # if the URL discovered is new
            if url not in visited_urls and url not in [item[1] for item in urls.queue]:
                # low priority
                priority_score = 1
                # if it is a pagination page
                # (the pattern includes "www." to match the URLs used on the site)
                if re.match(r"^https://www\.scrapingcourse\.com/ecommerce/page/\d+/?$", url):
                    # high priority
                    priority_score = 0.5
                urls.put((priority_score, url))

    # pause the script for a random delay
    # between 1 and 3 seconds
    time.sleep(random.uniform(1, 3))
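One caveat about the domain regex: because `.*` can match slashes, the pattern also accepts URLs on other hosts that merely contain the text `.scrapingcourse.com` somewhere after `https://`. A stricter alternative, sketched here with the standard library, compares the parsed hostname instead. `is_on_domain()` is a hypothetical helper, not part of the original script.

```python
from urllib.parse import urlparse

def is_on_domain(url, domain="scrapingcourse.com"):
    """Return True if url's host is the domain itself or one of its subdomains."""
    # hostname is None for relative URLs, so fall back to an empty string
    host = urlparse(url).hostname or ""
    return host == domain or host.endswith("." + domain)
```

You could call `is_on_domain(url)` in place of the `re.match()` domain check inside the loop.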

Fantastic, you built a more professional web crawler in Python!

Keep in mind, though, that this is only an example. To deal with more complex scenarios and large-scale crawling activities, there's still much to do, like implementing measures to avoid getting blocked.

Let's now focus on the elements that can help you take your Python crawl process to the next level!

Getting Blocked When Web Crawling in Python

The biggest challenge when it comes to web crawling in Python is getting blocked. Many sites protect their access with anti-bot measures, which can identify and stop automated applications, preventing them from accessing pages.

Here are some recommendations to overcome anti-scraping technologies:

- Rotate User-Agent: Continuously changing the User-Agent header in requests helps mimic different web browsers and avoid detection as a bot. Learn how to set User-Agents in Python Requests.

- Run during off-peak hours: Launching the crawler during off-peak hours and incorporating delays between requests helps prevent overwhelming the site's server and triggering blocking mechanisms.

- Respect robots.txt: Following the website's robots.txt directives demonstrates ethical crawling practices. It also helps you avoid visiting restricted areas, which would make your script's requests look suspicious. See our guide on how to read robots.txt for web scraping.

- Avoid honeytraps: Not all links are the same, and some hide traps for bots. By following them, you'll be marked as a bot. Find out more about what a honeypot is and how to avoid it.
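As an illustration of the robots.txt recommendation, Python's standard library includes `urllib.robotparser`. The sketch below parses a made-up robots.txt from a string so it runs without network access. The rules, the `example.com` URLs, and the `my-crawler` user agent name are all assumptions for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, not taken from any real site.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 2
Disallow: /private/
"""

USER_AGENT = "my-crawler"

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())

# check whether a URL may be crawled before requesting it
allowed = parser.can_fetch(USER_AGENT, "https://example.com/shop/")
blocked = parser.can_fetch(USER_AGENT, "https://example.com/private/data")

# seconds to wait between requests, or None if the file sets no crawl delay
delay = parser.crawl_delay(USER_AGENT)
```

Against a live site, you would call `parser.set_url()` with the robots.txt address and then `parser.read()` instead of `parse()`.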

These tips work for simple scenarios but won't be enough for more complex ones. Check out our complete guide on web scraping without getting blocked.

Bypassing all defense measures isn't easy and requires a lot of effort. Plus, a solution that works today may stop working tomorrow. But wait, there's a better solution! ZenRows is a complete scraping API with built-in anti-bot bypass capabilities. Sign up to try it for free now!

Web Crawling Tools for Python

There are several useful web crawling tools to make the process of discovering links and visiting pages easier. Here's a list of the best Python web crawling tools that can assist you:

- ZenRows: A comprehensive scraping and crawling API. It offers rotating proxies, geo-localization, JavaScript rendering and advanced anti-blocking bypass.

- Scrapy: One of the most powerful Python crawling libraries, and still approachable for beginners. It provides a high-level framework for building scalable and efficient crawlers.

- Selenium: A popular headless browser library for web scraping and crawling. Unlike BeautifulSoup, it can interact with web pages in a browser like human users would.

Best Web Crawling Practices in Python and Considerations

The Python crawling best practices below will help you build a robust script that can go through any site:

Crawling JavaScript-Rendered Web Pages in Python

Pages that rely on JavaScript for rendering or data retrieval represent a challenge for scraping and crawling. The reason is that traditional libraries like BeautifulSoup can't help with them.

To perform web scraping on JavaScript-rendered pages in Python, you need other tools. An example is Selenium, a library to control web browsers programmatically. This allows you even to crawl React apps.

Parallel Scraping in Python with Concurrency

Right now, the crawler deals with one page at a time. After sending an HTTP request, it stays idle, waiting for the server to respond. This leads to inefficiencies and slows down the crawling time.

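You can tackle this by crawling several pages in parallel with threads. However, threads that read and write the same data at the same time can run into conflicts such as race conditions. How do you avoid them? Since all threads share the urls list, you have to make sure that only one thread at a time can access it through synchronization or locking. In other words, you need to make urls thread-safe.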

Luckily, Python [queues](https://docs.python.org/3/library/queue.html) are thread-safe. Thus, threads can read and write their information simultaneously without any problems. If one is using the resource, the others will have to wait for it to release it.

Make visited_urls a queue and create a worker using the threading module. Note that you need to define a new function to handle the queued items.

import requests
from bs4 import BeautifulSoup
import queue
import re
from threading import Thread

def queue_worker(i, urls, visited_urls):
    while not urls.empty() and visited_urls.qsize() < 50:
        # take the next URL; each get() must be matched by one task_done()
        _, current_url = urls.get()

        # crawling logic...

        visited_urls.put(current_url)
        urls.task_done()

urls = queue.PriorityQueue()
urls.put((0.5, "https://www.scrapingcourse.com/ecommerce/"))

visited_urls = queue.Queue()

num_workers = 4
for i in range(num_workers):
    Thread(target=queue_worker, args=(i, urls, visited_urls), daemon=True).start()

urls.join()

Note: Be careful when running it since big numbers in num_workers would start lots of requests. If the script had some minor bug for any reason, you could perform hundreds of requests in a few seconds.
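To experiment with the worker pattern safely, you can run it against an in-memory link graph instead of a real site. The following is a minimal sketch under that assumption: `FAKE_SITE` and `crawl_concurrently()` are hypothetical, and `get_links` stands in for the request-and-parse step. Unlike a loop that polls `empty()`, these workers block on `get()`, and a lock guards the shared set of known URLs.

```python
import queue
import threading

# Hypothetical in-memory site: URL -> links found on that page.
FAKE_SITE = {
    "/": ["/page/2/", "/product/a/"],
    "/page/2/": ["/", "/product/b/"],
    "/product/a/": ["/"],
    "/product/b/": ["/"],
}

def crawl_concurrently(start_url, get_links, num_workers=4):
    pending = queue.Queue()
    seen = {start_url}   # URLs queued so far, guarded by the lock
    visited = []         # URLs fully processed, guarded by the lock
    lock = threading.Lock()
    pending.put(start_url)

    def worker():
        while True:
            url = pending.get()  # blocks until a URL is available
            try:
                for link in get_links(url):
                    with lock:
                        if link in seen:
                            continue
                        seen.add(link)
                    pending.put(link)
                with lock:
                    visited.append(url)
            finally:
                pending.task_done()  # exactly one call per get()

    for _ in range(num_workers):
        threading.Thread(target=worker, daemon=True).start()

    pending.join()  # returns once every queued URL has been processed
    return visited

visited_pages = crawl_concurrently("/", lambda url: FAKE_SITE.get(url, []))
```

Each page is processed exactly once, although the order in `visited_pages` can vary between runs because the threads are scheduled independently.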

Take a look at the benchmarks below to verify the performance results:

- Sequential requests: 29.32s.

- Queue with one worker (num_workers = 1): 29.41s.

- Queue with two workers (num_workers = 2): 20.05s.

- Queue with five workers (num_workers = 5): 11.97s.

- Queue with ten workers (num_workers = 10): 12.02s.

There's almost no difference between sequential requests and having one worker. But after adding multiple workers, that overhead pays off. You could add even more threads, and that won't affect the outcome since they'll be idle most of the time. Find out why in our tutorial on web scraping with concurrency in Python.

Distributed Web Scraping in Python

Distributing the crawling process among several servers for maximum scalability is complex. Python allows it, and some libraries can help you with it (Celery or Redis Queue). It's a huge step, and you can find the details in our guide on distributed web crawling.

As a quick preview, the idea behind it is the same as the one with the threads. Each page will be processed in different threads or even machines running the same code.

With this approach, you can scale even further. Theoretically, there's no limit, but in practice, there's always a bottleneck. Generally, it's the central node that handles the distribution logic.

Separation of Concerns for Easier Debugging

Separate concerns to prevent the code of the script from becoming highly coupled. You can create three functions: get_html(), crawl_page(), and scrape_page(). As their names imply, each of them will perform only one main task.

get_html() uses requests to get the HTML from a URL and wraps the logic in a try block for robustness:

def get_html(url):
    try:
        return requests.get(url).content
    except Exception as e:
        print(e)
        return ''

crawl_page() takes care of the link discovery part:

def crawl_page(soup, url, visited_urls, urls):
    link_elements = soup.select("a[href]")
    for link_element in link_elements:
        url = link_element['href']

        if re.match(r"https://(?:.*\.)?scrapingcourse\.com", url):
            if url not in visited_urls and url not in [item[1] for item in urls.queue]:
                priority_score = 1
                # pagination pages get a higher priority
                if re.match(r"^https://www\.scrapingcourse\.com/ecommerce/page/\d+/?$", url):
                    priority_score = 0.5
                urls.put((priority_score, url))

scrape_page() extracts the product data:

def scrape_page(soup, url, products):
    product = {}
    product["url"] = url
    product["title"] = soup.select_one(".product_title").text
    # product["price"] = ...

    products.append(product)

Assembling it all together:

import requests
from bs4 import BeautifulSoup
import queue
import re
import time
import random

# get_html(), crawl_page(), and scrape_page() as defined above

urls = queue.PriorityQueue()
urls.put((0.5, "https://www.scrapingcourse.com/ecommerce/"))

visited_urls = []
products = []

while not urls.empty():
    _, current_url = urls.get()

    soup = BeautifulSoup(get_html(current_url), "html.parser")

    visited_urls.append(current_url)
    crawl_page(soup, current_url, visited_urls, urls)

    # if it is a product page:
    # scrape_page(soup, current_url, products)

    time.sleep(random.uniform(1, 3))

The resulting script is way more elegant! Each of the helpers handles a single piece and could be moved to different files.

Persistency

Not persisting any data isn't good for scalability. In a real-world scenario, you should store:

- The discovered URLs and their timestamps.

- The scraped content.

- The HTML documents for later processing.

You should export this information to files and/or store it in a database.
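As one possible way to persist that information, the sketch below stores each URL, its discovery timestamp, and its HTML in SQLite through the standard library. The table layout and the `open_store()` and `save_page()` helpers are assumptions for illustration. The in-memory database stands in for a file on disk.

```python
import sqlite3
from datetime import datetime, timezone

def open_store(path=":memory:"):
    """Open (or create) the database and make sure the pages table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS pages ("
        "url TEXT PRIMARY KEY, discovered_at TEXT NOT NULL, html TEXT)"
    )
    return conn

def save_page(conn, url, html):
    """Insert the page, or overwrite the stored copy if the URL already exists."""
    conn.execute(
        "INSERT OR REPLACE INTO pages (url, discovered_at, html) VALUES (?, ?, ?)",
        (url, datetime.now(timezone.utc).isoformat(), html),
    )
    conn.commit()

conn = open_store()
save_page(conn, "https://www.scrapingcourse.com/ecommerce/", "<html>...</html>")
```

Passing a file path such as `"crawl.db"` to `open_store()` keeps the data between runs, so an interrupted crawl can later resume from what was stored.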

Canonicals to Avoid Duplicate URLs

The link extraction section doesn't take into consideration canonical links. These enable website owners to define different pages as the same one and are usually specified for SEO and followed by search engines.

The right approach would be to add the canonical URL (if present) to the visited list. When arriving at that same page from a different origin URL, you'll then be able to detect it as a duplicate.

Similarly, a page can have more than one URL: query strings or hashes might modify it. With the approach presented here, you'd crawl it twice. Avoid that by removing query parameters using url_query_cleaner from the w3lib library.
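If you'd rather avoid an extra dependency, a rough equivalent can be sketched with the standard library. `strip_query_and_fragment()` is a hypothetical helper that removes every query parameter and the fragment. That is only safe when the query string doesn't select different content, such as a page number.

```python
from urllib.parse import urlsplit, urlunsplit

def strip_query_and_fragment(url):
    """Return url without its query string and fragment."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))

cleaned = strip_query_and_fragment(
    "https://www.scrapingcourse.com/ecommerce/?orderby=price#top"
)
```

Applying the function before the visited check makes both variants of the URL map to the same entry.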

Conclusion

In this guide, you learned the fundamentals of web crawling. You started from the basics and dove into more advanced topics to become a Python crawling expert!

Now you know:

- What a web crawler is.

- How to build a crawler in Python.

- What to do to make your script production-ready.

- What the best Python crawling libraries are.

- The Python web crawling best practices and advanced techniques.

Regardless of how smart your crawler is, anti-bot measures can detect and block it. But you can get rid of any challenges with ZenRows, a web scraping API with rotating premium proxies, JavaScript rendering, and many other must-have features to avoid getting blocked. Crawling has never been easier!

Frequent Questions

How Do I Create a Web Crawler in Python?

To create a web crawler in Python, start by defining the initial URL and maintain a set of visited URLs. Use libraries such as Requests or Scrapy in Python to send HTTP requests and retrieve HTML content to then extract relevant information from the HTML. Repeat the process by following links discovered within the pages.

Can Python Be Used for a Web Crawler?

Yes, Python is widely used for web crawling thanks to its rich ecosystem of libraries and tools. It offers libraries like Requests, Beautiful Soup, and Scrapy. These make it easier to send HTTP requests, parse HTML content, and handle data extraction.

What Is a Web Crawler Used for?

A web crawler can be used to systematically browse and collect info from sites. They automate the process of fetching web pages and following links to discover new web content. Crawlers are popular for web indexing, content aggregation, and URL discovery.

How Do You Crawl Data from a Website in Python?

To crawl data from a website in Python, send an HTTP request to the desired URL using a library like Requests. Retrieve the HTML response and analyze the page structure. Then, parse the HTML with a library such as BeautifulSoup and apply data extraction techniques such as CSS selectors or XPath expressions to find and extract the desired elements.

What Are the Different Ways to Crawl Web Data in Python?

There are several ways to crawl web data in Python. These depend on the complexity of the target site and the size of the project. For basic crawling and scraping tasks, use libraries like Requests and Beautiful Soup. For more advanced functionality and flexibility, you might prefer complete crawling frameworks like Scrapy. And to reduce a lot of the complexity to a single API call, try ZenRows.
