Have you ever attempted web scraping with the Python Requests library only to be blocked by your target website? You're not alone!

The User Agent (UA) string is one of the most important signals websites use to detect bots. It works like a fingerprint that identifies the client, so an unusual or default one easily gives you away.

However, you can fly under the radar and retrieve the data you want by randomizing the User Agent in Python Requests. You'll learn how to do that at scale in this tutorial.

What Is the User Agent in Python Requests

The User Agent is a key component of the HTTP headers sent along with every HTTP request.

These headers contain information the server uses to tailor its response, such as the preferred content format and language. The UA header, in particular, tells the web server which browser and operating system are making the request, among other details.

For example, a Google Chrome browser may have the following string:

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36

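Here's what each part tells the server:

- Mozilla/5.0: a legacy product token that browsers keep for compatibility.

- (Windows NT 10.0; Win64; x64): a 64-bit Windows system running Windows 10 (Windows 11 reports the same value).

- AppleWebKit/537.36: the rendering engine and its version.

- (KHTML, like Gecko): compatibility with the KHTML (Konqueror) and Gecko (Firefox) rendering engines.

- Chrome/111.0.0.0: the browser, Google Chrome 111.

- Safari/537.36: Safari compatibility.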

Similarly, here's an example of a Firefox UA:

Mozilla/5.0 (X11; Linux i686; rv:110.0) Gecko/20100101 Firefox/110.0

And here's a mobile UA:

Mozilla/5.0 (iPhone; CPU iPhone OS 15_1 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Mobile/15E148
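To see how these strings break down, here's a minimal sketch using Python's built-in re module that splits a UA into its Product/Version tokens and parenthesized comments. The split_user_agent() helper is hypothetical and only handles the simple "Product/Version (comment)" layout shown in the examples above, not every UA you'll find in the wild.

```python
import re

# Matches either a parenthesized comment, e.g. "(Windows NT 10.0; Win64; x64)",
# or a product token, e.g. "Chrome/111.0.0.0".
TOKEN_PATTERN = re.compile(r"\(([^)]*)\)|([^\s/()]+)/(\S+)")


def split_user_agent(ua: str):
    """Hypothetical helper: return (products, comments) found in a UA string."""
    products, comments = [], []
    for match in TOKEN_PATTERN.finditer(ua):
        comment, name, version = match.groups()
        if comment is not None:
            comments.append(comment)
        else:
            products.append((name, version))
    return products, comments


chrome_ua = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
             "(KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36")
firefox_ua = "Mozilla/5.0 (X11; Linux i686; rv:110.0) Gecko/20100101 Firefox/110.0"

for ua in (chrome_ua, firefox_ua):
    products, comments = split_user_agent(ua)
    print(products)
    print(comments)
```

The product tokens identify the engine and browser, while the comments carry platform details such as the operating system and CPU architecture.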

Your Python Requests web scraper gets denied access because its default UA screams "I am a bot." Let's see why!

The following script sends an HTTP request to http://httpbin.org/headers.

```python
import requests

response = requests.get('http://httpbin.org/headers')

print(response.status_code)
print(response.text)
```

HTTPBin's /headers endpoint echoes back the headers it receives as JSON, so the code above prints the request's default headers:

```
200
{
  "headers": {
    "Accept": "*/*",
    "Accept-Encoding": "gzip, deflate",
    "Host": "httpbin.org",
    "User-Agent": "python-requests/2.28.2",
    "X-Amzn-Trace-Id": "Root=1-64107531-3d822dab0eca3d0a07faa819"
  }
}
```

Take a look at the User-Agent string python-requests/2.28.2, and you'll agree that any website can tell it isn't from an actual browser. That's why you have to specify a custom and well-formed User Agent for Python Requests.
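If you'd like to check the default string without sending any request, Requests exposes it through requests.utils.default_user_agent(), and a fresh Session carries the same value in its default headers. This is a minimal sketch assuming Requests is installed; the exact version number depends on your installation.

```python
import requests

# The User-Agent string Requests sends when you don't override it
default_ua = requests.utils.default_user_agent()
print(default_ua)  # python-requests/<installed version>

# A new Session starts with that same default User-Agent header
session = requests.Session()
print(session.headers["User-Agent"] == default_ua)
```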

Set User Agent Using Python Requests

To set the Python Requests User Agent, pass a dictionary containing the new string to the headers parameter of your request.

Start by importing the Python Requests library, then create an HTTP headers dictionary containing the new User Agent, and send a request with your specified User-Agent string. The last step is printing the response.

```python
import requests

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36'}

response = requests.get('http://httpbin.org/headers', headers=headers)

print(response.status_code)
print(response.text)
```

Note: You can find some well-formed User Agents for web scraping in our other article.

If you get a result similar to the example below, you've succeeded in faking your User Agent.

```
200
{
  "headers": {
    "Accept": "*/*",
    "Accept-Encoding": "gzip, deflate",
    "Host": "httpbin.org",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36",
    "X-Amzn-Trace-Id": "Root=1-6410831d-666f197b78c8e97a3013bea9"
  }
}
```
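To confirm which User Agent a request will carry without contacting HTTPBin at all, you can build the request locally. This minimal sketch, assuming Requests is installed, uses requests.Request(...).prepare(), which doesn't send anything over the network. Note that a bare prepared request doesn't include Requests' default headers; those are merged in by a Session when a request is actually sent.

```python
import requests

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36'}

# Build the request object locally; nothing is sent over the network
prepared = requests.Request('GET', 'http://httpbin.org/headers', headers=headers).prepare()

# Header lookups in Requests are case-insensitive
print(prepared.headers['user-agent'])
```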

Use a Random User Agent with Python Requests

Sending the same User Agent with every request makes your scraper easy to identify and block, so it helps to pick a random UA for each request in Python Requests.

You can rotate between UAs using the random.choice() function from Python's built-in random module. Here's a step-by-step example:

- Import random.

```python
import random
```

- Create a list containing the User Agents you want to rotate.

```python
# Each entry needs a trailing comma; otherwise Python joins adjacent strings into one
user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
]
```

- Create a headers dictionary, setting random.choice(user_agents) as the User-Agent value.

```python
headers = {'User-Agent': random.choice(user_agents)}
```

- Include the random UA string in your request.

```python
response = requests.get('https://www.example.com', headers=headers)
```

- Use a for loop to repeat steps three and four for multiple requests.

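```python
for i in range(7):
    # Pick a new User Agent for every request
    headers = {'User-Agent': random.choice(user_agents)}
    response = requests.get('https://www.example.com', headers=headers)
    # Show which User Agent was sent with this request
    print(response.request.headers['User-Agent'])
```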

Putting it all together, your complete code will look like this:

```python
import random

import requests

# Trailing commas keep each User Agent as a separate list item
user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
]

for i in range(7):
    # Pick a random User Agent for each request
    headers = {'User-Agent': random.choice(user_agents)}
    response = requests.get('http://httpbin.org/headers', headers=headers)
    print(response.text)
```

And here's the result:

```
{
  "headers": {
    "Accept": "*/*",
    "Accept-Encoding": "gzip, deflate",
    "Host": "httpbin.org",
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
    "X-Amzn-Trace-Id": "Root=1-64109cab-38fc3e707383ccc92fda9034"
  }
}
{
  "headers": {
    "Accept": "*/*",
    "Accept-Encoding": "gzip, deflate",
    "Host": "httpbin.org",
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15",
    "X-Amzn-Trace-Id": "Root=1-64109cac-3971637b5318a6f87c673747"
  }
}
{
  "headers": {
    "Accept": "*/*",
    "Accept-Encoding": "gzip, deflate",
    "Host": "httpbin.org",
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
    "X-Amzn-Trace-Id": "Root=1-64109cac-42301391639825ca0497d9a3"
  }
}
// ...
```

Awesome, right?
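One thing to keep in mind: random.choice() can pick the same UA several times in a row. If you'd rather spread requests evenly across your list, an alternative (not the method used above) is round-robin rotation with itertools.cycle from Python's standard library. The rotating_headers() generator below is a hypothetical helper; in your scraping loop, you'd pass next(header_source) as the headers argument of requests.get().

```python
from itertools import cycle

user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
]


def rotating_headers(agents):
    """Hypothetical helper: yield a fresh headers dict per request, in round-robin order."""
    for agent in cycle(agents):
        yield {'User-Agent': agent}


header_source = rotating_headers(user_agents)

# Each next() call returns the headers for one request; after the last UA it starts over
for _ in range(4):
    print(next(header_source)['User-Agent'])
```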

However, this approach only works for a handful of requests. Let's see how to scale it up for web scraping.

How To Rotate Infinite Fake User Agents

But how do you come up with a huge list of real User Agents? If yours contain contradictory information, are missing details, or reference outdated software versions, your traffic might be blocked. Furthermore, the order of your headers matters.
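To catch the outdated-version problem before it gets you blocked, you could filter your list by browser version. This sketch only checks the Chrome major version with a regular expression; MIN_CHROME_MAJOR is a hypothetical threshold you'd keep up to date yourself, and passing this check doesn't mean a UA is consistent with the rest of your headers.

```python
import re

MIN_CHROME_MAJOR = 108  # hypothetical cut-off; adjust it to current Chrome releases

CHROME_VERSION = re.compile(r"Chrome/(\d+)\.")


def is_outdated_chrome(ua: str, minimum: int = MIN_CHROME_MAJOR) -> bool:
    """Return True if the UA claims a Chrome major version below `minimum`."""
    match = CHROME_VERSION.search(ua)
    if match is None:
        return False  # not a Chrome UA, so this check doesn't apply
    return int(match.group(1)) < minimum


user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',
    'Mozilla/5.0 (X11; Linux i686; rv:110.0) Gecko/20100101 Firefox/110.0',
]

# Keep only the UAs that don't advertise an old Chrome version
fresh = [ua for ua in user_agents if not is_outdated_chrome(ua)]
print(len(fresh))
```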

Websites have databases of suspicious headers and related components. Also, you'll have to deal with other anti-scraping challenges, like CAPTCHAs, user behavior analysis, etc.

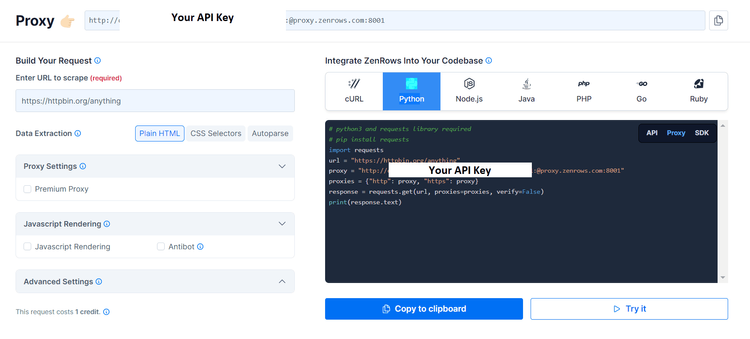

The good news is **you can complement Python Requests with a tool like ZenRows to generate UAs that work** and overcome the rest of the anti-bot protections.

Let's see how ZenRows works with Python Requests step by step.

- Sign up to get your free API key.

- In your script, set your target URL, API key, and the ZenRows base URL.

url = "http://httpbin.org/headers" # ... your URL here

apikey = "Your_API_key"

zenrows_api_base = "https://api.zenrows.com/v1/"

- Make your request using the required ZenRows parameters. For example, to bypass anti-bot measures, add the antibot parameter (premium proxies and JavaScript rendering would also help).

```python
response = requests.get(zenrows_api_base, params={
    "apikey": apikey,
    "url": url,
    "antibot": True,
})
```

- This is what your complete code should look like:

```python
import requests

url = "http://httpbin.org/headers"  # ... your URL here
apikey = "Your_API_key"
zenrows_api_base = "https://api.zenrows.com/v1/"

# ZenRows fetches the target URL for you; "antibot" asks it to bypass anti-bot measures
response = requests.get(zenrows_api_base, params={
    "apikey": apikey,
    "url": url,
    "antibot": True,
})

print(response.text)  # page's HTML
```

From now on, you won't need to worry about your User Agent in Python Requests.

Option to Set User Agent with Sessions

Another way to specify custom headers is with the Session object from the Python Requests library. It's especially useful when you want to keep the same headers for every request to a particular website.

Here's how to set a User Agent using the Session object:

- Start by creating a Session object.

```python
session = requests.Session()
```

- Then, set a User Agent in the Session headers.

```python
session.headers.update({'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36'})
```

- Lastly, make your request with the Session.

```python
response = session.get('http://httpbin.org/headers')
```

Here's our complete code:

```python
import requests

session = requests.Session()

# Every request made through this session will send this User Agent
session.headers.update({'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36'})

response = session.get('http://httpbin.org/headers')

# Print the response body as text (response.content would print raw bytes)
print(response.text)
```

And your result should look like this:

```
{
  "headers": {
    "Accept": "*/*",
    "Accept-Encoding": "gzip, deflate",
    "Host": "httpbin.org",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36",
    "X-Amzn-Trace-Id": "Root=1-6410bb12-31fe62664c156c98547c1fd8"
  }
}
```

Congratulations, you've learned a new way to set your User Agent. 🎉
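Session headers act as defaults: a headers argument passed to an individual call is merged on top of them and wins for that request only. Here's a minimal offline sketch, assuming Requests is installed, that uses Session.prepare_request() to build the final request without sending it; the two UA strings are simply examples from this article.

```python
import requests

session = requests.Session()
session.headers.update({'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36'})

# No per-request headers: the session-level User Agent is used
default_req = session.prepare_request(requests.Request('GET', 'http://httpbin.org/headers'))

# A per-request header overrides the session value for this request only
override_ua = 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36'
override_req = session.prepare_request(
    requests.Request('GET', 'http://httpbin.org/headers', headers={'User-Agent': override_ua})
)

print(default_req.headers['User-Agent'])
print(override_req.headers['User-Agent'])
print(session.headers['User-Agent'])  # the session default is unchanged
```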

Browser User Agent in Python: How to Change in Popular Libraries

Like Requests, you can change the User Agent header using other Python web scraping libraries. Two popular tools are aiohttp and HTTPX.

HTTPX is a fully featured HTTP client with both synchronous and asynchronous APIs, while aiohttp is a library for making asynchronous HTTP requests.

Let's explore how to get the job done with those two libraries.

User Agent with aiohttp

These are the steps you need to set a custom User Agent with aiohttp:

- Install the library with the following command:

```bash
pip install aiohttp
```

- Create a headers dictionary containing your User Agent.

```python
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36'
}
```

- Open a ClientSession and pass the headers dictionary to its get() method.

```python
# Run this inside an async function
async with aiohttp.ClientSession() as session:
    async with session.get('http://httpbin.org/headers', headers=headers) as response:
        print(await response.text())
```

Your complete code should look like this:

```python
import asyncio

import aiohttp

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36'
}


async def main():
    async with aiohttp.ClientSession() as session:
        async with session.get('http://httpbin.org/headers', headers=headers) as response:
            print(await response.text())

# "async with" only works inside a coroutine, so run it with asyncio
asyncio.run(main())
```

To rotate User Agents, create a list and pick from it with random.choice(), just like in Python Requests. Your code will then look like this:

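```python
import asyncio
import random

import aiohttp

# Replace these examples with your own list of User Agents
user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
]

headers = {
    'User-Agent': random.choice(user_agents)
}


async def main():
    async with aiohttp.ClientSession() as session:
        async with session.get('http://httpbin.org/headers', headers=headers) as response:
            print(await response.text())

asyncio.run(main())
```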

User Agent with HTTPX

Here's how to set the User Agent with HTTPX:

- Like with aiohttp, install HTTPX.

```bash
pip install httpx
```

- Create a headers dictionary containing your User Agent.

```python
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36'
}
```

- Create an async HTTPX client and pass the headers dictionary to its get() method.

```python
# Run this inside an async function
async with httpx.AsyncClient() as client:
    response = await client.get('http://httpbin.org/headers', headers=headers)
```

Putting it all together, here's your complete code:

```python
import asyncio

import httpx

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36'
}


async def main():
    async with httpx.AsyncClient() as client:
        response = await client.get('http://httpbin.org/headers', headers=headers)
    print(response.text)

# "async with" and "await" only work inside a coroutine, so run it with asyncio
asyncio.run(main())
```

To rotate UAs, create a list and use the random.choice() method like in the previous example.

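```python
import asyncio
import random

import httpx

# Replace these examples with your own list of User Agents
user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
]

headers = {
    'User-Agent': random.choice(user_agents)
}


async def main():
    async with httpx.AsyncClient() as client:
        response = await client.get('https://www.example.com', headers=headers)
    print(response.text)

asyncio.run(main())
```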

And that's it! How does it feel knowing you can manipulate the User Agent using Python Requests, aiohttp, and HTTPX?

Conclusion

The importance of setting and randomizing User Agents in web scraping can't be overemphasized. But it's even more crucial to make sure your UA is correctly formed and matches your other HTTP headers.

However, all of that may not be enough to avoid getting blocked while web scraping because of JavaScript challenges, CAPTCHAs, user behavior analysis, etc. That's why you might want to consider ZenRows as a versatile addition to the Python Requests library. Get your free API key now.

Frequently Asked Questions

What Is the Default User Agent for Requests in Python?

The default User Agent in Python Requests is python-requests/x.y.z, where x.y.z is the installed Requests version. For example, python-requests/2.28.2.

How to Fake a User Agent in Python?

To fake a User Agent in Python, you need to specify a new User Agent in a headers dictionary, then pass it as a parameter in your request.

How Do You Fake and Rotate User Agents in Python 3?

Follow the steps below to fake and rotate User Agents in Python 3:

- Install a Python HTTP library such as Requests, aiohttp, or HTTPX.

- Import the installed library and the random module.

- Create a list of User Agents to choose from.

- Create a headers dictionary, using random.choice() to select one User Agent from the list.

- Pass the dictionary to the headers parameter of your request.
