A Python headless browser is a browser you control from Python code without a graphical interface. It can scrape dynamic content smoothly without opening a visible browser window, which reduces scraping costs and helps you scale your crawling process.

A browser-based scraper can handle sites that require JavaScript. However, browser-based scraping can be a slow process, especially when dealing with complex sites or a massive list of pages.

In this guide, we'll cover Python headless browsers, their types, and their pros and cons.

Let's dive right in!

What Is a Headless Browser in Python?

A headless browser is a web browser that has no graphical user interface (GUI) but keeps the capabilities of a regular browser.

It supports all the standard browser functionality, such as running JavaScript and clicking links. Python lets you control a headless browser from code and use all of these capabilities.

With a Python headless browser, you can automate browser tasks while working in a language that's easy to learn. It can also save you time during development and scraping, as it uses less memory than a browser with a GUI.

Benefits of a Python Headless Browser

A headless browser process typically uses less memory than a browser with a GUI. That's because it doesn't need to draw graphical elements for the browser window and the website.

Furthermore, Python headless browsers are fast and can noticeably speed up scraping. For example, if you only need data that's already in the HTML, you can program your scraper to extract it as soon as the document is ready instead of waiting for every image and stylesheet to load.
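In Selenium, one way to stop waiting for the full page load is the page-load strategy. The sketch below is a minimal example, assuming Selenium 4 and Chrome 109 or newer (for the `--headless=new` flag). `configure_fast_headless` and `fetch_title` are hypothetical helper names. With the `"eager"` strategy, `driver.get()` returns once the HTML document has been parsed (`document.readyState` is `interactive`) rather than after images and stylesheets finish loading.

```python
def configure_fast_headless(options):
    """Enable headless mode and the 'eager' page-load strategy.

    Hypothetical helper: accepts a Selenium options object such as ChromeOptions.
    """
    # Run Chrome without a visible window (new headless mode, Chrome 109+)
    options.add_argument("--headless=new")
    # 'eager': driver.get() returns once the DOM is parsed instead of waiting
    # for images, stylesheets, and other subresources ('normal' is the default)
    options.page_load_strategy = "eager"
    return options


def fetch_title(url):
    """Hypothetical usage: open a page headlessly with the eager strategy."""
    from selenium import webdriver  # imported here so the helper above works without Selenium

    options = configure_fast_headless(webdriver.ChromeOptions())
    with webdriver.Chrome(options=options) as driver:
        driver.get(url)
        return driver.title
```

Calling `fetch_title("https://scrapeme.live/shop/")` would return the page title. Content that JavaScript adds after the DOM is parsed may not exist yet under `"eager"`, so this strategy fits server-rendered pages best.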

It also allows multitasking since you can use the computer while the headless browser runs in the background.

Disadvantages of a Python Headless Browser

The main downsides of Python headless browsers are that they can't perform actions that require visual interaction and that they're hard to debug.

Without a visible window, you won't be able to inspect elements or watch your tests run.

Moreover, you'll get only a limited idea of how a user would normally interact with the website.
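Headless runs are harder to debug, but you can still capture what the browser saw. The sketch below assumes you already have a Selenium WebDriver instance. `save_debug_snapshot` is a hypothetical helper that writes a PNG screenshot with `driver.save_screenshot()` and the current HTML from `driver.page_source`, so you can open both afterwards.

```python
from pathlib import Path


def save_debug_snapshot(driver, name, out_dir="debug"):
    """Save a screenshot and the current HTML so a headless run can be inspected later.

    Hypothetical helper: `driver` is any Selenium WebDriver instance.
    """
    folder = Path(out_dir)
    folder.mkdir(parents=True, exist_ok=True)

    png_path = folder / f"{name}.png"
    html_path = folder / f"{name}.html"

    # save_screenshot() writes a PNG of the current viewport and returns False on failure
    if not driver.save_screenshot(str(png_path)):
        raise RuntimeError(f"Could not save screenshot to {png_path}")
    # page_source is the current DOM serialized as HTML
    html_path.write_text(driver.page_source, encoding="utf-8")
    return png_path, html_path
```

Inside a scraping session, a call such as `save_debug_snapshot(driver, "shop-page")` right after `driver.get(url)` leaves a screenshot and an HTML file you can compare with what you see in a regular browser.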

Python Selenium Headless

Selenium is the most popular tool for running a headless browser in Python. Its primary use is automating web applications, including web scraping. Selenium can also be extended with third-party packages such as undetected-chromedriver to help bypass anti-bot detection.

Selenium's headless support depends on the browser it drives. If a browser can run headlessly, Selenium can control it in headless mode.

Which Headless Browsers Does Selenium Support?

Selenium can drive three browsers in headless mode: Chrome, Edge, and Firefox.

1. Chrome

Chrome has shipped with a headless mode since version 59. You can run the Chrome binary from the command line to start headless Chrome. The executable's name and path depend on your operating system.

```bash
chrome --headless --disable-gpu --remote-debugging-port=9222 https://zenrows.com
```
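You can call the same binary from Python with `subprocess`. The sketch below assumes the executable is on your PATH as `chrome`, as in the command above. The actual name varies: on many Linux distributions it is `google-chrome`, and macOS and Windows often need a full path. Instead of the remote-debugging flag, it uses Chrome's `--dump-dom` flag, which prints the rendered HTML and exits. Both helper names are hypothetical.

```python
import subprocess


def headless_chrome_command(url, chrome_binary="chrome"):
    """Build the argument list that prints a page's rendered DOM with headless Chrome."""
    return [chrome_binary, "--headless", "--disable-gpu", "--dump-dom", url]


def dump_dom(url, chrome_binary="chrome", timeout=60):
    """Run headless Chrome and return the serialized DOM it prints to stdout."""
    result = subprocess.run(
        headless_chrome_command(url, chrome_binary),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError if Chrome exits with an error
    )
    return result.stdout
```

For example, `html = dump_dom("https://zenrows.com", chrome_binary="google-chrome")` would return the page HTML after JavaScript has run. Passing the arguments as a list avoids shell-quoting problems with URLs.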

2. Edge

Edge was originally built on EdgeHTML, Microsoft's proprietary browser engine. In December 2018, Microsoft announced it would rebuild Edge on Chromium. The Chromium-based version, which uses the Blink and V8 engines, was released in January 2020 and supports headless mode much like Chrome.

3. Firefox

Firefox is another widely used browser with a headless mode. To open headless Firefox, run the following command in your terminal:

```bash
firefox -headless https://zenrows.com
```
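Selenium can start any of these three browsers headlessly by adding the right flag to the browser's options object. The sketch below is a minimal version that assumes Selenium 4 and Chrome/Edge 109 or newer for `--headless=new`. `HEADLESS_FLAGS`, `headless_flag`, and `start_headless` are hypothetical names.

```python
# Headless flag each browser expects (assumption: Chrome/Edge 109+ for "--headless=new")
HEADLESS_FLAGS = {
    "chrome": "--headless=new",
    "edge": "--headless=new",
    "firefox": "-headless",
}


def headless_flag(browser):
    """Return the command-line flag that starts `browser` without a window."""
    try:
        return HEADLESS_FLAGS[browser.lower()]
    except KeyError:
        raise ValueError(f"No headless mode configured for {browser!r}") from None


def start_headless(browser):
    """Hypothetical helper: launch Chrome, Edge, or Firefox headlessly via Selenium."""
    flag = headless_flag(browser)  # raises ValueError for unsupported browsers
    from selenium import webdriver  # lazy import: the flag table works without Selenium

    classes = {
        "chrome": (webdriver.ChromeOptions, webdriver.Chrome),
        "edge": (webdriver.EdgeOptions, webdriver.Edge),
        "firefox": (webdriver.FirefoxOptions, webdriver.Firefox),
    }
    options_cls, driver_cls = classes[browser.lower()]
    options = options_cls()
    options.add_argument(flag)
    return driver_cls(options=options)
```

Usage would look like `with start_headless("firefox") as driver: driver.get("https://zenrows.com")`. Keeping the flags in one table makes it easy to switch browsers without touching the scraping logic.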

How Do You Go Headless in Selenium Python?

Let's see how to automate a browser headlessly with Selenium in Python.

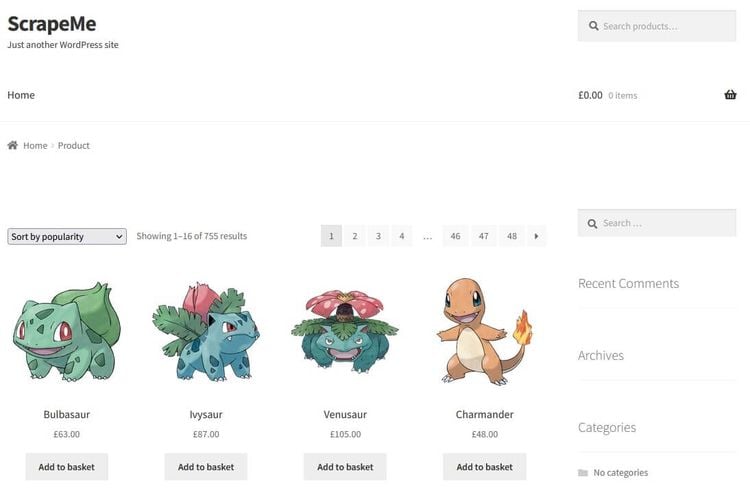

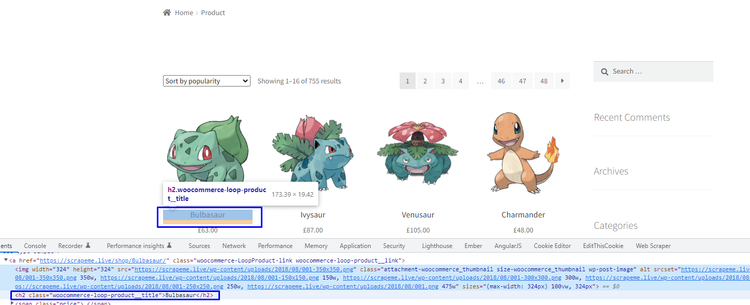

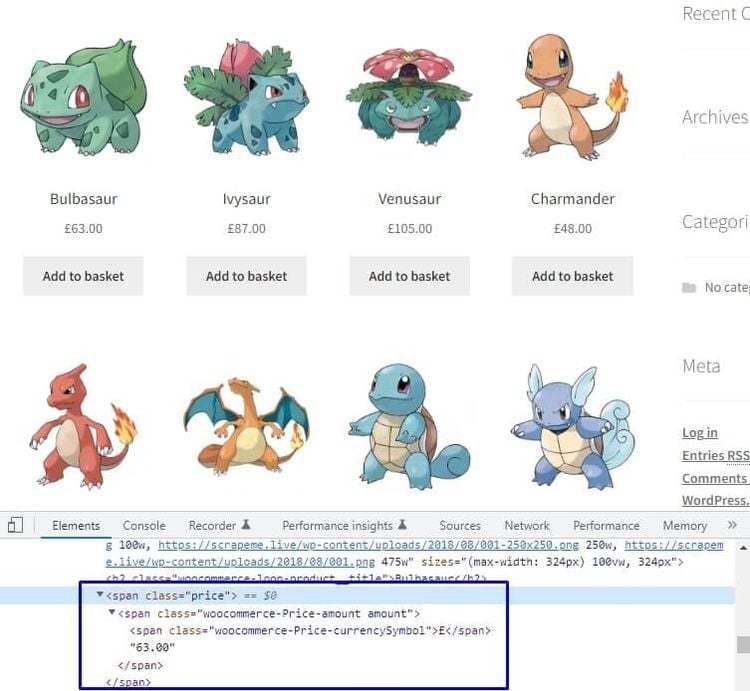

For this example, we'll scrape Pokémon details like names, links, and prices from ScrapeMe.

Here's what the page looks like.

Prerequisites

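Before scraping a web page with a Python headless browser, let's install the following tools:

- Python: Download Python 3.x from the official website and follow the installation wizard to set it up.

- Selenium: Run the command pip install selenium.

- webdriver-manager: Run the command pip install webdriver-manager. The code in this tutorial imports ChromeDriverManager from this package to download a ChromeDriver that matches your Chrome version.

- Chrome: Download it as a regular browser if you don't have it installed yet.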

Installing a browser driver manually used to be necessary, but not anymore. Since version 4.6, Selenium ships with Selenium Manager, which downloads a matching driver automatically. The code below also uses the third-party webdriver-manager package, which does the same job.

If you have an earlier version of Selenium installed, update it to get the latest features and functionality. To check your current version, run pip show selenium. To upgrade to the newest version, run pip install --upgrade selenium.
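If you'd rather check from Python, the standard library's `importlib.metadata` can read the installed version. The sketch below compares it with 4.6, the release that introduced Selenium Manager. It assumes plain numeric version strings like `4.21.0`, and the helper names are hypothetical.

```python
import re
from importlib.metadata import PackageNotFoundError, version


def parse_version(text):
    """Turn a version string like '4.21.0' into a comparable tuple (4, 21, 0)."""
    numbers = []
    for piece in text.split(".")[:3]:
        # keep only the leading digits, so '0rc1' counts as 0
        match = re.match(r"\d+", piece)
        numbers.append(int(match.group()) if match else 0)
    numbers += [0] * (3 - len(numbers))  # pad '4.10' to (4, 10, 0)
    return tuple(numbers)


def has_selenium_manager(installed):
    """Selenium Manager (automatic driver downloads) ships with Selenium 4.6+."""
    return parse_version(installed) >= (4, 6, 0)


def installed_selenium_version():
    """Return the installed Selenium version string, or None if it isn't installed."""
    try:
        return version("selenium")
    except PackageNotFoundError:
        return None


current = installed_selenium_version()
if current is None:
    print("Selenium is not installed: run pip install selenium")
elif has_selenium_manager(current):
    print(f"Selenium {current} includes Selenium Manager")
else:
    print(f"Selenium {current} is older than 4.6: run pip install --upgrade selenium")
```

Comparing tuples instead of raw strings matters here: as strings, "4.10.0" sorts before "4.6.0", but as tuples (4, 10, 0) is correctly greater than (4, 6, 0).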

Now we're ready to scrape some Pokémon data using a Python headless browser.

Step 1: Import WebDriver from Selenium

Selenium WebDriver is a web automation tool that lets you control web browsers. It supports multiple browsers, but we'll use Chrome.

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service as ChromeService
from webdriver_manager.chrome import ChromeDriverManager

url = "https://scrapeme.live/shop/"

# ChromeDriverManager downloads a ChromeDriver that matches your Chrome version;
# the with block closes the browser when it finishes
with webdriver.Chrome(service=ChromeService(ChromeDriverManager().install())) as driver:
    driver.get(url)
```

Step 2: Open the Page

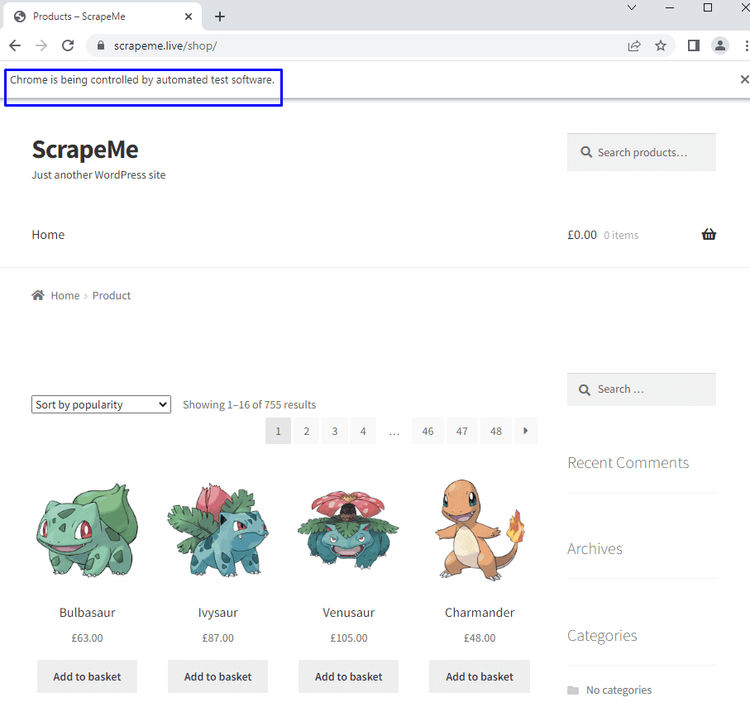

Let's write some code to open a page and confirm that your environment is set up properly and ready for scraping. Running the code below will open Chrome automatically and go to your target page.

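```python
from selenium import webdriver

url = "https://scrapeme.live/shop/"

# Selenium 4.6+ finds or downloads a matching ChromeDriver automatically
driver = webdriver.Chrome()

# Open the target page in the Chrome window
driver.get(url)
```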

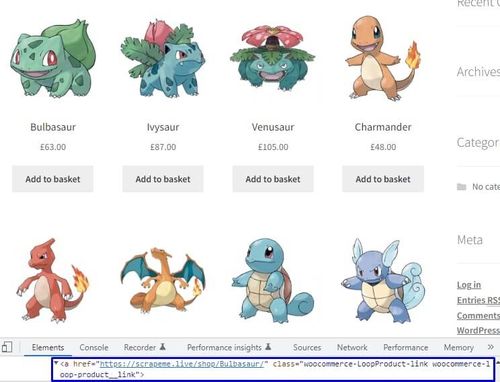

The page should look like this:

Step 3: Switch to Python Selenium Headless Mode

Once the page opens, the rest of the process is easier. However, we don't want a browser window to appear on the monitor; we want Chrome to run headlessly. Switching to headless Chrome in Python is straightforward.

We only need to add two lines of code and pass the options parameter when creating the WebDriver.

```python
# ...

options = webdriver.ChromeOptions()
# Run Chrome without a visible window. Older code used options.headless = True,
# which was deprecated in Selenium 4.8 and later removed.
options.add_argument("--headless=new")

with webdriver.Chrome(service=ChromeService(ChromeDriverManager().install()), options=options) as driver:
    # ...
```

The complete code will look like this:

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service as ChromeService
from webdriver_manager.chrome import ChromeDriverManager

url = "https://scrapeme.live/shop/"

options = webdriver.ChromeOptions()  # newly added
options.add_argument("--headless=new")  # newly added: run Chrome without a window

with webdriver.Chrome(service=ChromeService(ChromeDriverManager().install()), options=options) as driver:  # modified
    driver.get(url)
```

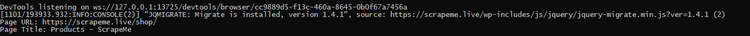

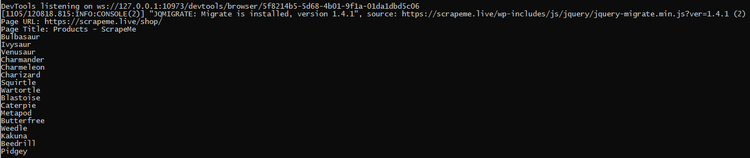

If you run the code, you'll see only a few lines of output in the command line or terminal.

No Chrome window pops up on your screen.

Awesome!

But is the code actually reaching the page? How can you verify that the scraper opened the right page? Did any errors occur? These questions come up whenever you run Selenium in headless mode.

To answer them, your code should log as much information as you need.

What you log, and where, depends on your needs. It can be a log file in text format, a dedicated log database, or simple output in the terminal.
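If you prefer a log file over plain terminal output, Python's standard `logging` module can write to both at once. The following is a minimal sketch: the logger name, the log file path, and the `log_page` helper are hypothetical. `log_page` expects the driver's `current_url` and `title`, plus the URL you meant to open.

```python
import logging


def make_scrape_logger(log_file="scraper.log"):
    """Create a logger that writes to the terminal and to a text file."""
    logger = logging.getLogger("headless_scraper")
    logger.setLevel(logging.INFO)
    # Attach handlers only once, even if this function is called repeatedly
    if not logger.handlers:
        formatter = logging.Formatter("%(asctime)s %(levelname)s %(message)s")
        for handler in (logging.StreamHandler(), logging.FileHandler(log_file, encoding="utf-8")):
            handler.setFormatter(formatter)
            logger.addHandler(handler)
    return logger


def log_page(logger, current_url, title, expected_url):
    """Log the page URL and title; warn and return False on an unexpected URL."""
    logger.info("Page URL: %s", current_url)
    logger.info("Page Title: %s", title)
    # Exact string comparison: a redirect or a missing trailing slash counts as a mismatch
    if current_url != expected_url:
        logger.warning("Expected %s but landed on %s", expected_url, current_url)
        return False
    return True
```

Inside the `with webdriver.Chrome(...) as driver:` block, you would call `log_page(logger, driver.current_url, driver.title, url)` right after `driver.get(url)`.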

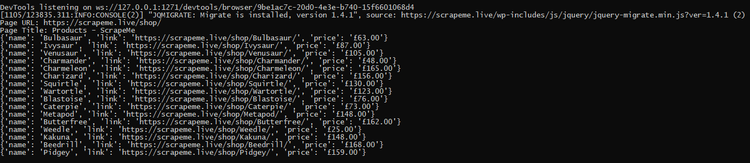

For simplicity, we'll log by printing the scraping result to the terminal. Add the two lines below to print the page URL and title, which confirms that headless Selenium runs as intended.

```python
# ...

with webdriver.Chrome(service=ChromeService(ChromeDriverManager().install()), options=options) as driver:
    # ...

    print("Page URL:", driver.current_url)
    print("Page Title:", driver.title)
```

Now we can confirm that the crawler reaches the intended page instead of returning unexpected results. With that done, let's scrape some Pokémon data.

Step 4: Scrape the Data

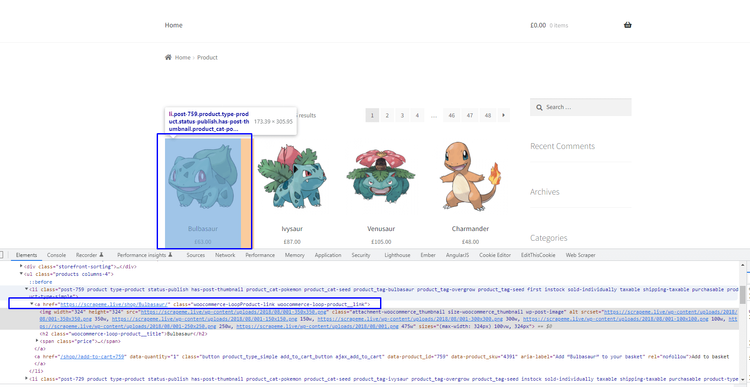

Before going deeper, we need to find the HTML elements that hold the data by inspecting the web page in a regular browser.

To do this, right-click on any image and select Inspect. Chrome DevTools opens on the Elements tab.

Let's find the elements that hold the names, prices, and other information. When you hover over an element in the Elements tab, the browser highlights the area it covers, which makes it easier to identify the right element. Each product is wrapped in a parent link like this one:

```html
<a href="https://scrapeme.live/shop/Bulbasaur/" class="woocommerce-LoopProduct-link woocommerce-loop-product__link">
```

Quite easy, right? To find the name element, hover over the elements inside that link in DevTools until the browser highlights the Pokémon name.

```html
<h2 class="woocommerce-loop-product__title">Bulbasaur</h2>
```

The h2 element sits inside the parent element. Let's add both elements to the code so headless Chrome knows which elements to extract from the page.

Selenium provides several ways to select elements, such as by element ID, tag name, class name, CSS selector, and XPath. Let's use XPath.
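To see what the two XPath expressions in the code below select, here's a standard-library sketch that runs them with Python's `xml.etree.ElementTree` instead of Selenium. This parser is swapped in only for illustration. It supports just a subset of XPath, needs well-formed markup, and requires a leading `.` (so `.//a` instead of `//a`). The sample markup is a simplified, hypothetical stand-in built from the two snippets above, not the real page.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical markup modeled on the snippets above (not the live page)
SAMPLE_HTML = """
<ul class="products">
  <li>
    <a href="https://scrapeme.live/shop/Bulbasaur/" class="woocommerce-LoopProduct-link woocommerce-loop-product__link">
      <h2 class="woocommerce-loop-product__title">Bulbasaur</h2>
    </a>
  </li>
</ul>
"""

# Same predicate as the Selenium code, but ElementTree needs the leading "."
PARENT_XPATH = ".//a[@class='woocommerce-LoopProduct-link woocommerce-loop-product__link']"


def extract_products(markup):
    """Return name/link dictionaries for every matching parent <a> element."""
    root = ET.fromstring(markup.strip())
    products = []
    for parent in root.findall(PARENT_XPATH):
        name = parent.find(".//h2")  # relative search inside the parent, as in Selenium
        products.append({"name": name.text.strip(), "link": parent.get("href")})
    return products


print(extract_products(SAMPLE_HTML))
```

The predicate `@class='...'` compares the entire attribute string, so it stops matching if the site reorders or adds classes. In Selenium, `driver.find_elements(By.CSS_SELECTOR, "a.woocommerce-LoopProduct-link")` matches on a single class and is more forgiving.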

Tweaking the initial code a little bit will make the scraper show more information, like the URL, title, and Pokémon names.

Here's what our new code looks like.

```python
# ...

from selenium.webdriver.common.by import By

# ...

with webdriver.Chrome(service=ChromeService(ChromeDriverManager().install()), options=options) as driver:
    # ...

    pokemons_data = []

    # Each product card is wrapped in a link with these two classes
    parent_elements = driver.find_elements(By.XPATH, "//a[@class='woocommerce-LoopProduct-link woocommerce-loop-product__link']")

    for parent_element in parent_elements:
        # The product name lives in the <h2> inside the link
        pokemon_name = parent_element.find_element(By.XPATH, ".//h2")
        print(pokemon_name.text)
```

Next, pull each Pokémon's link from the href attribute of the same parent element:

```html
<a href="https://scrapeme.live/shop/Bulbasaur/" class="woocommerce-LoopProduct-link woocommerce-loop-product__link">
```

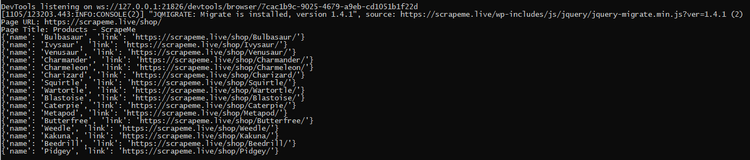

Now let's add some code so the scraper extracts and stores both the names and the links.

We'll use a Python dictionary to hold each Pokémon's data so the fields don't get mixed up, and append each dictionary to the pokemons_data list.

```python
# ...

parent_elements = driver.find_elements(By.XPATH, "//a[@class='woocommerce-LoopProduct-link woocommerce-loop-product__link']")

for parent_element in parent_elements:
    pokemon_name = parent_element.find_element(By.XPATH, ".//h2")
    pokemon_link = parent_element.get_attribute("href")

    # One dictionary per Pokémon keeps its fields together
    temporary_pokemons_data = {
        "name": pokemon_name.text,
        "link": pokemon_link
    }

    pokemons_data.append(temporary_pokemons_data)
    print(temporary_pokemons_data)
```

Superb!

Now let's get the last piece of Pokémon data we need: the price.

Go back to the regular browser and inspect the element that holds the price. The price sits in a span element inside the parent link, so we'll point the scraper to it with the XPath .//span.

```python
# ...

for parent_element in parent_elements:
    pokemon_name = parent_element.find_element(By.XPATH, ".//h2")
    pokemon_link = parent_element.get_attribute("href")
    # The first <span> inside the link holds the price
    pokemon_price = parent_element.find_element(By.XPATH, ".//span")

    temporary_pokemons_data = {
        "name": pokemon_name.text,
        "link": pokemon_link,
        "price": pokemon_price.text
    }

    pokemons_data.append(temporary_pokemons_data)
    print(temporary_pokemons_data)
```

And that's all! The only thing left is to run the script, and voilà: we have all the Pokémon data.

If you got lost somewhere, here's what the complete code should look like.

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.service import Service as ChromeService
from webdriver_manager.chrome import ChromeDriverManager

url = "https://scrapeme.live/shop/"

options = webdriver.ChromeOptions()
options.add_argument("--headless=new")  # run Chrome without a visible window

with webdriver.Chrome(service=ChromeService(ChromeDriverManager().install()), options=options) as driver:
    driver.get(url)

    # Log the URL and title to confirm the scraper reached the right page
    print("Page URL:", driver.current_url)
    print("Page Title:", driver.title)

    pokemons_data = []

    parent_elements = driver.find_elements(By.XPATH, "//a[@class='woocommerce-LoopProduct-link woocommerce-loop-product__link']")

    for parent_element in parent_elements:
        pokemon_name = parent_element.find_element(By.XPATH, ".//h2")
        pokemon_link = parent_element.get_attribute("href")
        pokemon_price = parent_element.find_element(By.XPATH, ".//span")

        temporary_pokemons_data = {
            "name": pokemon_name.text,
            "link": pokemon_link,
            "price": pokemon_price.text
        }

        pokemons_data.append(temporary_pokemons_data)
        print(temporary_pokemons_data)
```

What Is the Best Headless Browser?

Selenium isn't the only way to run a headless browser. There are other alternatives: some serve only one programming language, while others provide bindings for many languages.

Besides Selenium, here are some of the best headless browser tools for your scraping project.

1. ZenRows

ZenRows is an all-in-one web scraping tool that uses a single API call to handle all anti-bot bypasses, from rotating proxies and headless browsers to CAPTCHAs.

Its residential proxies help you crawl a web page and browse like a real user without getting blocked.

ZenRows works great with almost all popular programming languages, and you can take advantage of the ongoing free trial with no credit card required.

2. Puppeteer

Puppeteer is a Node.js library providing an API to operate Chrome/Chromium in headless mode. Google developed it in 2017, and it keeps gathering momentum.

Both Puppeteer and Selenium can run in headless mode, but Puppeteer performs better with Chrome because it has full access to the Chrome DevTools Protocol.

Puppeteer is also easier to set up and faster than Selenium. The downside is that it works only with JavaScript, so if you aren't familiar with the language, you'll find Puppeteer challenging to use.

3. HtmlUnit

HtmlUnit is a GUI-less browser for Java programs. When properly configured, it can simulate specific browsers such as Chrome, Firefox, or Internet Explorer.

Its JavaScript support is fairly good and keeps improving. Unfortunately, you can only use HtmlUnit with Java.

4. Zombie.JS

Zombie.JS is a lightweight framework for testing client-side JavaScript code and also serves as a Node.js library.

Because its main purpose is testing, it works well with testing frameworks. Like Puppeteer, Zombie.JS can only be used with JavaScript.

5. Playwright

Playwright is a Node.js library for browser automation that also provides APIs for other languages, including Python, .NET, and Java. It's relatively fast compared to Selenium. You might want to see our article on Playwright vs. Selenium to learn how the two tools compare.
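For comparison with the Selenium code in this guide, here's a minimal sketch of Playwright's synchronous Python API. It assumes you've run `pip install playwright` and `playwright install chromium`. `scrape_names` is a hypothetical helper, and its CSS selector reuses the class name inspected earlier in this guide.

```python
def scrape_names(url="https://scrapeme.live/shop/"):
    """Open a page in headless Chromium with Playwright and return product names."""
    from playwright.sync_api import sync_playwright  # lazy import: requires Playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)  # headless is also Playwright's default
        try:
            page = browser.new_page()
            page.goto(url)
            # Inner text of every product title inside the product links
            return page.locator("a.woocommerce-LoopProduct-link h2").all_inner_texts()
        finally:
            browser.close()
```

Calling `print(scrape_names())` would print the list of Pokémon names on the first shop page. Unlike the Selenium version, Playwright downloads and manages its own browser builds, so no separate driver is needed.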

Conclusion

A Python headless browser offers several benefits for web scraping. It reduces the memory footprint, handles JavaScript well, and can run in environments without a GUI. Plus, the implementation takes only a few lines of code.

In this guide, we showed you step-by-step instructions for scraping data from a web page using headless Chrome with Selenium in Python.

Some of the disadvantages of Python headless browsers discussed in this guide are as follows:

- It can't evaluate graphical elements; therefore, you won't be able to perform any actions that need visual interaction under headless mode.

- It's hard to debug.

ZenRows is a tool that provides various services to help you do web scraping in any scenario, including using a Python headless browser with just a simple API call. Take advantage of the free trial and scrape data without stress and headaches.

Did you find the content helpful? Spread the word and share it on Twitter or LinkedIn.