Every day, users all over the world produce immeasurable amounts of data online. Retrieving it takes a great deal of time and resources, so a manual approach can't work. That's why you need to rely on a large-scale web scraping process.

Implementing such a process isn't easy. There are so many challenges to face that you may feel discouraged. Yet, there are a lot of solutions! Here, you'll learn everything you need to know to get started with large-scale web scraping.

Let's get into it!

What Is Large-Scale Web Scraping?

Performing web scraping on a large scale means building an automatic process that can crawl and scrape millions of pages. It also involves running several spiders on one or more websites simultaneously.

There are two types of large-scale web scraping:

- Scraping thousands of web pages from a large website like Amazon, LinkedIn, or Transfermarkt.

- Crawling and extracting content from thousands of different small websites at once.

In both cases, large-scale web scraping is all about building a robust infrastructure to extract data from the web. This requires an advanced system, and you'll soon see what you need to create one.

Let's illustrate what large-scale is with a few examples:

Examples of Large-Scale Scraping

Say you want to extract data from each product in an Amazon category. This category includes 20,000 pages, containing 20 products each. That'd mean 400,000 product pages to crawl and scrape. In other words, that equals 400,000 HTTP GET requests.

Suppose each web page takes 2.5 seconds to load in the browser. That'd mean spending 400,000*2.5 seconds, which is 1,000,000 seconds. This is the equivalent of over 11 days, and that's just the time it takes to load all the pages. Extracting the data from each one and saving it would take much longer.
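The arithmetic above is easy to check with a few lines of Python. The variable names are just illustrative, and the figures are the ones assumed in this example:

```python
# Figures taken from the example above.
category_pages = 20_000    # listing pages in the category
products_per_page = 20     # products linked from each listing page
seconds_per_page = 2.5     # assumed browser load time per page

total_requests = category_pages * products_per_page
total_seconds = total_requests * seconds_per_page
total_days = total_seconds / 86_400  # 86,400 seconds in a day

print(f"{total_requests:,} requests")    # 400,000 requests
print(f"{total_seconds:,.0f} seconds")   # 1,000,000 seconds
print(f"{total_days:.1f} days")          # 11.6 days
```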

It isn't feasible to manually retrieve all the info for an entire Amazon product category. That's where a large-scale scraping system comes into play!

By making the GET requests on the server to parse the HTML content directly, you can reduce each to a few hundred milliseconds. Also, you can run the scraping process in parallel, extracting data from several web pages per second.
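Here's a minimal sketch of that idea using only Python's standard library. The function names `fetch_html` and `fetch_all` and the default of 16 workers are assumptions made for illustration, not a recommended configuration:

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page with a plain HTTP GET, without rendering it in a browser."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def fetch_all(urls, fetch=fetch_html, max_workers=16):
    """Fetch many URLs in parallel; return {url: html or the exception raised}."""
    urls = list(urls)

    def safe_fetch(url):
        try:
            return fetch(url)
        except Exception as error:  # keep going if one page fails
            return error

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(zip(urls, pool.map(safe_fetch, urls)))
```

As a rough estimate, assuming 0.3 seconds per request and 16 workers, 400,000 requests would take about 400,000*0.3/16 = 7,500 seconds, or roughly two hours. Both figures are assumptions, and real throughput depends on the target site and on how politely you throttle your requests.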

So, a large-scale scraping system would allow you to achieve the same result in a few hours without human work. This may seem easy, but it involves some challenges you can't avoid. Let's dig deeper into them.

Challenges in Large-Scale Scraping

Let's see the three most important challenges of scraping at scale.

1. Performance

Whether scraping the same website or many different ones, getting a page from a server takes time. Also, if the page loads its content through AJAX, you may need a headless browser, which runs a real browser without a visible window. But waiting for a page to fully load in the browser can take several seconds.

2. Websites Changing Their Structure

Web scraping involves selecting particular DOM elements and extracting data from them. Yet, the structure of a web page is likely to change over time. This requires you to update the logic of your scrapers.
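One way to notice such a change early is to validate what your scrapers return. The sketch below assumes each scraped page becomes a dictionary and that `title` and `price` are the fields you care about. Both field names and the 50% threshold are hypothetical:

```python
REQUIRED_FIELDS = ("title", "price")  # hypothetical fields for a product page


def missing_fields(record: dict, required=REQUIRED_FIELDS) -> list:
    """Return the required fields that are absent or empty in a scraped record."""
    return [name for name in required if not record.get(name)]


def layout_probably_changed(records, required=REQUIRED_FIELDS, threshold=0.5) -> bool:
    """Flag a likely layout change when too many records are incomplete."""
    records = list(records)
    if not records:
        return False
    broken = sum(1 for record in records if missing_fields(record, required))
    return broken / len(records) >= threshold
```

If a batch that used to come back complete suddenly has most records missing a field, the selectors for that field are the first thing to check.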

3. Anti-Scraping Techniques

The value of most websites lies in their data. Although it's publicly accessible, they don't want competitors to steal it. This is why they implement techniques to identify bots and prevent unwanted requests. Check out our guide on how to avoid being blocked while scraping to learn more.

What Do You Need To Perform Web Scraping at a Large Scale?

Now, let's see what you need to do and know to set up a large-scale web scraping process. This will include tools, methodologies, and tips for the smartest web scraping.

1. Build a Continuous Scraping Process With Scheduled Tasks

Running many small scrapers is better than using one large spider to crawl everything. Let's assume you design a small one for each type of page on a website. You could launch these scrapers in parallel and extract data from different sections simultaneously.

Also, each can scrape several pages in parallel behind the scenes. This way, you could achieve a double level of parallelism.

Of course, such an approach to web scraping requires an orchestration system. That's because you don't want two scrapers to crawl the same page. That'd mean a waste of time and resources. One way to prevent this is to write each crawled page's URL and the current timestamp to a database.
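The URL-and-timestamp idea can be sketched with SQLite from Python's standard library. The table name `crawled_pages` and the helpers `open_registry` and `claim_url` are assumptions for this example. A scraper calls `claim_url` before crawling and skips the page if the call returns `False`:

```python
import sqlite3
import time


def open_registry(path=":memory:"):
    """Create (or open) the table that records which URLs were already claimed."""
    connection = sqlite3.connect(path)
    connection.execute(
        "CREATE TABLE IF NOT EXISTS crawled_pages ("
        "url TEXT PRIMARY KEY, crawled_at REAL NOT NULL)"
    )
    return connection


def claim_url(connection, url, now=None):
    """Record the URL with a timestamp; return True only for the first claimant."""
    timestamp = time.time() if now is None else now
    with connection:  # commits on success, rolls back on error
        cursor = connection.execute(
            "INSERT OR IGNORE INTO crawled_pages (url, crawled_at) VALUES (?, ?)",
            (url, timestamp),
        )
    return cursor.rowcount == 1
```

The primary key on `url` is what makes the claim safe: a second insert for the same URL is ignored. An in-memory database only works within one process, so scrapers running on several machines would need a shared database server instead.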

Plus, a large-scale system must run continuously. This means crawling all the pages of interest and then scraping them one by one. If you want to learn more, see our guide on web crawling in JavaScript.

2. Premium Web Proxies

Websites log the IP address behind each request they receive. When too many requests come from the same address within a short time interval, that IP gets blocked.

As you can imagine, this represents a problem for your web scraper, especially if it has to scrape thousands of pages from the same website.

To prevent your IP from being exposed and blocked, you can use a proxy server. It acts as an intermediary between your scraper and the server of your target website.

Most web proxies online are free, but these are generally unreliable and slow solutions. This is why your large-scale scraping system should rely on premium proxies. Note that ZenRows provides an excellent premium proxy service.

Premium web proxies offer several features, including rotating IPs. This gives you a fresh IP each time you perform a request, which makes it much less likely that you'll get banned or blocklisted. Premium web proxies also help your scrapers stay anonymous.
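To see what rotation looks like from the scraper's side, here's a small standard-library sketch. The proxy addresses are placeholders, and `proxy_rotation` and `fetch_via_proxy` are hypothetical helper names. Many premium services rotate IPs for you behind a single endpoint, in which case you wouldn't need the rotation part at all:

```python
from itertools import cycle
from urllib.request import ProxyHandler, build_opener

# Placeholder addresses: replace them with the endpoints from your provider.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def proxy_rotation(proxies):
    """Return an iterator that yields proxies in round-robin order, forever."""
    return cycle(proxies)


def fetch_via_proxy(url, proxy, timeout=10.0):
    """Send a GET request for `url` through the given proxy."""
    opener = build_opener(ProxyHandler({"http": proxy, "https": proxy}))
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

A scraper would call `next(rotation)` before each request and pass the result to `fetch_via_proxy`, so consecutive requests leave from different addresses.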

3. Advanced Data Storage Systems

Scraping thousands of web pages means extracting a lot of data. This data can be divided into two categories: raw and processed. In both cases, you need to store them somewhere.

The raw data can be the HTML documents crawled by your spiders. Keeping track of this information is helpful for future scraping iterations. To store it, you can choose one of the many cloud storage services available. They give you virtually unlimited storage space but come at a cost.

Your scraper is likely to extract only a small part of the data contained in an HTML document. This information is then converted into new formats. This is the other type of data, the processed data.

It's generally stored in database rows or aggregated in human-readable formats. The best solution is to save it in a database. Both relational and NoSQL ones will do the job.
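The sketch below shows one possible way to keep the two kinds of data apart. It writes raw HTML to compressed files on local disk (standing in for a cloud storage bucket) and processed records to a SQLite table. The `products` table, its columns, and both function names are assumptions for illustration:

```python
import gzip
import hashlib
import sqlite3
from pathlib import Path


def save_raw_html(directory, url, html):
    """Store the raw HTML as a gzip file named after the SHA-256 of its URL."""
    folder = Path(directory)
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / (hashlib.sha256(url.encode("utf-8")).hexdigest() + ".html.gz")
    with gzip.open(path, "wt", encoding="utf-8") as file:
        file.write(html)
    return path


def save_product(connection, url, title, price):
    """Insert or update one processed record in a relational table."""
    with connection:  # commits on success, rolls back on error
        connection.execute(
            "CREATE TABLE IF NOT EXISTS products ("
            "url TEXT PRIMARY KEY, title TEXT, price REAL)"
        )
        connection.execute(
            "INSERT OR REPLACE INTO products (url, title, price) VALUES (?, ?, ?)",
            (url, title, price),
        )
```

Keeping the raw HTML means you can re-run an improved extractor later without crawling the site again.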

4. Technologies to Bypass Anti-Bot Detection

More and more websites have been adopting anti-bot strategies. That's especially true since many CDN (Content Delivery Network) services now offer built-in protection systems.

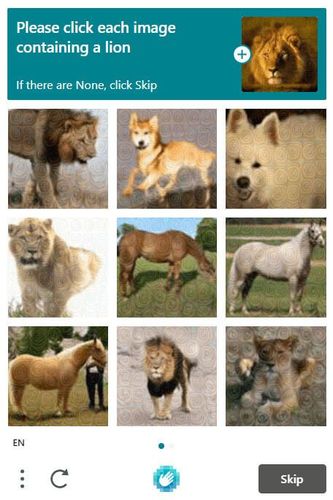

Usually, these anti-bot systems involve completing challenges only humans can handle. For example, this is how CAPTCHAs work. They typically require selecting particular images or objects to grant you access.

Such anti-bot methods prevent automated software from accessing and navigating a website, meaning they represent a significant obstacle to your scrapers. At first glance, they might seem impossible to overcome. But they aren't.

You can bypass the Cloudflare anti-bot system or the Akamai anti-bot technologies. Don't expect easy solutions, though. Moreover, the workaround you're exploiting today may not work in the future.

Anti-bot detection is just one of the anti-scraping protection systems. Your large-scale scraping process may have to deal with several of them. That's why we wrote a list of ways to avoid being blocked while web scraping.

5. Keep Your Scrapers Up To Date

Technology is constantly evolving. As a result, websites, security policies, protection systems, and libraries change. For this reason, keeping your scrapers up to date is crucial. Yet, knowing what to change isn't easy.

To make web scraping at scale easier, you should implement a logging system. This will tell you if everything works as expected or if something goes wrong. Logging will help you understand how to update your scrapers if they no longer work, and ZenRows allows you to log everything easily.
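A basic version of such a logging system can be built with Python's standard `logging` module. In this sketch, `build_logger` and `scrape_with_logging` are hypothetical helpers, and `scrape` stands for whatever function extracts a dictionary of fields from a URL in your project:

```python
import logging


def build_logger(name="scraper", level=logging.INFO):
    """Return a logger that prints timestamped messages to the console."""
    logger = logging.getLogger(name)
    logger.setLevel(level)
    if not logger.handlers:  # avoid duplicate handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
        )
        logger.addHandler(handler)
    return logger


def scrape_with_logging(url, scrape, logger):
    """Run `scrape(url)` and log the outcome; return None when it fails."""
    try:
        record = scrape(url)
    except Exception:
        logger.exception("scrape failed url=%s", url)  # includes the traceback
        return None
    logger.info("scrape ok url=%s fields=%d", url, len(record))
    return record
```

Because failures are logged with the URL and the full traceback, you can see which pages broke and which line of the scraper needs updating.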

What Are Some Tools for Large-Scale Web Scraping?

If you want to perform web scraping at scale, you must be in control of your process. There are so many challenges to face that you're likely to need a system customized to your needs. Building such a custom application is difficult for all the reasons you saw earlier.

Luckily, you don't have to start from scratch. You could use the most popular web scraping libraries and build a large-scale process. To get all the elements, you'd need to subscribe to, adopt, and integrate several different services... which takes time and money.

Or you could use an all-in-one API-based solution such as ZenRows. In this case, a single solution would get you access to premium proxies, anti-bot protection, and CAPTCHA bypass systems, as well as everything you need to perform web scraping. Join ZenRows for free.

Conclusion

Today, you learned everything essential about performing web scraping on a large scale. As shown, large-scale web scraping comes with several challenges, but they all have a solution.

Here's a quick recap. You now know:

- What large-scale web scraping is.

- What challenges it involves, and how to solve them.

- The building blocks of a reliable large-scale web scraping system.

- Why you should adopt an API-based solution to run scraping at scale without trouble.

Try ZenRows for free today and see it for yourself!
