In this jQuery web scraping tutorial, you'll learn how to build a jQuery web crawler. jQuery is one of the most popular JavaScript libraries. Specifically, jQuery enables HTML document traversal and manipulation.

This makes jQuery a great library for crawling web pages and scraping data from them. Here, you'll first find out whether you can use jQuery for client-side scraping. Then, you'll learn how to use it for server-side scraping.

Let's now create a jQuery scraper and achieve your data retrieval goals.

What Is Client-Side Scraping?

Client-side scraping is about retrieving web content directly from your browser. In other words, the scraping logic runs in the front-end, typically as JavaScript.

You can perform it by calling a public API or parsing the page's HTML content. Keep in mind that most websites don't offer public APIs. So, you'll most likely resort to the second option: downloading HTML documents and parsing them to extract data.

Time to see how it works! Let's learn how to do client-side scraping with jQuery.

How Do I Scrape a Web Page With jQuery?

First, you need to download your target page's HTML content. Let's see how to achieve this in jQuery.

We'll fetch Google's homepage and get its content. You can do so with the jQuery get() method, which performs an HTTP GET request and passes the server's response to a callback. Here's how to use it:

```javascript
$.get("https://google.com/", function (html) {
  console.log(html);
});
```

However, this snippet won't work! Unless you run it from a page on google.com, your browser will block it with a CORS (Cross-Origin Resource Sharing) error: No 'Access-Control-Allow-Origin' header is present on the requested resource.

This happens because your browser is the one performing the HTTP request. For security reasons, modern browsers automatically add the Origin HTTP header to cross-origin requests. In detail, they set it to the origin (scheme, domain, and port) of the page your request comes from.

Under CORS rules, the target server decides which origins are allowed to read its responses through the Access-Control-Allow-Origin response header. If that header is missing or doesn't match your origin, the browser won't expose the response to your script. Thus, if your target server doesn't allow your origin, you'll get the CORS error seen above.

That's why, in most cases, you can't scrape third-party websites client-side using JavaScript.
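To make this concrete, here's a deliberately simplified model of the check a browser applies before letting a script read the response to a simple cross-origin GET request. The function name canScriptReadResponse and the example origins are hypothetical. Real browsers also deal with preflight requests, credentials, and redirects, which this sketch ignores.

```javascript
// A deliberately simplified model of the browser's CORS read check for a
// simple, non-credentialed GET request. Real browsers also handle preflight
// requests, credentials, redirects, and more.
function canScriptReadResponse(pageUrl, targetUrl, responseHeaders) {
  // The Origin header value: scheme + host + port of the page running the script
  const origin = new URL(pageUrl).origin;

  // Same-origin requests are not subject to CORS at all
  if (new URL(targetUrl).origin === origin) {
    return true;
  }

  // Header names are assumed to be lowercased, as Node.js does
  const allowOrigin = responseHeaders["access-control-allow-origin"];

  // "*" allows any origin; otherwise the value must match the origin exactly.
  // A missing header (undefined) matches neither, so the script can't read it.
  return allowOrigin === "*" || allowOrigin === origin;
}

// hypothetical page running the scraping script
const page = "https://my-site.example/scraper.html";

console.log(canScriptReadResponse(page, "https://google.com/", {})); // false
console.log(
  canScriptReadResponse(page, "https://google.com/", {
    "access-control-allow-origin": "https://my-site.example",
  })
); // true
console.log(canScriptReadResponse(page, "https://my-site.example/data", {})); // true
```

With an empty header set, as when the target server doesn't allow your origin, the function returns false. That corresponds to the CORS error above.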

This brings us to the following question:

What Is the Best Way to Scrape a Website?

As you just learned, client-side scraping is too limited for security reasons. So, currently, the most effective way to retrieve data is through server-side scraping.

Server-side scraping solves the CORS problem seen earlier, among many others. That's because your server, not your browser, executes the HTTP requests, and CORS is a restriction enforced by browsers.

You may think JavaScript is a front-end technology that can't be used on your server. That's not true. You can actually build a JS web scraper with Node.js.
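To see that CORS is a browser rule and doesn't apply to server-side code, here's a minimal sketch that uses only the built-in Node.js http module. It starts a throwaway local server that sends no Access-Control-Allow-Origin header, then requests a page from it. The local server and its tiny HTML body are stand-ins for a real target site.

```javascript
const http = require("http");

// Start a throwaway local server that, like most sites, sends no
// Access-Control-Allow-Origin header. Port 0 lets the OS pick a free port.
function startServer() {
  return new Promise((resolve) => {
    const server = http.createServer((req, res) => {
      res.writeHead(200, {
        "Content-Type": "text/html; charset=utf-8",
        // close the socket after the response so the process can exit promptly
        Connection: "close",
      });
      res.end("<h2>Bulbasaur</h2>");
    });
    server.listen(0, "127.0.0.1", () => resolve(server));
  });
}

// Perform a GET request from Node.js and collect the whole response body
function get(url) {
  return new Promise((resolve, reject) => {
    http
      .get(url, (res) => {
        let body = "";
        res.setEncoding("utf8");
        res.on("data", (chunk) => (body += chunk));
        res.on("end", () =>
          resolve({
            status: res.statusCode,
            allowOrigin: res.headers["access-control-allow-origin"],
            body,
          })
        );
      })
      .on("error", reject);
  });
}

async function demo() {
  const server = await startServer();
  try {
    const { port } = server.address();
    return await get(`http://127.0.0.1:${port}/`);
  } finally {
    server.close();
  }
}

// status 200, no CORS header, and yet the body is fully readable
demo().then((result) => console.log(result));
```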

Is this also true for jQuery?

Can You Use jQuery With Node.js?

Yes! Install the jquery npm library with the following command, and you're good to go:

```bash
npm install jquery
```

You can now use it to build a real jQuery spider. Let's see how!

How Can You Use jQuery to Scrape Data From a Website?

Get ready to do some web scraping with jQuery! We'll use our scraper to retrieve data from ScrapeMe, a static-content site perfect for testing our skills.

Here's what our target page looks like:

You can find the code in this GitHub repo. Clone it and install the project's dependencies with the following commands:

```bash
git clone https://github.com/Tonel/web-scraper-jquery
cd web-scraper-jquery
npm install
```

Now, launch the spider with:

```bash
npm run start
```

Fantastic! Keep reading to learn how to create your own jQuery web scraper with Node.js.

Prerequisites

Here's what you need to follow this tutorial:

- Node.js and npm >= 8.0
- jquery >= 3.6.1
- jsdom >= 20.0.0

If you don't have Node.js on your system, you can download it from the official Node.js website. Then, install both libraries in your project with npm install jquery jsdom.

jQuery requires a window with a document to work. Since Node.js doesn't provide such a window natively, you can mock one with jsdom.

jsdom is a JS implementation of many web standards for Node.js. Specifically, its goal is to emulate a web browser for testing and scraping purposes.

Now you can use jQuery in Node.js for scraping!

```javascript
const { JSDOM } = require("jsdom");

// initialize JSDOM on the "https://target-domain.com/" page
// to avoid CORS problems
const { window } = new JSDOM("", {
  url: "https://target-domain.com/",
});

// jQuery needs a window with a document: pass it the jsdom one
const $ = require("jquery")(window);

// scraping https://target-domain.com/ web pages
```

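Note that you must specify the url option when initializing JSDOM to avoid CORS issues. jsdom applies CORS checks to the requests made through its XMLHttpRequest implementation, so setting url to the target domain makes your requests to that domain same-origin.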

Retrieve the HTML Document With the jQuery get() Function

As seen earlier, you can download an HTML document with the jQuery get() function:

```javascript
const { JSDOM } = require("jsdom");

// initialize JSDOM on the "https://scrapeme.live/" page
// to avoid CORS problems
const { window } = new JSDOM("", {
  url: "https://scrapeme.live/",
});

const $ = require("jquery")(window);

// download the shop page and print its HTML
$.get("https://scrapeme.live/shop/", function (html) {
  console.log(html);
});
```

This'll print:

```html
<!doctype html>
<html lang="en-GB">
<head>
<meta charset="UTF-8">
<meta name="viewport" content="width=device-width, initial-scale=1, maximum-scale=2.0">
<link rel="profile" href="http://gmpg.org/xfn/11">
<link rel="pingback" href="https://scrapeme.live/xmlrpc.php">
<title>Products – ScrapeMe</title>
<!-- omitted for brevity ... -->
```

Neat! That's precisely what ScrapeMe's HTML content looks like.

Extract the Desired HTML Element in jQuery With find()

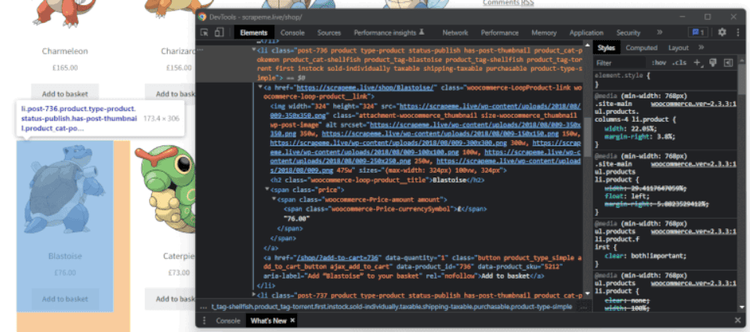

Now, let's retrieve the info associated with every product. Right-click on a product on the page and select the "Inspect" option to open the DevTools window. Here's what you should see:

As you can see, the CSS selector identifying the product elements is li.product. You can retrieve the list of these HTML elements with find() as follows:

```javascript
$.get("https://scrapeme.live/shop/", function (html) {
  const productList = $(html).find("li.product");
});
```

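In detail, the find() jQuery function returns the descendants of the current set of elements that match the CSS selector, jQuery object, or DOM element passed as a parameter. Here, $(html) turns the downloaded HTML string into DOM elements, and find() searches within them.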

```javascript
$.get("https://scrapeme.live/shop/", function (html) {
  // retrieve the list of all HTML products
  const productHTMLElements = $(html).find("li.product");
});
```

Note that each product HTML element contains a URL, a name, an image, and a price. You can find this info in an a, h2, img, and span element, respectively.

How to extract this data with find()? See below:

```javascript
$.get("https://scrapeme.live/shop/", function (html) {
  // retrieve the list of all HTML products
  const productHTMLElements = $(html).find("li.product");

  const products = [];

  // populate products with the scraped data
  productHTMLElements.each((i, productHTML) => {
    // scrape data from the product HTML element
    const product = {
      name: $(productHTML).find("h2").text(),
      url: $(productHTML).find("a").attr("href"),
      image: $(productHTML).find("img").attr("src"),
      price: $(productHTML).find("span").first().text(),
    };

    products.push(product);
  });

  // print the scraped data as indented JSON
  console.log(JSON.stringify(products, null, 2));

  // store the product data on a db ...
});
```

You can get all the data you need with the jQuery attr() and text() functions. The good news is that this requires only a few lines of code.

attr() returns the value of the given attribute for the first matched element, while text() returns the combined text content of the matched elements, including their descendants.

When run, this would print:

```json
[
  {
    "name": "Bulbasaur",
    "url": "https://scrapeme.live/shop/Bulbasaur/",
    "image": "https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png",
    "price": "£63.00"
  },
  {
    "name": "Ivysaur",
    "url": "https://scrapeme.live/shop/Ivysaur/",
    "image": "https://scrapeme.live/wp-content/uploads/2018/08/002-350x350.png",
    "price": "£87.00"
  },
  // ...
  {
    "name": "Beedrill",
    "url": "https://scrapeme.live/shop/Beedrill/",
    "image": "https://scrapeme.live/wp-content/uploads/2018/08/015-350x350.png",
    "price": "£168.00"
  },
  {
    "name": "Pidgey",
    "url": "https://scrapeme.live/shop/Pidgey/",
    "image": "https://scrapeme.live/wp-content/uploads/2018/08/016-350x350.png",
    "price": "£159.00"
  }
]
```

At this point, you'd typically save the scraped data to a database. You can also extend your crawling logic to go through all paginated pages, as shown in our web crawling tutorial in JavaScript.
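For example, the pagination loop could look like the following minimal sketch. It assumes two caller-supplied helpers, both hypothetical: fetchPage(url), which resolves to a page's HTML, and getNextPageUrl(html), which returns the next-page link or null. In this tutorial's setup, fetchPage could simply be url => $.get(url), because the object $.get() returns is thenable. getNextPageUrl could use a jQuery selector such as $(html).find("a.next").attr("href"), but that selector is an assumption, so check the actual pagination markup in DevTools. To keep the example self-contained, the usage below crawls an in-memory fake site instead of making real HTTP requests.

```javascript
// Follow "next page" links until there are none left, a page repeats,
// or maxPages is reached.
// fetchPage(url) -> Promise<string> downloads a page's HTML (hypothetical helper)
// getNextPageUrl(html) -> string | null extracts the next-page link (hypothetical helper)
async function crawlPaginated(startUrl, fetchPage, getNextPageUrl, maxPages = 50) {
  const visited = new Set();
  const pages = [];
  let url = startUrl;

  while (url !== null && !visited.has(url) && pages.length < maxPages) {
    visited.add(url);
    const html = await fetchPage(url);
    pages.push({ url, html });

    // resolve a possibly relative next-page link against the current page URL
    const next = getNextPageUrl(html);
    url = next ? new URL(next, url).href : null;
  }

  return pages;
}

// usage with an in-memory fake site instead of real HTTP requests
const fakeSite = {
  "https://example.com/shop/": '<a class="next" href="/shop/page/2/">Next</a>',
  "https://example.com/shop/page/2/": '<a class="next" href="/shop/page/3/">Next</a>',
  "https://example.com/shop/page/3/": "<p>last page</p>",
};
const fetchPage = async (url) => fakeSite[url];

// stand-in for a jQuery call such as $(html).find("a.next").attr("href")
const getNextPageUrl = (html) => {
  const match = /class="next" href="([^"]+)"/.exec(html);
  return match ? match[1] : null;
};

crawlPaginated("https://example.com/shop/", fetchPage, getNextPageUrl).then((pages) =>
  console.log(pages.map((page) => page.url))
);
```

The visited set and the maxPages limit are defensive choices. They stop the loop if a site's "next" link ever points back to a page that was already crawled.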

Et voilà! You just learned how to scrape a page and retrieve all its product info.

Get the HTML Element Content With the jQuery html() Function

When scraping, consider storing the original HTML of each DOM element of interest. That way, you can extract more data from those elements later without downloading the pages again. Here's how to achieve this with the jQuery html() function:

```javascript
const product = {
  name: $(productHTML).find("h2").text(),
  url: $(productHTML).find("a").attr("href"),
  image: $(productHTML).find("img").attr("src"),
  price: $(productHTML).find("span").first().text(),
  // store the original HTML content
  html: $(productHTML).html(),
};
```

For Blastoise, this would contain:

```json
{
  "name": "Blastoise",
  "url": "https://scrapeme.live/shop/Blastoise/",
  "image": "https://scrapeme.live/wp-content/uploads/2018/08/009-350x350.png",
  "price": "£76.00",
  "html": "\n\t<a href=\"https://scrapeme.live/shop/Blastoise/\" class=\"woocommerce-LoopProduct-link woocommerce-loop-product__link\"><img width=\"324\" height=\"324\" src=\"https://scrapeme.live/wp-content/uploads/2018/08/009-350x350.png\" class=\"attachment-woocommerce_thumbnail size-woocommerce_thumbnail wp-post-image\" alt=\"\" srcset=\"https://scrapeme.live/wp-content/uploads/2018/08/009-350x350.png 350w, https://scrapeme.live/wp-content/uploads/2018/08/009-150x150.png 150w, https://scrapeme.live/wp-content/uploads/2018/08/009-300x300.png 300w, https://scrapeme.live/wp-content/uploads/2018/08/009-100x100.png 100w, https://scrapeme.live/wp-content/uploads/2018/08/009-250x250.png 250w, https://scrapeme.live/wp-content/uploads/2018/08/009.png 475w\" sizes=\"(max-width: 324px) 100vw, 324px\"><h2 class=\"woocommerce-loop-product__title\">Blastoise</h2>\n\t<span class=\"price\"><span class=\"woocommerce-Price-amount amount\"><span class=\"woocommerce-Price-currencySymbol\">£</span>76.00</span></span>\n</a><a href=\"/shop/?add-to-cart=736\" data-quantity=\"1\" class=\"button product_type_simple add_to_cart_button ajax_add_to_cart\" data-product_id=\"736\" data-product_sku=\"5212\" aria-label=\"Add “Blastoise” to your basket\" rel=\"nofollow\">Add to basket</a>"
}
```

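Note that the html field stores the original HTML content of the product. Specifically, html() returns the element's inner HTML, so the enclosing li tag isn't included. If you need it, read the DOM element's outerHTML property instead (productHTML.outerHTML). If you want to retrieve more data later, you can do it from this field without crawling the entire website again.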
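The stored HTML above includes the add-to-cart link, whose href (/shop/?add-to-cart=736) is relative. When you later extract links like this with attr("href"), you'll usually want absolute URLs. Here's a minimal sketch using the WHATWG URL class built into Node.js. toAbsoluteUrl is a hypothetical helper name, and rejecting links that aren't http(s) is a design choice of this sketch.

```javascript
// Turn an href scraped with attr("href") into an absolute URL, resolving it
// against the URL of the page it came from. Returns null for hrefs that
// can't be parsed or that don't point to an http(s) resource.
function toAbsoluteUrl(href, pageUrl) {
  let url;
  try {
    url = new URL(href, pageUrl);
  } catch {
    // new URL() throws a TypeError on unparsable input
    return null;
  }
  return url.protocol === "http:" || url.protocol === "https:" ? url.href : null;
}

const pageUrl = "https://scrapeme.live/shop/";

console.log(toAbsoluteUrl("/shop/?add-to-cart=736", pageUrl));
// https://scrapeme.live/shop/?add-to-cart=736
console.log(toAbsoluteUrl("https://scrapeme.live/shop/Blastoise/", pageUrl));
// https://scrapeme.live/shop/Blastoise/ (already absolute, so unchanged)
```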

Use Regex in jQuery

Another effective way to retrieve your target data from an HTML document is to combine jQuery with regular expressions. A regex, or regular expression, is a sequence of characters that defines a text search pattern.

Let's assume you want to retrieve the price of each product element. If the <span> element containing the price doesn't have a unique CSS class, extracting this info can be challenging.

A regex can help you solve this. Let's see how:

```javascript
// inside the $.get() callback, where html is the downloaded page
const prices = new Set();

// use a regex to identify price span HTML elements
$(html).find("span").each((i, spanHTMLElement) => {
  const text = $(spanHTMLElement).text();

  // keep only HTML elements whose text is a price, e.g. "£63.00"
  // (the dot is escaped so that it matches a literal "." only)
  if (/^£\d+\.\d{2}$/.test(text)) {
    // add the scraped price to the prices set
    prices.add(text);
  }
});

// use the price data to achieve something ...
```

At the end of the loop, prices will contain the following results. Since prices is a Set, each distinct price is stored only once, in insertion order:

```json
["£0.00","£63.00","£87.00","£105.00","£48.00","£165.00","£156.00","£130.00","£123.00","£76.00","£73.00","£148.00","£162.00","£25.00","£168.00","£159.00"]
```
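Scraped prices are strings. To compare or sum them, you'll want numbers. Here's a minimal sketch that reuses the same kind of regex with capture groups. priceToPence is a hypothetical helper, and storing integer pence rather than floating-point pounds is a design choice that avoids rounding errors.

```javascript
// Matches strings such as "£63.00": a pound sign, one or more digits,
// a literal dot (escaped, since a bare "." matches any character),
// and exactly two decimal digits. The groups capture pounds and pence.
const PRICE_REGEX = /^£(\d+)\.(\d{2})$/;

// Convert a scraped price string into an integer amount of pence.
// Integers avoid floating-point rounding issues when summing prices.
// Returns null when the text isn't a price.
function priceToPence(text) {
  const match = PRICE_REGEX.exec(text.trim());
  if (match === null) {
    return null;
  }
  return Number(match[1]) * 100 + Number(match[2]);
}

// a few of the prices scraped above
const scrapedPrices = ["£63.00", "£87.00", "£168.00", "£159.00"];
const pence = scrapedPrices.map(priceToPence);

console.log(pence); // [ 6300, 8700, 16800, 15900 ]
console.log(Math.max(...pence) / 100); // 168
```

The escaped dot matters. An unescaped pattern such as /^£\d+.\d{2}$/ would also accept a string like "£63x00".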

That's it! Congratulations, you've mastered all the building blocks needed to create a jQuery web scraper.

What Are the Benefits of jQuery for Web Scraping?

Considering how popular jQuery is, you're likely familiar with it. You probably know how to use jQuery to traverse the DOM. That's its primary benefit for web scraping!

After all, scraping is about selecting HTML elements and extracting their data. If you already use jQuery to select elements, you already know most of what you need.

It's also one of the most adopted libraries for DOM manipulation. That's because it provides many features to extract and change data in the DOM effortlessly. This makes it a perfect tool for scraping.

jQuery can even handle the HTTP requests itself through get(). So, apart from jsdom, which provides the window it needs in Node.js, it doesn't require other dependencies to build a complete scraping application. However, you might prefer to use an HTTP client such as Axios alongside it. Check out our guide on web scraping with Axios to learn more about it.

Conclusion

You now know the essentials of web scraping with jQuery, from basic to more advanced techniques. As shown above, building a web scraper in jQuery isn't that difficult, but doing it client-side has limitations.

To avoid these restrictions, you have to use jQuery with Node.js.

Here's a quick recap of what you learned:

- Why client-side scraping may not be possible.
- How to use jQuery with Node.js.
- How to perform web scraping with find() and regex in jQuery.
- Why jQuery is an excellent tool for web scraping.

To master more useful scraping techniques, check out our JavaScript web scraping guide.

And if you're looking for a tool to take care of everything for you, use ZenRows. Scrape data with a single API request, easily bypassing all anti-bot protections. Try it for free today!
