Web scraping is the process of extracting content and data from a website. In this tutorial, we'll learn how to do it with the help of Axios, an awesome HTTP client that works in both Node.js and the browser.

What Does Axios Do?

Axios allows you to make requests to websites and servers. This is similar to what a browser does, but instead of presenting the results visually, Axios lets you manipulate the response using code. That is very useful in the context of web scraping.

In this tutorial, we'll extract the names and prices of some products from ScrapeMe, an e-commerce website designed to be scraped, to learn the process.

Once you learn the technique, you can use Axios to scrape any website you want.

Before Getting Started

- You should have some programming experience, ideally in JavaScript.

- You should have experience using CSS selectors for accessing DOM elements.

- You should have Node.js and npm installed on your machine. If you need help, you can refer to the guide on downloading and installing Node.js and npm.

What Is the Purpose of Axios Web Scraping?

Sometimes, you can get the data you need from a web API in a structured format such as JSON. On many occasions, however, the only way to access certain data is by getting it from a public website.

Collecting that data by hand can be costly and time-consuming, but Axios web scraping allows you to automate the process and get the data and content from a website efficiently.

Even if you're a front-end developer who has used Axios for ages, you may be surprised that it's possible to scrape a website with it.

In fact, it's a great idea because Axios runs in browsers and Node.js and has great TypeScript support, solid documentation, and many examples on the web.

Initial Setup

Create a new folder for your Axios web scraping project. We named ours "scraper", but you can come up with a more imaginative name. Open your terminal in that folder and run the following commands to set up a new npm package and install Axios in it.

npm init -y

npm install axios

Using your favorite IDE or code editor, create a new file named "index.js" at the root of that folder and paste the following code into it.

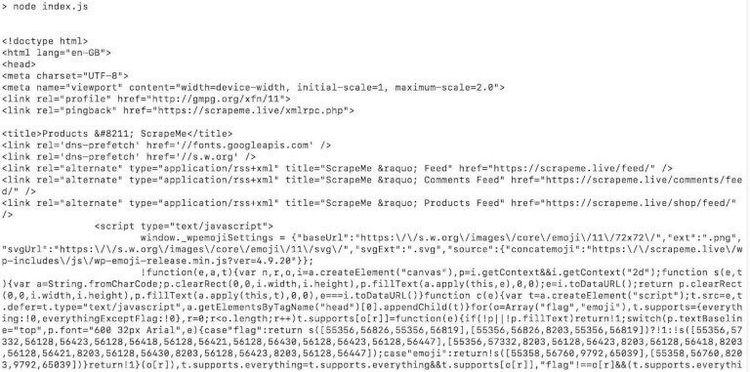

const axios = require('axios');

axios.get('https://scrapeme.live/shop/')

.then(({ data }) => console.log(data));

To run our code, we need to tweak the package.json file. Inside the scripts section, add a new script named "start" that runs index.js. Your package.json file should look similar to this.

{

"name": "scraper",

"version": "1.0.0",

"description": "",

"main": "index.js",

"scripts": {

"test": "echo \"Error: no test specified\" && exit 1",

"start": "node index.js"

},

"keywords": [],

"author": "",

"license": "ISC",

"dependencies": {

"axios": "^0.27.2"

}

}

Type npm start in your terminal to run the code. If it works, you'll see the full HTML of the webpage printed in your terminal.

That was easy, but not very useful yet, because the information we want is mixed in with the rest of the page's markup.
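
If the request fails instead, Axios rejects the promise. The sketch below shows one way to tell the common failure modes apart, based on the `response` and `request` properties that Axios attaches to its errors. The helper name `describeRequestError` is made up for this illustration, and the sample error objects are hand-built stand-ins rather than real Axios errors.

```javascript
// Hypothetical helper (not part of Axios): turn a rejected request into a readable message.
function describeRequestError(error) {
  if (error.response) {
    // The server answered, but with a status outside the 2xx range.
    return `Server responded with status ${error.response.status}`;
  }
  if (error.request) {
    // The request was sent, but no response arrived (timeout, DNS failure, ...).
    return 'No response received from the server';
  }
  // Something went wrong before the request could be sent.
  return `Request could not be sent: ${error.message}`;
}

// Intended usage in index.js (assumes axios is installed):
// axios.get('https://scrapeme.live/shop/')
//   .then(({ data }) => console.log(data))
//   .catch((error) => console.error(describeRequestError(error)));

// Hand-built stand-ins for the three cases:
console.log(describeRequestError({ response: { status: 403 } }));
console.log(describeRequestError({ request: {} }));
console.log(describeRequestError({ message: 'Invalid URL' }));
```

A status such as 403 on a page that loads fine in your browser is often the first hint that the site is filtering automated requests.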

That is where Cheerio comes in handy. It's a library that provides us with an efficient implementation of core jQuery designed specifically for the server.

What Does Cheerio Do?

In the context of Axios web scraping, Cheerio is useful for selecting specific HTML elements and extracting their information.

Then you can organize or transform that information according to your needs. In our case, we'll use it to get the names and prices of the products on our target website.

Using Cheerio with Axios

First, install Cheerio by running the following command in the same folder that we have been using.

npm install cheerio

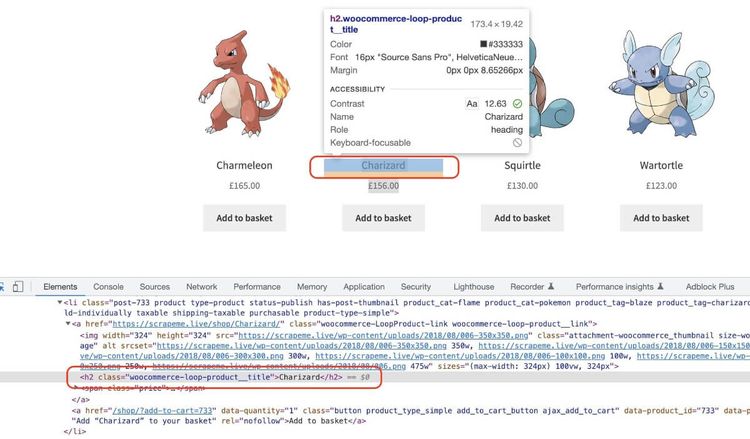

Now we need to tell Cheerio what information we're interested in. To find out, inspect the webpage with your browser's developer tools, for example, in Chrome.

As you can see, the elements containing the products' names use the class woocommerce-loop-product__title.

Let's modify our index.js file to select those elements, so it looks like this.

const axios = require('axios');

const cheerio = require('cheerio');

axios.get('https://scrapeme.live/shop/')

.then(({ data }) => {

const $ = cheerio.load(data);

const pokemonNames = $('.woocommerce-loop-product__title')

.map((_, product) => {

const $product = $(product);

return $product.text()

})

.toArray();

console.log(pokemonNames)

});

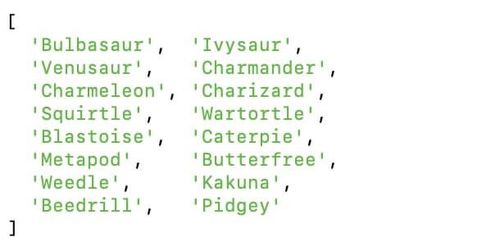

Are you ready to run the code allowing you to do your first Axios web scraping?

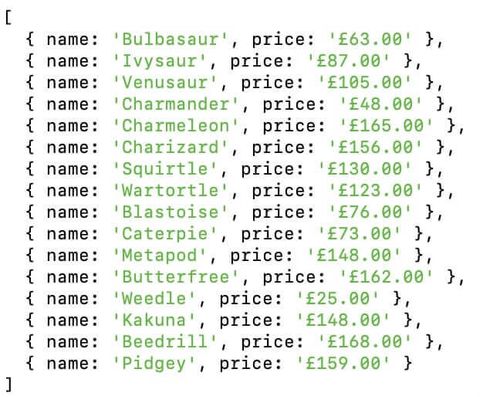

If the answer is yes, run the code, and if everything goes right, you'll see a nice list of products on the screen.

Let's modify our code a bit to get the products' prices as well.

First, we'll target a parent element in the DOM that contains both the product's name and its price. The selector li.product is enough for that.

Within each product, the price is in an element with the class woocommerce-Price-amount, so we'll update our index.js file to look similar to this.

const axios = require('axios');

const cheerio = require('cheerio');

axios.get('https://scrapeme.live/shop/')

.then(({ data }) => {

const $ = cheerio.load(data);

const pokemons = $('li.product')

.map((_, pokemon) => {

const $pokemon = $(pokemon);

const name = $pokemon.find('.woocommerce-loop-product__title').text()

const price = $pokemon.find('.woocommerce-Price-amount').text()

return { name, price };

})

.toArray();

console.log(pokemons)

});

Running this will output a nice list of products retrieved using Axios web scraping.
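
The scraped prices are strings that include a currency symbol. As a minimal sketch of a formatting step, the snippet below converts them to numbers and sorts the products by price. The sample array uses made-up values in the same { name, price } shape as the script's output, and `parsePrice` is a hypothetical helper name.

```javascript
// Hypothetical helper: strip everything except digits and the decimal point.
// Assumes "." is the decimal separator; thousands separators such as "," are dropped.
function parsePrice(text) {
  return Number.parseFloat(text.replace(/[^0-9.]/g, ''));
}

// Made-up sample in the { name, price } shape produced by the scraper.
const scraped = [
  { name: 'Product A', price: '£63.00' },
  { name: 'Product B', price: '£1,087.50' },
  { name: 'Product C', price: '£9.99' },
];

// Convert each price to a number, then sort from cheapest to most expensive.
const sortedByPrice = scraped
  .map(({ name, price }) => ({ name, price: parsePrice(price) }))
  .sort((a, b) => a.price - b.price);

console.log(sortedByPrice);
```

In the real script, you would apply the same map and sort to the pokemons array before logging it.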

What Is the Website You're Trying to Scrape?

The technique we just used works fine for simple websites. However, other sites try to block scrapers. In those cases, it helps to make our requests look similar to those sent by real browsers.

One of the most basic checks websites perform is inspecting the User-Agent header. That string tells the server about the operating system, vendor, and version of the client making the request.

The exact User-Agent string varies by browser and platform. In our case, the browser sends the following.

Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36

This "business card" tells a website that it's being accessed by Chrome version 104 on an Intel Mac reporting macOS 10.15.7 (Catalina).
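
To make that reading concrete, here is a rough sketch of how the same details could be pulled out of the string above with two regular expressions. `summarizeUserAgent` is a hypothetical helper written for this illustration; real servers may parse the header quite differently.

```javascript
// Hypothetical helper: pull the platform and Chrome version out of a User-Agent string.
function summarizeUserAgent(userAgent) {
  // The first parenthesized group describes the platform,
  // e.g. "Macintosh; Intel Mac OS X 10_15_7".
  const platform = userAgent.match(/\(([^)]+)\)/);
  // Chrome advertises itself as "Chrome/<major>.<minor>.<build>.<patch>".
  const chrome = userAgent.match(/Chrome\/(\d+)/);
  return {
    platform: platform ? platform[1] : null,
    chromeMajorVersion: chrome ? Number(chrome[1]) : null,
  };
}

const userAgent = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36';

console.log(summarizeUserAgent(userAgent));
```

A client that sends no browser-like User-Agent yields null for both fields here, which is exactly the kind of signal a basic check can act on.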

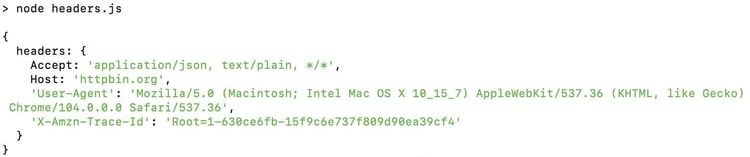

To send additional headers with Axios, we can pass a config object as the second argument to axios.get. We can then check that Axios is actually sending these headers using httpbin.

So let's create a new file named headers.js in the root folder of our project with the following content.

const axios = require('axios');

const config = {

headers: {

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36',

},

};

axios.get('https://httpbin.org/headers', config)

.then(({ data }) => {

console.log(data)

});

We also need to create a new command in our package.json file. You can name it "headers" like in the following snippet.

{

"name": "scraper",

"version": "1.0.0",

"description": "",

"main": "index.js",

"scripts": {

"test": "echo \"Error: no test specified\" && exit 1",

"start": "node index.js",

"headers": "node headers.js"

},

"keywords": [],

"author": "",

"license": "ISC",

"dependencies": {

"axios": "^0.27.2",

"cheerio": "^1.0.0-rc.12"

}

}

Run it on the terminal with the command npm run headers, and the header we sent should appear on the screen.
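
To confirm this in code rather than by eye, one option is to look the header up in the response body. The sketch below assumes the { headers: { ... } } shape that httpbin returns. `findEchoedHeader` is a hypothetical helper, and the sample object is hand-written rather than captured output.

```javascript
// Hypothetical helper: look up a header in httpbin's echoed response, ignoring case.
function findEchoedHeader(echo, name) {
  const entry = Object.entries(echo.headers || {})
    .find(([key]) => key.toLowerCase() === name.toLowerCase());
  return entry ? entry[1] : undefined;
}

// Hand-written sample in the shape httpbin returns.
const sampleEcho = {
  headers: {
    Host: 'httpbin.org',
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36',
  },
};

console.log(findEchoedHeader(sampleEcho, 'user-agent'));
```

In headers.js, you could call findEchoedHeader(data, 'User-Agent') inside the then callback and compare the result with the value in config.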

For a better chance of avoiding blocks, send additional headers besides the User-Agent. You can visit httpbin with your browser to check all the headers it sends. The response should look similar to this.

{

"headers": {

"Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",

"Accept-Encoding": "gzip, deflate, br",

"Accept-Language": "en-US,en;q=0.9",

"Host": "httpbin.org",

"Sec-Ch-Ua": "\"Chromium\";v=\"104\", \" Not A;Brand\";v=\"99\", \"Google Chrome\";v=\"104\"",

"Sec-Ch-Ua-Mobile": "?0",

"Sec-Ch-Ua-Platform": "\"macOS\"",

"Sec-Fetch-Dest": "document",

"Sec-Fetch-Mode": "navigate",

"Sec-Fetch-Site": "none",

"Sec-Fetch-User": "?1",

"Upgrade-Insecure-Requests": "1",

"User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36",

"X-Amzn-Trace-Id": "Root=1-630ce94f-289bc1c678d8cd7153e42f5a"

}

}

You can start adding some of those headers to see whether they improve your results with the website you're trying to scrape. If you want to check the complete code of the examples we have used, you can find it on this Axios scraping repository.
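
As a starting point, the sketch below builds an Axios config from a few of the browser headers listed above. Which headers to include is a judgment call and an assumption here. Host is left out because the HTTP client sets it, and Accept-Encoding is omitted as a precaution, since advertising an encoding that the client cannot decode could yield unreadable responses. `buildRequestConfig` is a hypothetical helper name.

```javascript
// Header values copied from the browser output; adjust them to match your own browser.
const browserHeaders = {
  'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
  'Accept-Language': 'en-US,en;q=0.9',
  'Upgrade-Insecure-Requests': '1',
  'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36',
};

// Hypothetical helper: build an Axios request config, letting callers override single headers.
function buildRequestConfig(overrides = {}) {
  return { headers: { ...browserHeaders, ...overrides } };
}

// Intended usage (assumes axios is installed):
// axios.get('https://httpbin.org/headers', buildRequestConfig())
//   .then(({ data }) => console.log(data));

console.log(buildRequestConfig({ 'Accept-Language': 'en-GB,en;q=0.9' }));
```

Pointing the request at httpbin first, as in headers.js, lets you confirm what is actually sent before you try the target website.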

Avoiding Blocks

Keep in mind that header checking isn't the only method websites use to identify crawlers and scrapers.

For example, you can get blocked or banned if you make too many requests from the same IP address. In that case, you can configure Axios to use a proxy server that acts as an intermediary between your Axios web scraping script and the website host.

Another common problem arises when the target website is a Single Page Application, for example, when you use Axios to scrape a React website.

In that situation, Axios only receives the HTML sent by the server, which might not contain the data you need, because the data is fetched by JavaScript code that runs in the end user's browser. Using a headless browser or a web scraping API is probably a better solution for such sites.
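
Axios accepts a `proxy` option in its request config, with `protocol`, `host`, `port`, and optional `auth` fields. The sketch below only builds such a config. The `withProxy` helper is hypothetical, and the address 127.0.0.1:8080 is a placeholder that you would replace with a proxy you actually have access to.

```javascript
// Hypothetical helper: build an Axios request config that routes traffic through a proxy.
function withProxy({ protocol = 'http', host, port, username, password }) {
  const proxy = { protocol, host, port };
  if (username && password) {
    // Credentials are only attached when both parts are provided.
    proxy.auth = { username, password };
  }
  return { proxy };
}

// Placeholder proxy details, not a working proxy.
const config = withProxy({ host: '127.0.0.1', port: 8080 });
console.log(config);

// Intended usage (assumes axios is installed):
// axios.get('https://scrapeme.live/shop/', config)
//   .then(({ data }) => console.log(data.length));
```

Whether a given proxy works for a given site depends on the proxy itself, so treat this as a configuration shape rather than a guaranteed way around blocks.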

Tip: For more information on how to configure Axios to use a proxy and how to scrape using a headless browser, read our guide on web scraping with JavaScript and Node.js.

Conclusion

As we saw in this tutorial, web scraping can be easy or challenging, depending on the website you're trying to scrape.

Sometimes, all you need to do is:

- Fire the request with Axios.

- Get the data in a callback.

- Select the relevant parts with Cheerio.

- Format the information with vanilla JavaScript.

But if the target website implements anti-scraping measures, you need to use more advanced techniques. Some of these techniques include adding HTTP headers or using proxies.

If you prefer to focus on generating value from the data you scrape instead of bypassing anti-bot systems, try a web scraping API with Axios.

ZenRows offers a professional and easy-to-use suite of tools for web scraping. The best part? Every plan includes automatic data extraction, smart rotating proxies, anti-bot & CAPTCHA bypass, and JavaScript rendering, among other useful features. Try it for free now.
