With millions of weekly downloads, Puppeteer is the most popular headless browser for Node.js. While it makes it possible to scrape dynamic sites, your script might still get identified as a bot and your IP blocked. Learn how to avoid that with a Puppeteer proxy!

Let's jump into it!

What Is a Puppeteer Proxy

A proxy acts as an intermediary between a client and a server. When a client makes a request through a proxy, the proxy forwards it to the server. The target site then sees the request as coming from the proxy server, not from the original client. As a result, the client's IP address stays hidden from the target.

Most tools that make web requests support proxies, including headless browsers. When your Node.js script visits a site with Puppeteer, a proxy hides your machine's IP. Plus, it helps you get around IP blocks and geographic restrictions.

Read on to find out how to set up a proxy in Puppeteer for web scraping!

Prerequisites

If you don't have Node.js installed on your machine, download the latest LTS version. Double-click on the installer and follow the wizard.

Verify that Node.js works:

```bash
node -v
```

It should print something like this:

```
v18.16.0
```

Next, initialize a Node.js project (for example, with npm init -y) and add the puppeteer npm package to its dependencies:

```bash
npm install puppeteer
```

Use Puppeteer to control Chrome with the snippet below. It imports the scraping library, initializes a headless browser instance, and instructs it to visit a sample target page.

```javascript
const puppeteer = require('puppeteer')

async function scrapeData() {
  // launch a browser instance
  const browser = await puppeteer.launch()

  // open a new page in the current browser context
  const page = await browser.newPage()

  // visit the target page in the browser
  await page.goto('https://developer.chrome.com/')

  // scraping logic...

  await browser.close()
}

scrapeData()
```

Great! You just saw how to get started with Puppeteer in Node.js. It's time to learn how to use it with a proxy!

How Do I Add a Proxy to Puppeteer?

To set a proxy in Puppeteer, do the following:

- Get a valid proxy server URL.
- Specify it in the --proxy-server Chrome flag.
- Connect to the target page.

Let's go through the entire procedure step by step.

First, retrieve the URL of a proxy server from Free Proxy List. Then, configure Puppeteer to start Chrome with the --proxy-server option:

```javascript
// free proxy server URL
const proxyURL = 'http://64.225.8.82:9995'

// launch a browser instance with the
// --proxy-server flag enabled
const browser = await puppeteer.launch({
  args: [`--proxy-server=${proxyURL}`]
})
```

The controlled Chrome instance will run all requests via the proxy server set in the flag.
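Before passing a URL to the flag, it can help to validate it. The sketch below uses a hypothetical helper, toProxyServerArg() (not part of Puppeteer), built only on Node's built-in URL class. It assumes the schemes to allow are http, https, socks4, and socks5, and it rejects embedded credentials because Chrome ignores them in this flag, as discussed in the authentication section.

```javascript
// hypothetical helper: turn a proxy URL into a Chrome --proxy-server argument
// assumption: only these schemes are worth passing to the flag
const SUPPORTED_SCHEMES = new Set(['http:', 'https:', 'socks4:', 'socks5:'])

function toProxyServerArg(proxyURL) {
  // new URL() throws a TypeError if the string is not a valid URL
  const url = new URL(proxyURL)
  if (!SUPPORTED_SCHEMES.has(url.protocol)) {
    throw new Error(`Unsupported proxy scheme: ${url.protocol}`)
  }
  if (url.username || url.password) {
    // Chrome ignores credentials in this flag, so refuse them explicitly
    throw new Error('Credentials are not supported in --proxy-server')
  }
  // url.host includes the port when a non-default one is specified
  return `--proxy-server=${url.protocol}//${url.host}`
}

console.log(toProxyServerArg('http://64.225.8.82:9995'))
// --proxy-server=http://64.225.8.82:9995
```

You would then pass the result in the args array of puppeteer.launch() instead of building the string by hand.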

To make sure the proxy works, use https://httpbin.org/ip as the target page:

```javascript
await page.goto('https://httpbin.org/ip')
```

This endpoint returns, as a small JSON document, the IP address the request comes from.

Extract the text content of the page body and print it:

```javascript
const body = await page.waitForSelector('body')
const ip = await body.getProperty('textContent')
console.log(await ip.jsonValue())
```

Put it all together:

```javascript
const puppeteer = require('puppeteer')

async function scrapeData() {
  // free proxy server URL
  const proxyURL = 'http://64.225.8.82:9995'

  // launch a browser instance with the
  // --proxy-server flag enabled
  const browser = await puppeteer.launch({
    args: [`--proxy-server=${proxyURL}`]
  })

  // open a new page in the current browser context
  const page = await browser.newPage()

  // visit the target page
  await page.goto('https://httpbin.org/ip')

  // extract the IP the request comes from
  // and print it
  const body = await page.waitForSelector('body')
  const ip = await body.getProperty('textContent')
  console.log(await ip.jsonValue())

  await browser.close()
}

scrapeData()
```

Here's what this script will print:

```
{ "origin": "64.225.8.82" }
```

That's the IP of the proxy server, proving that Puppeteer visits the page through the specified proxy, as desired. 🎉
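To run this check automatically instead of by eye, you can compare the origin field with the proxy host. This is a minimal sketch: usesProxy() is a hypothetical helper, and it assumes the proxy exits from the same IP it listens on, which isn't true for every provider (many rotating services send traffic out from a different address).

```javascript
// hypothetical helper: check whether the IP reported by httpbin.org/ip
// matches the host of the proxy you configured
function usesProxy(bodyText, proxyURL) {
  // httpbin.org/ip answers with JSON such as { "origin": "64.225.8.82" }
  const { origin } = JSON.parse(bodyText)
  const proxyHost = new URL(proxyURL).hostname
  // origin may hold several comma-separated IPs when the request
  // crosses more than one proxy, so compare against each of them
  return origin.split(',').map((ip) => ip.trim()).includes(proxyHost)
}

const body = '{ "origin": "64.225.8.82" }'
console.log(usesProxy(body, 'http://64.225.8.82:9995')) // true
```

In the Puppeteer script, you would pass the string returned by ip.jsonValue() as bodyText.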

Free proxies are unreliable and short-lived, so the one used in the snippet above is unlikely to work. Don't worry; we'll explore a better alternative later in the article.

Fantastic! You now know the basics of using a Node.js Puppeteer proxy. Let's dive into more advanced concepts!

Puppeteer Proxy Authentication: Username and Password

Commercial and premium proxy services often require authentication, so that only users with valid credentials can connect to their servers.

This is what the URL of an authenticated proxy involving a username and password looks like:

```
<PROXY_PROTOCOL>://<USERNAME>:<PASSWORD>@<PROXY_IP_ADDRESS>:<PROXY_PORT>
```

The problem is that Chrome doesn't support this syntax in the --proxy-server flag: it ignores the username and password. For that reason, Puppeteer provides the authenticate() method.

It accepts a pair of credentials and uses them to perform basic HTTP authentication:

```javascript
await page.authenticate({ username, password })
```

Use it to handle proxy authentication in Puppeteer as follows:

```javascript
const puppeteer = require('puppeteer')

async function scrapeData() {
  // authenticated proxy server info
  const proxyURL = 'http://138.91.159.185:8080'
  const proxyUsername = 'jlkrtcxui'
  const proxyPassword = 'dna8103ns7ahdb390dn'

  // launch a browser instance with the
  // --proxy-server flag enabled
  const browser = await puppeteer.launch({
    args: [`--proxy-server=${proxyURL}`]
  })

  // open a new page in the current browser context
  const page = await browser.newPage()

  // specify the proxy credentials before
  // visiting the page
  await page.authenticate({
    username: proxyUsername,
    password: proxyPassword,
  })

  // visit the target page
  await page.goto('https://httpbin.org/ip')

  // extract the IP the request comes from
  // and print it
  const body = await page.waitForSelector('body')
  const ip = await body.getProperty('textContent')
  console.log(await ip.jsonValue()) // { "origin": "138.91.159.185" }

  await browser.close()
}

scrapeData()
```

If the credentials are wrong, the proxy server responds with 407 Proxy Authentication Required, and the script fails with ERR_HTTP_RESPONSE_CODE_FAILURE. So, always make sure your username and password are valid.
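If your provider gives you a single URL in the authenticated format shown above, a small helper can split it into the two pieces this approach needs: the credential-free value for --proxy-server and the object for page.authenticate(). splitProxyURL() is a hypothetical name, and the sketch relies only on Node's built-in URL class.

```javascript
// hypothetical helper: split an authenticated proxy URL into the value for
// Chrome's --proxy-server flag and the credentials for page.authenticate()
function splitProxyURL(authenticatedURL) {
  const url = new URL(authenticatedURL)
  return {
    // scheme, host, and port only: Chrome ignores credentials in the flag
    server: `${url.protocol}//${url.host}`,
    // the URL class keeps credentials percent-encoded, so decode them
    credentials: {
      username: decodeURIComponent(url.username),
      password: decodeURIComponent(url.password),
    },
  }
}

const { server, credentials } = splitProxyURL(
  'http://jlkrtcxui:dna8103ns7ahdb390dn@138.91.159.185:8080'
)
console.log(server) // http://138.91.159.185:8080
console.log(credentials) // { username: 'jlkrtcxui', password: 'dna8103ns7ahdb390dn' }
```

You would then pass server to the --proxy-server flag and credentials to page.authenticate() before calling page.goto().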

Use a Rotating Proxy in Puppeteer with Node.js

If you make too many requests quickly, the server may mark your script as a threat and ban your IP. You can prevent that with a rotating proxy approach, which involves changing proxies after a specified time or a number of requests.

Your exit IP keeps changing, which makes it much harder for the server to track you. That's what a Puppeteer proxy rotator is all about!
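Here's a minimal sketch of that policy. ProxyRotator is a hypothetical class (not part of Puppeteer or any library) that keeps the same proxy until it has served a given number of requests or a given amount of time has passed, then moves to the next proxy in round-robin order. The thresholds are arbitrary placeholders, and the clock is injectable so the time-based rule can be tested.

```javascript
// hypothetical rotator: hands out the same proxy until it has served
// `maxRequests` requests or `maxAgeMs` milliseconds have passed,
// then switches to the next proxy in the list (round-robin)
class ProxyRotator {
  constructor(proxies, { maxRequests = 10, maxAgeMs = 60_000, now = Date.now } = {}) {
    if (proxies.length === 0) throw new Error('The proxy list is empty')
    this.proxies = proxies
    this.maxRequests = maxRequests
    this.maxAgeMs = maxAgeMs
    this.now = now // injectable clock, handy for testing
    this.index = 0
    this.used = 0
    this.since = now()
  }

  next() {
    const expired = this.now() - this.since >= this.maxAgeMs
    if (this.used >= this.maxRequests || expired) {
      // move to the next proxy and reset both counters
      this.index = (this.index + 1) % this.proxies.length
      this.used = 0
      this.since = this.now()
    }
    this.used += 1
    return this.proxies[this.index]
  }
}

const rotator = new ProxyRotator(['http://10.0.0.1:8080', 'http://10.0.0.2:8080'], { maxRequests: 2 })
console.log([1, 2, 3, 4, 5].map(() => rotator.next()).join(' '))
// http://10.0.0.1:8080 http://10.0.0.1:8080 http://10.0.0.2:8080 http://10.0.0.2:8080 http://10.0.0.1:8080
```

You could call rotator.next() wherever the following examples pick a random proxy.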

Let's learn how to implement proxy rotation and bypass anti-bots like Cloudflare in Puppeteer.

First, you need a bucket of proxies to draw from. In this example, we'll rely on a list of free proxies:

```javascript
const proxies = [
  'http://45.84.227.55:1000',
  'http://66.70.178.214:9300',
  // ...
  'http://104.248.90.212:80'
]
```

Next, create a function that picks a random proxy and uses it to launch a new Chrome instance:

```javascript
const puppeteer = require('puppeteer')

// the proxies to rotate on
const proxies = [
  'http://19.151.94.248:88',
  'http://149.169.197.151:80',
  // ...
  'http://212.76.118.242:97'
]

async function launchBrowserWithProxy() {
  // extract a random proxy from the list of proxies
  const randomProxy = proxies[Math.floor(Math.random() * proxies.length)]

  const browser = await puppeteer.launch({
    args: [`--proxy-server=${randomProxy}`]
  })

  return browser
}
```

Use launchBrowserWithProxy() instead of the launch() method from Puppeteer:

```javascript
// launchBrowserWithProxy() definition...

async function scrapeSpecificPage() {
  const browser = await launchBrowserWithProxy()
  const page = await browser.newPage()

  // visit the target page
  await page.goto('https://example.com/page-to-scrape')

  // scrape data...

  await browser.close()
}

scrapeSpecificPage()
```

Repeat this logic every time you need to scrape a new page.
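Because Math.random() picks independently each time, two consecutive pages can end up on the same proxy. If you want to avoid that, a small closure can remember the last pick. createRandomPicker() is a hypothetical helper, not part of Puppeteer:

```javascript
// hypothetical variant of the random pick in launchBrowserWithProxy():
// never return the proxy used for the previous page
function createRandomPicker(proxies) {
  let last = null
  return function pickProxy() {
    const others = proxies.filter((p) => p !== last)
    // with a single distinct proxy there is nothing else to choose from
    const candidates = others.length > 0 ? others : proxies
    last = candidates[Math.floor(Math.random() * candidates.length)]
    return last
  }
}

const pickProxy = createRandomPicker([
  'http://19.151.94.248:88',
  'http://149.169.197.151:80',
  'http://212.76.118.242:97',
])
console.log(pickProxy(), pickProxy())
```

Inside launchBrowserWithProxy(), you would replace the Math.random() line with a call to pickProxy().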

Way to go! Your Puppeteer rotating proxy script is ready now.

The problem with this solution is that it launches a new browser for every page you scrape, which wastes resources. Fortunately, there's a more efficient alternative. Let's dive into it!

Custom IP per Page with puppeteer-page-proxy

puppeteer-page-proxy extends Puppeteer to allow setting proxies on a per-page or per-request basis.

This Node.js package also supports HTTP/HTTPS and SOCKS proxies and authentication.

It enables scraping several pages in parallel through different proxies in the same browser. That makes it a great fit for building a scalable proxy rotator in Puppeteer.

Switching the proxy server with puppeteer-page-proxy only takes three steps:

1. Install it with the following command:

   ```bash
   npm install puppeteer-page-proxy
   ```

2. Import it:

   ```javascript
   const useProxy = require('puppeteer-page-proxy')
   ```

3. Set the proxy for the current page with the useProxy() function:

   ```javascript
   await useProxy(page, proxy)
   ```

See it in action in the snippet below:

```javascript
const puppeteer = require('puppeteer')
const useProxy = require('puppeteer-page-proxy')

const proxies = [
  'http://19.151.94.248:88',
  'http://149.169.197.151:80',
  // ...
  'http://212.76.118.242:97'
]

async function scrapeData() {
  const browser = await puppeteer.launch()
  const page = await browser.newPage()

  // get a random proxy
  const proxy = proxies[Math.floor(Math.random() * proxies.length)]

  // specify a per-page proxy
  await useProxy(page, proxy)

  await page.goto('https://httpbin.org/ip')

  const body = await page.waitForSelector('body')
  const ip = await body.getProperty('textContent')
  console.log(await ip.jsonValue())

  await browser.close()
}

scrapeData()
```

Since the proxy is picked at random, each run will most likely show a different IP. Repeat this logic before each page.goto() call to implement a Puppeteer proxy rotator.
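To scrape several pages in parallel through different proxies in the same browser, you can first pair each URL with a proxy. assignProxies() is a hypothetical helper that assigns proxies in round-robin order; if there are more URLs than proxies, some proxies get reused.

```javascript
// hypothetical helper: pair each URL with a proxy in round-robin order, so
// pages scraped in parallel in the same browser go out through different IPs
function assignProxies(urls, proxies) {
  if (proxies.length === 0) throw new Error('The proxy list is empty')
  // the i-th URL gets the (i mod N)-th proxy
  return urls.map((url, i) => ({ url, proxy: proxies[i % proxies.length] }))
}

const plan = assignProxies(
  ['https://httpbin.org/ip', 'https://httpbin.org/headers', 'https://httpbin.org/user-agent'],
  ['http://19.151.94.248:88', 'http://149.169.197.151:80']
)
for (const { url, proxy } of plan) console.log(`${url} -> ${proxy}`)
```

Each { url, proxy } pair can then drive its own page: open it with browser.newPage(), call useProxy(page, proxy), and visit url, running all pages concurrently with Promise.all().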

To use an authenticated proxy, specify it in the following format:

```javascript
const proxy = 'protocol://username:password@host:port'
```

Here's an example:

```javascript
const proxy = 'http://jlkrtcxui:dna8103ns7ahdb390dn@138.91.159.185:8080'
```
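If a username or password contains characters such as @ or :, concatenating them into the string breaks the URL. The sketch below percent-encodes them first. buildProxyURL() is a hypothetical helper, and it assumes puppeteer-page-proxy decodes percent-encoded credentials the way standard URL parsers do.

```javascript
// hypothetical helper: build the 'protocol://username:password@host:port'
// string for useProxy(), percent-encoding the credentials so characters
// such as '@' or ':' in a password don't break the URL
function buildProxyURL({ protocol = 'http', username, password, host, port }) {
  const user = encodeURIComponent(username)
  const pass = encodeURIComponent(password)
  return `${protocol}://${user}:${pass}@${host}:${port}`
}

console.log(buildProxyURL({
  username: 'jlkrtcxui',
  password: 'dna8103ns7ahdb390dn',
  host: '138.91.159.185',
  port: 8080,
}))
// http://jlkrtcxui:dna8103ns7ahdb390dn@138.91.159.185:8080
```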

To route every request the page makes through a proxy, including its AJAX calls, enable request interception and set a proxy per request:

```javascript
await page.setRequestInterception(true)

page.on('request', async (request) => {
  // pick a random proxy for each intercepted request
  const proxy = proxies[Math.floor(Math.random() * proxies.length)]
  await useProxy(request, proxy)
})
```

Well done! Now you know an alternative approach to accomplishing proxy rotation in Puppeteer!

What Proxy Is Best for Puppeteer

Time to test the rotating proxy built earlier against a real target. Suppose you want to take a screenshot of the reviews on G2's Microsoft Teams page: https://www.g2.com/products/microsoft-teams/reviews

You can use this script:

```javascript
const puppeteer = require('puppeteer')
const useProxy = require('puppeteer-page-proxy')

const proxies = [
  'http://19.151.94.248:88',
  'http://149.169.197.151:80',
  // ...
  'http://212.76.118.242:97'
]

async function scrapeData() {
  const browser = await puppeteer.launch()
  const page = await browser.newPage()

  // specify the page viewport to
  // get a screenshot in the desired format
  await page.setViewport({
    width: 1200,
    height: 800,
  })

  const proxy = proxies[Math.floor(Math.random() * proxies.length)]
  await useProxy(page, proxy)

  await page.goto('https://www.g2.com/products/microsoft-teams/reviews')

  // take a screenshot of the reviews on the page
  await page.screenshot({ path: 'reviews.png' })

  await browser.close()
}

scrapeData()
```

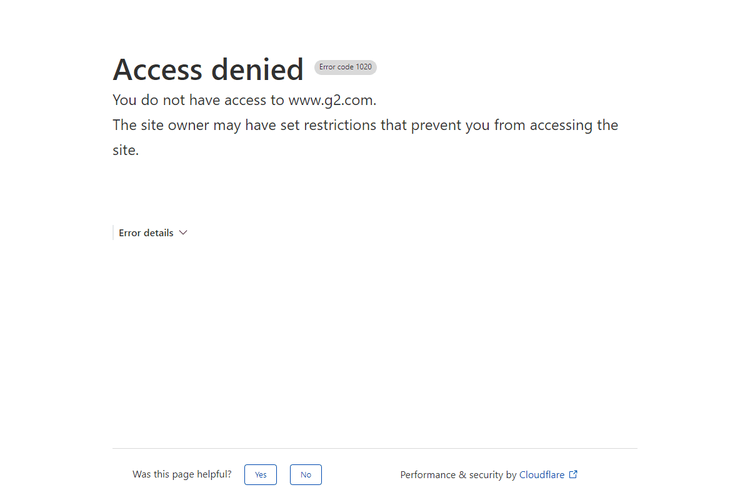

And this is what you'll get:

The destination site detected your script as a bot and responded with a 403 Forbidden error.

That's common when relying on free proxies because they'll get you blocked most of the time. We used them to show the basics, but you should never adopt them in a real-world script. Instead, check out our list of the best proxy types for scraping.

How to overcome that limitation? With a premium proxy!

A headless browser helps you avoid getting blocked when configured for scraping and used with premium proxies. Take a look at our guide to get some tips on how to prevent bot detection in Puppeteer. But even with customizations and the right proxies, you're still likely to get blocked. The reason? Due to the automated nature of Puppeteer, anti-scraping systems can detect and block it.

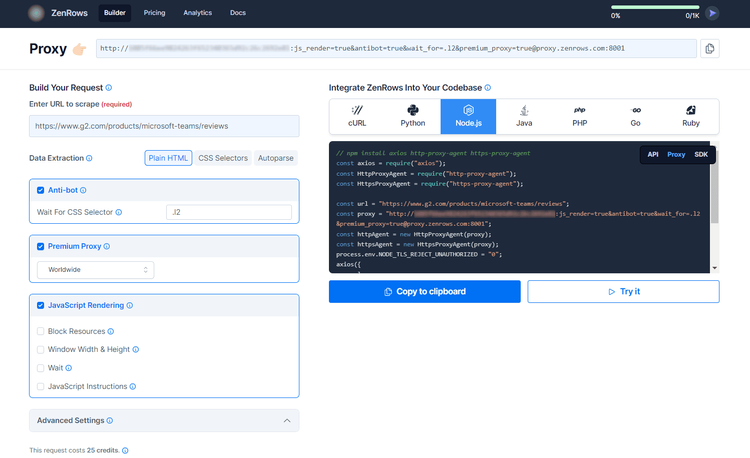

A better alternative to avoid blocks is ZenRows. This scraping API offers similar functions to Puppeteer but with a significantly higher success rate. Its full-featured toolkit can bypass all anti-scraping measures. Sign up now to get your 1,000 free API credits.

After creating your account, go to the Request Builder page. Paste the target URL, check "JavaScript Rendering", "Premium Proxy", and "Anti-bot", and set it to wait for the .l2 CSS selector.

Choose "Node.js" as a language, select the "Proxy" mode on the right, and finally, click the "Copy to clipboard" button.

You'll get this code:

```javascript
const axios = require("axios");
// recent versions of these packages expose the agent classes as named exports
const { HttpProxyAgent } = require("http-proxy-agent");
const { HttpsProxyAgent } = require("https-proxy-agent");

const url = "https://www.g2.com/products/microsoft-teams/reviews";
const proxy = "http://<YOUR_ZENROWS_API_KEY>:js_render=true&antibot=true&wait_for=.l2&premium_proxy=true@proxy.zenrows.com:8001";
const httpAgent = new HttpProxyAgent(proxy);
const httpsAgent = new HttpsProxyAgent(proxy);

// the generated snippet turns off TLS certificate checks for the whole process
process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0";

axios({
  url,
  httpAgent,
  httpsAgent,
  method: 'GET',
})
  .then(response => console.log(response.data))
  .catch(error => console.log(error));
```

Install the new dependencies:

```bash
npm install axios http-proxy-agent https-proxy-agent
```

Run the script, and you'll get access to the data you want with no headaches. Bye, bye, error 403.
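If you want to tweak the options without editing the proxy string by hand, you can assemble it from an object. buildZenRowsProxy() is a hypothetical helper that reproduces the format of the generated snippet; the parameter names come from that snippet, and it assumes ZenRows accepts the URL-encoded values URLSearchParams produces (selectors containing spaces or # would be encoded).

```javascript
// hypothetical helper: assemble the ZenRows proxy string used above
// from an API key and an object of options
function buildZenRowsProxy(apiKey, params) {
  // URLSearchParams joins the options with '&', as in the generated code
  const options = new URLSearchParams(params).toString()
  return `http://${apiKey}:${options}@proxy.zenrows.com:8001`
}

console.log(buildZenRowsProxy('<YOUR_ZENROWS_API_KEY>', {
  js_render: 'true',
  antibot: 'true',
  wait_for: '.l2',
  premium_proxy: 'true',
}))
// http://<YOUR_ZENROWS_API_KEY>:js_render=true&antibot=true&wait_for=.l2&premium_proxy=true@proxy.zenrows.com:8001
```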

Incredible! Now you have a proxy scraping solution with Puppeteer's capabilities, but much more effective!

Conclusion

This step-by-step tutorial explained how to configure a proxy in Puppeteer. You began with the basics and have become a Puppeteer Node.js proxy ninja!

Now, you know:

- What a Puppeteer proxy is.

- The basics of using a proxy in Puppeteer.

- How to deal with an authenticated proxy in Puppeteer.

- How to build a rotating proxy and why this mechanism doesn't work with free proxies.

Using proxies in Puppeteer helps avoid IP blocks. However, remember that some anti-scraping technologies will still be able to block you. Bypass them all with ZenRows, a scraping tool with the best rotating residential proxies on the market and advanced anti-bot bypass features. To top it off, all you need to make is a single API call.
