Has your Selenium web scraper ever been blocked? You're not alone! Websites use Selenium's automation properties to detect and block it. But you can mask your bot with the Selenium Stealth plugin.

In this tutorial, you'll learn how to web scrape with Selenium in Python without getting blocked. We'll show you how to integrate Selenium Stealth and then see some alternatives.

Ready? Let's dive in!

What Is Selenium Stealth

Developed initially to test website behavior, Selenium has quickly become a popular web scraping tool. It's instrumental when crawling dynamic websites as it renders JavaScript like an actual browser and enables browser-like actions such as clicking and filling forms.

Selenium is versatile and capable of automating different browsers, such as Chrome, Firefox, and Safari, through WebDriver middleware. However, anti-bot measures can easily detect it due to built-in automation properties and command line flags.
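To make those automation properties more concrete, here is a minimal sketch of the kind of check a detection script can run. The helper name automation_signals is hypothetical, and it only assumes a driver object that exposes Selenium's execute_script method. Real anti-bot systems combine many more signals than these two.

```python
def automation_signals(driver):
    """Return a few browser properties that anti-bot scripts commonly inspect.

    `driver` is assumed to be any object with Selenium's execute_script method.
    """
    # navigator.webdriver is true in a browser controlled through WebDriver
    webdriver_flag = driver.execute_script("return navigator.webdriver")
    # headless Chrome has historically advertised itself in the User Agent
    user_agent = driver.execute_script("return navigator.userAgent")
    return {
        "webdriver": bool(webdriver_flag),
        "headless_user_agent": "HeadlessChrome" in (user_agent or ""),
    }
```

Calling automation_signals(driver) before and after applying a stealth technique is a quick way to see which of these leaks it actually closes.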

That's where the Selenium Stealth plugin plays a key role. By masking detection leaks, it enables you to bypass anti-bot detection.

Prerequisites

Before we dive into scraping using Selenium Stealth in Python, let's see how base Selenium gets blocked. As a target, we'll use OpenSea, a website with anti-bot protection.

To follow along in this tutorial, ensure you have Python installed. Then, install Selenium and Webdriver Manager, which the scripts below import to download ChromeDriver, using the following command:

pip install selenium webdriver-manager

Selenium WebDriver is a web automation tool that lets you control web browsers. It supports most major browsers, but we'll use Chrome in this tutorial because it's the most widely used one.

Previously, it was necessary to install and import WebDriver separately, but this is no longer required. Since Selenium 4, WebDriver is included by default. If you're using an older version of Selenium, update it to access the latest features and capabilities. To check your current version, use the command pip show selenium, and install the most recent one with pip install --upgrade selenium.
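If you'd rather check the version from Python than from the terminal, the following is a small sketch that uses only the standard library (Python 3.8 or later). The helper name installed_major_version is made up for this example.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_major_version(package="selenium"):
    """Return the installed major version of a package, or None if it is missing."""
    try:
        return int(version(package).split(".")[0])
    except PackageNotFoundError:
        return None


major = installed_major_version()
if major is None:
    print("Selenium is not installed: run pip install selenium")
elif major < 4:
    print("Selenium is outdated: run pip install --upgrade selenium")
else:
    print(f"Selenium {major}.x is installed")
```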

Create a Python file (for example, selenium_scraper.py) in your favorite code editor, then import WebDriver, Service, and ChromeDriver Manager.

from selenium import webdriver

from selenium.webdriver.chrome.service import Service as ChromeService

from webdriver_manager.chrome import ChromeDriverManager

Next, create a new Service instance, specifying the path to the ChromeDriver executable using executable_path. Then, define the Chrome options object to use the headless option.

# create a new Service instance and specify path to Chromedriver executable

service = ChromeService(executable_path=ChromeDriverManager().install())

# create ChromeOptions object

options = webdriver.ChromeOptions()

options.add_argument('--headless')

Initialize a Chrome WebDriver instance, passing in the Service and options objects as arguments.

# create a new Chrome webdriver instance, passing in the Service and options objects

driver = webdriver.Chrome(service=service, options=options)

Lastly, navigate to the target website and take a screenshot.

# navigate to the webpage

driver.get('https://opensea.io/')

# Wait for page to load

while driver.execute_script("return document.readyState") != "complete":

    pass

# take a screenshot of the webpage

driver.save_screenshot('screenshot.png')

# close the webdriver

driver.quit()
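The while loop above spins without pausing and never gives up if the page stalls. As a hedged alternative, here is a minimal sketch of a polling helper with a timeout. The name wait_until_loaded and its default values are assumptions for illustration, and it only relies on the driver's execute_script method.

```python
import time


def wait_until_loaded(driver, timeout=30.0, poll_interval=0.25):
    """Poll document.readyState until it is 'complete' or the timeout expires.

    Returns True if the page finished loading in time, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if driver.execute_script("return document.readyState") == "complete":
            return True
        # sleep between checks instead of busy-waiting
        time.sleep(poll_interval)
    return False
```

You could then write `if not wait_until_loaded(driver): print("page did not finish loading")` before taking the screenshot.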

Putting everything together, your complete code should look like this:

from selenium import webdriver

from selenium.webdriver.chrome.service import Service as ChromeService

from webdriver_manager.chrome import ChromeDriverManager

# create a new Service instance and specify path to Chromedriver executable

service = ChromeService(executable_path=ChromeDriverManager().install())

# create ChromeOptions object

options = webdriver.ChromeOptions()

options.add_argument('--headless')

# create a new Chrome webdriver instance, passing in the Service and options objects

driver = webdriver.Chrome(service=service, options=options)

# navigate to the webpage

driver.get('https://opensea.io/')

# take a screenshot of the webpage

driver.save_screenshot('opensea-blocked.png')

# close the webdriver

driver.quit()

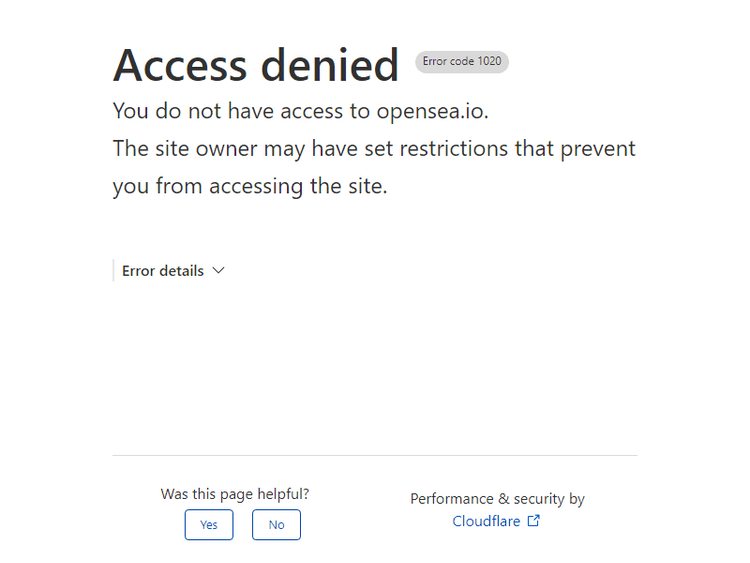

And this is the screenshot we took:

The above result shows base Selenium got blocked by OpenSea's anti-bot challenges.

Now, let's try scraping the same website using the Stealth plugin.

How to Web Scrape with Selenium Stealth in Python

Here are the steps we're taking to scrape using Selenium Stealth in Python:

Step 1: Install and Import Selenium Stealth

Install Selenium Stealth using the following command:

pip install selenium-stealth

Now, create a new Python file. Import stealth from Selenium Stealth alongside Service and the WebDriver module, then create a new Service instance like in our previous script.

from selenium import webdriver

from selenium.webdriver.chrome.service import Service as ChromeService

from webdriver_manager.chrome import ChromeDriverManager

from selenium_stealth import stealth

# create a new Service instance and specify path to Chromedriver executable

service = ChromeService(executable_path=ChromeDriverManager().install())

Step 2: Change Browser Properties

You can change default browser properties such as navigator.webdriver, automation-controlled features, viewport, etc.

To do that, create a Chrome options object and set the different ChromeDriver options you want, including headless mode. For example:

# create a ChromeOptions object

options = webdriver.ChromeOptions()

#run in headless mode

options.add_argument("--headless")

# disable the AutomationControlled feature of Blink rendering engine

options.add_argument('--disable-blink-features=AutomationControlled')

# disable pop-up blocking

options.add_argument('--disable-popup-blocking')

# start the browser window in maximized mode

options.add_argument('--start-maximized')

# disable extensions

options.add_argument('--disable-extensions')

# disable sandbox mode

options.add_argument('--no-sandbox')

# disable shared memory usage

options.add_argument('--disable-dev-shm-usage')
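As the list of flags grows, it can help to keep them in one place so you can toggle headless mode while debugging. The sketch below assumes only that the options object has an add_argument method, as ChromeOptions does. The names STEALTH_ARGUMENTS and apply_arguments are hypothetical.

```python
# the same Chrome flags used in this step, kept in one list
STEALTH_ARGUMENTS = [
    "--disable-blink-features=AutomationControlled",
    "--disable-popup-blocking",
    "--start-maximized",
    "--disable-extensions",
    "--no-sandbox",
    "--disable-dev-shm-usage",
]


def apply_arguments(options, headless=True):
    """Add the flags to an options object and return the list that was applied."""
    arguments = list(STEALTH_ARGUMENTS)
    if headless:
        arguments.insert(0, "--headless")
    for argument in arguments:
        options.add_argument(argument)
    return arguments
```

With Selenium installed, `apply_arguments(webdriver.ChromeOptions(), headless=False)` would configure a visible browser with the same flags.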

Additionally, set the navigator.webdriver property to undefined. For that, create a new driver instance and call the driver.execute_script() function as in the code below.

# create a new driver instance

driver = webdriver.Chrome(service=service, options=options)

# Change the property value of the navigator for webdriver to undefined

driver.execute_script("Object.defineProperty(navigator, 'webdriver', {get: () => undefined})")

Well done! The properties are masked now.
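One caveat: execute_script runs in the page that is currently loaded, so the override is lost when driver.get() opens the next page. A minimal sketch of one way around that, assuming a Chromium-based driver that exposes execute_cdp_cmd, is to register the same script through the Chrome DevTools Protocol command Page.addScriptToEvaluateOnNewDocument, which evaluates it in every new document before the page's own scripts run. The helper name hide_webdriver_flag is made up for illustration.

```python
HIDE_WEBDRIVER_JS = (
    "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"
)


def hide_webdriver_flag(driver):
    """Register the override so it runs at the start of every new document.

    `driver` is assumed to be a Chromium-based WebDriver with execute_cdp_cmd.
    """
    return driver.execute_cdp_cmd(
        "Page.addScriptToEvaluateOnNewDocument",
        {"source": HIDE_WEBDRIVER_JS},
    )
```

Call hide_webdriver_flag(driver) once, right after creating the driver and before the first driver.get().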

Step 3: Rotate User Agents

Alongside Selenium Stealth, you can rotate User Agents so your scraper doesn't always identify itself the same way. To do that, first import random, and then define a list of User Agent strings.

# ...

import random

user_agents = [

# Add your list of user agents here

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

]

Select a random User Agent from your list and add it to the browser options using options.add_argument. Chrome reads its options at startup, so they only apply to a driver created after this point.

user_agent = random.choice(user_agents)

options.add_argument(f'user-agent={user_agent}')

You can also set the User Agent on a driver that's already running, using execute_cdp_cmd.

driver.execute_cdp_cmd('Network.setUserAgentOverride', {"userAgent": user_agent})

Done! Each run of the script will now send requests with a randomly chosen UA.
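The selection logic can be wrapped in two small helpers, sketched below under the assumption that you pass in your own list of User Agent strings. The names pick_user_agent and user_agent_argument are hypothetical, and the optional rng parameter is only there so the choice can be made reproducible in tests.

```python
import random


def pick_user_agent(user_agents, rng=None):
    """Return one User Agent string chosen at random from the list."""
    if not user_agents:
        raise ValueError("the user agent list is empty")
    return (rng or random).choice(user_agents)


def user_agent_argument(user_agent):
    """Format a User Agent as the Chrome argument used in this tutorial."""
    return f"user-agent={user_agent}"


pool = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
]
print(user_agent_argument(pick_user_agent(pool)))
```

Pass the resulting string to options.add_argument before you create the driver.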

Step 4: Scrape with Stealth

Now that we've fortified our Python scraper with Selenium Stealth, it's time to see if we can scrape the page that blocked us before.

First, initialize the Chrome driver with the specified options and the Service instance.

driver = webdriver.Chrome(service=service, options=options)

Then, enable the Stealth mode by applying the stealth function, as in the code below.

stealth(driver,

languages=["en-US", "en"],

vendor="Google Inc.",

platform="Win32",

webgl_vendor="Intel Inc.",

renderer="Intel Iris OpenGL Engine",

fix_hairline=True,

)

Lastly, navigate to the target website, wait for the page to load, and take a screenshot.

#navigate to opensea

driver.get('https://opensea.io/')

# Wait for page to load

while driver.execute_script("return document.readyState") != "complete":

    pass

# Take screenshot

driver.save_screenshot("opensea.png")

# Close browser

driver.quit()

Putting all of it together, here's our complete code:

from selenium import webdriver

from selenium.webdriver.chrome.service import Service as ChromeService

from webdriver_manager.chrome import ChromeDriverManager

from selenium_stealth import stealth

import random

# create a new Service instance and specify path to Chromedriver executable

service = ChromeService(executable_path=ChromeDriverManager().install())

# Step 2: Change browser properties

# create a ChromeOptions object

options = webdriver.ChromeOptions()

#run in headless mode

options.add_argument("--headless")

# disable the AutomationControlled feature of Blink rendering engine

options.add_argument('--disable-blink-features=AutomationControlled')

# disable pop-up blocking

options.add_argument('--disable-popup-blocking')

# start the browser window in maximized mode

options.add_argument('--start-maximized')

# disable extensions

options.add_argument('--disable-extensions')

# disable sandbox mode

options.add_argument('--no-sandbox')

# disable shared memory usage

options.add_argument('--disable-dev-shm-usage')

# Set navigator.webdriver to undefined

# create a driver instance

driver = webdriver.Chrome(service=service, options=options)

# Change the property value of the navigator for webdriver to undefined

driver.execute_script("Object.defineProperty(navigator, 'webdriver', {get: () => undefined})")

# Step 3: Rotate user agents

user_agents = [

# Add your list of user agents here

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

]

# select random user agent

user_agent = random.choice(user_agents)

# the driver is already running, so apply the selected user agent through CDP

driver.execute_cdp_cmd('Network.setUserAgentOverride', {"userAgent": user_agent})

# Step 4: Scrape using Stealth

#enable stealth mode

stealth(driver,

languages=["en-US", "en"],

vendor="Google Inc.",

platform="Win32",

webgl_vendor="Intel Inc.",

renderer="Intel Iris OpenGL Engine",

fix_hairline=True,

)

# navigate to opensea

driver.get("https://opensea.io/")

# Wait for page to load

while driver.execute_script("return document.readyState") != "complete":

    pass

# Take screenshot

driver.save_screenshot("opensea.png")

# Close browser

driver.quit()

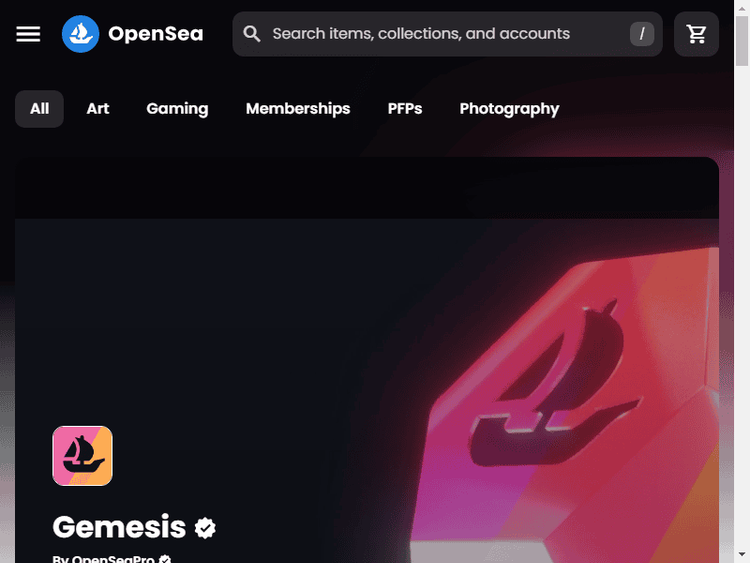

And this is the screenshot we'll get:

Congrats, you've successfully avoided Selenium detection!

Limitations of Selenium Stealth and Alternatives

While Selenium Stealth is enough to get past the protection on sites like OpenSea, it fails against more advanced anti-bot detection techniques.

Let's try our Selenium Stealth script on NowSecure, a webpage that tests your requests with anti-bot challenges and displays a "you passed" message if you're successful.

Replacing our previous target URL with nowsecure.nl, we have the following code:

from selenium import webdriver

from selenium.webdriver.chrome.service import Service as ChromeService

from webdriver_manager.chrome import ChromeDriverManager

from selenium_stealth import stealth

import random

# create a new Service instance and specify path to Chromedriver executable

service = ChromeService(executable_path=ChromeDriverManager().install())

# Step 2: Change browser properties

# create a ChromeOptions object

options = webdriver.ChromeOptions()

#run in headless mode

options.add_argument("--headless")

# disable the AutomationControlled feature of Blink rendering engine

options.add_argument('--disable-blink-features=AutomationControlled')

# disable pop-up blocking

options.add_argument('--disable-popup-blocking')

# start the browser window in maximized mode

options.add_argument('--start-maximized')

# disable extensions

options.add_argument('--disable-extensions')

# disable sandbox mode

options.add_argument('--no-sandbox')

# disable shared memory usage

options.add_argument('--disable-dev-shm-usage')

# Set navigator.webdriver to undefined

# create a driver instance

driver = webdriver.Chrome(service=service, options=options)

# Change the property value of the navigator for webdriver to undefined

driver.execute_script("Object.defineProperty(navigator, 'webdriver', {get: () => undefined})")

# Step 3: Rotate user agents

user_agents = [

# Add your list of user agents here

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

]

# select random user agent

user_agent = random.choice(user_agents)

# pass in selected user agent as an argument

options.add_argument(f'user-agent={user_agent}')

# set user agent using execute_cdp_cmd

driver.execute_cdp_cmd('Network.setUserAgentOverride', {"userAgent": user_agent})

# Step 4: Scrape using Stealth

#enable stealth mode

stealth(driver,

languages=["en-US", "en"],

vendor="Google Inc.",

platform="Win32",

webgl_vendor="Intel Inc.",

renderer="Intel Iris OpenGL Engine",

fix_hairline=True,

)

# navigate to nowsecure

driver.get("https://nowsecure.nl/")

# Wait for page to load

while driver.execute_script("return document.readyState") != "complete":

    pass

# Take screenshot

driver.save_screenshot("nowsecure.png")

# Close browser

driver.quit()

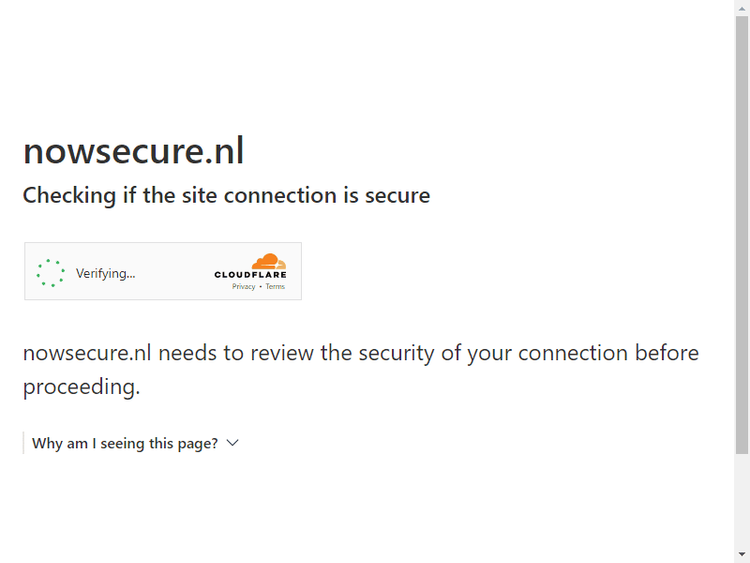

And this is the screenshot we get:

The screenshot above shows that Selenium Stealth isn't enough to get past advanced Cloudflare protection.

You can check out how to bypass Cloudflare with Selenium for a detailed explanation of how to get around it.

An alternative Python library that could help you avoid detection with Selenium is Undetected ChromeDriver. It's better maintained than Selenium Stealth but has similar limitations, as it also fails on many websites.

That raises the question: What works?

Luckily, the ZenRows library offers the ultimate solution for bypassing anti-bot detection, regardless of the level of complexity. Let's see it in action against NowSecure, where Selenium Stealth failed.

First, sign up to get your free API key. Then, install ZenRows by entering the following command in your terminal:

pip install zenrows

Next, import the ZenRows client and create a new instance using your API key.

from zenrows import ZenRowsClient

# create a new ZenRowsClient instance

client = ZenRowsClient("APIKey")

Specify your target URL and set the necessary parameters. To bypass anti-bot measures, you must set the antibot, premium_proxy and js_render parameters to true.
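As a rough sketch of what those parameters look like on the wire, the snippet below builds the request URL by hand with the standard library instead of the client. The endpoint constant and the apikey and url parameter names are assumptions based on ZenRows' public HTTP API and should be checked against its documentation. The three flags are the ones named above, and build_zenrows_url is a hypothetical helper.

```python
from urllib.parse import urlencode

# assumed API endpoint, verify it against the ZenRows documentation
ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/"


def build_zenrows_url(api_key, target_url):
    """Build a request URL with the three anti-bot parameters switched on."""
    params = {
        "apikey": api_key,
        "url": target_url,
        "js_render": "true",
        "antibot": "true",
        "premium_proxy": "true",
    }
    return f"{ZENROWS_ENDPOINT}?{urlencode(params)}"


print(build_zenrows_url("APIKey", "https://nowsecure.nl/"))
```

Fetching that URL with any HTTP client should then return the rendered page, provided "APIKey" is replaced with a valid key.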

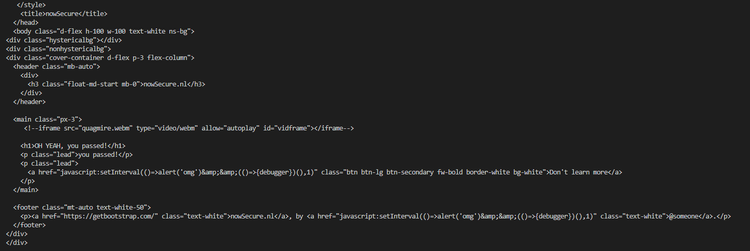

Here's the result:

Bingo! How does it feel to know you can bypass everything? Great, right?

Conclusion

Selenium is a popular web scraping and automation tool, but its default properties make it easy for websites to detect and deny you access. As a quick fix, Selenium Stealth masks such properties' presence to allow you to fly under the radar.

However, the plugin doesn't work against advanced detection techniques. Fortunately, ZenRows, a constantly evolving web scraping solution, offers convenient ways to bypass even the most complicated anti-bot measures. Sign up for your free API key to get started.

Frequently Asked Questions

Can Selenium Be Traced?

Yes. Websites with anti-bot measures can detect Selenium by analyzing parameters such as navigator properties, User Agents, and mouse movements. That way, they can identify browsers automated with Selenium.
