What's the best Java web scraping library? There are many options, so this review explains what to consider and which tools fit your use case. You'll also see a real example of each one.

Let's explore!

1. ZenRows

ZenRows is an all-in-one library that allows developers to scrape data with a single API request. It bypasses all anti-scraping protections (CAPTCHAs, honeypot traps…) and saves proxy costs for you.

👍 Pros:

- Easy to use: ZenRows' API is simple and intuitive, allowing developers of any skill level to quickly set up a basic integration.

- Well-documented.

- Flexible and scalable: You get 1,000 free API credits. After that, you'll just pay for what you need and for successful requests only.

- Reliable and secure: ZenRows ensures 99.9% uptime and has live chat support.

👎 Cons:

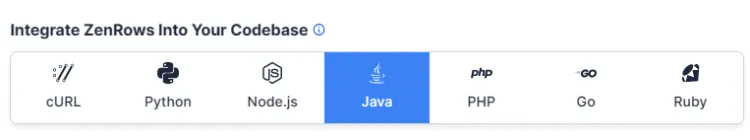

- Data parsing: You'll need a complementary library, like Jsoup, to perform HTML parsing. Now, let's see how it works. Sign up to get to the Request Builder page. Then, select Java from the dashboard.

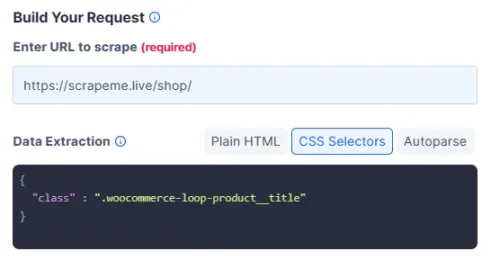

Next, enter a target URL. For this example, we'll use ScrapeMe. Also, select CSS Selector and add the product class name, which is .woocommerce-loop-product__title.

That will lead to the code you see below on the right:

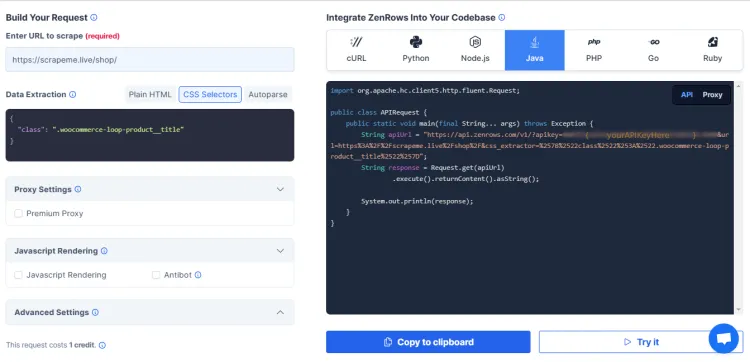

Almost there! All that's left to do is paste this code into your file:

```java
import org.apache.hc.client5.http.fluent.Request;

public class APIRequest {
    public static void main(final String... args) throws Exception {
        String apiUrl = "https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fscrapeme.live%2Fshop%2F&js_render=true&antibot=true&premium_proxy=true&css_extractor=%257B%2522class%2522%253A%2522.woocommerce-loop-product__title%2522%257D";

        String response = Request.get(apiUrl)
                .execute().returnContent().asString();

        System.out.println(response);
    }
}
```

The result will be the list of product names matched by the .woocommerce-loop-product__title selector.
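The hand-encoded apiUrl above is hard to read and edit. The minimal sketch below is our own helper and is not part of ZenRows or any SDK. It assembles the same kind of query string with the JDK's URLEncoder, which encodes each value once. The parameter names apikey, url, js_render, antibot, and premium_proxy come from the generated snippet, and ZenRowsUrlBuilder is a hypothetical name. The sketch leaves out css_extractor because the generated URL encodes that value twice (%257B is an encoded %7B), so check the ZenRows docs for how the API expects it.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

class ZenRowsUrlBuilder {
    // Base endpoint taken from the generated snippet
    static final String ENDPOINT = "https://api.zenrows.com/v1/";

    // Joins the parameters as key=value pairs, URL-encoding each value exactly once
    static String build(Map<String, String> params) {
        String query = params.entrySet().stream()
                .map(e -> e.getKey() + "=" + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
        return ENDPOINT + "?" + query;
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>(); // keeps insertion order
        params.put("apikey", "<YOUR_ZENROWS_API_KEY>");
        params.put("url", "https://scrapeme.live/shop/");
        params.put("js_render", "true");
        params.put("antibot", "true");
        params.put("premium_proxy", "true");
        // Only builds and prints the URL; no request is sent here
        System.out.println(build(params));
    }
}
```

You can pass the resulting string to Request.get(...) in the same way as apiUrl in the original script.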

2. Selenium

Selenium is a popular library for extracting data from dynamic web pages (those with elements generated by JavaScript) and for interacting with them like a human would, for example by clicking buttons or scrolling down. With a few lines of code, you can create a script that controls the browser and extracts any element you need from the page.

👍 Pros:

- Open-source and free to use.

- Widely supported with good documentation available.

- It allows dynamic scraping.

- Multiple languages are supported, including Java, C#, Python, Ruby, and JavaScript.

👎 Cons:

- Selenium is a maintenance-heavy framework that's difficult to scale up.

Let's test it! Here's how you can use Selenium to scrape data from a web page:

```java
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.chrome.ChromeDriver;

public class ExampleTitleScraper {
    public static void main(String[] args) {
        // Set up the web driver
        WebDriver driver = new ChromeDriver();
        try {
            // Navigate to the website
            driver.get("https://scrapeme.live/shop/");

            // Read the title of the page
            String title = driver.getTitle();
            System.out.println("Website title: " + title);
        } finally {
            // quit() ends the session and shuts down the driver; close() would only close the current window
            driver.quit();
        }
    }
}
```

The script's output should be "Website title: Products – ScrapeMe". Well done! You now know how to extract valuable information using Selenium.
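On dynamic pages, JavaScript builds the content, so an element may not exist yet when driver.get() returns. Selenium handles this with explicit waits (WebDriverWait). The sketch below shows only the underlying polling idea and uses just the JDK, so it runs without a browser. PollingWait.until is a hypothetical helper, and the lambda in main simulates an element that appears on the third check.

```java
import java.time.Duration;
import java.util.function.Supplier;

class PollingWait {
    // Calls probe until it returns a non-null value, sleeping for interval between attempts.
    // Throws IllegalStateException if timeout elapses first.
    static <T> T until(Supplier<T> probe, Duration timeout, Duration interval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            T result = probe.get();
            if (result != null) {
                return result;
            }
            if (System.nanoTime() >= deadline) {
                throw new IllegalStateException("Condition not met within " + timeout);
            }
            try {
                Thread.sleep(interval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt for callers
                throw new IllegalStateException("Interrupted while waiting", e);
            }
        }
    }

    public static void main(String[] args) {
        int[] checks = {0};
        // Simulated element lookup that only "finds" the title on the third attempt
        String title = until(() -> ++checks[0] >= 3 ? "Products - ScrapeMe" : null,
                Duration.ofSeconds(2), Duration.ofMillis(100));
        System.out.println(title + " (found after " + checks[0] + " checks)");
    }
}
```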

3. Gecco

Gecco is another efficient open-source option for those who want to extract web data. The framework is built on top of the Apache HttpClient library and it uses annotations to define request procedures. That helps developers build both simple and complex crawlers without much effort.

Once the Gecco class is set up, you can easily crawl multiple domains in one go, inject customized headers for authentication requirements, and even use object models for the asynchronous processing of HTML pages.
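At the HTTP level, injecting a custom header for authentication means attaching it to every request. The sketch below does this with the JDK's java.net.http.HttpRequest. It is not Gecco's own configuration. The bearer-token scheme and the token value are assumptions, and the code only builds the request without sending anything.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

class AuthHeaderRequest {
    // Builds a GET request that carries an Authorization header
    static HttpRequest withBearerToken(String url, String token) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("Authorization", "Bearer " + token) // assumed token scheme
                .timeout(Duration.ofSeconds(10))
                .GET()
                .build();
    }

    public static void main(String[] args) {
        // Hypothetical token; the request is only built and inspected, never sent
        HttpRequest request = withBearerToken("https://scrapeme.live/shop/", "my-secret-token");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(request.headers().map());
    }
}
```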

👍 Pros:

- Able to crawl hundreds of pages per second due to its efficient data processing system and multithreading technology.

- No subscription fees since this is an open-source Java web crawler library.

👎 Cons:

- Limited customization options compared to other libraries, like Scrapy or Apache Nutch.

- It doesn't support dynamic scraping.

Let's do some mini-scraping with Gecco! The script below extracts the h1 of Angular's homepage.

```java
// The package must match the one passed to GeccoEngine.classpath() in main()
package com.geccosample.gecco;

import com.geccocrawler.gecco.GeccoEngine;
import com.geccocrawler.gecco.annotation.*;
import com.geccocrawler.gecco.spider.HtmlBean;

// Matches pages at angular.io and sends the extracted bean to the console pipeline
@Gecco(matchUrl = "https://angular.io", pipelines = "consolePipeline")
public class Main implements HtmlBean {

    @Text
    @HtmlField(cssPath = "h1") // CSS selector of the element to extract
    private String title;

    public String getTitle() {
        System.out.println("The title is: " + title);
        return title;
    }

    public void setTitle(String title) {
        this.title = title;
    }

    public static void main(String[] args) {
        GeccoEngine.create() // Create an instance of GeccoEngine
                .classpath("com.geccosample.gecco") // Package where the HtmlBeans are located
                .start("https://angular.io") // Start URL for the crawl
                .thread(1) // Number of crawler threads
                .run(); // Run the engine and start crawling
    }
}
```

And this is our output:

The title is: Deliver web apps with confidence.
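The .thread(1) call above sets how many crawler threads the engine uses. The JDK-only sketch below illustrates why more threads speed up crawling. It is not Gecco's internal code: it fetches several URLs on a fixed thread pool. The fakeFetch function is a hypothetical stand-in for a real download-and-parse step, and the page URLs are placeholders.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

class ParallelCrawler {
    // Runs fetch for every URL on a fixed pool of worker threads.
    // invokeAll returns futures in task order, so results line up with urls.
    static List<String> crawl(List<String> urls, Function<String, String> fetch, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Callable<String>> tasks = new ArrayList<>();
            for (String url : urls) {
                tasks.add(() -> fetch.apply(url));
            }
            List<String> results = new ArrayList<>();
            for (Future<String> future : pool.invokeAll(tasks)) {
                results.add(future.get());
            }
            return results;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Crawl interrupted", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("A fetch failed", e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<String> urls = List.of(
                "https://example.com/page/1", "https://example.com/page/2", "https://example.com/page/3");
        // Hypothetical fetch: reports which worker thread handled each URL
        Function<String, String> fakeFetch = url -> Thread.currentThread().getName() + " fetched " + url;
        crawl(urls, fakeFetch, 3).forEach(System.out::println);
    }
}
```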

4. Jsoup

Jsoup is an open-source Java web crawling library designed as an HTML parser to extract and manipulate data from HTML and XML documents. It provides a very convenient API for scraping, using the best of DOM, CSS, and jQuery-like methods. The framework supports manipulating attributes, cleaning HTML tags and even transforming HTML to plain text.

Additionally, Jsoup implements the WHATWG HTML5 specification and parses HTML to the same DOM as modern browsers do.

👍 Pros:

- Jsoup provides an extensive selection of methods that help create powerful scripts, allowing developers to interact with websites in different ways.

- The library is quite small (~800kb), so it can be easily integrated into other projects while adding little overhead to existing resources and files.

👎 Cons:

- Jsoup heavily relies on external third-party libraries like Apache Commons Codecs.

- Performance lag from node traversal during scraping. This issue is more apparent in larger documents with many nodes to traverse.

- It doesn't support dynamic scraping.

Let's see an example of how you could use Jsoup to retrieve the site title from ScrapeMe's shop page:

```java
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;

public class Main {
    public static void main(String[] args) throws Exception {
        // Request the entire page from the URL
        String url = "https://scrapeme.live/shop/";
        Document doc = Jsoup.connect(url).get();

        // Select the site title element with a CSS selector and read its text
        String bannerTitle = doc.select("div.site-title").text();

        // Print the result to the console
        System.out.println("Banner: " + bannerTitle);
    }
}
```

The output is this:

Banner: ScrapeMe

5. Jaunt

Jaunt is a lightweight and fast scraping library that supports HTML and XML parsing. It also includes a headless browser component to crawl static web pages.

It provides easier access to HTML documents and enables developers to access, search, read, and modify the website content using simple methods and syntax similar to jQuery or XPath selectors.

👍 Pros:

- The library is designed to be lightweight and easy to understand, meaning it doesn't require any special skills or advanced coding knowledge.

- It's faster than browser-based crawling tools because it doesn't render pages or execute JavaScript, so it completes certain tasks in fewer steps.

- Jaunt offers extensive documentation, a growing community forum, and FAQs to help you troubleshoot any unforeseen issues.

👎 Cons:

- Unable to scrape dynamic web pages.

- It isn't easily customizable.

Now, let's scrape the title of ScrapeMe's shop page.

```java
import com.jaunt.*;

public class App {
    public static void main(String[] args) {
        try {
            // Create a headless user agent and load the page
            UserAgent userAgent = new UserAgent();
            userAgent.visit("https://scrapeme.live/shop/");

            // Find the first <title> element and read its text
            String title = userAgent.doc.findFirst("<title>").getTextContent();
            System.out.println("Title of the page: " + title);
        } catch (JauntException e) {
            System.err.println(e);
        }
    }
}
```

Our output looks like this:

Title of the page: Products - ScrapeMe

6. Jauntium

Jauntium is an open-source library that lets you automate web browsers. It's a combination of Jaunt and Selenium in an effort to overcome the deficiencies of each.

With its well-maintained and simple API, Jauntium is used to scrape websites of all types and sizes, including dynamic single-page apps. It also offers support for automated page navigation and custom data parsers that facilitate parsing complex data structures.

👍 Pros:

- All functionality of Selenium to automate any modern browser.

- User-friendly (Jaunt-like) architecture.

- It enables your programs to work with tables and forms without relying too much on XPath or CSS selectors.

- Fluent DOM navigation and search chaining.

👎 Cons:

- Slower than Jaunt because it loads the entire page content, including the JavaScript content.

- It's heavily dependent on other libraries like Selenium and Jaunt.

Let's fetch the first book from the Books to Scrape store using Jauntium:

```java
import org.openqa.selenium.chrome.ChromeDriver;
import com.jauntium.Browser;
import com.jauntium.Element;

public class ScrapeExample {
    public static void main(String[] args) throws Exception {
        // Launch Chrome through Selenium and wrap it in a Jauntium Browser
        Browser browser = new Browser(new ChromeDriver());

        // Visit the webpage containing the books
        browser.visit("http://books.toscrape.com/");

        // Find the <ol> that contains all the book elements
        Element bookList = browser.doc.findFirst("<ol class=row>");

        // Locate the element for the first book
        Element firstBook = bookList.findFirst("<article class=product_pod>");

        // Extract the name and price of the first book
        String name = firstBook.findFirst("<h3>").getTextContent();
        String price = firstBook.findFirst("<p class=price_color>").getTextContent();

        System.out.println("The name of the first book is: " + name);
        System.out.println("The price of the first book is: " + price);
    }
}
```

Find the output below:

The name of the first book is: A Light in the ...

The price of the first book is: £51.77

7. WebMagic

WebMagic is a popular Java web scraping library that provides developers with a scalable and fast way to extract structured information. It supports distributed crawling and data processing through pluggable components such as automatic scheduling.

The framework's primary goal is to make web scrapers simple and intuitive. It supports breadth-first and depth-first search algorithms, allowing users to create spiders with varied crawling strategies.
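The difference between the two strategies comes down to the order in which discovered links are crawled. This JDK-only sketch does not use WebMagic's scheduler. It treats the frontier as a FIFO queue for breadth-first crawling and as a LIFO stack for depth-first crawling. The link graph and page paths are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class CrawlOrder {
    // Returns the order in which pages reachable from start are crawled.
    // breadthFirst = true: the frontier is a FIFO queue; false: it is a LIFO stack.
    static List<String> crawl(Map<String, List<String>> links, String start, boolean breadthFirst) {
        Deque<String> frontier = new ArrayDeque<>();
        Set<String> visited = new HashSet<>();
        List<String> order = new ArrayList<>();
        frontier.addLast(start);
        while (!frontier.isEmpty()) {
            String page = breadthFirst ? frontier.pollFirst() : frontier.pollLast();
            if (!visited.add(page)) {
                continue; // already crawled through another link
            }
            order.add(page);
            List<String> outlinks = links.getOrDefault(page, List.of());
            for (int i = 0; i < outlinks.size(); i++) {
                // Push in reverse for depth-first so the first link on the page is crawled first
                String next = outlinks.get(breadthFirst ? i : outlinks.size() - 1 - i);
                if (!visited.contains(next)) {
                    frontier.addLast(next);
                }
            }
        }
        return order;
    }

    public static void main(String[] args) {
        // Hypothetical site: a listing page linking to two pages, each linking to a product
        Map<String, List<String>> links = Map.of(
                "/shop", List.of("/shop/page/1", "/shop/page/2"),
                "/shop/page/1", List.of("/product/a"),
                "/shop/page/2", List.of("/product/b"));
        System.out.println("Breadth-first: " + crawl(links, "/shop", true));
        System.out.println("Depth-first:   " + crawl(links, "/shop", false));
    }
}
```

For this graph, breadth-first visits /shop, /shop/page/1, /shop/page/2, /product/a, /product/b, while depth-first finishes each branch first: /shop, /shop/page/1, /product/a, /shop/page/2, /product/b.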

👍 Pros:

- WebMagic has a straightforward API, making it accessible to professionals of all skill levels.

- Designed to be efficient and fast.

- Able to handle and extract large amounts of data simultaneously from multiple pages.

- This Java scraping library provides built-in support to handle common web scraping tasks like logging in and handling cookies or redirects.
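To see what that built-in support saves you, compare it with the JDK's own HttpClient. By default, it stores no cookies and doesn't follow redirects. This plain-JDK sketch opts into both and does not use WebMagic. ScraperClient is a hypothetical name.

```java
import java.net.CookieManager;
import java.net.CookiePolicy;
import java.net.http.HttpClient;
import java.time.Duration;

class ScraperClient {
    // Builds a client that keeps cookies across requests and follows redirects
    static HttpClient create() {
        CookieManager cookies = new CookieManager();
        cookies.setCookiePolicy(CookiePolicy.ACCEPT_ALL); // keep session cookies, e.g., after a login
        return HttpClient.newBuilder()
                .cookieHandler(cookies)
                .followRedirects(HttpClient.Redirect.NORMAL) // follow redirects, except HTTPS to HTTP
                .connectTimeout(Duration.ofSeconds(10))
                .build();
    }

    public static void main(String[] args) {
        HttpClient client = create();
        System.out.println("Redirect policy: " + client.followRedirects());
        System.out.println("Cookie handler set: " + client.cookieHandler().isPresent());
    }
}
```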

👎 Cons:

- It's not a browser automation tool, meaning it can't interact with web pages the same way a human would.

- The library doesn't execute JavaScript and can only scrape the page's initial HTML.

Here's an example of how to use WebMagic to scrape the footer link of ScrapeMe's shop page:

```java
import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.processor.PageProcessor;

public class Main implements PageProcessor {
    // Retry failed downloads up to 3 times and wait 100 ms between requests
    private final Site site = Site.me().setRetryTimes(3).setSleepTime(100);

    @Override
    public void process(Page page) {
        // Extract the footer link
        String footer = page.getHtml().xpath("//*[@id=\"colophon\"]/div/div[1]/a").get();
        System.out.println("This is a footer information: " + footer);
    }

    @Override
    public Site getSite() {
        return site;
    }

    public static void main(String[] args) {
        Spider spider = Spider.create(new Main()).addUrl("https://scrapeme.live/shop/");
        spider.run();
    }
}
```

Remark: The JAR file can be found on the Maven Central Repository website.

Here's the output:

This is a footer information: Built with Storefront & WooCommerce.
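This JDK-only sketch makes the XPath in the script easier to follow. It runs the same expression with javax.xml.xpath against a small, hypothetical, well-formed footer snippet. The snippet is not ScrapeMe's real markup. The JDK's XML parser would reject most real-world HTML, which is why scraping libraries ship HTML-tolerant parsers. Note that div[1] selects the first div child, because XPath positions start at 1.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Node;
import org.xml.sax.InputSource;

class FooterXPath {
    // Hypothetical, simplified footer markup (well-formed XML, unlike most real pages)
    static final String FOOTER =
            "<footer id=\"colophon\"><div>"
            + "<div><a href=\"https://woocommerce.com\">Built with Storefront &amp; WooCommerce</a>.</div>"
            + "<div><a href=\"/privacy\">Privacy policy</a></div>"
            + "</div></footer>";

    // Same expression as the WebMagic script
    static final String XPATH = "//*[@id=\"colophon\"]/div/div[1]/a";

    // Returns the text of the first node matched by XPATH, or null if nothing matches
    static String firstFooterLink(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            Node link = (Node) XPathFactory.newInstance().newXPath()
                    .evaluate(XPATH, doc, XPathConstants.NODE);
            return link == null ? null : link.getTextContent();
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not evaluate XPath", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(firstFooterLink(FOOTER));
    }
}
```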

8. Apache Nutch

Apache Nutch is an open-source Java web crawler software that is highly extensible. It provides a high-performance, reliable, and flexible architecture for efficient crawling. It helps you create a search engine that can index multiple websites, blog posts, images, and videos.

The library integrates with Apache Solr, which makes it a comprehensive solution for web search applications. Thanks to its scalability and flexibility, developers can use it to crawl anything from specific pages to the entire web.

👍 Pros:

- Nutch is freely available and open-source, letting developers customize and extend the software as needed.

- It scales up so that you can index millions of pages with ease.

- The framework is highly extensible since developers can add their own plugins for parsing, data storage, and indexing.

👎 Cons:

- Unable to crawl JavaScript or AJAX-based websites.

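- It requires significant set-up time and resources.

How does Apache Nutch crawl sites? The snippet below is a simplified illustration. In practice, Nutch crawls are usually launched with its command-line tools, such as bin/crawl or the individual inject, generate, fetch, parse, and updatedb steps.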

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.nutch.crawl.Crawl;
import org.apache.nutch.fetcher.Fetcher;
import org.apache.nutch.util.NutchConfiguration;

public class NutchScraper {
    public static void main(String[] args) throws Exception {
        // Initialize Nutch instance.
        String url = "https://scrapeme.live/shop/";
        Configuration conf = NutchConfiguration.create();
        Crawl nutch = new Crawl(conf);

        // Crawl the given URL
        nutch.crawl(url);

        Fetcher fetcher = new Fetcher(conf);
        fetcher.fetch(url);

        System.out.println("Title of website is: " + fetcher.getPage().getTitle());
    }
}
```

Here's the script output:

Title of website is: Products - ScrapeMe
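Nutch crawls in rounds. It injects seed URLs into a crawl database, generates a batch (segment) of URLs to fetch, fetches and parses them, and then updates the database with newly discovered links. The JDK-only sketch below models that cycle over a hypothetical in-memory link graph. It isn't Nutch's code or API. The topN parameter mirrors the idea of capping each generated segment.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class CrawlCycle {
    enum Status { UNFETCHED, FETCHED }

    // Runs up to depth generate -> fetch/parse -> update rounds and returns each round's segment
    static List<List<String>> run(Map<String, List<String>> web, List<String> seeds, int depth, int topN) {
        Map<String, Status> crawlDb = new LinkedHashMap<>(); // iteration order = discovery order
        seeds.forEach(url -> crawlDb.put(url, Status.UNFETCHED)); // "inject"
        List<List<String>> rounds = new ArrayList<>();
        for (int round = 0; round < depth; round++) {
            // "generate": pick up to topN unfetched URLs for this segment
            List<String> segment = new ArrayList<>();
            for (Map.Entry<String, Status> e : crawlDb.entrySet()) {
                if (e.getValue() == Status.UNFETCHED && segment.size() < topN) {
                    segment.add(e.getKey());
                }
            }
            if (segment.isEmpty()) {
                break; // nothing left to crawl
            }
            // "fetch" + "parse": a real crawler downloads pages here; we just look up outlinks
            List<String> outlinks = new ArrayList<>();
            for (String url : segment) {
                crawlDb.put(url, Status.FETCHED);
                outlinks.addAll(web.getOrDefault(url, List.of()));
            }
            // "updatedb": newly discovered URLs become candidates for the next round
            for (String link : outlinks) {
                crawlDb.putIfAbsent(link, Status.UNFETCHED);
            }
            rounds.add(segment);
        }
        return rounds;
    }

    public static void main(String[] args) {
        // Hypothetical link graph
        Map<String, List<String>> web = Map.of(
                "/shop/", List.of("/shop/page/2/", "/product/a/"),
                "/shop/page/2/", List.of("/product/b/"));
        System.out.println(run(web, List.of("/shop/"), 3, 10));
    }
}
```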

9. HtmlUnit

HtmlUnit is a Java framework used to simulate a web browser and automate tasks such as filling out forms, clicking buttons, and navigating between pages.

👍 Pros:

- The library doesn't have a GUI, which makes it great for running scraping and automation tasks on a server or in a continuous integration environment.

- It can be easily embedded in other Java applications, which is useful for those who want to add scraping capabilities to their existing projects.

- Suitable for professionals of any skill level since its API is simple and easy to use.

- It supports a wide range of web technologies, including HTML, CSS, and JavaScript.

👎 Cons:

- Unable to consistently simulate a real browser's behavior, which may lead to issues when scraping JavaScript-reliant pages.

- It supports only basic authentication techniques, so any website with more sophisticated login techniques may require a different tool for automation.

Do you want to see it in action? Here's an example:

```java
import com.gargoylesoftware.htmlunit.WebClient;
import com.gargoylesoftware.htmlunit.html.HtmlElement;
import com.gargoylesoftware.htmlunit.html.HtmlPage;

public class HtmlUnitScraper {
    public static void main(String[] args) throws Exception {
        // Create a new web client (closed automatically) and open a webpage
        try (WebClient webClient = new WebClient()) {
            // Don't abort on errors thrown by the page's own scripts
            webClient.getOptions().setThrowExceptionOnScriptError(false);
            HtmlPage page = webClient.getPage("https://angular.io");

            // Get the first element whose class is 'hero-headline no-title no-toc no-anchor'
            HtmlElement headline = page.getFirstByXPath("//*[@class='hero-headline no-title no-toc no-anchor']");

            System.out.println(headline != null ? headline.getTextContent().trim() : "Headline not found");
        }
    }
}
```

The output displays the h1 content:

Deliver web apps with confidence
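The XPath above has one weakness. @class='hero-headline no-title no-toc no-anchor' compares the whole attribute string, so the match fails if the site adds, removes, or reorders a class. A common XPath 1.0 idiom matches a single class token instead. This JDK-only sketch compares both approaches on a hypothetical snippet whose class attribute lacks no-anchor.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Node;
import org.xml.sax.InputSource;

class ClassMatch {
    // Hypothetical markup: the class attribute no longer contains "no-anchor"
    static final String PAGE =
            "<body><div class=\"hero-headline no-title no-toc\">Deliver web apps with confidence</div></body>";

    // Exact comparison: the whole attribute string must be identical
    static final String EXACT = "//*[@class='hero-headline no-title no-toc no-anchor']";

    // Token match: true if "hero-headline" is one of the space-separated class names
    static final String TOKEN =
            "//*[contains(concat(' ', normalize-space(@class), ' '), ' hero-headline ')]";

    // Returns the text of the first node matched by xpath, or null if nothing matches
    static String firstText(String xml, String xpath) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            Node node = (Node) XPathFactory.newInstance().newXPath()
                    .evaluate(xpath, doc, XPathConstants.NODE);
            return node == null ? null : node.getTextContent();
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not evaluate XPath", e);
        }
    }

    public static void main(String[] args) {
        System.out.println("Exact match: " + firstText(PAGE, EXACT));
        System.out.println("Token match: " + firstText(PAGE, TOKEN));
    }
}
```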

10. Htmleasy

Htmleasy is an open-source Java library designed to make parsing and generating HTML easier for developers. It provides a simple and intuitive interface on top of the underlying HTML structure, automatically trading complexity for ease of use.

👍 Pros:

- Easy to use.

- It provides great flexibility while still being intuitive.

- Open-source.

- It works with any modern version of Java.

👎 Cons:

- The documentation is incomplete.

- It may be overkill for small projects since its large size might not be practical for simple tasks.

How does Htmleasy work? The example below targets Shopping.com:

```java
import com.htmeasy; // Importing HTMLEasy library
import java.util.*; // Import util package for List, Map, ArrayList, and HashMap

public class ShoppingScraper {
    public static void main(String[] args) {
        // Set the URL of the web page we want to scrape
        String url = "https://www.shopping.com";

        // Get the content of the web page using the HTMLEasy get() function
        HtmlContent html = HtmlEasy.get(url);

        // List that stores the details of each product found on the page
        List<Map<String, String>> products = new ArrayList<>();

        // Iterate through each product card (class "topOfferCard card") and store its details
        for (HtmlElement product : html.getElementsByClassName("topOfferCard card")) {
            // Map that stores an individual product's details
            Map<String, String> itemDetails = new HashMap<>();

            // Get and store the product title from the element with classes "topDealName" and "card-link"
            itemDetails.put("title", product.selectFirst(".topDealName.card-link").innerText());

            products.add(itemDetails); // Add the product's details to the list
        }

        // Print all the items scraped from the web page
        System.out.println(products);
    }
}
```

The script prints the list of product titles it found on the page.

Conclusion

As you can see, there's a variety of good Java web scraping libraries to choose from. With so many options, the process of evaluating and selecting the best one for your needs gets even harder and more crucial.

| Feature | ZenRows | Selenium | Gecco | Jsoup | Jaunt | Jauntium | WebMagic | Apache Nutch | HtmlUnit | Htmleasy |
|---|---|---|---|---|---|---|---|---|---|---|
| Proxy Configuration | Auto | Manual | - | Manual | - | Manual | - | Manual | - | - |
| Dynamic Content | - | - | - | - | - | - | - | | | |
| Documentation | - | - | - | - | - | - | - | - | | |
| Anti-bot Bypass | - | - | - | - | - | - | - | - | - | |
| Infrastructure Scalability | Auto | - | - | - | - | - | - | - | - | - |
| HTML Auto-Parsing | - | - | - | - | - | - | - | - | - | |
| Ease of use | Easy | Moderate | Moderate | Easy | Moderate | Moderate | Moderate | Difficult | Moderate | Easy |

| Ease of use | Easy | Moderate | Moderate | Easy | Moderate | Moderate | Moderate | Difficult | Moderate | Easy |

While one great option to consider is ZenRows to handle anti-bot bypass (including rotating proxies, headless browsers, CAPTCHAs, and more), here's a brief summary of all Java web scraping tools we examined:

- ZenRows: It provides API-based web data extraction without getting blocked by anti-bot protections.

- Selenium: A well-known Java library for browser automation that identifies elements through the DOM.

- Gecco: With its versatility and easy-to-use interface, you can scrape entire websites or just parts of them.

- Jsoup: A Java web crawling library for parsing HTML and XML documents with a focus on ease of use and extensibility.

- Jaunt: A scraping and automation library that's used to extract data and automate web tasks.

- Jauntium: The framework allows you to automate with almost all modern web browsers.

- WebMagic: It provides a set of APIs that can be used to scrape web data in a declarative and efficient way.

- Apache Nutch: Open-source web crawler designed for large-scale projects involving millions of pages. It supports distributed processing on Hadoop clusters or other cloud computing frameworks.

- HtmlUnit: A Java crawler library used for simulating a browser, managing cookies, and making HTTP requests. Useful in testing scenarios with non-browser clients such as RESTful applications.

- Htmleasy: An easy-to-use webpage parser.

Happy scraping!

Frequent Questions

Which Libraries Are Used for Web Scraping in Java?

Some of the most used Java web scraping libraries include Selenium, ZenRows, Jsoup, and Jaunt. Selenium provides headless browsing, ZenRows handles anti-bot bypass, and Jsoup and Jaunt take care of data parsing.

What Is a Good Scraping Library for Java?

Choosing a good scraping library for Java depends on the nature of your project. You might select a browser automation tool like Selenium, a data parser like Jsoup, or anti-bot bypass APIs like ZenRows. Sometimes, a combination of them will bring the best results.

What Is the Most Popular Java Library for Web Scraping?

Jsoup is a good candidate for the most popular Java web scraping library. It's a powerful and versatile tool that's used to extract structured data from different kinds of pages, such as HTML and XML documents.

Which Java Web Scraping Library Works Best for You?

The best Java scraping library depends on your specific needs and requirements. For instance, Selenium is well suited for automating web browsers, Jsoup excels at data extraction, and ZenRows will allow you to scrape without getting blocked.
