As more websites implement anti-bot measures, web scrapers are often flagged and blocked. That's where proxies play a crucial role: they act as an intermediary between you and your target website and can significantly reduce your chances of getting blocked.

In this tutorial, you'll learn how to implement a Playwright proxy. Playwright is a popular browser automation library that can drive Chromium, Firefox, and WebKit, including in headless mode, with just a few lines of code. While it supports several languages (JavaScript/TypeScript, Python, Java, and .NET), we'll use Python.

Ready to enhance your web scraping capabilities? Let's dive in!

How Do I Add a Proxy to Playwright?

We'll follow these steps to add a proxy to Playwright:

- Choose a proxy provider. Select a reliable provider that meets your requirements for proxy type, speed, security, and success rate.

- Configure the proxy settings in Playwright. In your Python script, pass the proxy server address (and credentials, if required) in the `proxy` option when you launch the browser.

- Scrape the target website using your Playwright proxy. With the proxy configured, your requests will appear to originate from the proxy server's IP address. Navigate to the target website and extract the data you need.

Let's get some proxies and write the code for the steps above.

Prerequisites

To follow along in this tutorial, install the Playwright Python library using the pip command:

```bash
pip install playwright
```

Then, download the browsers Playwright needs with its `install` command:

```bash
playwright install
```

Installing Chromium, WebKit, and Firefox might take some time.

Next, you can quickly set up a Playwright web scraper. Here's how:

How to Use a Proxy in Playwright in Python

Playwright for Python offers two APIs: synchronous and asynchronous. The former is great for small-scale scraping where concurrency isn't a concern. The latter is recommended for projects where concurrency, scalability, and performance are essential.
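For comparison, here's what the same proxy setup might look like with the synchronous API. It's a minimal sketch: `fetch_ip_sync` is a hypothetical helper name, and it reads HTTPBin's JSON with `Response.json()` on the response that `page.goto()` returns instead of printing the page HTML. The Playwright import sits inside the function only so the helper can be defined without Playwright installed.

```python
def fetch_ip_sync(proxy: dict) -> dict:
    """Open httpbin.org/ip through `proxy` using Playwright's synchronous API."""
    # Imported inside the function so defining it doesn't require Playwright.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as playwright:
        # Same proxy dictionary as in the async examples
        browser = playwright.chromium.launch(proxy=proxy)
        page = browser.new_page()
        response = page.goto("https://httpbin.org/ip")
        # Parse the JSON body of the main response directly
        data = response.json()
        browser.close()
    return data
```

You'd call it with a proxy dictionary, for example `fetch_ip_sync({"server": "181.129.43.3:8080"})`. Keep in mind that free proxies like this one may be offline by the time you try.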

With that in mind, this tutorial focuses on Playwright's asynchronous API. So, we need to import `async_playwright` from `playwright.async_api`, along with Python's built-in `asyncio` module.

```python
import asyncio

from playwright.async_api import async_playwright
```

After that, define an asynchronous function that launches a new browser and passes your proxy settings as a dictionary to the `proxy` parameter, like in the example below. We got a free proxy from FreeProxyList.

```python
import asyncio

from playwright.async_api import async_playwright


async def main():
    async with async_playwright() as playwright:
        browser = await playwright.chromium.launch(
            proxy={
                "server": "181.129.43.3:8080",
            },
        )
```

Next, create a new browser context and open a new page within it.

```python
        context = await browser.new_context()
        page = await context.new_page()
```

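Lastly, navigate to your target website, scrape the necessary data, and close the browser afterward. For this example, we'll scrape HTTPBin's `/ip` endpoint, which returns the requester's IP address as JSON. Since the browser wraps that JSON in a minimal HTML document, `page.content()` returns the HTML.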

```python
        await page.goto("https://httpbin.org/ip")
        html_content = await page.content()
        print(html_content)

        await context.close()
        await browser.close()
```

Putting it all together and calling the function, we have the following code:

```python
import asyncio

from playwright.async_api import async_playwright


async def main():
    async with async_playwright() as playwright:
        browser = await playwright.chromium.launch(
            proxy={
                "server": "181.129.43.3:8080",
            },
        )
        context = await browser.new_context()
        page = await context.new_page()

        await page.goto("https://httpbin.org/ip")
        html_content = await page.content()
        print(html_content)

        await context.close()
        await browser.close()


asyncio.run(main())
```

This is our output:

```
...
{
  "origin": "181.129.43.3"
}
```

Bingo! The result above is the IP address of our free proxy. Now you know how to set up a Playwright proxy.
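Rather than eyeballing the output, you could check programmatically that the proxy is in effect. The sketch below assumes HTTPBin's `/ip` response is a JSON object with an `origin` field, as in the output above; `origin_ips` and `proxy_is_active` are hypothetical helper names. It parses the body with `Response.json()` on the response that `page.goto()` returns, which avoids digging the JSON out of the page HTML.

```python
from typing import List


def origin_ips(payload: dict) -> List[str]:
    """Split HTTPBin's "origin" field into individual IP addresses."""
    # HTTPBin may report several comma-separated IPs, e.g. when a proxy
    # adds an X-Forwarded-For header, so split and trim each entry.
    return [ip.strip() for ip in payload["origin"].split(",")]


async def proxy_is_active(proxy: dict, expected_ip: str) -> bool:
    """Load httpbin.org/ip through `proxy` and check the reported origin."""
    # Imported here so origin_ips() can be used without Playwright installed.
    from playwright.async_api import async_playwright

    async with async_playwright() as playwright:
        browser = await playwright.chromium.launch(proxy=proxy)
        try:
            page = await browser.new_page()
            # goto() returns the main resource's Response; for a JSON
            # endpoint, Response.json() parses the body directly.
            response = await page.goto("https://httpbin.org/ip")
            if response is None:
                raise RuntimeError("navigation returned no response")
            payload = await response.json()
        finally:
            await browser.close()
    return expected_ip in origin_ips(payload)
```

For example, `asyncio.run(proxy_is_active({"server": "181.129.43.3:8080"}, "181.129.43.3"))` should return `True` while that free proxy is still working.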

Playwright Proxy Authentication

Some proxy providers, particularly the ones offering premium proxies, require authentication to access their servers.

To authenticate a Playwright proxy, add your credentials as `username` and `password` keys in the `proxy` dictionary you pass to the `browser_type.launch()` method. Here's an example:

```python
browser = await playwright.chromium.launch(
    proxy={
        "server": "proxy_host:port",
        "username": "your_username",
        "password": "your_password",
    },
)
```

So, your Playwright scraper now looks like this:

```python
import asyncio

from playwright.async_api import async_playwright


async def main():
    async with async_playwright() as playwright:
        browser = await playwright.chromium.launch(
            proxy={
                "server": "proxy_host:port",
                "username": "your_username",
                "password": "your_password",
            },
        )
        context = await browser.new_context()
        page = await context.new_page()

        await page.goto("https://httpbin.org/ip")
        html_content = await page.content()
        print(html_content)

        await context.close()
        await browser.close()


asyncio.run(main())
```
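Many providers hand out authenticated proxies as a single URL such as `http://user:pass@host:port`, while Playwright expects the server, username, and password as separate keys. Here's a minimal sketch of a converter built on Python's `urllib.parse`; `proxy_from_url` is a hypothetical name, and it assumes the URL always includes a scheme and a port.

```python
from urllib.parse import unquote, urlsplit


def proxy_from_url(proxy_url: str) -> dict:
    """Convert "scheme://[user:pass@]host:port" into Playwright's proxy dict."""
    parts = urlsplit(proxy_url)
    # Without a scheme, urlsplit() can't find the host, so reject the input.
    if not parts.hostname or parts.port is None:
        raise ValueError(f"expected scheme://[user:pass@]host:port, got {proxy_url!r}")
    proxy = {"server": f"{parts.scheme}://{parts.hostname}:{parts.port}"}
    # Credentials are percent-decoded, since special characters in
    # passwords are often URL-encoded.
    if parts.username:
        proxy["username"] = unquote(parts.username)
    if parts.password:
        proxy["password"] = unquote(parts.password)
    return proxy
```

You could then pass the result straight to the launch call, for example `proxy=proxy_from_url("http://your_username:your_password@proxy_host:8000")`, where every value is a placeholder.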

Protocols to Set a Playwright Proxy: HTTP, HTTPS, SOCKS

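Playwright supports proxies that use protocols like HTTP, HTTPS, and SOCKS. You select the protocol with a scheme prefix in the `server` value, such as `http://` or `socks5://`; a bare `host:port`, like the free proxy above, is treated as an HTTP proxy. Below is a summary of when to use each protocol for web scraping with Playwright.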

- HTTP: Use it for plain HTTP requests, that is, for websites served over the unencrypted HTTP protocol.

- HTTPS: Use it for websites served over HTTPS, i.e., with SSL/TLS encryption. HTTPS proxies also work with plain HTTP.

- SOCKS: Use it when you need more than HTTP and HTTPS support. SOCKS proxies are typically preferred for their flexibility and their ability to handle various types of traffic, including non-HTTP protocols.

Ultimately, your choice of protocol depends on your project's needs and your proxy server's features. Also consider the protocol your target websites use when setting up your Playwright proxy.
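In Playwright, the protocol travels inside the `server` string: the Playwright docs show `http://myproxy.com:3128` and `socks5://myproxy.com:3128` as valid values. The small helper below, a hypothetical `make_proxy`, just makes the scheme explicit when building the `proxy` dictionary; it's a sketch, so check that your provider actually serves the protocol you pick.

```python
from typing import Optional


def make_proxy(host: str, port: int, scheme: str = "http",
               username: Optional[str] = None,
               password: Optional[str] = None) -> dict:
    """Build the dictionary Playwright's `proxy` option expects."""
    # The scheme prefix selects the proxy protocol (e.g. "http" or "socks5").
    proxy = {"server": f"{scheme}://{host}:{port}"}
    # Only include credentials when the provider requires them.
    if username is not None:
        proxy["username"] = username
    if password is not None:
        proxy["password"] = password
    return proxy
```

For instance, `make_proxy("proxy_host", 1080, scheme="socks5")` yields `{"server": "socks5://proxy_host:1080"}`, which you'd pass as `proxy=` to `launch()`.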

Implement a Rotating Proxy in Playwright Using Python

Websites can detect and block requests coming from specific IP addresses. Rotating proxies lets you switch IP addresses dynamically between requests, so your target website sees the requests as coming from different users.

To set up a rotating Playwright proxy in Python, you need a proxy pool. Let's build one from proxies listed on FreeProxyList.

Start by importing the necessary dependencies and defining your proxy pool. We'll import Python's `random` module, since we want to assign a random proxy server to each request.

```python
import asyncio
import random

from playwright.async_api import async_playwright

proxy_pool = [
    {"server": "190.61.88.147:8080"},
    {"server": "64.225.4.12:9991"},
    {"server": "213.230.108.208:3128"},
    # Add more proxy servers as needed
]
```
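Hard-coding dictionaries gets tedious once the pool grows. Assuming you keep one `host:port` entry per line (for example, copied from a free proxy list), a helper like the hypothetical `load_proxy_pool` below could build the same list structure while skipping blank lines, `#` comments, and duplicates. The `proxies.txt` file name is also just an example.

```python
def load_proxy_pool(lines):
    """Build a Playwright proxy pool from "host:port" lines.

    Blank lines, "#" comments, and duplicate entries are skipped.
    """
    pool = []
    seen = set()
    for line in lines:
        entry = line.strip()
        if not entry or entry.startswith("#") or entry in seen:
            continue
        seen.add(entry)
        pool.append({"server": entry})
    return pool


# Example usage with a hypothetical proxies.txt file:
# with open("proxies.txt") as f:
#     proxy_pool = load_proxy_pool(f)
```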

Use `random.choice()` to select a server from the proxy pool. Then, pass the chosen proxy to the `proxy` parameter of the `browser_type.launch()` method.

```python
async def main():
    proxy = random.choice(proxy_pool)
    async with async_playwright() as playwright:
        browser = await playwright.chromium.launch(proxy=proxy)
```

Lastly, add the rest of the Playwright code, like in our earlier examples, to complete it. Here's our complete program:

```python
import asyncio
import random

from playwright.async_api import async_playwright

proxy_pool = [
    {"server": "68.183.185.62:80"},
    {"server": "61.28.233.217:3128"},
    {"server": "213.230.108.208:3128"},
    # Add more proxy servers as needed
]


async def main():
    proxy = random.choice(proxy_pool)
    async with async_playwright() as playwright:
        browser = await playwright.chromium.launch(proxy=proxy)
        context = await browser.new_context()
        page = await context.new_page()

        await page.goto("https://httpbin.org/ip")
        text_content = await page.content()
        print(text_content)

        await context.close()
        await browser.close()


asyncio.run(main())
```

Each time you run the code manually, it should use a randomly selected proxy. To make multiple requests automatically, wrap the request logic in a `for` loop:

```python
import asyncio
import random

from playwright.async_api import async_playwright

proxy_pool = [
    {"server": "68.183.185.62:80"},
    {"server": "61.28.233.217:3128"},
    {"server": "213.230.108.208:3128"},
    # Add more proxy servers as needed
]


async def main():
    # Start Playwright once and launch a new browser for each request
    async with async_playwright() as playwright:
        for _ in range(5):  # Make 5 requests in this example
            # Pick a random proxy for this request
            proxy = random.choice(proxy_pool)
            browser = await playwright.chromium.launch(proxy=proxy)
            context = await browser.new_context()
            page = await context.new_page()

            await page.goto("https://httpbin.org/ip")
            text_content = await page.content()
            print(text_content)

            await context.close()
            await browser.close()


asyncio.run(main())
```

Here's the corresponding result:

```
{
  "origin": "68.183.185.62"
}
{
  "origin": "61.28.233.217"
}
{
  "origin": "213.230.108.208"
}
{
  "origin": "61.28.233.217"
}
{
  "origin": "68.183.185.62"
}
```
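`random.choice()` can hand out the same proxy several times before others get a turn: above, 61.28.233.217 and 68.183.185.62 each appear twice, while 213.230.108.208 appears once. If you'd rather spread requests evenly, a round-robin rotation with `itertools.cycle` is one alternative. This sketch reuses the pool from the example, and `next_proxy` is a hypothetical name.

```python
import itertools

proxy_pool = [
    {"server": "68.183.185.62:80"},
    {"server": "61.28.233.217:3128"},
    {"server": "213.230.108.208:3128"},
]

# cycle() walks the pool in order and starts over at the end,
# so every proxy gets a turn before any is reused.
proxy_cycle = itertools.cycle(proxy_pool)


def next_proxy() -> dict:
    """Return the next proxy in round-robin order."""
    return next(proxy_cycle)
```

In the loop, you'd replace `random.choice(proxy_pool)` with `next_proxy()`. Round-robin gives each proxy an equal share of requests, while random selection only does so on average.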

Awesome, you've created your first Playwright proxy rotator.
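The loop in the previous example makes its requests one after another, even though this tutorial chose the asynchronous API for concurrency. Here's a sketch of running them concurrently with `asyncio.gather`, capped by an `asyncio.Semaphore` so you don't launch too many browsers at once. `run_concurrently` and `fetch_all` are hypothetical names, and the sketch keeps the tutorial's approach of one browser per proxy, since the proxy is set at launch.

```python
import asyncio


async def run_concurrently(proxies, worker, limit: int = 3):
    """Run worker(proxy) for every proxy, with at most `limit` running at once."""
    semaphore = asyncio.Semaphore(limit)

    async def guarded(proxy):
        # Wait for a free slot before starting this worker
        async with semaphore:
            return await worker(proxy)

    # gather() returns results in the same order as `proxies`.
    return await asyncio.gather(*(guarded(proxy) for proxy in proxies))


async def fetch_all(proxies, limit: int = 3):
    """Fetch httpbin.org/ip once per proxy, sharing one Playwright instance."""
    # Imported here so run_concurrently() works without Playwright installed.
    from playwright.async_api import async_playwright

    async with async_playwright() as playwright:

        async def fetch_ip(proxy):
            # One browser per proxy, since the proxy is set at launch.
            browser = await playwright.chromium.launch(proxy=proxy)
            try:
                page = await browser.new_page()
                await page.goto("https://httpbin.org/ip")
                return await page.content()
            finally:
                await browser.close()

        return await run_concurrently(proxies, fetch_ip, limit)
```

For example, `results = asyncio.run(fetch_all(proxy_pool))` would return one page's HTML per proxy, in the same order as `proxy_pool`.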

However, keep in mind that free proxies are unreliable and only suitable for testing. For real web scraping projects, you need premium proxies or a web scraping API that provides and rotates proxies for you automatically.
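Because free proxies fail often, a rotator that moves on to another proxy when a request errors out can make testing less painful. In this minimal sketch, `fetch_with_fallback` (a hypothetical name) takes any coroutine function `fetch(proxy)`, such as a wrapper around the Playwright code from the earlier examples, and tries up to `attempts` distinct proxies in random order. It catches every `Exception` for simplicity; in real code you might narrow that to Playwright's `Error` class, importable from `playwright.async_api`.

```python
import asyncio
import random


async def fetch_with_fallback(proxy_pool, fetch, attempts: int = 3):
    """Try up to `attempts` distinct proxies in random order and return the
    first successful result of fetch(proxy)."""
    # sample() picks distinct proxies, so no proxy is retried twice.
    candidates = random.sample(proxy_pool, k=min(attempts, len(proxy_pool)))
    last_error = None
    for proxy in candidates:
        try:
            return await fetch(proxy)
        except Exception as error:  # e.g. timeouts or refused connections
            last_error = error
    raise RuntimeError("all proxy attempts failed") from last_error
```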

Let's explore these options next.

Proxies for Web Scraping: What to Know

While free proxies are readily available, they're easily detected and blocked. To avoid this issue, you need premium proxies, specifically residential proxies, which use IP addresses that internet service providers assign to real devices. We made a list of the best web scraping proxy services if you want to check it out.

Yet, in many cases, rotating residential proxies alone aren't enough. That's where ZenRows comes in: it's a web scraping API designed to get past anti-bot measures so you can retrieve the data you want.

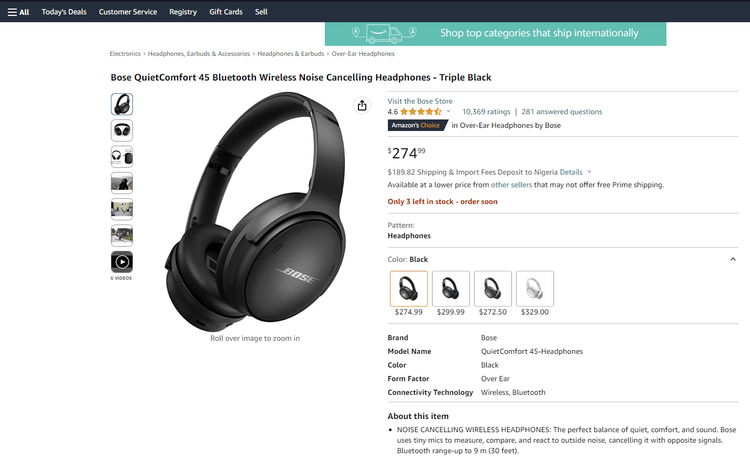

Let's see ZenRows in action against a well-protected Amazon product page.

To use ZenRows, sign up to get your free API key. It'll appear at the top of your screen.

After that, install the client with the pip command:

```bash
pip install zenrows
```

Then, import the ZenRows client in your Python script and create a new instance using your API key.

```python
from zenrows import ZenRowsClient

client = ZenRowsClient("Your_API_key")
```

Next, define your target URL and set the `premium_proxy` parameter to `true`. To get past anti-bot measures, also enable JavaScript rendering (`js_render`) and the anti-bot bypass (`antibot`).

Lastly, make a GET request.

```python
url = "https://www.amazon.com/Bose-QuietComfort-45-Bluetooth-Canceling-Headphones/dp/B098FKXT8L?th=1"
params = {"js_render": "true", "antibot": "true", "premium_proxy": "true"}
response = client.get(url, params=params)
```

Combining the above steps, we have the following code:

```python
# import the ZenRows client
from zenrows import ZenRowsClient

# create new ZenRows instance using your API key
client = ZenRowsClient("Your_API_key")

# specify target URL and parameters
url = "https://www.amazon.com/Bose-QuietComfort-45-Bluetooth-Canceling-Headphones/dp/B098FKXT8L?th=1"
params = {"js_render": "true", "antibot": "true", "premium_proxy": "true"}

# make GET request to target URL
response = client.get(url, params=params)
```

Verify it works by printing the response:

```python
print(response.text)
```

Here's our result:

```html
//...

<title>Amazon.com: Bose QuietComfort 45 Bluetooth Wireless Noise Cancelling Headphones - Triple Black : Clothing, Shoes & Jewelry</title>

//...

<span class="a-size-base a-text-bold"> This item: </span><span class="a-size-base"> Bose QuietComfort 45 Bluetooth Wireless Noise Cancelling Headphones - Triple Black </span></div></div><div class="a-section a-spacing-none a-spacing-top-micro _p13n-desktop-sims-fbt_fbt-desktop_display-flex__1gorZ"><div class="_p13n-desktop-sims-fbt_fbt-desktop_price-section__1Wo6p"><div class="a-row"><div class="_p13n-desktop-sims-fbt_price_p13n-sc-price-animation-wrapper__3ROfY"><div class="a-row"><span class="a-price" data-a-size="medium_plus" data-a-color="base"><span class="a-offscreen">$279.00</span><span aria-hidden="true"><span class="a-price-symbol">$</span><span class="a-price-whole">279<span class="a-price-decimal">.</span></span><span class="a-price-fraction">00</span></span></span></div></div></div></div></div><div class="a-section a-spacing-none _p13n-desktop-sims-fbt_fbt-desktop_shipping-info-show-box__17yWM"><div><div></div><div><div class="a-row"><span class="a-color-error">Only 2 left in stock - order soon.</span></div></div><span class="a-size-base a-color-secondary">Sold by WORLD WIDE STEREO and ships from Amazon Fulfillment.</span>
```

Great work! It feels great to gain access, right? You might want to see our tutorial on how to block resources in Playwright and scrape faster.

Conclusion

A Playwright proxy can be a valuable tool for avoiding website blocks. However, in some cases, you may need more than that.

We've explored several options and learned that free proxies are unreliable for real-world use cases. That's why rotating residential proxies are a better approach. But an anti-bot bypass toolkit like ZenRows is the ultimate solution. Sign up to get your free 1,000 API credits.
