Getting blocked while web scraping? Use a proxy server with Python requests to hide your IP and increase your chances of extracting the data you want.

Let's see how!

Prerequisites

You'll need Python 3 installed on your machine. And since following this tutorial will be easier if you know the fundamentals of web scraping with Python, feel free to check our guide.

Requests, the most popular HTTP client for Python, is the library we'll use to send traffic through a proxy. Install it with the following command:

pip install requests

Now, let's dive in!

How to Use Proxies with Python Requests

In this section, you'll learn how to perform basic Python requests with a proxy, where to get proxies from, how to authenticate and some other day-to-day mechanisms.

Perform Requests with Proxies

To use proxies with Python requests, start by importing the HTTP client library:

import requests

Then, get some valid proxies from Free Proxy List and define a dictionary with the proxy URLs associated with the HTTP and HTTPS protocols:

proxies = {

'http': 'http://103.167.135.111:80',

'https': 'http://116.98.229.237:10003'

}

requests will perform HTTP requests over the http proxy and handle HTTPS traffic over the https one.
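To make that mapping concrete, here's a simplified sketch of how the scheme of the target URL selects an entry from the dictionary. The helper name pick_proxy is hypothetical and only illustrates the idea; the real lookup inside requests is more elaborate (for example, it also accepts keys that target a specific host).

```python
from urllib.parse import urlparse

proxies = {
    'http': 'http://103.167.135.111:80',
    'https': 'http://116.98.229.237:10003',
}

def pick_proxy(url, proxies):
    """Return the proxy URL registered for the scheme of `url`, or None."""
    scheme = urlparse(url).scheme  # 'http' or 'https'
    return proxies.get(scheme)

print(pick_proxy('https://httpbin.org/ip', proxies))
print(pick_proxy('http://httpbin.org/ip', proxies))
```

Note that the https entry itself uses an http:// URL: the key describes the traffic being routed, while the value describes how to reach the proxy.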

Note: Free proxies are short-lived! The ones above will probably no longer work for you. Replace them with fresh free proxies.

As you can deduce from the example above, proxy URLs follow this syntax:

<PROXY_PROTOCOL>://<PROXY_IP_ADDRESS>:<PROXY_PORT>
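If you want to double-check a proxy URL before using it, the standard library can split it into the parts named in the syntax above. This is a small sketch; describe_proxy is a hypothetical helper name.

```python
from urllib.parse import urlparse

def describe_proxy(proxy_url):
    """Split a proxy URL into protocol, IP address (or hostname) and port."""
    parts = urlparse(proxy_url)
    if not parts.scheme or not parts.hostname or parts.port is None:
        raise ValueError(f'Not a complete proxy URL: {proxy_url!r}')
    return {'protocol': parts.scheme, 'host': parts.hostname, 'port': parts.port}

print(describe_proxy('http://103.167.135.111:80'))
```

A URL without the protocol part, such as 103.167.135.111:80, is rejected with a ValueError instead of failing later inside the request.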

Now, perform an HTTP request with Python requests through the proxy server:

# target website

url = 'https://httpbin.org/ip'

# making an HTTP GET request through a proxy

response = requests.get(url, proxies=proxies)

The full code of your basic Python requests proxy script will look like this:

import requests

proxies = {

'http': 'http://103.167.135.111:80',

'https': 'http://116.98.229.237:10003'

}

url = 'https://httpbin.org/ip'

response = requests.get(url, proxies=proxies)

Verify it works:

print(response)

You'll get the following response:

<Response [200]>

That means the target server responded with an HTTP 200 status code. In other words: The HTTP request was successful! 🥳

Please note that requests natively supports only HTTP and HTTPS proxies. If your traffic has to go through a SOCKS proxy, you'll need the optional socks extension, which you can install this way:

pip install "requests[socks]"

Then, specify the SOCKS proxy to use:

import requests

proxies = {

'http': 'socks5://<PROXY_IP_ADDRESS>:<PROXY_PORT>',

'https': 'socks5://<PROXY_IP_ADDRESS>:<PROXY_PORT>'

}

url = 'https://httpbin.org/ip'

response = requests.get(url, proxies=proxies)
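One detail worth knowing, per the requests documentation: with the socks5 scheme, hostnames are resolved on your machine, while socks5h asks the proxy to resolve them. The sketch below builds either variant; socks_proxies is a hypothetical helper, and 127.0.0.1:1080 is a placeholder address, not a working proxy.

```python
def socks_proxies(host, port, remote_dns=False):
    """Build a proxies dictionary for a SOCKS5 proxy.

    remote_dns=True uses the socks5h scheme, so DNS lookups happen on the proxy.
    """
    scheme = 'socks5h' if remote_dns else 'socks5'
    proxy_url = f'{scheme}://{host}:{port}'
    return {'http': proxy_url, 'https': proxy_url}

# placeholder address: replace it with your own SOCKS proxy
proxies = socks_proxies('127.0.0.1', 1080, remote_dns=True)
print(proxies)
```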

Print the Response

HTTPBin, our target page, returns the IP of the caller in JSON format, so retrieve the response to the request with the json() method.

printable_response = response.json()

In case of a non-JSON response, use this other method:

printable_response = response.text

Time to print the response.

print(printable_response)
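If you don't know in advance whether the body is JSON, you can combine the two approaches. The sketch below relies on json() raising a ValueError (or a subclass of it) when the body can't be parsed; printable_body is a hypothetical helper name.

```python
def printable_body(response):
    """Return the parsed JSON body when possible, otherwise the raw text."""
    try:
        return response.json()
    except ValueError:
        # the body is not valid JSON, so fall back to plain text
        return response.text

# usage with the response obtained earlier:
# print(printable_body(response))
```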

Your Python requests proxy script should look as follows:

import requests

proxies = {

'http': 'http://103.167.135.111:80',

'https': 'http://116.98.229.237:10003'

}

url = 'https://httpbin.org/ip'

response = requests.get(url, proxies=proxies)

print(response.json())

Run it, and you'll get an output similar to this:

{'origin': '116.98.229.237'}

The origin field contains the IP of the proxy, not yours. That confirms requests made the HTTPS request over a proxy.

Requests Methods

The methods exposed by requests match the HTTP methods. The most popular ones are:

- GET: To retrieve data from a server.

response = requests.get('https://httpbin.org/ip')

- POST: To send data to a server.

response = requests.post('https://httpbin.org/anything', data={"key1": "a", "key2": "b"})

Take a look at the remaining HTTP methods in this library:

| Method | Syntax | Used to |
|---|---|---|
| PUT | requests.put(url, data=update_data) | Update an existing resource on the server |
| PATCH | requests.patch(url, data=partial_update_data) | Partially update a resource on the server |
| DELETE | requests.delete(url) | Delete a resource on the server |
| HEAD | requests.head(url) | Retrieve the headers of a resource |
| OPTIONS | requests.options(url) | Retrieve the supported HTTP methods for a URL |
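To see what one of these calls would send without contacting any server, you can build and prepare the request by hand. This sketch uses the Request class and its prepare() method; the payload is made up for illustration.

```python
import requests

update_data = {'key1': 'a'}  # made-up payload

# build the request without sending it
request = requests.Request('PUT', 'https://httpbin.org/anything', data=update_data)
prepared = request.prepare()

print(prepared.method)                   # the HTTP method that would be used
print(prepared.url)                      # the final URL
print(prepared.body)                     # the form-encoded payload
print(prepared.headers['Content-Type'])  # how the payload is encoded
```

To actually send it through a proxy, pass the proxies argument to the matching call, for example requests.put(url, data=update_data, proxies=proxies).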

Proxy Authentication with Python Requests: Username & Password

Some proxy servers are protected by authentication for security reasons so that only users with a pair of credentials can access them. That usually happens with premium proxies or commercial solutions.

Follow this syntax to specify a username and password in the URL of an authenticated proxy:

<PROXY_PROTOCOL>://<USERNAME>:<PASSWORD>@<PROXY_IP_ADDRESS>:<PROXY_PORT>

See an example:

# ...

proxies = {

'http': 'http://fgrlkbxt:<PASSWORD>@<PROXY_IP_ADDRESS>:7492',

'https': 'https://fgrlkbxt:<PASSWORD>@<PROXY_IP_ADDRESS>:6286'

}

# ...
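Credentials that contain reserved characters such as @ or : would break this syntax unless they are percent-encoded. Below is a minimal sketch that builds the URL safely with the standard library; authenticated_proxy_url is a hypothetical helper, and the credentials and address are placeholders.

```python
from urllib.parse import quote

def authenticated_proxy_url(protocol, username, password, host, port):
    """Build <PROXY_PROTOCOL>://<USERNAME>:<PASSWORD>@<HOST>:<PORT>."""
    # safe='' makes quote() encode every reserved character, including '/' and '@'
    user = quote(username, safe='')
    secret = quote(password, safe='')
    return f'{protocol}://{user}:{secret}@{host}:{port}'

# placeholder credentials and address, for illustration only
proxy_url = authenticated_proxy_url('http', 'user', 'p@ss:word', '203.0.113.10', 8080)
proxies = {'http': proxy_url, 'https': proxy_url}
print(proxy_url)
```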

Error 407: Proxy Authentication Required

The HTTP status code 407: Proxy Authentication Required occurs when making a request through a proxy server that requires authentication. This error indicates that the request didn't carry valid credentials for the proxy.

To fix it, make sure the proxy URL contains the correct username and password. Find out more about the several types of authentication supported.
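Here's a hedged sketch of how a script might surface this problem clearly. The helper names are hypothetical. It also assumes that, for HTTPS targets, a proxy that rejects the tunnel shows up as a ProxyError exception rather than as a 407 response, which is worth verifying against your own proxy.

```python
import requests

def is_proxy_auth_failure(response):
    """Return True when the proxy answered with 407 Proxy Authentication Required."""
    return response.status_code == 407

def get_through_proxy(url, proxies, timeout=10):
    """GET `url` through `proxies`, raising a readable error on proxy problems."""
    try:
        response = requests.get(url, proxies=proxies, timeout=timeout)
    except requests.exceptions.ProxyError as error:
        # assumption: failures while setting up an HTTPS tunnel end up here
        raise RuntimeError(f'Could not use the proxy: {error}') from error
    if is_proxy_auth_failure(response):
        raise RuntimeError('407 from the proxy: check the username and password')
    return response
```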

Proxy Session Using Python Requests

When making many requests through a proxy server, you may need a session. A Session object can reuse the same TCP connection for several requests, which saves time and improves performance compared to making single requests. Also, it keeps track of cookies.

Use a proxy session in Python requests as shown here:

import requests

# initialize a session

session = requests.Session()

# set the proxies in the session object

session.proxies = {

'http': 'http://103.167.135.111:80',

'https': 'http://116.98.229.237:10003'

}

url = 'https://httpbin.org/ip'

# perform an HTTP GET request over the session

response = session.get(url)
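The requests documentation warns that values set in session.proxies can be overridden by proxies found in the environment. If you want the session to use only the proxies you configured, one option is to turn off trust_env, as in this sketch. make_proxy_session is a hypothetical helper; keep in mind that trust_env also controls other environment-based settings, not only proxies.

```python
import requests

def make_proxy_session(proxies, ignore_environment=True):
    """Create a Session that sends every request through `proxies`."""
    session = requests.Session()
    session.proxies.update(proxies)
    if ignore_environment:
        # don't let HTTP_PROXY/HTTPS_PROXY from the environment take over
        session.trust_env = False
    return session

session = make_proxy_session({
    'http': 'http://103.167.135.111:80',
    'https': 'http://116.98.229.237:10003',
})
print(session.proxies)

# the session can then be used as before, e.g.:
# response = session.get('https://httpbin.org/ip', timeout=10)

# release the pooled connections when you're done
session.close()
```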

Environment Variable for a Python requests Proxy

You can DRY up some code if your Python script uses the same proxies for each request. By default, requests relies on the HTTP proxy configuration defined by these environment variables:

- HTTP_PROXY: It corresponds to the http key of the proxies dictionary.

- HTTPS_PROXY: It corresponds to the https key of the proxies dictionary.

Open the terminal and set the two environment variables this way:

export HTTP_PROXY="http://103.167.135.111:80"

export HTTPS_PROXY="http://116.98.229.237:10003"

Then, remove the proxy logic from your script, and you'll get to this:

import requests

url = 'https://httpbin.org/ip'

response = requests.get(url)
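If you'd rather set the variables from Python, for instance in a launcher script, something like the following works for the current process and the processes it starts. set_proxy_environment is a hypothetical helper, and urllib.request.getproxies() is used here only to inspect what the environment exposes.

```python
import os
import urllib.request

def set_proxy_environment(http_proxy, https_proxy):
    """Expose the proxies to this process through environment variables."""
    # set both spellings so tools that read only one of them agree
    for name, value in (('HTTP_PROXY', http_proxy), ('HTTPS_PROXY', https_proxy)):
        os.environ[name] = value
        os.environ[name.lower()] = value

set_proxy_environment('http://103.167.135.111:80', 'http://116.98.229.237:10003')

# getproxies() is the standard-library view of the proxy environment
print(urllib.request.getproxies())
```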

Great! You now know the basics of proxies in Python with requests! Let's see them in action in some more advanced scenarios.

Use a Rotating Proxy with Python Requests

When your script makes many requests in a short time, the server might consider that suspicious and ban your IP. A multiple-proxy strategy makes that much less likely. The idea behind rotating proxies is to switch to a new one after a certain period of time or a number of requests, so that you appear as a different user each time.

Let's see how to implement a proxy rotator in Python with requests in a real scenario!

Rotate IPs with a Free Solution

As before, you need to retrieve a pool of proxies. If you don't know where to get it, check our list of the best proxy providers for web scraping.

Take a look at the Python logic here:

import random

import requests

# some free proxies

HTTP_PROXIES = [

'http://129.151.91.248:80',

'http://18.169.189.181:80',

# ...

'http://212.76.110.242:80'

]

HTTPS_PROXIES = [

'http://31.186.239.245:8080',

'http://5.78.50.231:8888',

# ...

'http://52.4.247.252:8129'

]

# a function to perform an HTTP request

# over a rotating proxy system

def rotating_proxy_request(http_method, url, max_attempts=3):
    response = None
    attempts = 1

    while attempts <= max_attempts:
        try:
            # get a random proxy
            http_proxy = random.choice(HTTP_PROXIES)
            https_proxy = random.choice(HTTPS_PROXIES)
            proxies = {
                'http': http_proxy,
                'https': https_proxy
            }
            print(f'Using proxy: {proxies}')

            # perform the request over the proxy,
            # waiting up to 5 seconds for the server to respond
            # through the proxy before failing
            response = requests.request(http_method, url, proxies=proxies, timeout=5)
            break
        except requests.exceptions.RequestException as e:
            # log the error
            print(e)
            print(f'Attempt {attempts} failed!')
            print('Trying with a new proxy...')

            # new attempt
            attempts += 1

    return response

The snippet above uses random.choice() to extract a random proxy from each pool. Then, it performs the desired HTTP request over it with requests.request(), a function that allows you to specify the HTTP method to use.

Free proxies are failure-prone. Thus, rotating_proxy_request() tries up to three times before returning None. Also, free proxies are usually very slow, so you should set the timeout parameter.
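When a proxy fails, it helps to know why. The sketch below maps the exceptions raised by requests to short labels you could print in the except block. classify_failure and its labels are hypothetical, and the timeout values are arbitrary examples of the (connect, read) tuple form that requests accepts.

```python
import requests

# (connect timeout, read timeout) in seconds; the values are arbitrary examples
TIMEOUT = (5, 15)

def classify_failure(error):
    """Map an exception to a short, log-friendly label."""
    # order matters: the most specific classes are checked first
    if isinstance(error, requests.exceptions.ProxyError):
        return 'proxy refused or unreachable'
    if isinstance(error, requests.exceptions.SSLError):
        return 'TLS problem'
    if isinstance(error, requests.exceptions.ConnectTimeout):
        return 'timed out while connecting'
    if isinstance(error, requests.exceptions.ReadTimeout):
        return 'timed out while waiting for data'
    if isinstance(error, requests.exceptions.RequestException):
        return 'other request failure'
    return 'unexpected error'

print(classify_failure(requests.exceptions.ReadTimeout('slow proxy')))
```

You would pass timeout=TIMEOUT to the request call and log classify_failure(e) next to the raw error message.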

Note: Keep in mind that this is just a simple approach to rotating IPs. Take a look at our complete guide to learn more about rotating a proxy in Python.
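Random choice is only one rotation policy. As an alternative illustration, here's a sketch that switches to the next proxy after a fixed number of requests, going through the pool in order. The RoundRobinProxies class is hypothetical and uses only the standard library.

```python
from itertools import cycle

class RoundRobinProxies:
    """Hand out the same proxy for N requests, then move to the next one."""

    def __init__(self, proxy_urls, requests_per_proxy=2):
        if not proxy_urls:
            raise ValueError('The proxy pool is empty')
        self._pool = cycle(proxy_urls)
        self._requests_per_proxy = requests_per_proxy
        self._served = 0
        self._current = next(self._pool)

    def next_proxies(self):
        """Return the proxies dictionary to use for the next request."""
        if self._served >= self._requests_per_proxy:
            # quota reached: rotate to the next proxy in the pool
            self._current = next(self._pool)
            self._served = 0
        self._served += 1
        return {'http': self._current, 'https': self._current}

rotator = RoundRobinProxies(['http://129.151.91.248:80', 'http://18.169.189.181:80'])
for _ in range(5):
    print(rotator.next_proxies()['http'])
```

Each call to next_proxies() returns a dictionary you can pass as the proxies argument of a request.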

Let's try the IP rotator function against a real target that implements anti-bot measures:

response = rotating_proxy_request('get', 'https://www.g2.com/products/zenrows/reviews')

print(response.status_code)

The output:

403

It looks like the server responded with a 403 Forbidden error, which means the target server detected your rotating-proxy request as coming from a bot. As this real-world example shows, free proxies aren't reliable, so you should avoid them!
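Since a blocked request still returns a response, rotating_proxy_request() stops at the first 403 instead of trying another proxy. One possible refinement, sketched below, is to treat certain status codes as failures too. The set of codes is an assumption (403 Forbidden and 429 Too Many Requests are common signs of blocking), and looks_blocked is a hypothetical helper.

```python
BLOCK_STATUS_CODES = {403, 429}  # assumption: adjust to what your target returns

def looks_blocked(status_code):
    """Return True when the status code suggests the request was blocked."""
    return status_code in BLOCK_STATUS_CODES

for code in (200, 403, 429):
    print(code, looks_blocked(code))
```

Inside rotating_proxy_request(), you could check looks_blocked(response.status_code) right after the request and move on to the next attempt instead of leaving the loop.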

The solution? A premium proxy like ZenRows.

Ignore SSL Certificate

By default, requests verifies SSL certificates on HTTPS requests. And, when dealing with proxies, certificate verification can lead to SSLError errors.

To avoid those errors, disable SSL verification with verify=False:

# ...

response = requests.request(
    http_method,
    url,
    proxies=proxies,
    timeout=5,
    # disable SSL certificate verification
    verify=False
)

Note: verify=False is a recommended config when adopting premium proxies.
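With verification disabled, every call emits an InsecureRequestWarning, and requests no longer checks that it's talking to the intended server. If your proxy provider publishes a CA certificate, pointing verify at that file keeps verification on. The sketch below covers both cases; tls_options and the certificate path are hypothetical.

```python
import urllib3

def tls_options(ca_bundle_path=None):
    """Return the keyword arguments controlling certificate verification."""
    if ca_bundle_path:
        # verify against the given CA bundle instead of turning verification off
        return {'verify': ca_bundle_path}
    # verification is off: silence the warning printed on every request
    urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
    return {'verify': False}

print(tls_options())
print(tls_options('/path/to/proxy-ca.pem'))  # hypothetical path
```

You would then call, for example, requests.get(url, proxies=proxies, timeout=5, **tls_options()).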

Premium Proxy to Avoid Getting Blocked

Premium proxies have been a popular solution to avoid being blocked for years. They used to be expensive, but that has changed with the rise of solutions like ZenRows.

ZenRows offers premium proxies starting at just $49 per month. Also, they provide several benefits, including geo-location, flexible pricing, and a pay-per-successful-request policy.

To get started with ZenRows, sign up for a free trial.

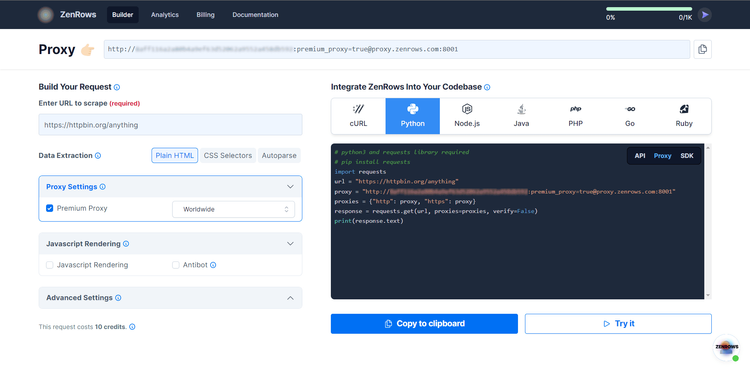

Once you get to the following Request Builder, check Premium Proxy on the left to enable the feature, and choose the Proxy mode on the right. Then, click the "Copy to clipboard" button and paste the Python code into your script.

import requests

proxy = 'http://<YOUR_ZENROWS_API_KEY>:@proxy.zenrows.com:8001'

proxies = {

'http': proxy,

'https': proxy

}

url = 'https://httpbin.org/anything'

response = requests.get(url, proxies=proxies, verify=False)

print(response.text)

Note that verify=False is mandatory when using premium proxies in ZenRows.

You'll see a different IP at each run in the origin field returned by the JSON produced by HTTPBin. Congrats! Your premium proxy with Python requests script is ready!
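To keep the API key out of your source code, you could read it from an environment variable. This is a minimal sketch: the variable name ZENROWS_API_KEY and the helper zenrows_proxies are assumptions, while the proxy URL format is the one from the script above.

```python
import os

def zenrows_proxies(api_key):
    """Build the proxies dictionary for the proxy endpoint used above."""
    proxy = f'http://{api_key}:@proxy.zenrows.com:8001'
    return {'http': proxy, 'https': proxy}

# ZENROWS_API_KEY is a hypothetical variable name; set it in your shell first
api_key = os.environ.get('ZENROWS_API_KEY', '<YOUR_ZENROWS_API_KEY>')
proxies = zenrows_proxies(api_key)

# then, as before:
# response = requests.get('https://httpbin.org/anything', proxies=proxies, verify=False)
```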

Conclusion

This step-by-step tutorial covered the most important lessons about proxies with requests in Python. You started from the basic setup and have become a proxy master!

Now you know:

- What a web proxy is and why free proxies aren't reliable.

- The basics of using a proxy with requests in Python.

- How to implement a rotating proxy.

- How to use a premium proxy.

Proxies help you bypass anti-bot systems. Yet, some proxies are more reliable than others, and ZenRows offers the best on the market. With it, you'll get access to a reliable rotating proxy system with a simple API call.
