Have you ever gotten blocked while web scraping with Axios? Chances are the target site identified you as a bot because of your User Agent. The UA is a string of data your browser sends to the website's server, indicating information such as the browser and operating system you're using.

You need to change the User Agent in Axios to avoid detection and access the information you want, and we'll explore how to do that in this tutorial.

Let's get started!

What Is the User Agent in Axios?

The User Agent is an essential fingerprint for a server to identify clients and deliver the appropriate content (e.g., display a mobile version of a page for mobile browsers). Every HTTP request includes it as part of its headers.

UAs generally have this format:

User-Agent: <product> / <product-version> <comment>

Web browsers use this syntax:

User-Agent: Mozilla/5.0 (<system-information>) <platform> (<platform-details>) <extensions>

For example, here's what a Chrome UA string would look like:

Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36

The above string indicates the client is compatible with Mozilla products, uses the AppleWebKit rendering engine, and is compatible with Chrome and Safari.
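To make that structure concrete, here's a minimal sketch that splits a UA string into its product tokens and optional comments. The parseUserAgent helper is a hypothetical name used for illustration; it isn't part of Axios or Node, and the regular expression only covers the common browser layout shown above.

```javascript
// Sketch: split a User-Agent string into "name/version" tokens, each with
// an optional "(comment)". parseUserAgent is a hypothetical helper.
function parseUserAgent(ua) {
  const products = [];
  // name/version, optionally followed by a parenthesized comment
  const pattern = /([^\s/()]+)\/([^\s()]+)(?:\s*\(([^)]*)\))?/g;
  let match;
  while ((match = pattern.exec(ua)) !== null) {
    products.push({
      name: match[1],
      version: match[2],
      comment: match[3] || null,
    });
  }
  return products;
}

const chromeUa =
  'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36';

// Print one line per product token found in the string.
for (const { name, version, comment } of parseUserAgent(chromeUa)) {
  console.log(name, version, comment || '');
}
```

For the Chrome string above, this yields four tokens: Mozilla, AppleWebKit, Chrome and Safari, with the system information attached to the first one as its comment.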

Alternatively, here's a Firefox UA string:

Mozilla/5.0 (X11; Linux i686; rv:110.0) Gecko/20100101 Firefox/110.0

For most websites, the first step in bot detection is checking the UA string and blocking any client that doesn't have a valid web browser User Agent.

We'll show you how to see your UA, but first make sure you have Node.js installed, which ships with npm. Then, install Axios with npm:

npm install axios

So, how do you see your Axios User Agent? The HTTPBin endpoint returns information about a client's headers, including its UA, when a GET request is made. Let's make a request to see what we get.

const axios = require('axios');

axios.get('https://httpbin.org/headers')

.then(({ data }) => console.log(data));

You should have a response similar to this:

{

headers: {

Accept: 'application/json, text/plain, */*',

'Accept-Encoding': 'gzip, compress, deflate, br',

Host: 'httpbin.org',

'User-Agent': 'axios/1.3.5',

'X-Amzn-Trace-Id': 'Root=1-643cda26-6ce08bef6999e1d419d37041'

}

}

The User Agent here is axios/1.3.5, the default UA string Axios sends for every request. As you can imagine, any website can easily tell it's not a valid browser client and may block it. Specifying a real User Agent for Axios is necessary to avoid this scenario.
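To illustrate how easy that check is, here's a rough sketch of the kind of test a server could run on the incoming UA. The looksLikeBrowser function and its patterns are assumptions made for this example; real anti-bot systems combine many more signals.

```javascript
// Sketch of a naive server-side UA check. looksLikeBrowser is a
// hypothetical name, and the patterns are simplified assumptions.
function looksLikeBrowser(userAgent) {
  if (!userAgent) return false;
  // Mainstream browsers start with "Mozilla/5.0 (" and name a browser product.
  return /^Mozilla\/5\.0 \(/.test(userAgent) &&
    /(Chrome|Firefox|Safari|Edg)\//.test(userAgent);
}

console.log(looksLikeBrowser('axios/1.3.5')); // false
console.log(
  looksLikeBrowser('Mozilla/5.0 (X11; Linux i686; rv:110.0) Gecko/20100101 Firefox/110.0')
); // true
```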

Set User Agent Using Axios

You can set a custom Axios User Agent by specifying it in your request configuration's headers option.

Let's say this is the Chrome UA string we want to set:

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36

Update your code to add it in the headers:

const axios = require('axios');

const headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36'

}

axios.get('https://httpbin.org/headers', {

headers,

})

.then(({ data }) => console.log(data));

Run it, and you'll get a similar response to the one below:

{

headers: {

Accept: 'application/json, text/plain, */*',

'Accept-Encoding': 'gzip, compress, deflate, br',

Host: 'httpbin.org',

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',

'X-Amzn-Trace-Id': 'Root=1-643d039e-36575b1273b5f45a18b7d9c9'

}

}

Awesome! The UA has changed to the one set.
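If you send many requests, you may not want to rebuild the headers object each time. One possible approach is a small helper that adds the UA to any request configuration. The withUserAgent name is hypothetical, and timeout is just an example of another Axios config option you might already be passing.

```javascript
// Hypothetical helper: return a copy of a request config with the
// User-Agent header set, leaving the caller's object untouched.
function withUserAgent(config, userAgent) {
  return {
    ...config,
    headers: { ...(config.headers || {}), 'User-Agent': userAgent },
  };
}

const chromeUa =
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36';

const config = withUserAgent(
  { timeout: 5000, headers: { Accept: 'application/json' } },
  chromeUa
);
console.log(config.headers);

// With Axios installed, the result can be passed straight to a request:
// axios.get('https://httpbin.org/headers', config)
//   .then(({ data }) => console.log(data));
```

Alternatively, axios.create({ headers }) returns a client instance that applies the same headers to every request it makes.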

As a next step, let's get a random UA for each request. Start by creating an array of UA strings (you can find some suitable options in our list of top User Agents for web scraping).

const userAgents = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

];

Update the User-Agent header, add a loop and make the request multiple times:

for (let i = 0; i < 3; i++) {

// Get random UA

const ua = userAgents[Math.floor(Math.random() * userAgents.length)];

// Set UA in headers

const headers = {

'User-Agent': ua,

}

// Make request

axios.get('https://httpbin.org/headers', {

headers,

})

.then(({ data }) => console.log(data));

}

Now, you'll have a random UA every time a request is made:

{

headers: {

Accept: 'application/json, text/plain, */*',

'Accept-Encoding': 'gzip, compress, deflate, br',

Host: 'httpbin.org',

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'X-Amzn-Trace-Id': 'Root=1-643d15cc-32cfd88246735b5928a7fb29'

}

}

{

headers: {

Accept: 'application/json, text/plain, */*',

'Accept-Encoding': 'gzip, compress, deflate, br',

Host: 'httpbin.org',

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

'X-Amzn-Trace-Id': 'Root=1-643d15cc-0cfaab326c9e4cf859126dbf'

}

}

{

headers: {

Accept: 'application/json, text/plain, */*',

'Accept-Encoding': 'gzip, compress, deflate, br',

Host: 'httpbin.org',

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',

'X-Amzn-Trace-Id': 'Root=1-643d15cc-231af09d4e31bc6e4a198345'

}

}
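The selection logic can also be pulled into a reusable function. The sketch below assumes a hypothetical pickRandomUserAgent helper that takes the random source as a parameter, which makes the choice predictable when you want to test it. The list is shortened to three of the strings from the array above.

```javascript
// Hypothetical helper: pick one UA from a list. The random source is a
// parameter so the choice can be made deterministic in tests.
function pickRandomUserAgent(userAgents, random = Math.random) {
  if (userAgents.length === 0) {
    throw new Error('userAgents must not be empty');
  }
  return userAgents[Math.floor(random() * userAgents.length)];
}

const userAgents = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
];

// Build the headers for three requests, each with its own random UA.
for (let i = 0; i < 3; i++) {
  const headers = { 'User-Agent': pickRandomUserAgent(userAgents) };
  console.log(headers);
  // With Axios: axios.get('https://httpbin.org/headers', { headers })
}
```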

We've progressed!

This method of randomly changing the Axios User Agent works, but it isn't effective in the long run: it's difficult to gather many valid strings and to constantly re-test and update them. So, let's see how to do it properly at scale for web scraping.

How To Change the Axios User Agent At Scale

Creating a huge User Agent list to select from randomly is problematic. Namely, you may unknowingly include UAs with wrong information, such as invalid browser versions, or they can quickly become outdated.
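As an illustration of the maintenance this involves, here's a small sketch that drops outdated Chrome strings from a list. Both helper names and the minimum version of 109 are assumptions chosen for the example; you'd have to keep raising that threshold yourself as new browser versions come out.

```javascript
// Hypothetical helper: read the Chrome major version from a UA string,
// or return null when the string does not mention Chrome.
function chromeMajorVersion(userAgent) {
  const match = /Chrome\/(\d+)\./.exec(userAgent);
  return match ? Number(match[1]) : null;
}

// Keep non-Chrome UAs as they are and drop Chrome UAs older than minMajor.
function dropOutdatedChrome(userAgents, minMajor) {
  return userAgents.filter((ua) => {
    const major = chromeMajorVersion(ua);
    return major === null || major >= minMajor;
  });
}

const candidates = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
];

// 109 is an assumed threshold for this example only.
const fresh = dropOutdatedChrome(candidates, 109);
console.log(fresh.length); // 2
```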

On top of that, most websites can spot suspicious headers and other related components, and other anti-scraping challenges, such as CAPTCHAs, can come up.

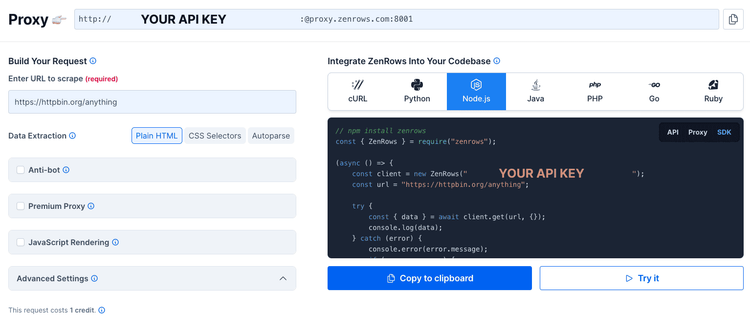

Fortunately, you can use ZenRows with Axios to generate working User Agents and bypass all anti-bot challenges. Let's see how!

First, you need to sign up on ZenRows to get a free API key, after which you'll get to its Request Builder.

Next, create a variable for your API key, the target URL, and ZenRows' base URL:

const targetUrl = 'http://httpbin.org/headers';

const apiKey = '<YOUR_API_KEY>';

const zenRowsBaseUrl = 'https://api.zenrows.com/v1/';

Remember to replace <YOUR_API_KEY> with your ZenRows API key.

Create a params object for all parameters to be passed to ZenRows' base URL:

// Parameters to pass to ZenRows' base URL

const params = {

url: targetUrl,

apikey: apiKey,

antibot: true,

};

The url and apikey parameters are required, while the antibot parameter configures ZenRows to bypass anti-bot measures. You can also add others like premium_proxy to avoid detection and js_render for JavaScript rendering.
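To see what these parameters turn into, here's a rough sketch of how a params object ends up as a query string on the base URL. The buildRequestUrl helper is hypothetical and relies on Node's built-in URL class; Axios does its own serialization, so treat this as an approximation of the final URL rather than its exact implementation.

```javascript
// Sketch: build the final request URL from a base URL and a params
// object, roughly what happens to the `params` option of a request.
function buildRequestUrl(baseUrl, params) {
  const url = new URL(baseUrl);
  for (const [key, value] of Object.entries(params)) {
    // Values are stringified and percent-encoded by searchParams.
    url.searchParams.set(key, String(value));
  }
  return url.toString();
}

const requestUrl = buildRequestUrl('https://api.zenrows.com/v1/', {
  url: 'http://httpbin.org/headers',
  apikey: '<YOUR_API_KEY>',
  antibot: true,
});

console.log(requestUrl);
```

Note that the target URL is percent-encoded inside the query string, so its own slashes and colon don't interfere with the API URL.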

Now, make the request using the ZenRows parameters above:

axios.get(zenRowsBaseUrl, {

params,

}).then(({ data }) => console.log(data)); // page HTML

Here's what your completed script should look like:

const axios = require('axios');

const targetUrl = 'http://httpbin.org/headers';

const apiKey = '<YOUR_API_KEY>';

const zenRowsBaseUrl = 'https://api.zenrows.com/v1/';

// Parameters to pass to ZenRows' base URL

const params = {

url: targetUrl,

apikey: apiKey,

antibot: true,

};

axios.get(zenRowsBaseUrl, {

params,

}).then(({ data }) => console.log(data)); // page HTML

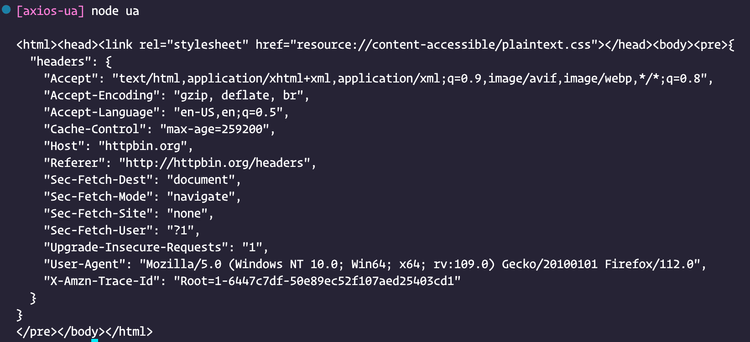

Your output should be similar to this one:

When you make a request, ZenRows will handle Axios User Agent randomization and other anti-bot challenges. Awesome, right?

Fix Error in Axios: Refused to Set Unsafe Header User-Agent

When you set a custom User-Agent header in an Axios request from client-side JavaScript code, the "Refused to set unsafe header User-Agent" error occurs. That's because web browsers treat User-Agent as an unsafe header that shouldn't be set on the client side.

To resolve this error, remove the custom UA header from your Axios request or set it on the server side instead. You can use ZenRows for cases where you have to use a different User-Agent in client-side JavaScript code, as it'll randomize the UA on the server side.
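If the same code runs in both environments, one way to apply the first fix is to attach the header only outside the browser. This is a minimal sketch: buildHeaders and its isBrowser flag are hypothetical names, and checking for a global window object is a common but simplified way to detect a browser.

```javascript
// Sketch: only attach a custom User-Agent when the code runs outside a
// browser. buildHeaders and its isBrowser flag are hypothetical.
function buildHeaders(userAgent, isBrowser = typeof window !== 'undefined') {
  const headers = { Accept: 'application/json, text/plain, */*' };
  if (!isBrowser) {
    // Node.js allows the header to be set freely.
    headers['User-Agent'] = userAgent;
  }
  return headers;
}

const ua =
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36';

console.log(buildHeaders(ua, false)); // includes 'User-Agent'
console.log(buildHeaders(ua, true)); // no 'User-Agent', so the browser sends its own
```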

Conclusion

Randomizing your User Agent in Axios makes it harder for websites to detect your scraping activities, as each request appears to come from a different software or device. Therefore, it's essential to randomize your UAs properly to make your requests look like they're coming from a real user.

Additionally, apart from bot detection using User Agents, you need to deal with other obstacles, like IP address reputation and JavaScript challenges. Check our article on web scraping without getting blocked to learn the best methods to avoid detection.

Overall, using ZenRows with Axios saves you a lot of effort and resources. It offers premium rotating proxies, headless browser automation, CAPTCHA solving and more to make web scraping easier and more effective.

Frequent Questions

How Do I Pass User Agent in Axios?

You can pass User Agent in Axios by specifying the User-Agent header in the headers object of the request configuration.

How Do I Change My User Agent in Axios?

You can change your User Agent in Axios by setting the User-Agent header value in the headers object of your request configuration. You can also use a tool like ZenRows to change your UA randomly.
