Choosing a web scraping option between Selenium vs. Beautiful Soup isn't that difficult. While both are excellent libraries, there are some key differences to consider while making this decision, like programming language compatibility, browser support, and performance.

The table below highlights the primary differences between the two:

| Criterion | Beautiful Soup | Selenium |

|---|---|---|

| Ease of use | User-friendly | Complex to set up and use |

| Programming languages | Only Python | Python, C#, JavaScript, PHP, Java and Perl |

| Browser support | It doesn't require a browser instance | Chrome, Brave, Edge, IE, Safari, Firefox and Opera |

| Performance | Faster since it just requires the page source | Slower as it uses a web driver instance to navigate through the web |

| Functionality | Mainly used for parsing and extracting information from HTML and XML documents | Selenium can automate browser actions, like clicking buttons, filling out forms and navigating between pages |

| Operating system support | Windows, Linux and macOS | Windows, Linux and macOS |

| Architecture | Simple HTTP and XML parser to navigate through structured data | JSON Wire protocol to manage Web Drivers |

| Prerequisites | BeautifulSoup package and a module to send requests (like as httpx) | Selenium Bindings and Browser Drivers |

| Dynamic content | It only works with static web pages | Selenium can scrape dynamically generated content |

Beautiful Soup

Beautiful Soup is a Python library for web scraping and parsing HTML and XML documents, giving us more options to navigate through a structured data tree. The library can parse and navigate through the page, allowing you to extract information from the HTML or XML code by providing a simple, easy-to-use API.

What Are the Advantages of Beautiful Soup?

Here are the primary benefits of choosing Beautiful Soup over Selenium:

- It's faster.

- Beautiful Soup's beginner-friendly and easier to set up.

- The library works independently from browsers.

- It requires less time to run.

- It can parse HTML and XML documents.

- The framework's easier to debug.

What Are the Disadvantages of Beautiful Soup?

See below for an overview of Beautiful Soup's shortcomings:

- It can't interact with web pages like a human user.

- The library can just parse data. Therefore, you'll need to install other modules to extract the information, e.g., requests or httpx.

- It solely supports Python.

- You'll need a different module to scrape JavaScript-rendered pages since Beautiful Soup only lets you navigate through HTML or XML files.

When to Use BeautifulSoup

Beautiful Soup is best used for web scraping tasks that involve parsing and extracting information from static HTML pages and XML documents. For instance, if you need to retrieve data from a website with a simple structure, such as a blog or an online store, the Python library can easily extract the information you need by parsing the HTML code.

However, if you're looking to scrape dynamic content, Selenium is a better alternative.

Web Scraping Sample with Beautiful Soup

Let's go through a quick scraping tutorial to gain more insight into the performance comparison between Beautiful Soup vs. Selenium.

Since Beautiful Soup provides just a way to navigate the data, we'll use another module to download the information. Let's use requests and scrape a paragraph from a Wikipedia article:

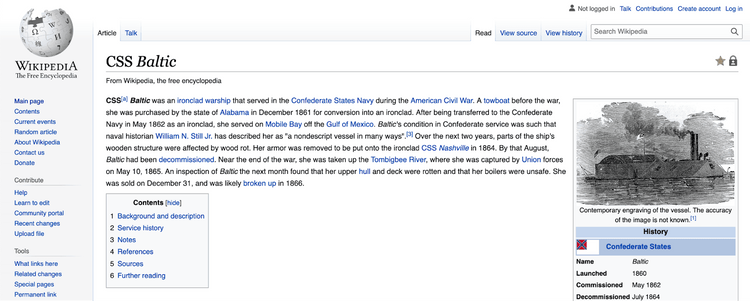

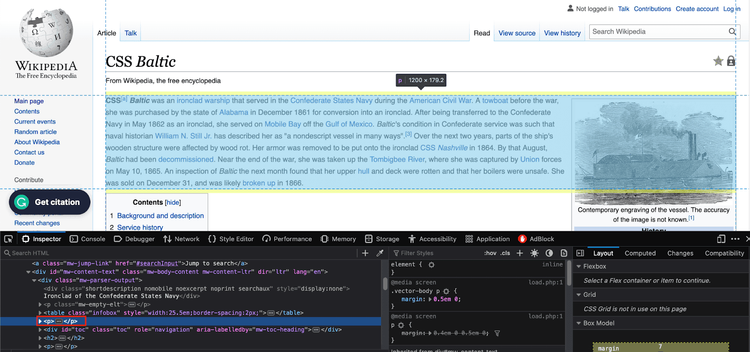

First, inspect the page elements to find the introduction element. It's in the second p tag under the div with mv-parser-output class:

What's left is to send a GET request to the website and define a Beautiful Soup object to get the elements. Start by importing the necessary tools:

# load the required packages

from bs4 import BeautifulSoup

# we need a module to connect to websites, you can also use built-in urrlib module.

import requests

url = "https://en.wikipedia.org/wiki/CSS_Baltic"

# get the website data

response = requests.get(url)

After that, define the object and parse the HTML. Then, extract the data using the find and find_all methods.

-

findreturns the first occurrence of the element. -

find_allreturns all found elements.

# parse response text using html.parser

soup = BeautifulSoup(response.text, "html.parser")

# get the main div element

main_div = soup.find("div", {"class": "mw-body-content mw-content-ltr"})

# extract the content div

content_div = main_div.find("div", {"class": "mw-parser-output"})

# second div is the first paragraph

second_p = main_div.find_all("p")[1]

# print out the extracted data

print(second_p.text)

Here's the full code:

# load the required packages

from bs4 import BeautifulSoup

# we need a module to connect to websites, you can also use built-in urrlib module.

import requests

url = "https://en.wikipedia.org/wiki/CSS_Baltic"

# get the website data

response = requests.get(url)

# parse response text using html.parser

soup = BeautifulSoup(response.text, "html.parser")

# get the main div element

main_div = soup.find("div", {"class": "mw-body-content mw-content-ltr"})

# extract the content div

content_div = main_div.find("div", {"class": "mw-parser-output"})

# second div is the first paragraph

second_p = main_div.find_all("p")[1]

# print out the extracted data

print(second_p.text)

And here's what your output should look like:

CSS[a] Baltic was an ironclad warship that served in the Confederate States Navy during the American Civil War. A towboat before the war, she was purchased by the state of Alabama in December 1861 for conversion into an ironclad. After being transferred to the Confederate Navy in May 1862 as an ironclad, she served on Mobile Bay off the Gulf of Mexico. Baltic's condition in Confederate service was such that naval historian William N. Still Jr. has described her as "a nondescript vessel in many ways".[3] Over the next two years, parts of the ship's wooden structure were affected by wood rot. Her armor was removed to be put onto the ironclad CSS Nashville in 1864. By that August, Baltic had been decommissioned. Near the end of the war, she was taken up the Tombigbee River, where she was captured by Union forces on May 10, 1865. An inspection of Baltic the next month found that her upper hull and deck were rotten and that her boilers were unsafe. She was sold on December 31, and was likely broken up in 1866.

That was it!

Although Beautiful Soup can only scrape static web pages, it's also possible to extract dynamic data by combining it with a different library. Learn how to do that by using ZenRows API with Python Requests and Beautiful Soup.

Selenium

Selenium is an open-source browser automation tool often used for web scraping. Here are its main components:

- Selenium IDE: used to record actions before automating them.

- Selenium WebDriver: a web automation tool that empowers you to control web browsers.

- Selenium Grid: helpful for parallel execution.

Selenium can also handle dynamic web pages, which are difficult to scrape using Beautiful Soup.

What Are the Advantages of Selenium?

Let's see what the primary Selenium advantages are:

- It's easy to use.

- The library supports multiple programming languages, like JavaScript, Ruby, Python, and C#.

- It can automate Firefox, Edge, Safari, and even a custom QtWebKit browser.

- Selenium can interact with the web page's JavaScript code, execute XHR requests, and wait for elements to load before scraping the data. In other words, you can scrape dynamic web pages handily. It also has anti-bot bypass plugins like the Undetected ChromeDriver. All these increase its chances of evading blocks during web scraping.

What Are the Disadvantages of Selenium?

This is what it lacks:

- Selenium set-up methods are complex.

- It uses more resources compared to Beautiful Soup.

- It can become slow when you start scaling up your application.

When to Use Selenium

A key point in the Selenium vs. Beautiful Soup battle is the type of data they can extract.

Selenium is ideal for scraping websites that require interaction with the page, e.g., filling out forms, clicking buttons, or navigating between pages. For instance, if you need to retrieve information from a login-protected site, Selenium can automate the login process and navigate through the pages to get the data.

Keep in mind that Selenium it's also an excellent library for scraping JS-rendered web pages.

Web Scraping Sample with Selenium

Let's run through a tutorial on web scraping with Selenium using the same target. We'll also navigate to the article with Selenium to emphasize the dynamic content scraping.

Start by importing the required packages:

from selenium import webdriver

from selenium.webdriver.common.by import By

# we will need these to wait for dynamic content to load

from selenium.webdriver.support.wait import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

Then, configure the Selenium instance using the WebDriver's options object:

# we can configure selenium using webdriver's options object

options = webdriver.ChromeOptions()

options.add_argument("--headless") # set headless mode

options.add_argument("--window-size=1920x1080") # Set browser window size to standard resolution

# create a new instance of Chrome with the configured options

driver = webdriver.Chrome(options=options)

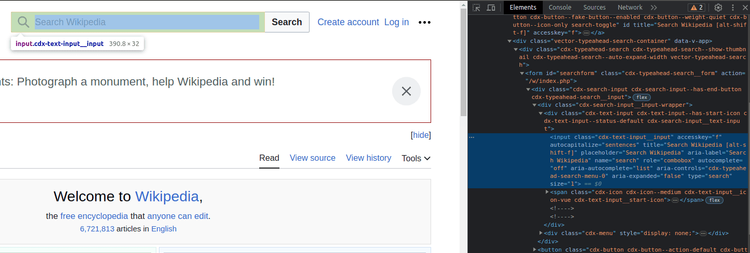

In this case, we'll go to Wikipedia's homepage and use the search bar. That'll show Selenium's ability to interact with the page and scrape dynamic content.

url = "https://en.wikipedia.org/wiki/Main_Page"

# load the website

driver.get(url)

When we inspect the elements, we can see the search bar is an input element with the cdx-text-input__input class.

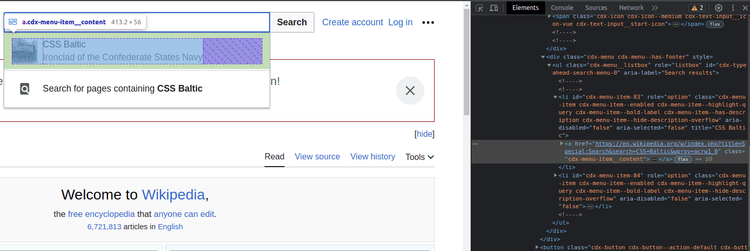

The next step is connecting Selenium to the page, which can be done by interacting with the website. Locate the search bar and search for the article title. You'll find the results stored as anchor tags with the cdx-menu-item__content class:

# find the search box

search_box = driver.find_element(By.CSS_SELECTOR, "input.vector-search-box-input")

# click to the search box

search_box.click()

# search for the article

search_box.send_keys("CSS Baltic")

The next step is connecting Selenium to the page, which can be done by interacting with the website. Locate the search bar and search for the article title. You'll find the results stored as a tags with the mw-searchSuggest-link class:

Click on the first result to extract the data, and use the WebDriverWait() method to wait for the page to load:

from selenium import webdriver

from selenium.webdriver.common.by import By

# we will need these to wait for dynamic content to load

from selenium.webdriver.support.wait import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

# we can configure selenium using webdriver's options object

options = webdriver.ChromeOptions()

options.add_argument("--headless") # set headless mode

options.add_argument("--window-size=1920x1080") # Set browser window size to standard resolution

# create a new instance of Chrome with the configured options

driver = webdriver.Chrome(options=options)

url = "https://en.wikipedia.org/wiki/Main_Page"

# load the website

driver.get(url)

# find the search box

search_box = driver.find_element(By.CSS_SELECTOR, "input.cdx-text-input__input")

# click to the search box

search_box.click()

# search for the article

search_box.send_keys("CSS Baltic")

try:

# wait for 10 seconds for content to load.

search_suggestions = WebDriverWait(driver, 10).until(

EC.presence_of_all_elements_located((By.CSS_SELECTOR, "a.cdx-menu-item__content"))

)

# click to the first suggestion

search_suggestions[0].click()

# extract the data using same selectors as in beautiful soup.

main_div = driver.find_element(By.CSS_SELECTOR, "div.mw-body-content")

content_div = main_div.find_element(By.CSS_SELECTOR, "div.mw-parser-output")

paragraphs = content_div.find_elements(By.TAG_NAME, "p")

# we need the second paragraph

intro = paragraphs[1].text

print(intro)

except Exception as error:

print(error)

driver.quit()

Here's what your code should look like after combining all the separate parts:

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

# web driver manager: https://github.com/SergeyPirogov/webdriver_manager

# will help us automatically download the web driver binaries

# then we can use `Service` to manage the web driver's state.

from webdriver_manager.chrome import ChromeDriverManager

# we will need these to wait for dynamic content to load

from selenium.webdriver.support.wait import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

# we can configure the selenium using webdriver's options object

options = webdriver.ChromeOptions()

options.headless = True # just set it to the headless mode

# this returns the path web driver downloaded

chrome_path = ChromeDriverManager().install()

# define the chrome service and pass it to the driver instance

chrome_service = Service(chrome_path)

driver = webdriver.Chrome(service=chrome_service, options=options)

url = "https://en.wikipedia.org/wiki/Main_Page"

driver.get(url)

# find the search box

search_box = driver.find_element(By.CSS_SELECTOR, "input.vector-search-box-input")

# click to the search box

search_box.click()

# search for the article

search_box.send_keys("CSS Baltic")

try:

# wait for 10 seconds for content to load.

search_suggestions = WebDriverWait(driver, 10).until(

EC.presence_of_all_elements_located((By.CSS_SELECTOR, "a.mw-searchSuggest-link"))

)

# click to the first suggestion

search_suggestions[0].click()

# extract the data using same selectors as in beautiful soup.

main_div = driver.find_element(By.CSS_SELECTOR, "div.mw-body-content")

content_div = main_div.find_element(By.CSS_SELECTOR, "div.mw-parser-output")

paragraphs = content_div.find_elements(By.TAG_NAME, "p")

# we need the second paragraph

intro = paragraphs[1].text

print(intro)

except Exception as error:

print(error)

driver.quit()

Voilà! Take a look at the output:

CSS[a] Baltic was an ironclad warship that served in the Confederate States Navy during the American Civil War. A towboat before the war, she was purchased by the state of Alabama in December 1861 for conversion into an ironclad. After being transferred to the Confederate Navy in May 1862 as an ironclad, she served on Mobile Bay off the Gulf of Mexico. Baltic's condition in Confederate service was such that naval historian William N. Still Jr. has described her as "a nondescript vessel in many ways".[3] Over the next two years, parts of the ship's wooden structure were affected by wood rot. Her armor was removed to be put onto the ironclad CSS Nashville in 1864. By that August, Baltic had been decommissioned. Near the end of the war, she was taken up the Tombigbee River, where she was captured by Union forces on May 10, 1865. An inspection of Baltic the next month found that her upper hull and deck were rotten and that her boilers were unsafe. She was sold on December 31, and was likely broken up in 1866.

Key Differences: Selenium vs. Beautiful Soup

To get the complete picture and make a decision, we need to further analyze critical considerations about those two. In the following part, we'll get into detail about both libraries' functionality, speed, and ease of use.

Functionality

Selenium is a browser automation tool that can interact with web pages like a human user, whereas Beautiful Soup is a library for parsing HTML and XML documents. This means that Selenium offers more functionality since it can automate browser actions like clicking buttons, filling out forms, and navigating between pages. Beautiful Soup is more limited and is mainly used for parsing and extracting data.

Speed

Which one is faster in the Beautiful Soup vs. Selenium comparison? You're not the first one to ask this. Here's the answer: Beautiful Soup is faster than Selenium since it doesn't require an actual browser instance.

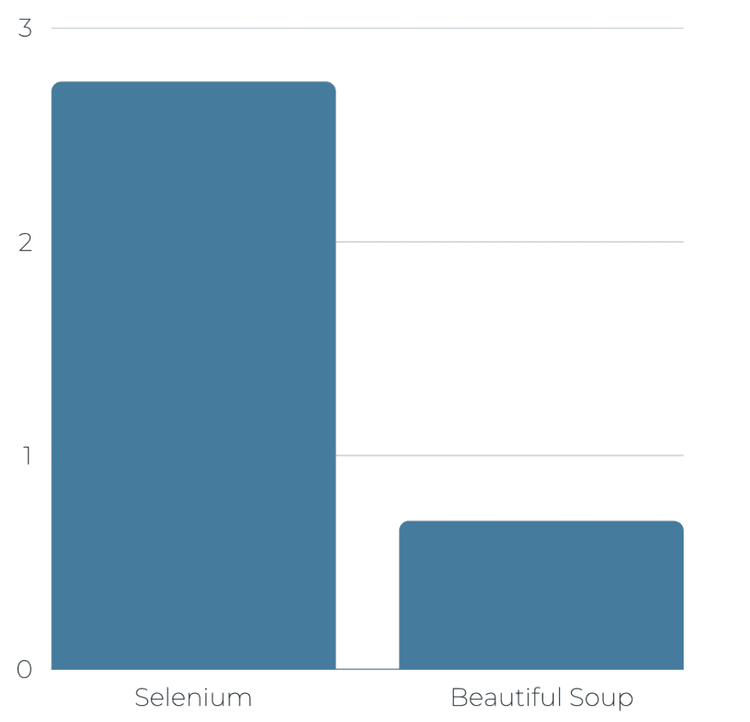

Let's see it for ourselves:

We'll use ScrapeThisSite as our target. We'll run the scripts presented above 1,000 times and plot the results using a bar chart.

We can use the os module in Python to run the scripts and the time module to calculate the time difference. We'll define t0=time.time(), t1=time.time(), and t2=time.time() between the os commands and store the differences. The result is saved in a Pandas data frame.

import os

import time

import matplotlib.pyplot as plt

import pandas as pd

d = {

"selenium": [],

"bs4": []

}

N = 1000

for i in range(N):

print("-"*20, f"Experiment {i+1}", "-"*20)

t0 = time.time()

os.system("python3 'beautifulsoup_script.py'")

t1 = time.time()

os.system("python3 'selenium_script.py'")

t2 = time.time()

d["selenium"].append(t2-t1)

d["bs4"].append(t1-t0)

df = pd.DataFrame(d)

df.to_csv("data.csv", index=False)

What are the results? See below:

This shows that Beautiful Soup is about 70% faster than Selenium!

Ease of Use

Additionally, Beautiful Soup is more user-friendly than Selenium. It offers a simple API that's also beginner-friendly. Selenium, on the other hand, can be more complex to set up and use as it requires knowledge of programming concepts such as web drivers and browser automation.

Which Is Better: Selenium vs. Beautiful Soup

There isn't a direct answer to this question since choosing between Selenium and Beautiful Soup depends on factors like your web scraping needs, long-term library support, and cross-browser support. Beautiful Soup is the faster choice, but when compared to Selenium, you see it supports fewer programming languages and falls short in scraping dynamic content.

Both are excellent libraries for scraping, especially for small-scale projects. Headaches start when you try large-scale web scraping or need to extract data from popular sites since protection measures might detect your bots. The best way to avoid this is using a web scraping API like ZenRows.

ZenRows is a web scraping tool that handles all anti-bot bypass for you in a single API call! It's equipped with essential features like rotating proxies, headless browsers, automatic retries, and so much more. Try it for free today and see it for yourself.

Did you find the content helpful? Spread the word and share it on Twitter, or LinkedIn.